Unveiling Subtle Yet Impactful AI Modifications in Authentic Video Content

In 2019, a deceptive video of Nancy Pelosi, then Speaker of the US House of Representatives, circulated widely. It had been edited to make her appear intoxicated, a stark reminder of how easily manipulated media can mislead the public. The edit itself was simple, which is what made the incident instructive: even basic audio-visual manipulation can do real damage.

At the time, the deepfake landscape was largely dominated by autoencoder-based face-replacement technologies, which had been around since late 2017. These early systems struggled to make the nuanced changes seen in the Pelosi video, focusing instead on more overt face swaps.

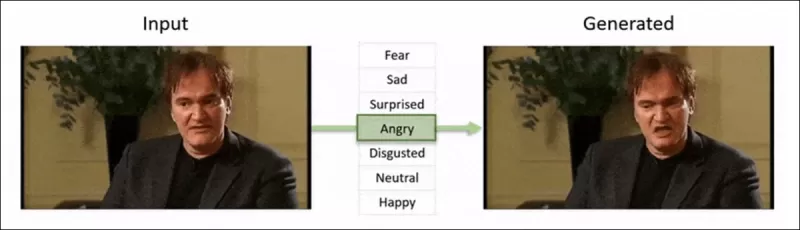

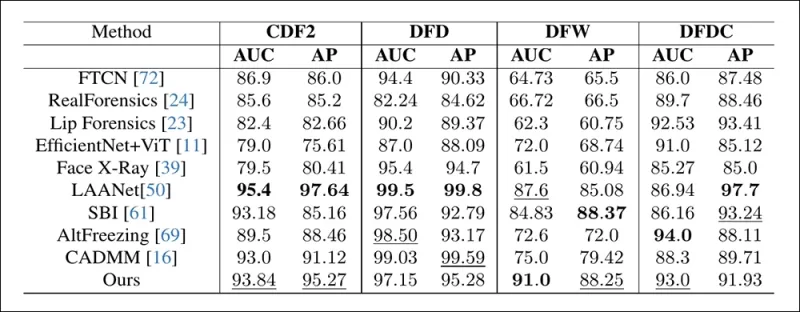

The 2022 ‘Neural Emotion Director’ framework changes the mood of a famous face. Source: https://www.youtube.com/watch?v=Li6W8pRDMJQ

Fast forward to today, and the film and TV industry is increasingly exploring AI-driven post-production edits. This trend has sparked both interest and criticism, as AI enables a level of perfectionism that was previously unattainable. In response, the research community has developed various projects focused on 'local edits' of facial captures, such as Diffusion Video Autoencoders, Stitch it in Time, ChatFace, MagicFace, and DISCO.

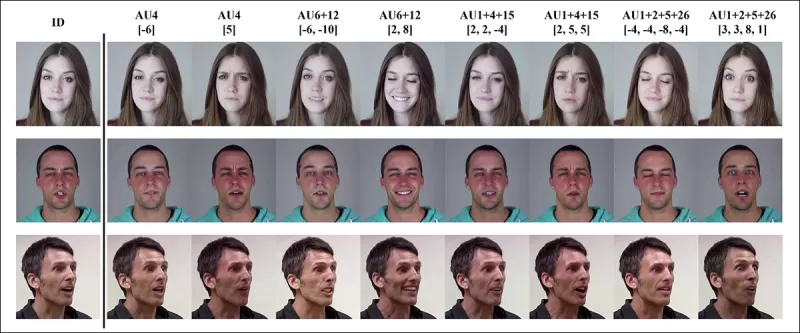

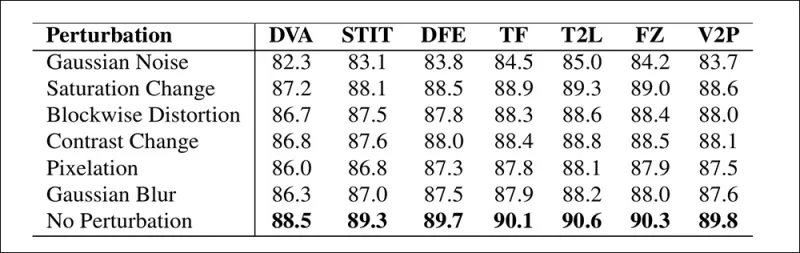

Expression-editing with the January 2025 project MagicFace. Source: https://arxiv.org/pdf/2501.02260

New Faces, New Wrinkles

However, the technology for creating these subtle edits is advancing much faster than our ability to detect them. Most deepfake detectors still target older techniques and datasets. A recent paper from researchers in India aims to close that gap.

Detection of Subtle Local Edits in Deepfakes: A real video is altered to produce fakes with nuanced changes such as raised eyebrows, modified gender traits, and shifts in expression toward disgust (illustrated here with a single frame). Source: https://arxiv.org/pdf/2503.22121

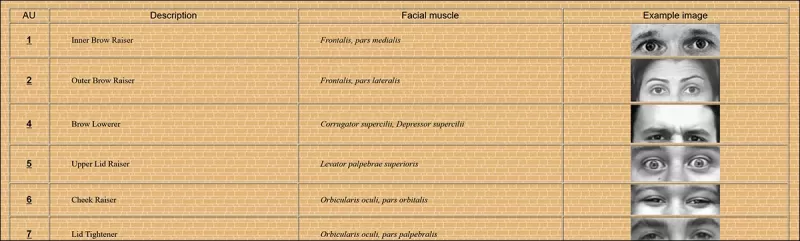

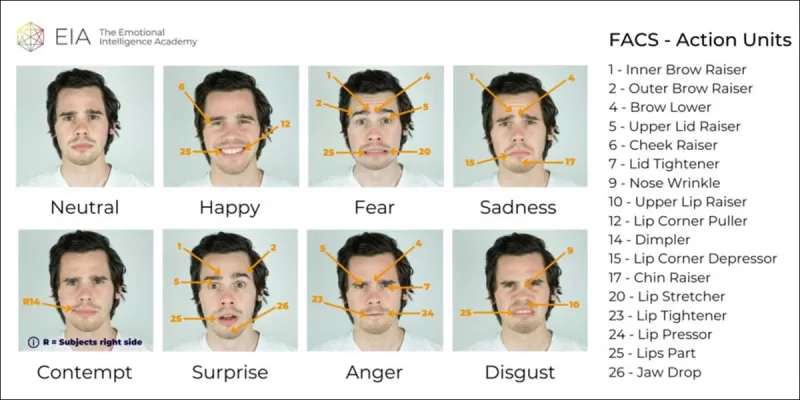

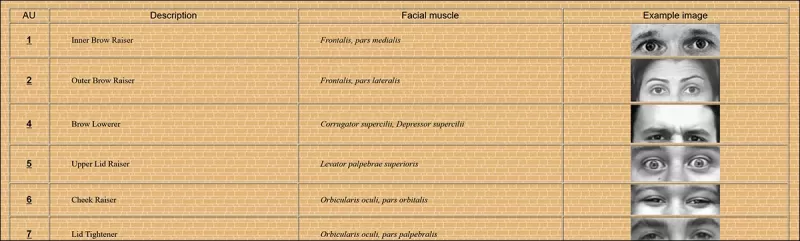

This new research targets the detection of subtle, localized facial manipulations, a type of forgery often overlooked. Instead of looking for broad inconsistencies or identity mismatches, the method zeroes in on fine details like slight expression shifts or minor edits to specific facial features. It leverages the Facial Action Coding System (FACS), which breaks down facial expressions into 64 mutable areas.

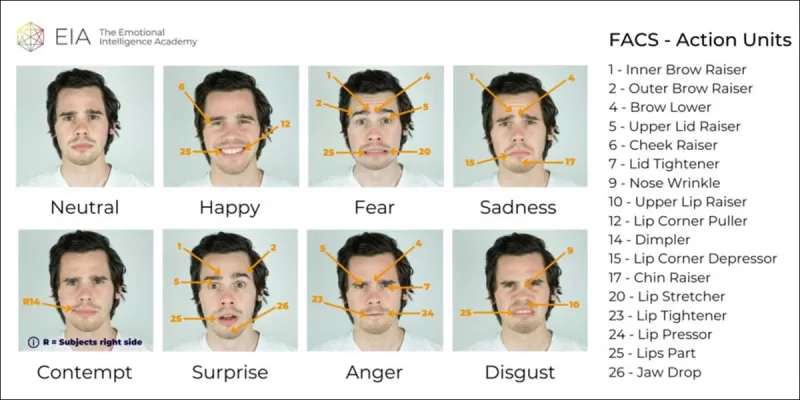

Some of the constituent 64 expression parts in FACS. Source: https://www.cs.cmu.edu/~face/facs.htm

The researchers tested their approach against a range of recent editing methods and report that it consistently outperformed existing detectors, on older datasets as well as on newer attack vectors. In the paper's words:

‘By using AU-based features to guide video representations learned through Masked Autoencoders (MAE), our method effectively captures localized changes crucial for detecting subtle facial edits.

‘This approach enables us to construct a unified latent representation that encodes both localized edits and broader alterations in face-centered videos, providing a comprehensive and adaptable solution for deepfake detection.’

The paper, titled Detecting Localized Deepfake Manipulations Using Action Unit-Guided Video Representations, was authored by researchers at the Indian Institute of Technology Madras.

Method

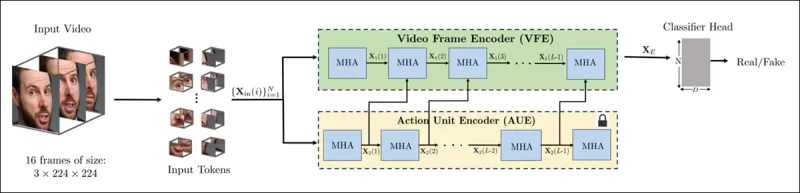

The method starts by detecting faces in a video and sampling evenly spaced frames centered on these faces. These frames are then broken down into small 3D patches, capturing local spatial and temporal details.
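
As a minimal sketch of the sampling step, the helper below picks evenly spaced frame indices from a clip. The function name and the midpoint-of-segment rule are assumptions made for illustration, since the exact sampling rule is not described here; the default of 16 frames follows the figure given in the Implementation section.

```python
from typing import List


def evenly_spaced_indices(total_frames: int, num_samples: int = 16) -> List[int]:
    """Return num_samples frame indices spread evenly across a clip."""
    if total_frames <= 0 or num_samples <= 0:
        raise ValueError("total_frames and num_samples must be positive")
    if num_samples >= total_frames:
        # Short clip: use every frame once rather than repeating any.
        return list(range(total_frames))
    # Split the clip into equal segments and take the middle of each one.
    step = total_frames / num_samples
    return [int(step * i + step / 2) for i in range(num_samples)]


print(evenly_spaced_indices(80, 4))  # [10, 30, 50, 70]
```

In a real pipeline each selected frame would then be cropped around the detected face; that step depends on the face detector and is left out of this sketch.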

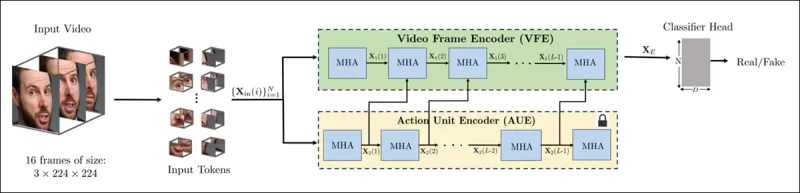

Schema for the new method. The input video is processed with face detection to extract evenly spaced, face-centered frames, which are then divided into ‘tubular’ patches and passed through an encoder that fuses latent representations from two pretrained pretext tasks. The resulting vector is then used by a classifier to determine whether the video is real or fake.

Each patch contains a small window of pixels from a few successive frames, allowing the model to learn short-term motion and expression changes. These patches are embedded and positionally encoded before being fed into an encoder designed to distinguish real from fake videos.
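
The patching step can be sketched as a reshape. The 2 x 16 x 16 patch size and the 224 x 224 frame size are assumptions borrowed from common VideoMAE configurations rather than figures stated in this article, and `to_tubelets` is a hypothetical helper name.

```python
import numpy as np


def to_tubelets(video: np.ndarray, t: int = 2, p: int = 16) -> np.ndarray:
    """Split a (T, H, W, C) clip into flattened t x p x p 'tube' patches."""
    T, H, W, C = video.shape
    if T % t or H % p or W % p:
        raise ValueError("clip dimensions must be divisible by the patch size")
    # Expose the patch grid and the within-patch offsets as separate axes.
    x = video.reshape(T // t, t, H // p, p, W // p, p, C)
    # Move the grid axes to the front and the within-patch axes to the back.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    # One row per patch: (num_patches, t * p * p * C).
    return x.reshape(-1, t * p * p * C)


clip = np.zeros((16, 224, 224, 3), dtype=np.float32)
print(to_tubelets(clip).shape)  # (1568, 1536)
```

Each row covers a 16 x 16 pixel window across two successive frames, which is what lets a downstream encoder see short-term motion inside a single token. The learned embedding and positional encoding are not shown.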

The challenge of detecting subtle manipulations is addressed by using an encoder that combines two types of learned representations through a cross-attention mechanism, aiming to create a more sensitive and generalizable feature space.

Pretext Tasks

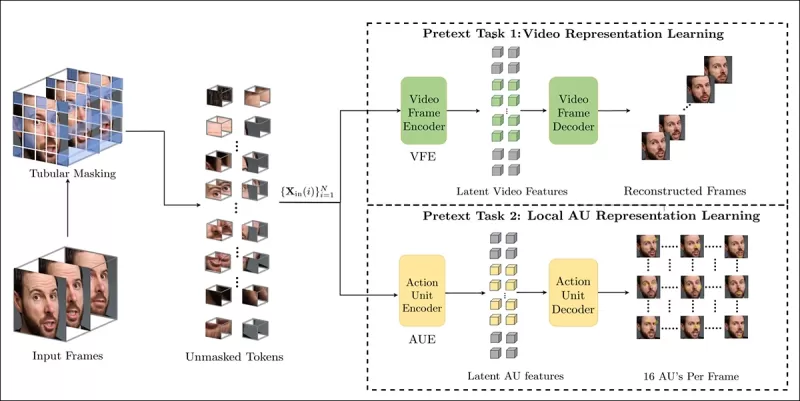

The first representation comes from an encoder trained with a masked autoencoding task. By hiding most of the video's 3D patches, the encoder learns to reconstruct the missing parts, capturing important spatiotemporal patterns like facial motion.
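
A rough sketch of the masking idea follows: choose a random subset of patches to hide, and pass only the remainder to the encoder. The 0.75 ratio in the usage line is purely illustrative, the uniform random selection is an assumption (VideoMAE-style pipelines often mask whole tubes across time instead), and `random_patch_mask` is a hypothetical name.

```python
import numpy as np


def random_patch_mask(num_patches: int, mask_ratio: float, seed: int = 0) -> np.ndarray:
    """Boolean mask over patches; True marks a patch hidden from the encoder."""
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")
    rng = np.random.default_rng(seed)
    num_masked = int(round(num_patches * mask_ratio))
    mask = np.zeros(num_patches, dtype=bool)
    # Pick distinct patch positions to hide.
    mask[rng.choice(num_patches, size=num_masked, replace=False)] = True
    return mask


mask = random_patch_mask(1568, mask_ratio=0.75)
print(int(mask.sum()), int((~mask).sum()))  # 1176 392
```

With a patch matrix of shape `(num_patches, dim)`, `patches[~mask]` gives the visible tokens for the encoder and `patches[mask]` the targets the decoder has to reconstruct.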

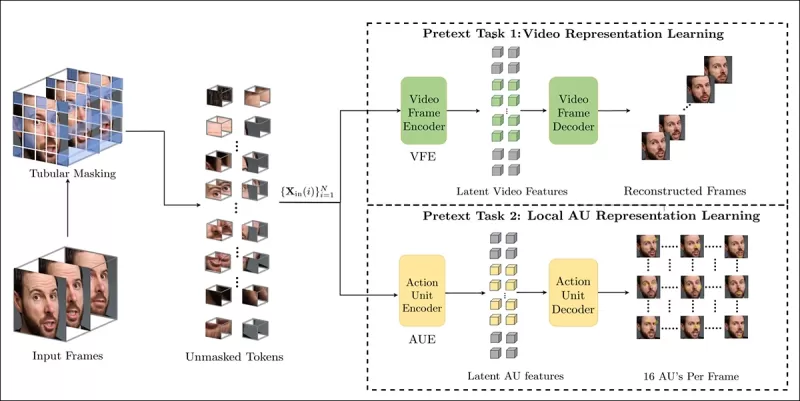

Pretext task training involves masking parts of the video input and using an encoder-decoder setup to reconstruct either the original frames or per-frame action unit maps, depending on the task.

However, this alone isn't enough to detect fine-grained edits. The researchers introduced a second encoder trained to detect facial action units (AUs), encouraging it to focus on localized muscle activity where subtle deepfake edits often occur.

Further examples of Facial Action Units (FAUs, or AUs). Source: https://www.eiagroup.com/the-facial-action-coding-system/

After pretraining, the outputs of both encoders are combined using cross-attention, with the AU-based features guiding the attention over the spatial-temporal features. This results in a fused latent representation that captures both broader motion context and localized expression details, used for the final classification task.
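
The fusion described above can be illustrated with a single-head cross-attention in NumPy. This is a sketch under assumptions, not the authors' architecture: it treats the AU tokens as queries and the masked-autoencoder video tokens as keys and values, uses one head, and relies on random projection matrices that stand in for learned weights.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(au_tokens, video_tokens, w_q, w_k, w_v):
    """AU tokens (queries) attend over video tokens (keys and values)."""
    q = au_tokens @ w_q       # (N, d)
    k = video_tokens @ w_k    # (M, d)
    v = video_tokens @ w_v    # (M, d)
    # Scaled dot-product scores: one row of weights per AU token.
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)  # (N, M)
    return weights @ v, weights


rng = np.random.default_rng(0)
au = rng.normal(size=(5, 8))      # 5 AU tokens, width 8 (toy sizes)
video = rng.normal(size=(12, 8))  # 12 video tokens, width 8
w_q, w_k, w_v = (rng.normal(size=(8, 4)) for _ in range(3))
fused, weights = cross_attention(au, video, w_q, w_k, w_v)
print(fused.shape, weights.shape)  # (5, 4) (5, 12)
```

Each fused row is a weighted mix of video-token values, with the weights chosen by an AU token; that is one way the AU features can steer attention toward regions of localized muscle activity.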

Data and Tests

Implementation

The system was implemented using the FaceXZoo PyTorch-based face detection framework, extracting 16 face-centered frames from each video clip. The pretext tasks were trained on the CelebV-HQ dataset, which includes 35,000 high-quality facial videos.

From the source paper, examples from the CelebV-HQ dataset used in the new project. Source: https://arxiv.org/pdf/2207.12393

Half of the data was masked to prevent overfitting. For the masked frame reconstruction task, the model was trained to predict missing regions using L1 loss. For the second task, it was trained to generate maps for 16 facial action units, supervised by L1 loss.
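
For reference, an L1 reconstruction loss is just the mean absolute difference between prediction and target. The sketch below restricts it to masked patches, which is the usual masked-autoencoder convention and an assumption here; the same function would apply whether the target rows hold pixel values or action unit map values.

```python
import numpy as np


def masked_l1_loss(pred: np.ndarray, target: np.ndarray, mask: np.ndarray) -> float:
    """Mean absolute error over the patches where mask is True."""
    if not mask.any():
        raise ValueError("mask selects no patches")
    return float(np.abs(pred[mask] - target[mask]).mean())


pred = np.array([[1.0, 1.0, 1.0], [2.0, 2.0, 2.0], [0.0, 0.0, 0.0], [5.0, 5.0, 5.0]])
target = np.zeros((4, 3))
mask = np.array([True, True, False, False])
print(masked_l1_loss(pred, target, mask))  # 1.5
```

The last row of `pred` is far from its target but does not affect the result, because that patch was visible rather than masked.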

After pretraining, the encoders were fused and fine-tuned for deepfake detection using the FaceForensics++ dataset, which includes both real and manipulated videos.

The FaceForensics++ dataset has been the cornerstone of deepfake detection since 2017, though it is now considerably out of date with regard to the latest facial synthesis techniques. Source: https://www.youtube.com/watch?v=x2g48Q2I2ZQ

To address class imbalance, the authors used Focal Loss, which emphasizes more challenging examples during training. All training was conducted on a single RTX 4090 GPU with 24GB of VRAM, using pre-trained checkpoints from VideoMAE.
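
To show how focal loss shifts weight toward hard examples, here is the standard binary form, FL = -alpha_t * (1 - p_t)^gamma * log(p_t), for a single prediction. The defaults gamma = 2.0 and alpha = 0.25 are common choices from the focal loss literature, not values reported for this project.

```python
import math


def binary_focal_loss(p_fake: float, label: int, gamma: float = 2.0, alpha: float = 0.25) -> float:
    """Focal loss for one example; label is 1 for fake, 0 for real."""
    eps = 1e-7
    p = min(max(p_fake, eps), 1.0 - eps)      # keep log() finite
    p_t = p if label == 1 else 1.0 - p        # probability given to the true class
    alpha_t = alpha if label == 1 else 1.0 - alpha
    # (1 - p_t) ** gamma shrinks the loss on confidently correct examples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = binary_focal_loss(0.9, 1)  # fake clip, confidently flagged
hard = binary_focal_loss(0.1, 1)  # fake clip, mostly missed
print(easy < hard)  # True
```

With gamma set to 0 the modulating factor disappears and the expression reduces to alpha-weighted cross-entropy.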

Tests

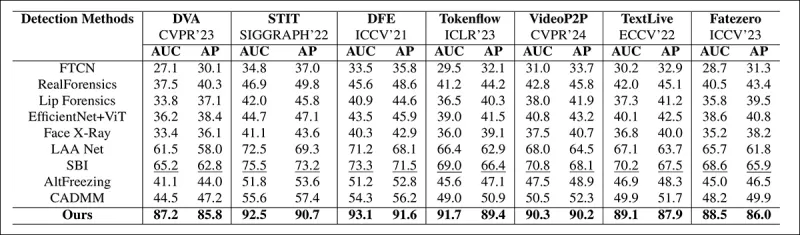

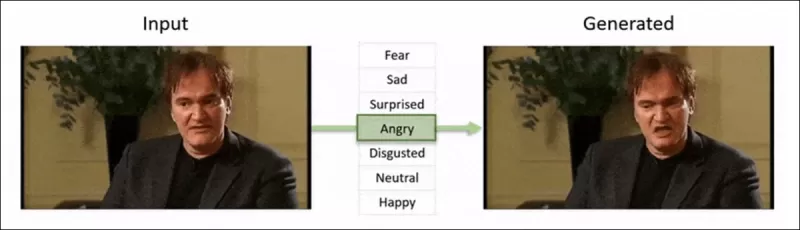

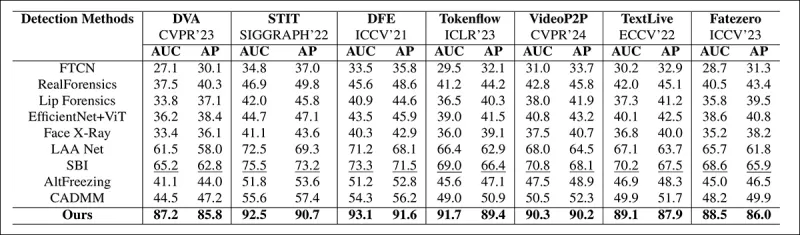

The method was evaluated against a range of existing deepfake detectors, with a focus on locally edited deepfakes. The tests covered several editing methods as well as older deepfake datasets, using Area Under Curve (AUC), Average Precision, and Mean F1 Score as metrics.
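
For readers unfamiliar with the first two metrics, the plain-Python sketch below computes them from per-video fake scores. It is a simplified illustration with toy numbers, not the evaluation code used in the paper: AUC is computed by pairwise comparison, and average precision does not treat tied scores specially.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random fake scores higher than a random real (ties count half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one fake and one real example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Mean of the precision values measured at the rank of each fake example."""
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    if hits == 0:
        raise ValueError("need at least one fake example")
    return total / hits


labels = [1, 0, 1, 1, 0]            # 1 = fake, 0 = real (toy data)
scores = [0.9, 0.8, 0.7, 0.3, 0.2]  # detector's fake probability per video
print(round(roc_auc(labels, scores), 3), round(average_precision(labels, scores), 3))  # 0.667 0.806
```

Both metrics depend only on how the scores rank the videos, so they do not require choosing a decision threshold.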

From the paper: comparison on recent localized deepfakes shows that the proposed method outperformed all others, with a 15 to 20 percent gain in both AUC and average precision over the next-best approach.

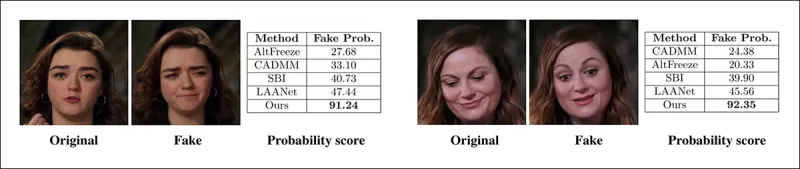

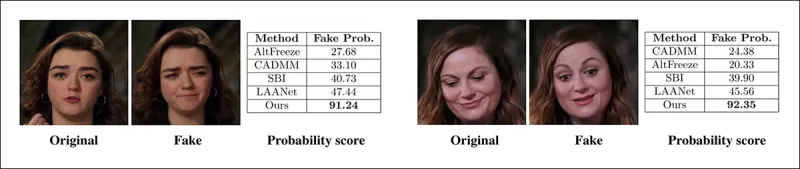

The authors provided visual comparisons of locally manipulated videos, showing their method's superior sensitivity to subtle edits.

A real video was altered using three different localized manipulations to produce fakes that remained visually similar to the original. Shown here are representative frames along with the average fake detection scores for each method. While existing detectors struggled with these subtle edits, the proposed model consistently assigned high fake probabilities, indicating greater sensitivity to localized changes.

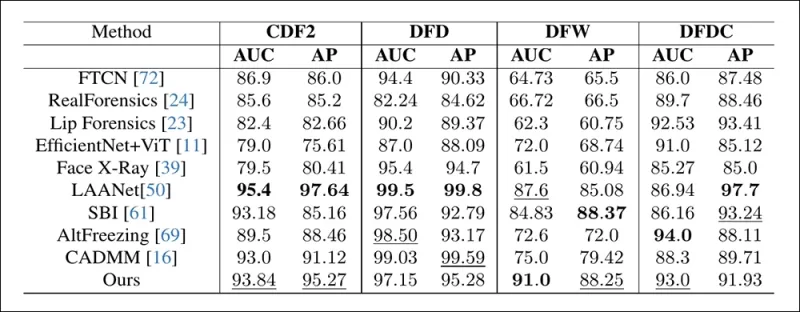

The researchers noted that existing state-of-the-art detection methods struggled with the latest deepfake generation techniques, while their method showed robust generalization, achieving high AUC and average precision scores.

Performance on traditional deepfake datasets shows that the proposed method remained competitive with leading approaches, indicating strong generalization across a range of manipulation types.

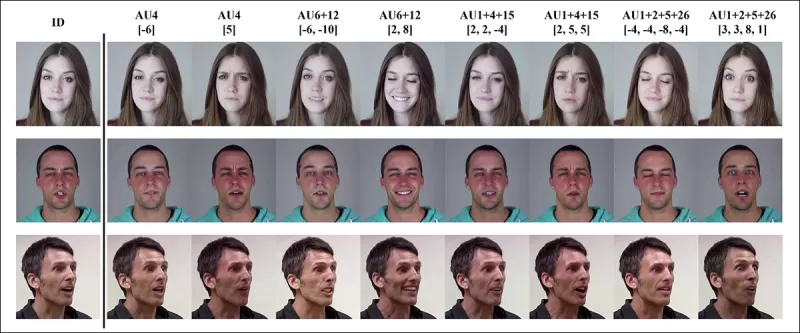

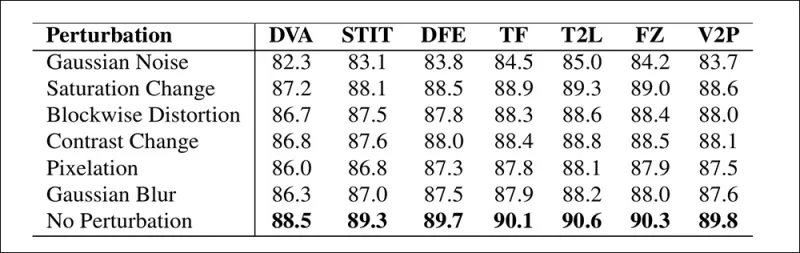

The authors also tested the model's reliability under real-world conditions, finding it resilient to common video distortions like saturation adjustments, Gaussian blur, and pixelation.

An illustration of how detection accuracy changes under different video distortions. The new method remained resilient in most cases, with only a small decline in AUC. The most significant drop occurred when Gaussian noise was introduced.
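
Two of the distortions mentioned here are easy to reproduce on a single frame. The sketch below is illustrative only: the noise level and block size are arbitrary assumptions, the helper names are hypothetical, and the paper's actual perturbation settings are not given in this article.

```python
import numpy as np


def add_gaussian_noise(frame: np.ndarray, sigma: float = 10.0, seed: int = 0) -> np.ndarray:
    """Add zero-mean Gaussian noise to a uint8 (H, W, C) frame."""
    rng = np.random.default_rng(seed)
    noisy = frame.astype(np.float32) + rng.normal(0.0, sigma, frame.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


def pixelate(frame: np.ndarray, block: int = 8) -> np.ndarray:
    """Replace each block x block tile of an (H, W, C) frame with its mean colour."""
    H, W = frame.shape[:2]
    if H % block or W % block:
        raise ValueError("frame size must be divisible by block")
    # Average within each tile, then repeat the tile value back to full size.
    small = frame.reshape(H // block, block, W // block, block, -1).mean(axis=(1, 3))
    return small.repeat(block, axis=0).repeat(block, axis=1).astype(frame.dtype)


frame = np.random.default_rng(1).integers(0, 256, size=(224, 224, 3), dtype=np.uint8)
print(add_gaussian_noise(frame).shape, pixelate(frame).shape)  # (224, 224, 3) (224, 224, 3)
```

A robustness check would apply such a distortion to every frame of the test videos and then recompute AUC on the detector's scores.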

Conclusion

While the public often thinks of deepfakes as identity swaps, the reality of AI manipulation is more nuanced and potentially more insidious. The kind of local editing discussed in this new research might not capture public attention until another high-profile incident occurs. Yet, as actor Nicolas Cage has pointed out, the prospect of post-production edits altering a performance is a concern in its own right. We are naturally sensitive to even the slightest change in facial expression, and context can dramatically alter its impact.

First published Wednesday, April 2, 2025

Comments (43)

WilliamCarter

August 19, 2025 at 5:01:13 PM EDT

That Pelosi video from 2019 is wild! It’s scary how a few tweaks can make someone look totally drunk. AI’s power to mess with videos is both cool and creepy—makes you wonder what’s real anymore. 😬

JuanMartínez

August 19, 2025 at 1:01:10 PM EDT

This Pelosi video incident is wild! 🤯 It’s scary how a few tweaks can make someone look totally out of it. Makes me wonder how much of what we see online is real anymore. AI’s cool, but this kind of stuff could mess with trust big time.

RyanPerez

July 29, 2025 at 8:25:16 AM EDT

That Pelosi video from 2019 is wild! It’s scary how a few tweaks can make someone look totally out of it. AI’s power to mess with reality is no joke—makes you wonder what’s real anymore. 🫣

MarkRoberts

April 23, 2025 at 10:24:54 PM EDT

This AI tool showed me how easy it is to manipulate videos. The Nancy Pelosi incident was a striking reminder. It's frightening to think how much fake news might exist. I'm now more careful about what I believe online. Watch out, friends! 👀

RobertMartin

April 20, 2025 at 4:42:51 PM EDT

This AI tool taught me how easy it is to manipulate videos. The Nancy Pelosi incident was shocking. It's frightening to think how much fake news is out there. I'm now more careful about what I believe online. Everyone, please be careful too! 👀

PaulMartínez

April 19, 2025 at 6:25:50 AM EDT

This AI tool showed me how easy it is to manipulate videos! The Nancy Pelosi incident was a wake-up call. It's frightening how much fake news there could be. I'm now more cautious about what I believe online. Stay vigilant, folks! 👀
