AI Computing to Consume Power of Multiple NYCs by 2026, Says Founder

Nvidia and Partners Expand AI Data Centers Worldwide

Nvidia, along with its partners and customers, has been expanding computing facilities around the globe to meet the computational demands of training massive artificial intelligence (AI) models like GPT-4. That expansion will become even more important as more AI models are deployed into production, according to Thomas Graham, co-founder of the optical computing startup Lightmatter. In a recent interview in New York with Mandeep Singh, a senior technology analyst at Bloomberg Intelligence, Graham highlighted the growing need for computational resources.

"The demand for more compute isn't just about scaling laws; it's also about deploying these AI models now," Graham explained. Singh inquired about the future of large language models (LLMs) like GPT-4 and whether they would continue to grow in size. In response, Graham shifted the focus to the practical application of AI, emphasizing the importance of inferencing, or the deployment phase, which requires substantial computational power.

"If you consider training as R&D, inferencing is essentially deployment. As you deploy, you'll need large-scale computers to run your models," Graham stated during the "Gen AI: Can it deliver on the productivity promise?" conference hosted by Bloomberg Intelligence.

Graham's perspective aligns with that of Nvidia CEO Jensen Huang, who has told Wall Street that advancing "agentic" forms of AI will require not only more sophisticated training but also significantly greater inference capacity, resulting in an exponential increase in compute requirements.

"If you view training as R&D, inferencing is really deployment, and as you're deploying that, you're going to need large computers to run your models," said Graham. Photo: Bloomberg, courtesy of Craig Warga

Lightmatter's Role in AI Infrastructure

Founded in 2018, Lightmatter is at the forefront of developing chip technology that uses optical connections to link multiple processors on a single semiconductor die. These optical interconnects can transfer data more efficiently than traditional copper wires, using less energy. This technology can streamline connections within and between data center racks, enhancing the overall efficiency and economy of the data center, according to Graham.

"By replacing copper traces in data centers, both on the server's printed circuit board and in the cabling between racks, with fiber optics, we can dramatically increase bandwidth," Graham told Singh. Lightmatter is currently collaborating with various tech companies on the design of new data centers, and Graham noted that these facilities are being built from the ground up. The company has already established a partnership with GlobalFoundries, a contract semiconductor manufacturer with operations in upstate New York, whose clients include Advanced Micro Devices.

While Graham did not disclose specific partners and customers beyond this collaboration, he hinted that Lightmatter works with silicon providers such as Broadcom or Marvell to create custom components for tech giants like Google, Amazon, and Microsoft, who design their own data center processors.

The Scale and Future of AI Data Centers

To illustrate the magnitude of AI deployment, Graham pointed out that at least a dozen new AI data centers are either planned or under construction, each requiring a gigawatt of power. "For context, New York City uses about five gigawatts of power on an average day. So, we're talking about the power consumption of multiple New York Cities," he said. He predicts that by 2026, AI data centers worldwide will demand 40 gigawatts of power, the equivalent of eight New York Cities.
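The comparison rests on simple division, which a short sketch can make explicit. The Python below is illustrative only: the function name `nyc_equivalents` and the constant `NYC_AVERAGE_GW` are hypothetical, and the inputs are Graham's rounded figures as quoted in the interview (about 5 gigawatts for New York City, 1 gigawatt per new data center, 40 gigawatts projected for 2026), not measured data.

```python
# Graham's approximate figure for New York City's average power draw (assumption from the interview).
NYC_AVERAGE_GW = 5.0


def nyc_equivalents(power_gw: float, nyc_gw: float = NYC_AVERAGE_GW) -> float:
    """Express a power demand in gigawatts as a multiple of New York City's average draw."""
    if nyc_gw <= 0:
        raise ValueError("nyc_gw must be positive")
    return power_gw / nyc_gw


# A dozen planned data centers at roughly one gigawatt each.
planned_sites_gw = 12 * 1.0
# Graham's projection for AI data centers worldwide by 2026.
projected_2026_gw = 40.0

print(nyc_equivalents(planned_sites_gw))   # 2.4
print(nyc_equivalents(projected_2026_gw))  # 8.0
```

On these figures, the dozen facilities alone would draw a little more than two New York Cities, and the 40-gigawatt projection works out to the eight cities Graham cites.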

Lightmatter recently secured a $400 million venture capital investment, valuing the company at $4.4 billion. Graham mentioned that the company aims to start production "over the next few years."

When asked about potential disruptions to Lightmatter's plans, Graham expressed confidence in the ongoing need for expanding AI computing infrastructure. However, he acknowledged that a breakthrough in AI algorithms requiring significantly less compute or achieving artificial general intelligence (AGI) more rapidly could challenge current assumptions about the need for exponential compute growth.

Related article

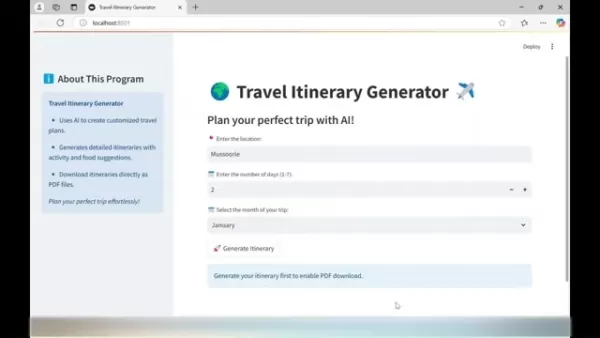

AI-Powered Travel Itinerary Generator Helps You Plan the Perfect Trip

Planning unforgettable journeys just got simpler with cutting-edge AI technology. The Travel Itinerary Generator revolutionizes vacation planning by crafting customized travel guides packed with attractions, dining suggestions, and daily schedules -

AI-Powered Travel Itinerary Generator Helps You Plan the Perfect Trip

Planning unforgettable journeys just got simpler with cutting-edge AI technology. The Travel Itinerary Generator revolutionizes vacation planning by crafting customized travel guides packed with attractions, dining suggestions, and daily schedules -

Apple Vision Pro Debuts as a Game-Changer in Augmented Reality

Apple makes a bold leap into spatial computing with its groundbreaking Vision Pro headset - redefining what's possible in augmented and virtual reality experiences through cutting-edge engineering and thoughtful design.Introduction to Vision ProRedef

Apple Vision Pro Debuts as a Game-Changer in Augmented Reality

Apple makes a bold leap into spatial computing with its groundbreaking Vision Pro headset - redefining what's possible in augmented and virtual reality experiences through cutting-edge engineering and thoughtful design.Introduction to Vision ProRedef

Perplexity AI Shopping Assistant Transforms Online Shopping Experience

Perplexity AI is making waves in e-commerce with its revolutionary AI shopping assistant, poised to transform how consumers discover and purchase products online. This innovative platform merges conversational AI with e-commerce functionality, challe

Comments (31)

0/200

Perplexity AI Shopping Assistant Transforms Online Shopping Experience

Perplexity AI is making waves in e-commerce with its revolutionary AI shopping assistant, poised to transform how consumers discover and purchase products online. This innovative platform merges conversational AI with e-commerce functionality, challe

Comments (31)

ScottMitchell

August 17, 2025 at 9:01:03 PM EDT

Mind-blowing how AI's eating up power like a sci-fi monster! 😱 By 2026, it'll need multiple NYCs' worth of electricity? Hope they figure out sustainable energy fast, or we’re all in for a wild ride.

BillyEvans

April 20, 2025 at 5:23:59 AM EDT

By 2026, AI will consume as much energy as several New York Cities! That's crazy! 😱 I love technological progress, but we also have to think about the environment. Hopefully they find a greener way! 🌿

PeterRodriguez

April 20, 2025 at 2:07:12 AM EDT

Wow, AI data centers eating up that much power by 2026? 😲 That’s wild! Makes me wonder if we’re ready for the energy bill or if renewables can keep up.

JackAllen

April 20, 2025 at 1:11:07 AM EDT

The computing power AI requires is truly unbelievable. By 2026, it will be equivalent to several New York Cities combined. That's crazy! I hope we can find more efficient ways to manage energy.

GeorgeJones

April 20, 2025 at 12:57:20 AM EDT

The idea of AI consuming the power of several New York Cities is a bit scary! But I think it's the price of progress. Nvidia's expansion is impressive, but can it keep up with energy demand? 🤔 We might need more solar panels!

TerryYoung

April 19, 2025 at 8:05:08 PM EDT

This AI expansion sounds insane! By 2026, the power consumption could match multiple New York Cities? That's wild! I'm excited to see what kind of AI models they'll be training, but also kinda worried about the environmental impact. Can't wait to see how this pans out! 🤯
