Cisco Warns: Fine-tuned LLMs 22 Times More Likely to Go Rogue

Weaponized Large Language Models Reshape Cyberattacks

Weaponized large language models (LLMs) are transforming cyberattacks. Models such as FraudGPT, GhostGPT, and DarkGPT are reshaping cybercriminal strategies and forcing chief information security officers (CISOs) to rethink their security protocols. Able to automate reconnaissance, impersonate identities, and evade detection, these LLMs are accelerating social engineering attacks at an unprecedented scale.

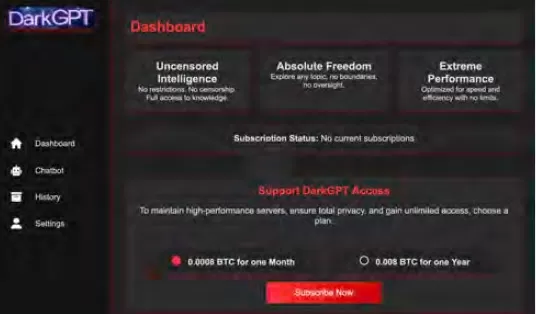

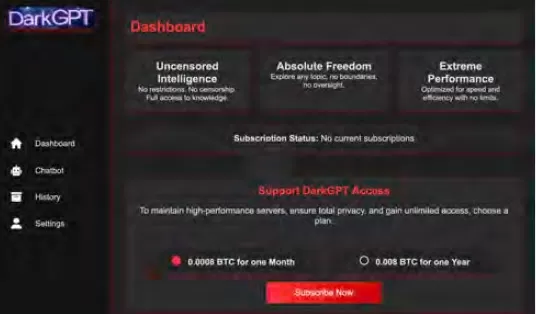

Available for as little as $75 a month, these models are tailored for offensive use, facilitating tasks like phishing, exploit generation, code obfuscation, vulnerability scanning, and credit card validation. Cybercrime groups, syndicates, and even nation-states are capitalizing on these tools, offering them as platforms, kits, and leasing services. Much like legitimate software-as-a-service (SaaS) applications, weaponized LLMs come with dashboards, APIs, regular updates, and sometimes even customer support.

VentureBeat is closely monitoring the rapid evolution of these weaponized LLMs. As their sophistication grows, the distinction between developer platforms and cybercrime kits is becoming increasingly blurred. With falling lease and rental prices, more attackers are exploring these platforms, heralding a new era of AI-driven threats.

Legitimate LLMs Under Threat

Weaponized LLMs have proliferated to the point where even legitimate LLMs are at risk of being compromised and integrated into criminal toolchains. According to Cisco's State of AI Security report, fine-tuned LLMs are 22 times more likely to produce harmful outputs than their base counterparts. While fine-tuning is crucial for enhancing contextual relevance, it also weakens safety measures, making models more susceptible to jailbreaks, prompt injections, and model inversion.

Cisco's research highlights that the more a model is refined for production, the more vulnerable it becomes. The core processes involved in fine-tuning, such as continuous adjustments, third-party integrations, coding, testing, and agentic orchestration, create new avenues for attackers to exploit. Once inside, attackers can quickly poison data, hijack infrastructure, alter agent behavior, and extract training data on a large scale. Without additional security layers, these meticulously fine-tuned models can become liabilities ripe for exploitation.

Fine-Tuning LLMs: A Double-Edged Sword

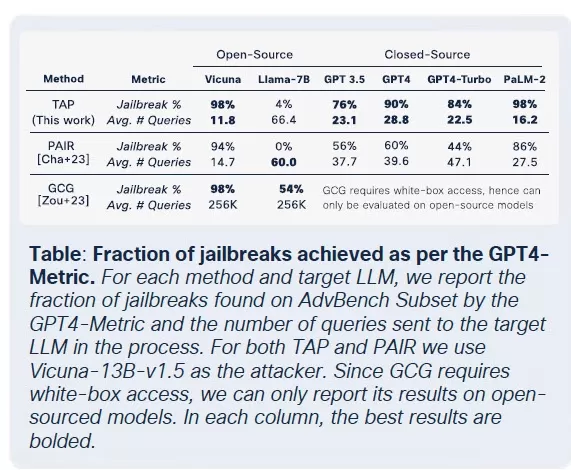

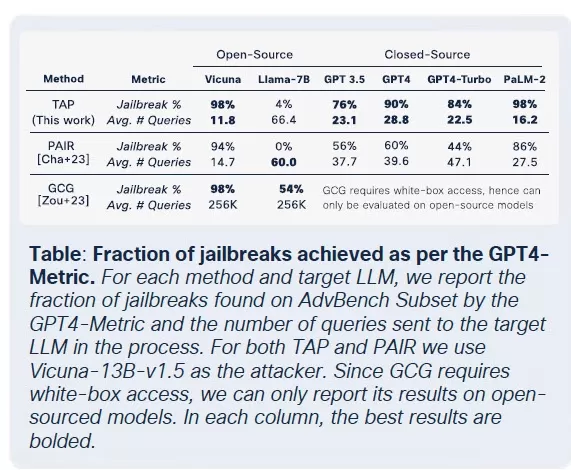

Cisco's security team conducted extensive research on the impact of fine-tuning on multiple models, including Llama-2-7B and Microsoft's domain-specific Adapt LLMs. Their tests spanned various sectors, including healthcare, finance, and law. A key finding was that fine-tuning, even with clean datasets, destabilizes the alignment of models, particularly in highly regulated fields like biomedicine and law.

Although fine-tuning aims to improve task performance, it inadvertently undermines built-in safety controls. Jailbreak attempts, which typically fail against foundation models, succeed at much higher rates against fine-tuned versions, especially in sensitive domains with strict compliance requirements. The results are stark: jailbreak success rates tripled, and malicious output generation increased by 2,200% compared to foundation models, the finding the report summarizes as fine-tuned models being 22 times more likely to produce harmful outputs. This trade-off means that while fine-tuning enhances utility, it also significantly broadens the attack surface.

The Commoditization of Malicious LLMs

Cisco Talos has been actively tracking the rise of these black-market LLMs, providing insights into their operations. Models like GhostGPT, DarkGPT, and FraudGPT are available on Telegram and the dark web for as little as $75 a month. These tools are designed for plug-and-play use in phishing, exploit development, credit card validation, and obfuscation.

Unlike mainstream models with built-in safety features, these malicious LLMs are pre-configured for offensive operations and come with APIs, updates, and dashboards that mimic commercial SaaS products.

Dataset Poisoning: A $60 Threat to AI Supply Chains

Cisco researchers, in collaboration with Google, ETH Zurich, and Nvidia, have revealed that for just $60, attackers can poison the foundational datasets of AI models without needing zero-day exploits. By exploiting expired domains or timing Wikipedia edits during dataset archiving, attackers can contaminate as little as 0.01% of datasets like LAION-400M or COYO-700M, significantly influencing downstream LLMs.
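To put the 0.01% figure in perspective, the short calculation below converts it into sample counts. It is only a back-of-the-envelope sketch: the dataset sizes are an assumption read off the dataset names (roughly 400 million and 700 million entries), and the function name is made up for illustration.

```python
from fractions import Fraction

# Assumption: nominal sizes are taken from the dataset names, not from the report.
NOMINAL_SIZES = {"LAION-400M": 400_000_000, "COYO-700M": 700_000_000}

# 0.01% written as an exact fraction to avoid floating-point rounding.
POISON_FRACTION = Fraction(1, 10_000)


def poisoned_samples(dataset_size: int, fraction: Fraction = POISON_FRACTION) -> int:
    """Return how many samples a given fraction of the dataset corresponds to."""
    return int(dataset_size * fraction)


for name, size in NOMINAL_SIZES.items():
    print(f"{name}: {poisoned_samples(size):,} samples at 0.01%")
```

Under those assumed sizes, 0.01% works out to tens of thousands of samples, a small share of the dataset but not a small absolute number.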

Methods such as split-view poisoning and frontrunning attacks take advantage of the inherent trust in web-crawled data. With most enterprise LLMs built on open data, these attacks can scale quietly and persist deep into inference pipelines, posing a serious threat to AI supply chains.
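The "split view" in split-view poisoning is the gap between what a dataset curator saw at a URL and what a later downloader receives from it. The sketch below illustrates that gap with a content digest recorded at curation time. It is a minimal illustration, not a method taken from the report: the `IndexEntry` record, its field names, and the example URL are all hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexEntry:
    """Hypothetical dataset index row: a URL plus a digest taken at curation time."""
    url: str
    sha256_at_curation: str


def sha256_hex(content: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(content).hexdigest()


def is_split_view(entry: IndexEntry, downloaded: bytes) -> bool:
    """True when the bytes fetched now differ from what the curator recorded."""
    return sha256_hex(downloaded) != entry.sha256_at_curation


# Simulated timeline: the curator indexes a file, the domain later expires,
# and a new owner serves different bytes from the same URL.
original = b"benign image bytes"
swapped = b"attacker-controlled bytes"
entry = IndexEntry("https://example.com/cat.jpg", sha256_hex(original))

print(is_split_view(entry, original))  # False
print(is_split_view(entry, swapped))   # True
```

A check like this only helps if digests were recorded when the index was built; an index that stores bare URLs gives the downloader nothing to compare against.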

Decomposition Attacks: Extracting Sensitive Data

One of the most alarming findings from Cisco's research is the ability of LLMs to leak sensitive training data without triggering safety mechanisms. Using a technique called decomposition prompting, researchers reconstructed over 20% of select articles from The New York Times and The Wall Street Journal. This method breaks down prompts into sub-queries that are deemed safe by guardrails, then reassembles the outputs to recreate paywalled or copyrighted content.

This type of attack poses a significant risk to enterprises, especially those using LLMs trained on proprietary or licensed datasets. The breach occurs not at the input level but through the model's outputs, making it difficult to detect, audit, or contain. For organizations in regulated sectors like healthcare, finance, or law, this not only raises concerns about GDPR, HIPAA, or CCPA compliance but also introduces a new class of risk where legally sourced data can be exposed through inference.
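Because each sub-query looks harmless on its own, a per-response check can miss what the combined outputs reveal. The sketch below shows that effect with a simple word-shingle coverage measure over a set of responses. It is an illustrative assumption, not the technique used in Cisco's research: the function names, the shingle size, and the toy text are all invented for the example.

```python
def shingles(text: str, n: int = 5) -> set[tuple[str, ...]]:
    """Set of overlapping n-word sequences in the text (lowercased)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def coverage(protected: str, outputs: list[str], n: int = 5) -> float:
    """Fraction of the protected text's shingles found across all outputs."""
    target = shingles(protected, n)
    if not target:
        return 0.0
    seen: set[tuple[str, ...]] = set()
    for out in outputs:
        seen |= shingles(out, n)
    return len(target & seen) / len(target)


# Toy protected text of 12 words, leaked in two halves by separate responses.
protected = "alpha beta gamma delta epsilon zeta eta theta iota kappa lambda mu"
first_half = "alpha beta gamma delta epsilon zeta"
second_half = "eta theta iota kappa lambda mu"

print(coverage(protected, [first_half], n=3))               # 0.4
print(coverage(protected, [first_half, second_half], n=3))  # 0.8
```

In this toy case each response alone covers 40% of the protected text, while the two together cover 80%, which is why aggregating outputs across a session matters more than inspecting any single reply.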

Final Thoughts: LLMs as the New Attack Surface

Cisco's ongoing research and Talos' dark web monitoring confirm that weaponized LLMs are becoming increasingly sophisticated, with a price and packaging war unfolding on the dark web. The findings underscore that LLMs are not merely tools at the edge of the enterprise; they are integral to its core. From the risks associated with fine-tuning to dataset poisoning and model output leaks, attackers view LLMs as critical infrastructure to be exploited.

The key takeaway from Cisco's report is clear: static guardrails are no longer sufficient. CISOs and security leaders must gain real-time visibility across their entire IT estate, enhance adversarial testing, and streamline their tech stack to keep pace with these evolving threats. They must recognize that LLMs represent a dynamic attack surface that becomes more vulnerable as the models are fine-tuned.

Comments (31)

BillyGreen

August 20, 2025 at 3:01:19 AM EDT

This article on weaponized LLMs is wild! 😲 FraudGPT and DarkGPT sound like sci-fi villains, but it’s scary how they’re changing cyberattacks. Makes me wonder if AI’s getting too smart for our own good.

JerryMoore

April 25, 2025 at 1:31:29 AM EDT

After using Cisco Warns, I was surprised to learn that LLMs can be 22 times more likely to go rogue. The FraudGPT news really gave me chills. I need to be more careful online. It seems the time has come to strengthen security! 😅

RichardJackson

April 23, 2025 at 10:08:25 PM EDT

This tool is a wake-up call for cybersecurity! The stats on rogue LLMs are frightening but eye-opening. It's overwhelming to think about how these models could be weaponized, but it's important information. I'd like to know more about how to protect against them! 😅

AndrewGarcía

April 23, 2025 at 10:31:51 AM EDT

This tool is a wake-up call for cybersecurity! The statistics on rogue LLMs are scary but eye-opening. It's a bit overwhelming to think about how these models can be weaponized, but it's crucial information. Maybe more on how to protect against them would be great! 😅

MatthewGonzalez

April 23, 2025 at 2:48:48 AM EDT

This tool really made me see how dangerous AI can be! It's scary to think that these models can be used for cyberattacks. The information is super detailed and well explained, but sometimes it's a bit too technical for me. Still, it's essential knowledge for anyone working in cybersecurity! 😱

FrankLopez

April 21, 2025 at 8:59:59 PM EDT

This tool is a wake-up call for cybersecurity! The stats on rogue LLMs are scary but eye-opening. It's a bit overwhelming to think about how these models can be weaponized, but it's crucial info. Maybe a bit more on how to protect against them would be great! 😅
