Google Launches On-Device Gemini AI Model for Robots

Google DeepMind Unveils Gemini Robotics On-Device for Offline Robot Control

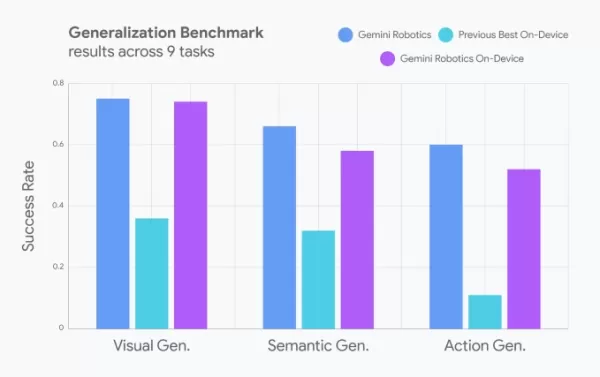

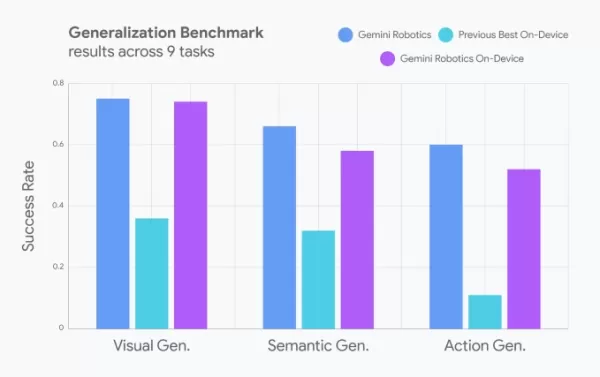

Google DeepMind just dropped an exciting update in the robotics space: Gemini Robotics On-Device, a new vision-language-action (VLA) model that lets robots perform tasks without an internet connection. It builds on the original Gemini Robotics model (released in March) with one key upgrade: it runs locally on the robot.

Developers can now fine-tune the model's behavior using natural language prompts, which makes it easier to adapt robots to different tasks. Google claims the model performs nearly as well as its cloud-based counterpart and outperforms other on-device models, though the company didn't specify which ones.

Image Credits: Google

Real-World Robot Skills: From Laundry to Assembly Lines

In demos, robots running this model successfully:

- Unzipped bags

- Folded clothes

- Adapted to new objects (like assembling parts on an industrial belt)

Originally trained for ALOHA robots, the model was later adapted to work on:

- Franka FR3 (a bi-arm industrial robot)

- Apptronik’s Apollo humanoid

Gemini Robotics SDK: Training Robots with Demonstrations

Google also announced a Gemini Robotics SDK that lets developers train robots on new tasks from 50 to 100 demonstrations in the MuJoCo physics simulator. This could speed up robot learning for real-world applications.

The Bigger Picture: AI’s Push into Robotics

Google isn’t alone in this race:

- Nvidia is building foundation models for humanoids

- Hugging Face is working on open models—and actual robots

- RLWRLD (a Korean startup) is developing foundation models for robotics

The future of AI-powered robots is heating up—and it’s happening offline, on-device, and in real time.

Comments (3)

JuanLewis

August 27, 2025 at 1:01:00 AM EDT

Super cool that robots can now think offline with Gemini! Imagine them zipping around without Wi-Fi, making decisions on the fly. Can’t wait to see this in action at home! 🤖

RonaldNelson

August 4, 2025 at 2:48:52 AM EDT

This is wild! Robots running Gemini AI offline? Google’s pushing the future hard. Can’t wait to see how this shakes up industries, but I’m low-key worried about robots getting too smart too fast. 😅

BrianRoberts

July 27, 2025 at 9:19:30 PM EDT

Super cool to see Google pushing offline AI for robots with Gemini! 🤖 Makes me wonder how soon we’ll have fully autonomous helpers at home. Exciting times!
