New AGI Test Proves Challenging, Stumps Majority of AI Models

The Arc Prize Foundation, co-founded by renowned AI researcher François Chollet, has unveiled a new benchmark called ARC-AGI-2 in a blog post. The test is designed to measure the general intelligence of leading AI models, and so far it is proving a tough nut to crack for most of them.

According to the Arc Prize leaderboard, even advanced "reasoning" AI models like OpenAI's o1-pro and DeepSeek's R1 are only managing scores between 1% and 1.3%. Meanwhile, powerful non-reasoning models such as GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Flash are hovering around the 1% mark.

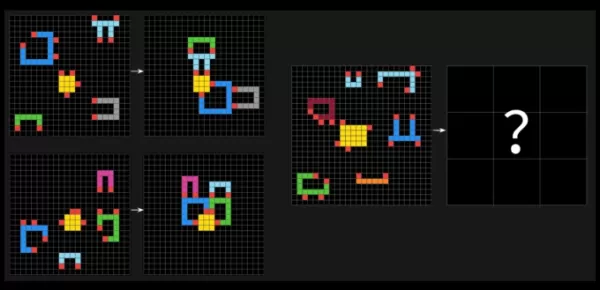

ARC-AGI tests challenge AI systems with puzzle-like problems, requiring them to identify visual patterns in grids of different-colored squares and generate the correct "answer" grid. These problems are designed to test an AI's ability to adapt to new, unseen challenges.
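To make the task format concrete, here is a minimal Python sketch of an ARC-style puzzle. It assumes the layout used in the public ARC datasets: a few `train` input/output pairs plus a `test` input, with each grid stored as a list of rows of integers 0-9 that stand for colors. The grids and the candidate rule `mirror_horizontally` are invented for illustration, and real ARC-AGI-2 tasks are far harder than a simple flip. The solver's job is to infer a rule from the training pairs and apply it to the test input.

```python
# A toy ARC-style task. Grids are lists of rows; each cell is an integer 0-9
# standing for a color. The task itself is made up for illustration.
Grid = list[list[int]]

task = {
    "train": [
        {"input": [[1, 0, 0], [0, 2, 0]], "output": [[0, 0, 1], [0, 2, 0]]},
        {"input": [[3, 3, 0], [0, 0, 4]], "output": [[0, 3, 3], [4, 0, 0]]},
    ],
    "test": [{"input": [[5, 0, 6], [0, 7, 0]]}],
}


def mirror_horizontally(grid: Grid) -> Grid:
    """Hypothetical candidate rule: reverse every row (a left-right flip)."""
    return [list(reversed(row)) for row in grid]


def fits_all_examples(rule, examples) -> bool:
    """Accept a rule only if it reproduces every training output exactly."""
    return all(rule(ex["input"]) == ex["output"] for ex in examples)


if fits_all_examples(mirror_horizontally, task["train"]):
    prediction = mirror_horizontally(task["test"][0]["input"])
    print(prediction)  # [[6, 0, 5], [0, 7, 0]]
```

A rule counts only if it explains every training pair; one that happened to fit the first pair but not the second would be rejected before it ever touches the test input.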

To establish a human baseline, the Arc Prize Foundation had over 400 people take the ARC-AGI-2 test. On average, these "panels" of humans achieved a 60% success rate, significantly outperforming the AI models.
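Scores such as the 60% human average and the roughly 1% model results are reported as the share of tasks solved. The sketch below assumes all-or-nothing grading, where a predicted grid earns credit only if every cell matches the answer. This is a simplification: the official Arc Prize harness may differ in details such as how many attempts it allows per task. The function name `exact_match_accuracy`, the task IDs, and the grids are hypothetical toy data, not benchmark tasks or results.

```python
Grid = list[list[int]]


def exact_match_accuracy(predictions: dict[str, Grid], answers: dict[str, Grid]) -> float:
    """Return the fraction of tasks whose predicted grid equals the answer exactly.

    A missing prediction counts as a miss, and a grid that is only mostly
    right earns no partial credit.
    """
    if not answers:
        raise ValueError("no tasks to score")
    solved = sum(1 for task_id, answer in answers.items()
                 if predictions.get(task_id) == answer)
    return solved / len(answers)


# Hypothetical toy data, not real benchmark tasks or results.
answers = {
    "task_a": [[1, 0], [0, 1]],
    "task_b": [[2, 2], [2, 2]],
    "task_c": [[3]],
    "task_d": [[0, 4], [4, 0]],
    "task_e": [[5, 5]],
}
predictions = {
    "task_a": [[1, 0], [0, 1]],  # correct
    "task_b": [[2, 2], [2, 0]],  # one wrong cell, so no credit
    "task_c": [[3]],             # correct
    "task_d": [[4, 0], [0, 4]],  # wrong pattern
    # task_e: no prediction submitted
}
print(f"{exact_match_accuracy(predictions, answers):.0%}")  # 40%
```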

A sample question from ARC-AGI-2. Image Credits: Arc Prize

François Chollet claimed on X that ARC-AGI-2 is a more accurate measure of an AI model's true intelligence than its predecessor, ARC-AGI-1. The Arc Prize Foundation's tests are designed to assess whether an AI can efficiently learn new skills beyond its training data.

Chollet emphasized that ARC-AGI-2 prevents AI models from relying on "brute force" (extensive computing power) to find solutions, a flaw he had acknowledged in ARC-AGI-1. To address this, ARC-AGI-2 introduces an efficiency metric and requires models to interpret patterns on the fly rather than rely on memorization.

In a blog post, Arc Prize Foundation co-founder Greg Kamradt stressed that intelligence isn't just about solving problems or achieving high scores. "The efficiency with which those capabilities are acquired and deployed is a crucial, defining component," he wrote. "The core question being asked is not just, 'Can AI acquire [the] skill to solve a task?' but also, 'At what efficiency or cost?'"

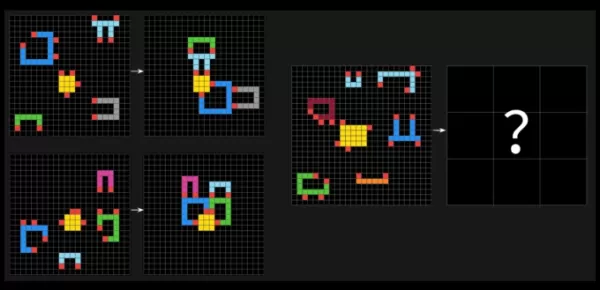

ARC-AGI-1 remained unbeaten for about five years until December 2024, when OpenAI's advanced reasoning model o3 surpassed all other AI models and matched human performance. However, o3's success on ARC-AGI-1 came at a significant cost. The version that scored an impressive 75.7% on ARC-AGI-1, o3 (low), managed only a paltry 4% on ARC-AGI-2 while using $200 worth of computing power per task.

Comparison of frontier AI model performance on ARC-AGI-1 and ARC-AGI-2. Image Credits: Arc Prize

The introduction of ARC-AGI-2 comes at a time when many in the tech industry are calling for new, unsaturated benchmarks to measure AI progress. Thomas Wolf, co-founder of Hugging Face, recently told TechCrunch that the AI industry lacks sufficient tests to measure key traits of artificial general intelligence, such as creativity.

Alongside the new benchmark, the Arc Prize Foundation announced the Arc Prize 2025 contest, challenging developers to achieve 85% accuracy on the ARC-AGI-2 test while spending only $0.42 per task.
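The contest pairs an accuracy target with a cost ceiling. As a rough sketch, the check below encodes only the two thresholds quoted above and plugs in the o3 (low) figures reported earlier in the article (4% on ARC-AGI-2 at about $200 per task). The `Result` type and `meets_prize_bar` function are hypothetical names, and the actual prize rules contain conditions this does not model.

```python
from dataclasses import dataclass


@dataclass
class Result:
    name: str
    accuracy: float       # fraction of ARC-AGI-2 tasks solved, 0.0 to 1.0
    cost_per_task: float  # US dollars of compute spent per task


# Arc Prize 2025 thresholds as quoted in the article.
TARGET_ACCURACY = 0.85
MAX_COST_PER_TASK = 0.42


def meets_prize_bar(r: Result) -> bool:
    """Both conditions must hold: high accuracy at high cost does not qualify."""
    return r.accuracy >= TARGET_ACCURACY and r.cost_per_task <= MAX_COST_PER_TASK


o3_low = Result("o3 (low)", accuracy=0.04, cost_per_task=200.0)
print(meets_prize_bar(o3_low))                          # False
print(round(o3_low.cost_per_task / MAX_COST_PER_TASK))  # 476
print(round(TARGET_ACCURACY / o3_low.accuracy, 2))      # 21.25
```

At those figures, o3 (low) would need to cut its per-task compute cost by a factor of roughly 476 and raise its accuracy more than twentyfold to clear both bars.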

Comments (36)

WillieRoberts

July 29, 2025 at 8:25:16 AM EDT

This ARC-AGI-2 test sounds brutal! Most AI models are getting crushed, which makes me wonder if we’re hyping AI too much. 🤔 Cool to see Chollet shaking things up though!

GeorgeMiller

April 14, 2025 at 4:35:00 AM EDT

This ARC-AGI-2 test is really difficult. I tried it with several AI models and they all got stuck. It's great to see how it pushes the limits, but it's frustrating when not even the top models can solve it. Maybe it's time for a new approach to AI development. Keep pushing the limits, but don't forget to celebrate the small victories too!

JonathanKing

April 13, 2025 at 9:46:37 PM EDT

This new AGI test is really difficult! I tried it, and not even the smartest AI models I know could solve it. It's like a puzzle that keeps you up all night. Congratulations to François Chollet for pushing the limits, but it's frustrating when even the best fail. Maybe next time, right?

DonaldGonzález

April 13, 2025 at 3:05:45 PM EDT

The ARC-AGI-2 test is really hard! I tried it with several AI models, but none of them could solve it. Pushing the limits is great, but it's a bit frustrating when the top models can't solve it. Maybe AI development needs a new approach. Let's celebrate the small victories too!

HaroldMoore

April 13, 2025 at 11:54:39 AM EDT

This new AGI test is super hard! I tried it, but even the smartest AI model I know couldn't solve it. It's like a puzzle that keeps you up late at night. Respect to François Chollet, but it's frustrating to see the best AI fail. Next time for sure, right?

GregoryWilson

April 13, 2025 at 11:36:48 AM EDT

So the new AGI test is hard? I hear most AI models are struggling with it. That's impressive, but kind of scary too. I wonder how far we are from real AI. Anyway, I hope they keep pushing the limits. Let's see how long it takes for someone to solve it!
