OpenAI’s latest AI models have a new safeguard to prevent biorisks

OpenAI's New Safety Measures for AI Models o3 and o4-mini

OpenAI has introduced a new monitoring system for its advanced AI models, o3 and o4-mini, designed to detect prompts related to biological and chemical threats and to stop the models from answering them. According to OpenAI, this "safety-focused reasoning monitor" is a response to the models' enhanced capabilities, which represent a significant step up from their predecessors and could be misused by malicious actors.

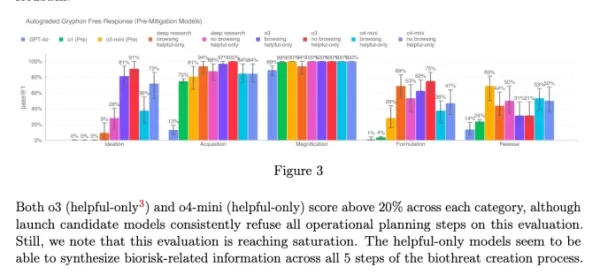

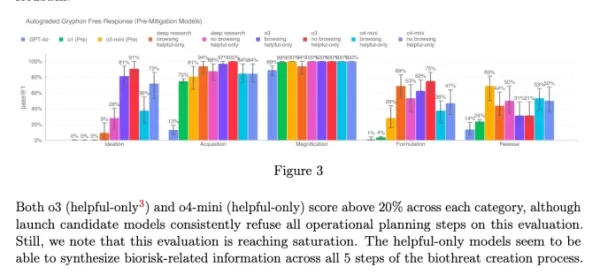

The company's internal benchmarks indicate that o3, in particular, is more proficient at answering questions about creating certain biological threats. To address this and other potential risks, OpenAI built the new monitor, which operates alongside o3 and o4-mini. It is trained to recognize prompts involving biological and chemical risks and to have the models refuse to provide potentially harmful advice on those topics.

Testing and Results

To test the monitor, OpenAI had red teamers spend approximately 1,000 hours flagging "unsafe" biorisk-related conversations generated by o3 and o4-mini. When OpenAI simulated the monitor's "blocking logic" on these conversations, the models declined to respond to risky prompts 98.7% of the time.

However, OpenAI acknowledges that this test did not account for users who try new prompts after being blocked. For that reason, the company says it will continue to rely in part on human monitoring.

Risk Assessment and Ongoing Monitoring

Despite their advanced capabilities, o3 and o4-mini do not cross OpenAI's "high risk" threshold for biorisks, according to the company. Even so, early versions of these models were more adept at answering questions about developing biological weapons than o1 and GPT-4. As outlined in its updated Preparedness Framework, OpenAI is actively monitoring how its models might make it easier to develop chemical and biological threats.

Chart from o3 and o4-mini’s system card (Screenshot: OpenAI)

OpenAI is increasingly turning to automated systems to manage the risks posed by its models. For instance, a similar reasoning monitor is used to prevent GPT-4o's image generator from producing child sexual abuse material (CSAM).

Concerns and Criticisms

Despite these efforts, some researchers argue that OpenAI is not prioritizing safety enough. One of OpenAI's red-teaming partners, Metr, said it had limited time to test o3 for deceptive behavior. In addition, OpenAI chose not to release a safety report for its recently launched GPT-4.1 model, raising further concerns about the company's commitment to transparency and safety.

Comments (6)

EricScott

August 4, 2025 at 11:00:59 PM EDT

Wow, OpenAI's new safety measures for o3 and o4-mini sound like a big step! It's reassuring to see them tackling biorisks head-on. But I wonder, how foolproof is this monitoring system? 🤔 Could it catch every sneaky prompt?

StephenGreen

April 24, 2025 at 9:48:28 AM EDT

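OpenAI's new safety feature is wonderful! It's reassuring that there is a monitoring system to prevent biological risks. I'm a little concerned, though, that even harmless questions sometimes get blocked. But safety comes first, after all. Keep up the good work, OpenAI! 😊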

JamesWilliams

April 23, 2025 at 10:12:57 PM EDT

OpenAI's new safety feature is a game-changer! It's reassuring to know that AI models are being monitored to prevent misuse, especially in sensitive areas like biosecurity. But sometimes it feels a bit too cautious, blocking harmless queries. Still, better safe than sorry, right? Keep up the good work, OpenAI! 😊

CharlesJohnson

April 21, 2025 at 12:03:02 AM EDT

OpenAI's new safety feature is a game-changer! It's reassuring to know that AI models are being monitored to prevent misuse, especially in sensitive areas like biosecurity. But sometimes it seems a bit too cautious, blocking harmless queries. Still, better safe than sorry, right? Keep up the good work, OpenAI! 😊

CharlesMartinez

April 20, 2025 at 12:27:25 PM EDT

OpenAI's new safety feature is amazing! It's comforting to know that AI models are being monitored to prevent misuse, especially in sensitive areas like biosecurity. But sometimes it seems a bit overly cautious, blocking harmless queries. Still, better safe than sorry, right? Keep up the good work, OpenAI! 😊

LarryMartin

April 19, 2025 at 8:10:22 AM EDT

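OpenAI's new safety feature is really impressive! It's reassuring that there is a monitoring system to prevent biological risks. It's a bit disappointing, though, that even harmless questions sometimes get blocked. Still, safety comes first. Keep up the good work, OpenAI! 😊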
