Microsoft Study Reveals AI Models' Limitations in Software Debugging

AI models from OpenAI, Anthropic, and other leading AI labs are increasingly used for coding tasks. Google CEO Sundar Pichai said in October that AI generates 25% of new code at the company, while Meta CEO Mark Zuckerberg aims to deploy AI coding tools widely across the social media giant.

However, even top-performing models struggle to fix software bugs that experienced developers handle with ease.

A recent study from Microsoft Research, Microsoft’s R&D division, shows that models like Anthropic’s Claude 3.7 Sonnet and OpenAI’s o3-mini fail to resolve many issues in the SWE-bench Lite software development benchmark. The findings highlight that, despite ambitious claims from firms like OpenAI, AI still falls short of human expertise in areas like coding.

The study’s researchers tested nine models as the foundation for a “single prompt-based agent” equipped with debugging tools, including a Python debugger. The agent was tasked with addressing 300 curated software debugging challenges from SWE-bench Lite.

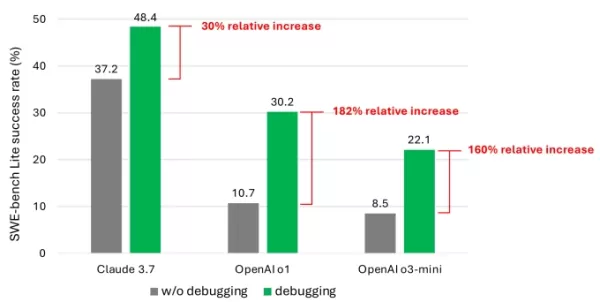

The results showed that even with advanced models, the agent rarely resolved more than half of the tasks successfully. Claude 3.7 Sonnet led with a 48.4% success rate, followed by OpenAI’s o1 at 30.2%, and o3-mini at 22.1%.

A chart from the study showing the performance boost models gained from debugging tools. Image Credits: Microsoft

What explains the lackluster results? Some models struggled to effectively use available debugging tools or identify which tools suited specific issues. The primary issue, according to the researchers, was a lack of sufficient training data, particularly data capturing “sequential decision-making processes” like human debugging traces.

“We believe that training or fine-tuning these models can improve their debugging capabilities,” the researchers wrote. “However, this requires specialized data, such as trajectory data capturing agents interacting with a debugger to gather information before proposing fixes.”
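To make the idea of “trajectory data” concrete, here is a minimal sketch of how a single debugging session could be recorded. It is purely illustrative and not the format used in the study: the class names, field names, task ID, and the sample debugger commands and outputs are all hypothetical.

```python
from dataclasses import dataclass, field, asdict
from typing import List
import json


@dataclass
class DebugStep:
    """One agent action and what the debugger printed in response."""
    command: str      # e.g. a pdb-style command such as "p total"
    observation: str  # the debugger output the agent saw afterwards


@dataclass
class DebugTrajectory:
    """Ordered record of debugger interactions ending in a proposed fix.

    Hypothetical structure for illustration only.
    """
    task_id: str
    steps: List[DebugStep] = field(default_factory=list)
    proposed_patch: str = ""

    def record(self, command: str, observation: str) -> None:
        # Append in order so the sequence of decisions is preserved.
        self.steps.append(DebugStep(command, observation))

    def to_json(self) -> str:
        # asdict() recursively converts nested dataclasses to plain dicts.
        return json.dumps(asdict(self))


# Hypothetical session: set a breakpoint, inspect a variable, then propose a fix.
trajectory = DebugTrajectory(task_id="example-task-1")
trajectory.record("b utils.py:42", "Breakpoint 1 at utils.py:42")
trajectory.record("p total", "None")
trajectory.proposed_patch = "initialize total = 0 before the loop"
```

The point of such a record is that it keeps the information-gathering steps in order alongside the final fix, which is the kind of sequential decision-making the researchers say is missing from current training data.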

The findings aren’t surprising. Numerous studies have shown that AI-generated code often introduces security flaws and errors due to weaknesses in understanding programming logic. A recent test of Devin, a well-known AI coding tool, revealed it could only complete three out of 20 programming tasks.

Microsoft’s study offers one of the most in-depth examinations of this ongoing challenge for AI models. While it’s unlikely to curb investor interest in AI-powered coding tools, it may prompt developers and their leaders to reconsider relying heavily on AI for coding tasks.

Notably, several tech leaders have pushed back against the idea that AI will eliminate coding jobs. Microsoft co-founder Bill Gates, Replit CEO Amjad Masad, Okta CEO Todd McKinnon, and IBM CEO Arvind Krishna have all expressed confidence that programming as a profession will endure.

Related article

ChatGPT Exploited to Steal Sensitive Gmail Data in Security Breach

Security Alert: Researchers Demonstrate AI-Powered Data Exfiltration TechniqueCybersecurity experts recently uncovered a concerning vulnerability wherein ChatGPT's Deep Research feature could be manipulated to silently extract confidential Gmail data

ChatGPT Exploited to Steal Sensitive Gmail Data in Security Breach

Security Alert: Researchers Demonstrate AI-Powered Data Exfiltration TechniqueCybersecurity experts recently uncovered a concerning vulnerability wherein ChatGPT's Deep Research feature could be manipulated to silently extract confidential Gmail data

Anthropic Admits Claude AI Error in Legal Filing, Calls It "Embarrassing and Unintentional"

Anthropic has addressed accusations regarding an AI-generated source in its ongoing legal dispute with music publishers, characterizing the incident as an "unintentional citation error" made by its Claude chatbot.

The disputed citation appeared in a

Anthropic Admits Claude AI Error in Legal Filing, Calls It "Embarrassing and Unintentional"

Anthropic has addressed accusations regarding an AI-generated source in its ongoing legal dispute with music publishers, characterizing the incident as an "unintentional citation error" made by its Claude chatbot.

The disputed citation appeared in a

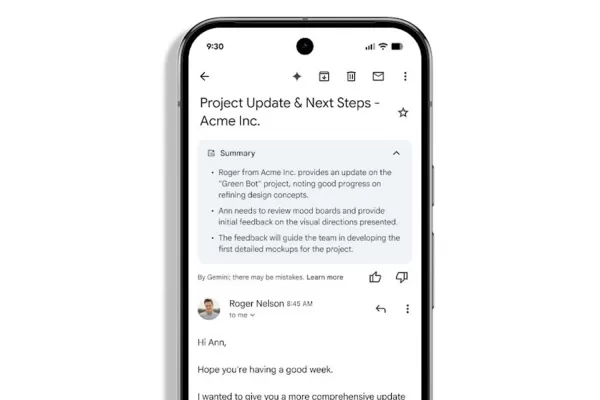

Gmail Rolls Out Automatic AI-Powered Email Summaries

Gemini-Powered Email Summaries Coming to Workspace Users

Google Workspace subscribers will notice Gemini's enhanced role in managing their inboxes as Gmail begins automatically generating summaries for complex email threads. These AI-created digests

Comments (6)

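ThomasScott

September 7, 2025 at 12:30:35 AM EDT

This Microsoft study is so true 😂 A few days ago I used Copilot to fix a bug, and it actually took code that was correct and made it worse… Looks like AI-written code still needs human review; at least for now, don't rely on these tools too much for debugging.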

HenryWalker

August 17, 2025 at 1:00:59 AM EDT

It's wild that AI is pumping out 25% of Google's code, but this Microsoft study shows it's not perfect at debugging. Kinda makes you wonder if we're trusting these models a bit too much too soon. 😅 Anyone else worried about buggy AI code sneaking into big projects?

BrianRoberts

August 14, 2025 at 3:00:59 AM EDT

It's wild that AI is cranking out 25% of Google's code, but the debugging struggles are real. Makes me wonder if we're leaning too hard on AI without fixing its blind spots first. 🧑‍💻

KevinDavis

August 9, 2025 at 5:00:59 PM EDT

It's wild that AI is pumping out 25% of Google's code, but the debugging limitations in this study make me wonder if we're leaning too hard on these models without enough human oversight. 🤔

PeterThomas

July 31, 2025 at 10:48:18 PM EDT

Interesting read! AI generating 25% of Google's code is wild, but I'm not surprised it struggles with debugging. Machines can churn out code fast, but catching tricky bugs? That’s still a human’s game. 🧑‍💻

JuanWhite

July 23, 2025 at 12:59:29 AM EDT

AI coding sounds cool, but if it can't debug properly, what's the point? 🤔 Feels like we're hyping up half-baked tools while devs still clean up the mess.
