Anthropic Admits Claude AI Error in Legal Filing, Calls It Embarrassing and Unintentional

Anthropic has addressed accusations regarding an AI-generated source in its ongoing legal dispute with music publishers, characterizing the incident as an "unintentional citation error" made by its Claude chatbot.

The disputed citation appeared in an April 30th legal filing submitted by Anthropic data scientist Olivia Chen, part of the company's defense against allegations that Claude was trained using copyrighted song lyrics. During court proceedings, legal counsel representing Universal Music Group and other publishers flagged the cited sources as potentially fabricated, suggesting they might have been invented by Anthropic's AI system.

In a recently filed response, Anthropic's defense attorney Ivana Dukanovic clarified that the cited material was authentic, but that Claude had been used to format the legal citations in the document. The company acknowledged that although automated checks caught and corrected inaccurate volume and page numbers generated by the AI, certain wording errors went undetected during manual review.

Dukanovic explained, "The system correctly identified the publication name, year, and accessible link, but erroneously included incorrect article titles and author information," emphasizing this represented an honest mistake rather than deliberate misrepresentation. Anthropic expressed regret for the confusion caused, describing the oversight as "an unfortunate but unintentional error."

This case joins a growing list of legal proceedings where AI-assisted citation has created complications. Recently, a California judge reprimanded attorneys for not disclosing AI's role in preparing a brief containing non-existent legal references. The issue gained further attention last December when a misinformation researcher conceded that ChatGPT had invented citations in one of his legal submissions.

Related article

ChatGPT Exploited to Steal Sensitive Gmail Data in Security Breach

Security Alert: Researchers Demonstrate AI-Powered Data Exfiltration TechniqueCybersecurity experts recently uncovered a concerning vulnerability wherein ChatGPT's Deep Research feature could be manipulated to silently extract confidential Gmail data

ChatGPT Exploited to Steal Sensitive Gmail Data in Security Breach

Security Alert: Researchers Demonstrate AI-Powered Data Exfiltration TechniqueCybersecurity experts recently uncovered a concerning vulnerability wherein ChatGPT's Deep Research feature could be manipulated to silently extract confidential Gmail data

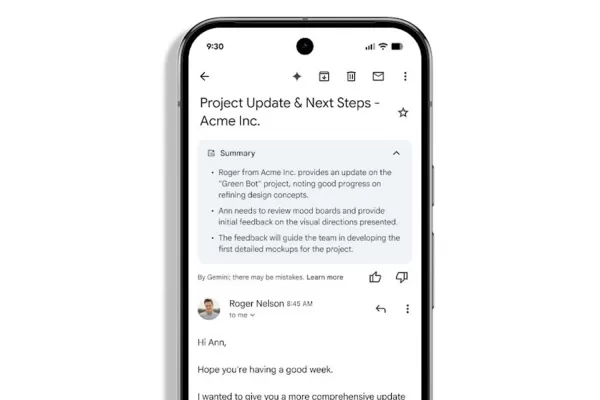

Gmail Rolls Out Automatic AI-Powered Email Summaries

Gemini-Powered Email Summaries Coming to Workspace Users

Google Workspace subscribers will notice Gemini's enhanced role in managing their inboxes as Gmail begins automatically generating summaries for complex email threads. These AI-created digests

Gmail Rolls Out Automatic AI-Powered Email Summaries

Gemini-Powered Email Summaries Coming to Workspace Users

Google Workspace subscribers will notice Gemini's enhanced role in managing their inboxes as Gmail begins automatically generating summaries for complex email threads. These AI-created digests

Apple Could Delay Next iPhone Launch for Foldable Model Release

According to The Information, Apple is preparing a significant reshuffle of its iPhone release calendar for 2026, introducing the company's first foldable iPhone alongside newly scheduled "Air" models. This strategic shift reportedly moves the standa

Comments (0)

0/200

Apple Could Delay Next iPhone Launch for Foldable Model Release

According to The Information, Apple is preparing a significant reshuffle of its iPhone release calendar for 2026, introducing the company's first foldable iPhone alongside newly scheduled "Air" models. This strategic shift reportedly moves the standa

Comments (0)

0/200
