Study: OpenAI Models Memorized Copyrighted Content

A recent study suggests that OpenAI may have indeed used copyrighted material to train some of its AI models, adding fuel to the ongoing legal battles the company faces. Authors, programmers, and other content creators have accused OpenAI of using their works—such as books and code—without permission to develop its AI models. While OpenAI has defended itself by claiming fair use, the plaintiffs argue that U.S. copyright law doesn't provide an exception for training data.

The study, a collaboration between researchers from the University of Washington, the University of Copenhagen, and Stanford, introduces a new technique for detecting "memorized" training data in models accessed through an API, like those from OpenAI. AI models essentially learn from vast amounts of data to recognize patterns, enabling them to create essays, images, and more. Although most outputs aren't direct copies of the training data, some inevitably are due to the learning process. For instance, image models have been known to reproduce movie screenshots, while language models have been caught essentially plagiarizing news articles.

The method described in the study focuses on "high-surprisal" words—words that are unusual in a given context. For example, in the sentence "Jack and I sat perfectly still with the radar humming," "radar" would be a high-surprisal word because it's less expected than words like "engine" or "radio" to precede "humming."
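To make "surprisal" concrete: in information theory, the surprisal of a word is the negative log of the probability a model assigns to it in context, so rarer words score higher. The sketch below is a minimal illustration, not the study's code. The probabilities in `toy_probs` are invented for this example, and the helper names `surprisal_bits` and `rank_by_surprisal` are hypothetical.

```python
import math

# Hypothetical probabilities a language model might assign to the word that
# fills the blank in "... with the ____ humming". Invented for illustration.
toy_probs = {"engine": 0.30, "radio": 0.20, "radar": 0.01}


def surprisal_bits(p: float) -> float:
    """Return the surprisal (self-information) of probability p, in bits."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(p)


def rank_by_surprisal(probs: dict) -> list:
    """Return (word, surprisal) pairs, most surprising word first."""
    scored = [(word, surprisal_bits(p)) for word, p in probs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for word, bits in rank_by_surprisal(toy_probs):
        print(f"{word}: {bits:.2f} bits")
```

With these made-up numbers, "radar" ranks as the most surprising candidate, which matches the intuition in the example sentence.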

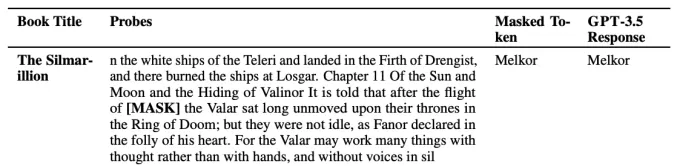

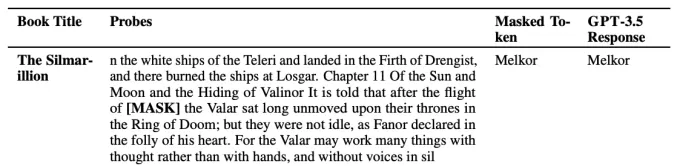

The researchers tested several OpenAI models, including GPT-4 and GPT-3.5, by removing high-surprisal words from excerpts of fiction books and New York Times articles and asking the models to predict these missing words. If the models accurately guessed the words, it suggested they had memorized the text during training.
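The masking step described above can be sketched roughly as follows. This is an assumption-laden illustration, not the researchers' implementation: `mask_word`, `probe_accuracy`, and `toy_model` are hypothetical names, the second passage is invented, and `toy_model` is a stand-in for a real API call that simply pretends to have memorized one passage.

```python
import re
from typing import Callable, List, Tuple

MASK = "[MASK]"


def mask_word(passage: str, target: str) -> str:
    """Replace the first whole-word occurrence of target with MASK."""
    pattern = r"\b" + re.escape(target) + r"\b"
    masked, count = re.subn(pattern, MASK, passage, count=1)
    if count == 0:
        raise ValueError(f"{target!r} not found in passage")
    return masked


def probe_accuracy(
    examples: List[Tuple[str, str]],
    guess_fn: Callable[[str], str],
) -> float:
    """Fraction of masked words that guess_fn recovers exactly."""
    hits = 0
    for passage, target in examples:
        guess = guess_fn(mask_word(passage, target))
        hits += int(guess.strip().lower() == target.lower())
    return hits / len(examples)


# (passage, high-surprisal word). The second passage is invented.
examples = [
    ("Jack and I sat perfectly still with the radar humming.", "radar"),
    ("The kettle sat on the stove with the lid rattling.", "lid"),
]

# Stand-in for a model: it "remembers" only the first passage.
_memorized = {mask_word(*examples[0]): "radar"}


def toy_model(masked_passage: str) -> str:
    """Hypothetical model: exact recall if memorized, else a common word."""
    return _memorized.get(masked_passage, "engine")


if __name__ == "__main__":
    print(probe_accuracy(examples, toy_model))
```

In a real probe, `toy_model` would be replaced by a call to the model under test. High accuracy on unlikely words is read as a hint of memorization, since such words are hard to guess from context alone.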

An example of having a model “guess” a high-surprisal word. Image Credits: OpenAI

The results indicated that GPT-4 had likely memorized parts of popular fiction books, including those in the BookMIA dataset of copyrighted ebooks. It also appeared to have memorized some New York Times articles, though at a lower frequency.

Abhilasha Ravichander, a doctoral student at the University of Washington and co-author of the study, emphasized to TechCrunch that these findings highlight the "contentious data" that might have been used to train these models. "In order to have large language models that are trustworthy, we need to have models that we can probe and audit and examine scientifically," Ravichander stated. "Our work aims to provide a tool to probe large language models, but there is a real need for greater data transparency in the whole ecosystem."

OpenAI has pushed for more relaxed rules on using copyrighted data to develop AI models. Although the company has some content licensing agreements and offers opt-out options for copyright holders, it has lobbied various governments to establish "fair use" rules specifically for AI training.

Comments (32)

WilliamGonzalez

August 25, 2025 at 5:01:06 AM EDT

This is wild! OpenAI might’ve gobbled up copyrighted stuff to train their models? I’m not shocked, but it’s kinda shady. Hope those authors and coders get some justice! 😤

GregoryBaker

August 23, 2025 at 7:01:18 AM EDT

This is wild! OpenAI might've trained their models on copyrighted stuff? 😳 I wonder how many books and code snippets got swept up in that data vacuum. Ethics in AI is such a messy topic right now.

JohnGarcia

April 23, 2025 at 11:10:14 AM EDT

I was a bit surprised that OpenAI may have used copyrighted material to train its models. It's a little disappointing, but I guess it's the Wild West out there in the AI world. 🤔 Maybe they should be more careful next time?

TimothyMitchell

April 21, 2025 at 8:12:42 PM EDT

The study suggesting that OpenAI trains its AI on copyrighted material is really surprising! It's unfortunate for creators, but it's interesting to learn how AI is trained. Maybe more transparency is needed? 🤔

WillLopez

April 21, 2025 at 7:49:05 AM EDT

The study saying OpenAI used copyrighted material to train its AI is truly shocking! It's a shame for creators, but it's interesting to learn how AI gets trained. Should OpenAI be more transparent? 🤔

WillMitchell

April 20, 2025 at 11:30:11 PM EDT

This study about OpenAI's models using copyrighted content is pretty scary! 😱 It's great that AI is getting smarter, but using books and code without permission doesn't seem right. I hope they sort it out soon! 🤞
