Meta's AI Model Benchmarks: Misleading?

So, Meta dropped their new AI model, Maverick, over the weekend, and it's already making waves by snagging second place on LM Arena. You know, that's the place where humans get to play judge and jury, comparing different AI models and picking their favorites. But, hold up, there's a twist! It turns out the Maverick version strutting its stuff on LM Arena isn't quite the same as the one you can download and play with as a developer.

Some eagle-eyed AI researchers on X (yeah, the platform formerly known as Twitter) spotted that Meta called the LM Arena version an "experimental chat version." And if you peek at the Llama website, there's a chart that spills the beans: the testing was done with "Llama 4 Maverick optimized for conversationality." Now, we've talked about this before, but LM Arena isn't exactly the gold standard for measuring AI performance. Still, most AI companies don't tweak their models just to score better on this test, or at least they don't admit to it.

The thing is, when you tweak a model to ace a benchmark but then release a different "vanilla" version to the public, it's tough for developers to figure out how well the model will actually perform in real-world scenarios. Plus, it's kinda misleading, right? Benchmarks, flawed as they are, should give us a clear picture of what a model can and can't do across different tasks.

Researchers on X have been quick to notice some big differences between the Maverick you can download and the one on LM Arena. The Arena version is apparently all about emojis and loves to give you long, drawn-out answers.

Okay Llama 4 is def a littled cooked lol, what is this yap city pic.twitter.com/y3GvhbVz65

— Nathan Lambert (@natolambert) April 6, 2025

for some reason, the Llama 4 model in Arena uses a lot more Emojis

on together . ai, it seems better: pic.twitter.com/f74ODX4zTt

— Tech Dev Notes (@techdevnotes) April 6, 2025

We've reached out to Meta and the folks at Chatbot Arena, who run LM Arena, to see what they have to say about all this. Stay tuned!

Related article

Meta's Zuckerberg Says Not All AI 'Superintelligence' Models Will Be Open-Sourced

Meta's Strategic Shift Toward Personal SuperintelligenceMeta CEO Mark Zuckerberg outlined an ambitious vision this week for "personal superintelligence" – AI systems that empower individuals to accomplish personal objectives - signaling potential cha

Meta's Zuckerberg Says Not All AI 'Superintelligence' Models Will Be Open-Sourced

Meta's Strategic Shift Toward Personal SuperintelligenceMeta CEO Mark Zuckerberg outlined an ambitious vision this week for "personal superintelligence" – AI systems that empower individuals to accomplish personal objectives - signaling potential cha

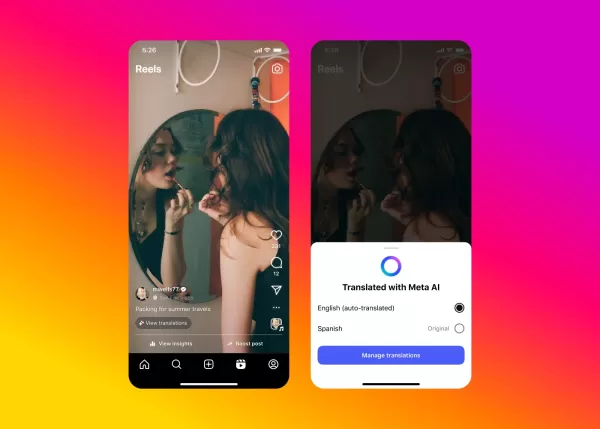

Meta's AI Tackles Video Dubbing for Instagram Content

Meta is expanding access to its groundbreaking AI-powered dubbing technology across Facebook and Instagram, introducing seamless Video translation capabilities that maintain your authentic voice and natural lip movements.Revolutionizing Cross-Cultura

Meta's AI Tackles Video Dubbing for Instagram Content

Meta is expanding access to its groundbreaking AI-powered dubbing technology across Facebook and Instagram, introducing seamless Video translation capabilities that maintain your authentic voice and natural lip movements.Revolutionizing Cross-Cultura

Meta AI App to Introduce Premium Tier and Ads

Meta's AI app may soon feature a paid subscription, mirroring offerings from competitors like OpenAI, Google, and Microsoft. During a Q1 2025 earnings call, Meta CEO Mark Zuckerberg outlined plans for

Comments (36)

ScottWalker

July 27, 2025 at 9:20:54 PM EDT

Meta's Maverick hitting second on LM Arena? Impressive, but I'm skeptical about those benchmarks. Feels like a hype train—wonder if it’s more flash than substance. 🤔 Anyone tested it in real-world tasks yet?

KennethMartin

April 21, 2025 at 6:14:21 AM EDT

Meta's Maverick AI model is impressive, snagging second place on LM Arena! But are the benchmarks really telling the whole story? It's cool to see AI models go head-to-head, but I'm not sure if it's all fair play. Makes you wonder, right? 🤔 Maybe we need a more transparent way to judge these models!

WalterThomas

April 20, 2025 at 10:55:14 PM EDT

Meta's new AI model, Maverick, has reached second place on LM Arena! It's impressive, but are the benchmarks really telling the whole story? It's fun to watch AI models compete with each other, but I don't know whether it's fair. Makes you think, doesn't it? 🤔 Maybe we need a more transparent way to judge these models!

JohnYoung

April 18, 2025 at 11:03:42 AM EDT

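It's amazing that Meta's new AI model, Maverick, took second place on LM Arena! But I wonder whether the benchmarks are really telling the whole story. Competition between AI models is fun, but I'm not sure it's fair. I think we need a more transparent evaluation method 🤔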

JohnHernández

April 17, 2025 at 12:58:48 PM EDT

Meta's Maverick AI model snagging second place on LM Arena is pretty cool, but the benchmarks might be a bit off! 🤔 It's fun to see these models go head-to-head, but I'm not sure if the results are totally fair. Worth keeping an eye on! 👀

MarkScott

April 17, 2025 at 1:54:17 AM EDT

Meta's Maverick AI model took second place on LM Arena, which is cool, but the benchmarks may be a bit inaccurate! 🤔 It's fun to watch these models compete, but I'm not sure the results are entirely fair. Worth keeping an eye on! 👀
