Meta's AI Model Benchmarks: Misleading?

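So, Meta dropped its new AI model, Maverick, over the weekend, and it is already making waves by taking second place on LM Arena. You know, that's the place where humans get to play judge and jury, comparing different AI models and picking their favorite. But wait, there's a twist! It turns out that the version of Maverick showing off on LM Arena is not quite the same as the one developers can download and use.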

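Some sharp-eyed AI researchers on X (yes, the platform formerly known as Twitter) noticed that Meta describes the LM Arena version as an "experimental chat version." And if you peek at the Llama website, a chart there gives the game away: it says the testing was done with "Llama 4 Maverick optimized for conversationality." We've talked about this before, but LM Arena is not exactly the gold standard for measuring AI performance. Most AI companies don't tune their models specifically to score better on this test, or at least they don't admit to it.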

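The problem is that when you tune a model to shine on a benchmark but release a different "vanilla" version to the public, developers have a hard time telling how well the model will actually perform in real-world scenarios. Plus, it's kind of misleading, right? Benchmarks, flawed as they are, should give a clear picture of a model's strengths and limitations across different tasks.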

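Researchers on X quickly noticed some big differences between the Maverick you can download and the one on LM Arena. The Arena version seems especially fond of emojis and prone to long-winded answers.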

"Okay, Llama 4 is def a little cooked lol, what is this yap city" pic.twitter.com/y3GvhbVz65

Nathan Lambert (@natolambert), April 6, 2025

"For some reason, the Llama 4 model in Arena uses a lot more emojis. On together.ai, it seems better:" pic.twitter.com/f74ODX4zTt

Tech Dev Notes (@techdevnotes), April 6, 2025

We've reached out to Meta and to the Chatbot Arena team, which runs LM Arena, to see what they have to say about this. Stay tuned!

相關文章

Meta 的扎克伯格表示並非所有 AI「超級智慧」模型都會開放原始碼

Meta 邁向個人超級智慧的策略轉移Meta 執行長 Mark Zuckerberg 本週概述了「個人超級智慧」(personal superintelligence)的遠大願景,也就是讓個人有能力完成個人目標的 AI 系統,這意味著該公司的 AI 部署策略可能會有所改變。開放原始碼的困境扎克伯格的聲明顯示,Meta 在追求超級智慧系統時,可能會重新考慮其開放先進 AI 模型的承諾:"我們相信超級

Meta 的扎克伯格表示並非所有 AI「超級智慧」模型都會開放原始碼

Meta 邁向個人超級智慧的策略轉移Meta 執行長 Mark Zuckerberg 本週概述了「個人超級智慧」(personal superintelligence)的遠大願景,也就是讓個人有能力完成個人目標的 AI 系統,這意味著該公司的 AI 部署策略可能會有所改變。開放原始碼的困境扎克伯格的聲明顯示,Meta 在追求超級智慧系統時,可能會重新考慮其開放先進 AI 模型的承諾:"我們相信超級

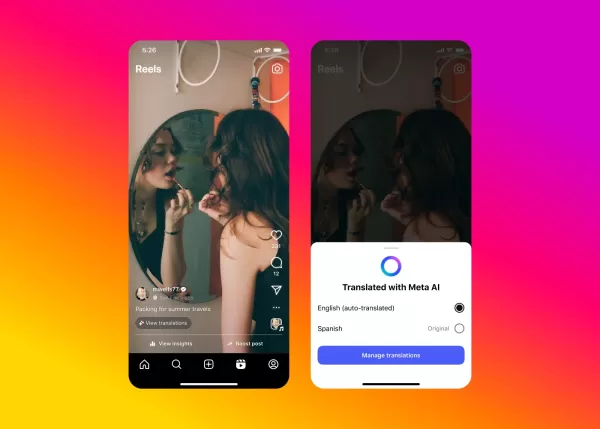

Meta 的 AI 攻克 Instagram 內容的視訊配音問題

Meta 將其突破性的 AI 配音技術擴展至 Facebook 和 Instagram,推出無縫視訊翻譯功能,以保持您真實的聲音和自然的嘴唇動作。革新跨文化內容Meta 的全新 AI 翻譯功能可自動在英文和西班牙文之間轉換 Reels,同時保留創作者的聲音特徵和唇語同步。這項創新是以去年 Meta Connect 活動中展示的技術為基礎,為內容創作人提供強大的工具,以吸引國際觀眾。如何運作此系統採

Meta 的 AI 攻克 Instagram 內容的視訊配音問題

Meta 將其突破性的 AI 配音技術擴展至 Facebook 和 Instagram,推出無縫視訊翻譯功能,以保持您真實的聲音和自然的嘴唇動作。革新跨文化內容Meta 的全新 AI 翻譯功能可自動在英文和西班牙文之間轉換 Reels,同時保留創作者的聲音特徵和唇語同步。這項創新是以去年 Meta Connect 活動中展示的技術為基礎,為內容創作人提供強大的工具,以吸引國際觀眾。如何運作此系統採

Meta AI應用程式將推出高級訂閱與廣告

Meta的AI應用程式即將推出付費訂閱服務,類似於OpenAI、Google和Microsoft等競爭對手的產品。在2025年第一季財報電話會議中,Meta首席執行官馬克·祖克柏格概述了高級服務的計劃,讓用戶能夠使用更強大的運算能力或Meta AI的額外功能。為了與ChatGPT競爭,Meta本週推出了一款獨立的AI應用程式,允許用戶直接與聊天機器人互動並進行圖像生成。該聊天機器人目前擁有近10億

Comments (36)

ScottWalker

2025-07-28 09:20:54

Meta's Maverick hitting second on LM Arena? Impressive, but I'm skeptical about those benchmarks. Feels like a hype train—wonder if it’s more flash than substance. 🤔 Anyone tested it in real-world tasks yet?

KennethMartin

2025-04-21 18:14:21

Meta's Maverick AI model is impressive, snagging second place on LM Arena! But are the benchmarks really telling the whole story? It's cool to see AI models go head-to-head, but I'm not sure if it's all fair play. Makes you wonder, right? 🤔 Maybe we need a more transparent way to judge these models!

WalterThomas

2025-04-21 10:55:14

Meta's new AI model, Maverick, reached second place on LM Arena! That's impressive, but are the benchmarks really telling the whole story? It's fun to watch AI models compete with each other, but I don't know whether it's fair or not. Makes you think, doesn't it? 🤔 Maybe we need a more transparent way to judge these models!

JohnYoung

2025-04-18 23:03:42

Meta's new AI model, Maverick, taking second place on LM Arena is amazing! But I wonder whether the benchmarks are really telling the whole story. The competition between AI models is fun, but I can't be sure it's fair. I think we need a more transparent evaluation method 🤔

JohnHernández

2025-04-18 00:58:48

Meta's Maverick AI model snagging second place on LM Arena is pretty cool, but the benchmarks might be a bit off! 🤔 It's fun to see these models go head-to-head, but I'm not sure if the results are totally fair. Worth keeping an eye on! 👀

MarkScott

2025-04-17 13:54:17

Meta's Maverick AI model took second place on LM Arena, which is cool, but the benchmarks may be a bit inaccurate! 🤔 It's fun to watch these models compete, but I'm not sure the results are entirely fair. Worth keeping an eye on! 👀
