Meta Unveils Llama 4 with Long Context Scout and Maverick Models, 2T Parameter Behemoth Coming Soon!

Back in January 2025, the AI world was rocked when a relatively unknown Chinese AI startup, DeepSeek, threw down the gauntlet with its groundbreaking open-source reasoning model, DeepSeek R1. This model not only outperformed models from the likes of Meta but did so at a fraction of the cost, rumored to be as little as a few million dollars. That's the kind of budget Meta might spend on just a couple of its AI team leaders! This news sent Meta into a bit of a frenzy, especially since its latest Llama model, version 3.3, released just the month before, was already looking a bit dated.

Fast forward to today, and Meta's founder and CEO, Mark Zuckerberg, has taken to Instagram to announce the launch of the new Llama 4 series. This series includes the 400-billion parameter Llama 4 Maverick and the 109-billion parameter Llama 4 Scout, both available for developers to download and start tinkering with right away on llama.com and Hugging Face. There's also a sneak peek at a colossal 2-trillion parameter model, Llama 4 Behemoth, still in training, with no release date in sight.

Multimodal and Long-Context Capabilities

One of the standout features of these new models is their multimodal nature. They're not just about text; they can handle video and imagery too. And they come with incredibly long context windows: 1 million tokens for Maverick and a whopping 10 million for Scout. To put that in perspective, that's roughly 1,500 and 15,000 pages of text, respectively, in one go! Imagine the possibilities for fields like medicine, science, or literature where you need to process and generate vast amounts of information.
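The page counts above are just a ratio, and it can be handy to redo the arithmetic for other window sizes. Here is a minimal sketch; the `TOKENS_PER_PAGE` constant is an assumption backed out of the article's own figures (1 million tokens for about 1,500 pages), not a property of any tokenizer, and `pages_for` is a hypothetical helper name.

```python
# Back-of-the-envelope conversion from context window size to pages of text.
# ASSUMPTION: tokens per page is derived from the article's own ratio
# (1,000,000 tokens ~ 1,500 pages); real documents will vary.
TOKENS_PER_PAGE = 1_000_000 / 1_500  # roughly 667 tokens per page

# Context window sizes as quoted in the article.
CONTEXT_WINDOWS = {
    "Llama 4 Maverick": 1_000_000,
    "Llama 4 Scout": 10_000_000,
}


def pages_for(context_tokens: int, tokens_per_page: float = TOKENS_PER_PAGE) -> int:
    """Approximate number of pages that fit in a context window."""
    return round(context_tokens / tokens_per_page)


if __name__ == "__main__":
    for model, window in CONTEXT_WINDOWS.items():
        print(f"{model}: ~{pages_for(window):,} pages")
```

Denser pages (tables, code, footnotes) would push the tokens-per-page figure up and the page count down, so treat the result as an order-of-magnitude guide.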

Mixture-of-Experts Architecture

All three Llama 4 models employ a "mixture-of-experts" (MoE) architecture, a technique that's been making waves and was popularized by companies like OpenAI and Mistral. Rather than running one monolithic network, an MoE model is made up of many smaller, specialized "expert" sub-networks plus a router that decides which of them should process each token. Maverick, for example, is a mix of 128 routed experts (Scout and Behemoth use 16 each), and only the necessary expert and a shared one handle each token, which makes the models more cost-effective and faster to run. Meta boasts that Llama 4 Maverick can be run on a single Nvidia H100 DGX host, making deployment a breeze.
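To make the "one routed expert plus a shared one per token" idea concrete, here is a toy sketch of top-1 routing in plain Python. It is not Meta's implementation: the experts are stand-in scaling functions rather than feed-forward networks, the softmax gate is an assumption, and the names `moe_layer`, `make_expert`, and `router_weights` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Toy expert that scales every feature; real experts are small networks."""
    return lambda token: [scale * value for value in token]


def moe_layer(token, router_weights, routed_experts, shared_expert):
    """Run the shared expert plus the single highest-scoring routed expert."""
    # One router score per expert: dot product of the token with that expert's row.
    scores = [sum(w * v for w, v in zip(row, token)) for row in router_weights]
    probs = softmax(scores)
    chosen = max(range(len(probs)), key=probs.__getitem__)

    # Only these two experts do any work for this token.
    routed_out = routed_experts[chosen](token)
    shared_out = shared_expert(token)

    # ASSUMPTION: combine as shared output plus gate-weighted routed output.
    output = [s + probs[chosen] * r for s, r in zip(shared_out, routed_out)]
    return output, chosen


if __name__ == "__main__":
    experts = [make_expert(1.0), make_expert(2.0), make_expert(3.0)]
    router = [[0.0, 0.0], [5.0, 0.0], [1.0, 0.0]]
    out, idx = moe_layer([1.0, 0.0], router, experts, make_expert(1.0))
    print(f"routed to expert {idx}, output {out}")
```

The cost saving comes from the fact that the other experts are never evaluated for this token, so compute per token tracks the active parameters rather than the total parameter count.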

Cost-Effective and Accessible

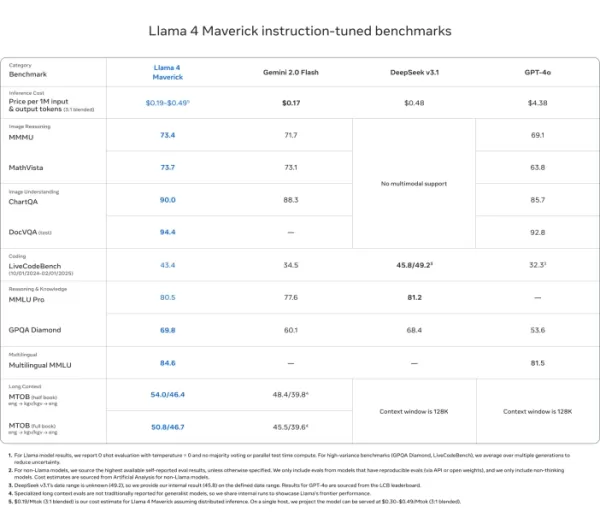

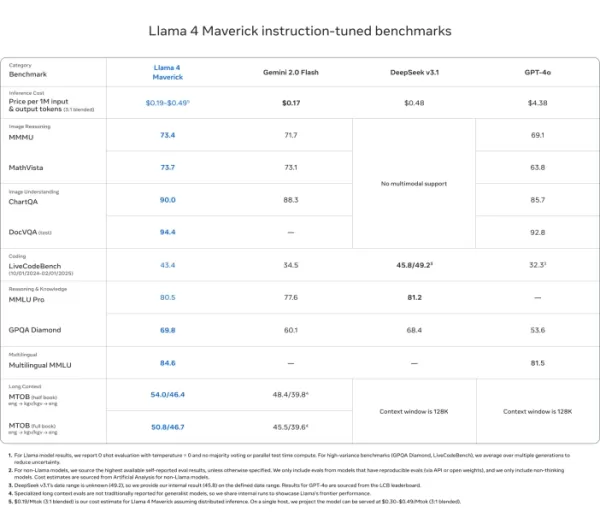

Meta is all about making these models accessible. Both Scout and Maverick are available for self-hosting, and the company has even shared some enticing cost estimates. For instance, it puts the inference cost for Llama 4 Maverick at between $0.19 and $0.49 per million tokens, which is a steal compared to proprietary models like GPT-4o. And if you're interested in using these models via a cloud provider, Groq has already stepped up with competitive pricing.
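If you want to turn that per-million-token range into a budget line, the arithmetic is simple. The sketch below uses only the $0.19 to $0.49 range quoted above; the monthly token volume is a made-up example, and `inference_cost_range` is a hypothetical helper.

```python
# Price range per million tokens quoted in the article for Llama 4 Maverick (USD).
MAVERICK_COST_PER_M_TOKENS = (0.19, 0.49)


def inference_cost_range(total_tokens, price_range=MAVERICK_COST_PER_M_TOKENS):
    """Return the (low, high) cost in USD for processing total_tokens tokens."""
    low_price, high_price = price_range
    millions = total_tokens / 1_000_000
    return low_price * millions, high_price * millions


if __name__ == "__main__":
    # ASSUMPTION: 250 million tokens per month is an illustrative workload only.
    monthly_tokens = 250_000_000
    low, high = inference_cost_range(monthly_tokens)
    print(f"Estimated monthly cost: ${low:,.2f} to ${high:,.2f}")
```

Actual bills depend on the hosting setup and on the mix of input and output tokens, so this is a rough planning figure rather than a quote.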

Enhanced Reasoning and MetaP

These models are built with reasoning, coding, and problem-solving in mind. Meta used some clever techniques during training to boost these capabilities, like removing easy prompts and using continuous reinforcement learning with increasingly difficult prompts. The company has also introduced MetaP, a new technique that allows hyperparameters to be set on one model and then applied to others, saving time and money. It's a game-changer, especially for training monsters like Behemoth, which uses 32K GPUs and processes over 30 trillion tokens.
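The "drop the easy prompts, then keep raising the bar" idea can be sketched in a few lines. This is an illustration only, not Meta's pipeline: the pass rates, thresholds, and the `curriculum_rounds` helper are all hypothetical, and a real setup would re-measure pass rates with the updated model after every round instead of holding them fixed.

```python
def curriculum_rounds(prompts, pass_rate, thresholds):
    """Yield (threshold, remaining_prompts) as easy prompts are filtered out.

    pass_rate maps each prompt to the fraction of attempts the current model
    gets right; prompts above the threshold count as "easy" and are dropped.
    """
    remaining = list(prompts)
    for threshold in thresholds:
        remaining = [p for p in remaining if pass_rate[p] <= threshold]
        yield threshold, list(remaining)


if __name__ == "__main__":
    # ASSUMPTION: made-up prompts and pass rates, purely for illustration.
    pass_rate = {
        "add two numbers": 0.99,
        "reverse a linked list": 0.85,
        "prove a simple inequality": 0.55,
        "optimize a dynamic program": 0.20,
    }
    # Each round tolerates a lower pass rate, so the prompt set gets harder.
    for threshold, kept in curriculum_rounds(pass_rate, pass_rate, [0.9, 0.6, 0.3]):
        print(f"keep pass rate <= {threshold}: {kept}")
```

The point of the filter is that training effort goes to prompts the model still fails reasonably often, which is where the learning signal is.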

Performance and Comparisons

So, how do these models stack up? Zuckerberg's been clear about his vision for open-source AI leading the charge, and Llama 4 is a big step in that direction. While they might not set new performance records across the board, they're certainly near the top of their class. For instance, Llama 4 Behemoth outperforms some heavy hitters on certain benchmarks, though it's still playing catch-up with DeepSeek R1 and OpenAI's o1 series in others.

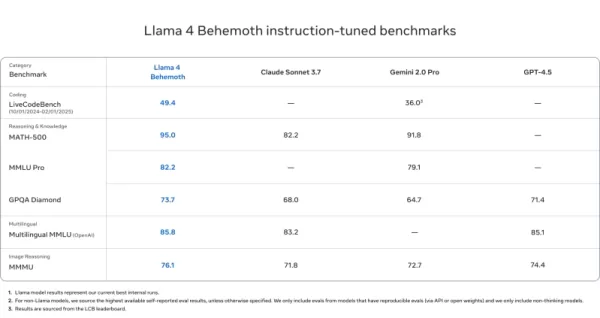

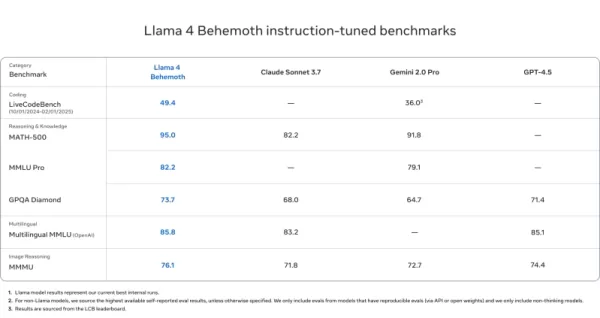

Llama 4 Behemoth

- Outperforms GPT-4.5, Gemini 2.0 Pro, and Claude Sonnet 3.7 on MATH-500 (95.0), GPQA Diamond (73.7), and MMLU Pro (82.2)

Llama 4 Maverick

- Beats GPT-4o and Gemini 2.0 Flash on most multimodal reasoning benchmarks like ChartQA, DocVQA, MathVista, and MMMU

- Competitive with DeepSeek v3.1 while using less than half the active parameters

- Benchmark scores: ChartQA (90.0), DocVQA (94.4), MMLU Pro (80.5)

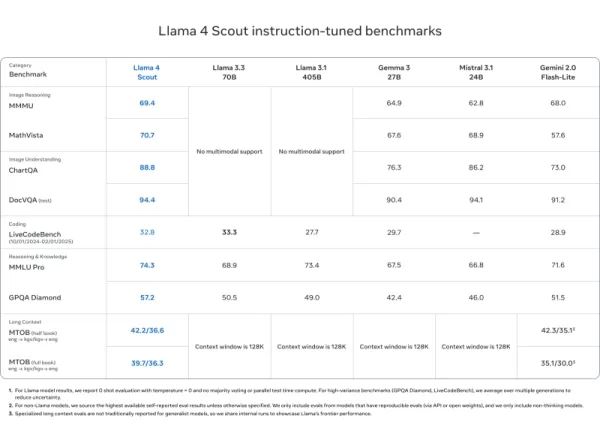

Llama 4 Scout

- Matches or outperforms models like Mistral 3.1, Gemini 2.0 Flash-Lite, and Gemma 3 on DocVQA (94.4), MMLU Pro (74.3), and MathVista (70.7)

- Unmatched 10M token context length—ideal for long documents and codebases

Comparing with DeepSeek R1

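When it comes to the big leagues, Llama 4 Behemoth holds its own but doesn't quite dethrone DeepSeek R1 or OpenAI's o1 series. It trails both on MATH-500 and on the MMLU row, although the 82.2 shown for Behemoth there is the MMLU Pro score listed above, so that row is not a like-for-like comparison. On GPQA Diamond it edges out DeepSeek R1 but sits behind o1. Still, it's clear that Llama 4 is a strong contender in the reasoning space.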

| Benchmark | Llama 4 Behemoth | DeepSeek R1 | OpenAI o1-1217 |
|---|---|---|---|
| MATH-500 | 95.0 | 97.3 | 96.4 |
| GPQA Diamond | 73.7 | 71.5 | 75.7 |
| MMLU | 82.2 | 90.8 | 91.8 |

Safety and Political Neutrality

Meta hasn't forgotten about safety either. The company has introduced tools like Llama Guard, Prompt Guard, and CyberSecEval to keep things on the up-and-up. It is also making a point of reducing political bias, aiming for a more balanced approach, especially in the wake of Zuckerberg's noted support for Republican politics following the 2024 election.

The Future with Llama 4

With Llama 4, Meta is pushing the boundaries of efficiency, openness, and performance in AI. Whether you're looking to build enterprise-level AI assistants or dive deep into AI research, Llama 4 offers powerful, flexible options that prioritize reasoning. It's clear that Meta is committed to making AI more accessible and impactful for everyone.

Comments (26)

OwenLewis

August 24, 2025 at 9:01:19 AM EDT

Llama 4 sounds like a beast! That 10M token context window is wild—imagine analyzing entire books in one go. But can Meta keep up with DeepSeek’s efficiency? Excited for Behemoth, though! 🚀

RogerSanchez

April 24, 2025 at 3:53:44 PM EDT

Llama 4 is really impressive! The long-context Scout and Maverick models are amazing. I'm looking forward to the 2T-parameter monster. Setting it up is a bit complicated, though. Still, the future of AI looks bright! 🚀

WillieHernández

April 23, 2025 at 8:21:23 PM EDT

Llama 4 is amazing! The long-context Scout and Maverick models are astonishing. I can't wait for the 2T-parameter beast to come out. Setup is a little tough, though. Still, with this the future of AI looks bright! 🚀

GregoryWilson

April 22, 2025 at 1:23:39 PM EDT

Meta's Llama 4 is the best! Being able to handle long contexts smoothly is really handy. The Maverick model is interesting too, but I'm looking forward to the 2T-parameter model! 🤩✨

BrianThomas

April 22, 2025 at 2:27:50 AM EDT

Meta's Llama 4 is incredible! The long-context feature is a lifesaver for my research. The Maverick models are cool too, but I'm eager for the 2T-parameter model. I can't wait to see what it can do! 🤯🚀

JohnGarcia

April 21, 2025 at 11:11:00 PM EDT

I just found out about Meta's Llama 4 and it's wild! 2T parameters! I hope it's not just hype, but if it lives up to expectations, it's going to be incredible. Has anyone tried it yet? I want to know more! 😎

Back in January 2025, the AI world was rocked when a relatively unknown Chinese AI startup, DeepSeek, threw down the gauntlet with their groundbreaking open-source language reasoning model, DeepSeek R1. This model not only outperformed the likes of Meta but did so at a fraction of the cost—rumored to be as little as a few million dollars. That's the kind of budget Meta might spend on just a couple of its AI team leaders! This news sent Meta into a bit of a frenzy, especially since their latest Llama model, version 3.3, released just the month before, was already looking a bit dated.

Fast forward to today, and Meta's founder and CEO, Mark Zuckerberg, has taken to Instagram to announce the launch of the new Llama 4 series. This series includes the 400-billion parameter Llama 4 Maverick and the 109-billion parameter Llama 4 Scout, both available for developers to download and start tinkering with right away on llama.com and Hugging Face. There's also a sneak peek at a colossal 2-trillion parameter model, Llama 4 Behemoth, still in training, with no release date in sight.

Multimodal and Long-Context Capabilities

One of the standout features of these new models is their multimodal nature. They're not just about text; they can handle video and imagery too. And they come with incredibly long context windows—1 million tokens for Maverick and a whopping 10 million for Scout. To put that in perspective, that's like handling up to 1,500 and 15,000 pages of text in one go! Imagine the possibilities for fields like medicine, science, or literature where you need to process and generate vast amounts of information.

Mixture-of-Experts Architecture

All three Llama 4 models employ the "mixture-of-experts (MoE)" architecture, a technique that's been making waves, popularized by companies like OpenAI and Mistral. This approach combines multiple smaller, specialized models into one larger, more efficient model. Each Llama 4 model is a mix of 128 different experts, which means only the necessary expert and a shared one handle each token, making the models more cost-effective and faster to run. Meta boasts that Llama 4 Maverick can be run on a single Nvidia H100 DGX host, making deployment a breeze.

Cost-Effective and Accessible

Meta is all about making these models accessible. Both Scout and Maverick are available for self-hosting, and they've even shared some enticing cost estimates. For instance, the inference cost for Llama 4 Maverick is between $0.19 and $0.49 per million tokens, which is a steal compared to other proprietary models like GPT-4o. And if you're interested in using these models via a cloud provider, Groq has already stepped up with competitive pricing.

Enhanced Reasoning and MetaP

These models are built with reasoning, coding, and problem-solving in mind. Meta's used some clever techniques during training to boost these capabilities, like removing easy prompts and using continuous reinforcement learning with increasingly difficult prompts. They've also introduced MetaP, a new technique that allows for setting hyperparameters on one model and applying them to others, saving time and money. It's a game-changer, especially for training monsters like Behemoth, which uses 32K GPUs and processes over 30 trillion tokens.

Performance and Comparisons

So, how do these models stack up? Zuckerberg's been clear about his vision for open-source AI leading the charge, and Llama 4 is a big step in that direction. While they might not set new performance records across the board, they're certainly near the top of their class. For instance, Llama 4 Behemoth outperforms some heavy hitters on certain benchmarks, though it's still playing catch-up with DeepSeek R1 and OpenAI's o1 series in others.

Llama 4 Behemoth

- Outperforms GPT-4.5, Gemini 2.0 Pro, and Claude Sonnet 3.7 on MATH-500 (95.0), GPQA Diamond (73.7), and MMLU Pro (82.2)

Llama 4 Maverick

- Beats GPT-4o and Gemini 2.0 Flash on most multimodal reasoning benchmarks like ChartQA, DocVQA, MathVista, and MMMU

- Competitive with DeepSeek v3.1 while using less than half the active parameters

- Benchmark scores: ChartQA (90.0), DocVQA (94.4), MMLU Pro (80.5)

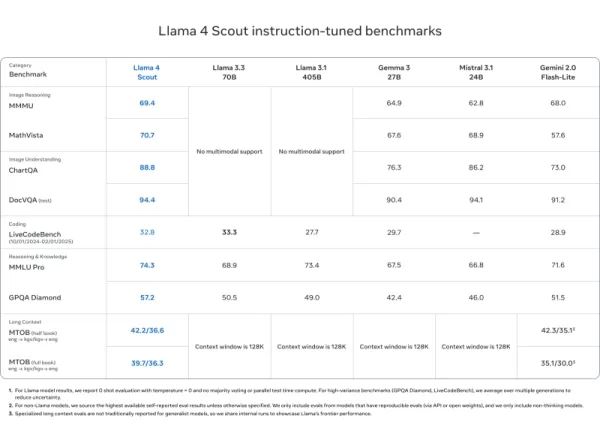

Llama 4 Scout

- Matches or outperforms models like Mistral 3.1, Gemini 2.0 Flash-Lite, and Gemma 3 on DocVQA (94.4), MMLU Pro (74.3), and MathVista (70.7)

- Unmatched 10M token context length—ideal for long documents and codebases

Comparing with DeepSeek R1

When it comes to the big leagues, Llama 4 Behemoth holds its own but doesn't quite dethrone DeepSeek R1 or OpenAI's o1 series. It's slightly behind on MATH-500 and MMLU but ahead on GPQA Diamond. Still, it's clear that Llama 4 is a strong contender in the reasoning space.

| Benchmark | Llama 4 Behemoth | DeepSeek R1 | OpenAI o1-1217 |

|---|---|---|---|

| MATH-500 | 95.0 | 97.3 | 96.4 |

| GPQA Diamond | 73.7 | 71.5 | 75.7 |

| MMLU | 82.2 | 90.8 | 91.8 |

Safety and Political Neutrality

Meta hasn't forgotten about safety either. They've introduced tools like Llama Guard, Prompt Guard, and CyberSecEval to keep things on the up-and-up. And they're making a point about reducing political bias, aiming for a more balanced approach, especially after Zuckerberg's noted support for Republican politics post-2024 election.

The Future with Llama 4

With Llama 4, Meta is pushing the boundaries of efficiency, openness, and performance in AI. Whether you're looking to build enterprise-level AI assistants or dive deep into AI research, Llama 4 offers powerful, flexible options that prioritize reasoning. It's clear that Meta is committed to making AI more accessible and impactful for everyone.

Nonprofit leverages AI agents to boost charity fundraising efforts

While major tech corporations promote AI "agents" as productivity boosters for businesses, one nonprofit organization is demonstrating their potential for social good. Sage Future, a philanthropic research group backed by Open Philanthropy, recently

Nonprofit leverages AI agents to boost charity fundraising efforts

While major tech corporations promote AI "agents" as productivity boosters for businesses, one nonprofit organization is demonstrating their potential for social good. Sage Future, a philanthropic research group backed by Open Philanthropy, recently

Top AI Labs Warn Humanity Is Losing Grasp on Understanding AI Systems

In an unprecedented show of unity, researchers from OpenAI, Google DeepMind, Anthropic and Meta have set aside competitive differences to issue a collective warning about responsible AI development. Over 40 leading scientists from these typically riv

Top AI Labs Warn Humanity Is Losing Grasp on Understanding AI Systems

In an unprecedented show of unity, researchers from OpenAI, Google DeepMind, Anthropic and Meta have set aside competitive differences to issue a collective warning about responsible AI development. Over 40 leading scientists from these typically riv

Google Cloud Powers Breakthroughs in Scientific Research and Discovery

The digital revolution is transforming scientific methodologies through unprecedented computational capabilities. Cutting-edge technologies now augment both theoretical frameworks and laboratory experiments, propelling breakthroughs across discipline

Google Cloud Powers Breakthroughs in Scientific Research and Discovery

The digital revolution is transforming scientific methodologies through unprecedented computational capabilities. Cutting-edge technologies now augment both theoretical frameworks and laboratory experiments, propelling breakthroughs across discipline

August 24, 2025 at 9:01:19 AM EDT

August 24, 2025 at 9:01:19 AM EDT

Llama 4 sounds like a beast! That 10M token context window is wild—imagine analyzing entire books in one go. But can Meta keep up with DeepSeek’s efficiency? Excited for Behemoth, though! 🚀

0

0

April 24, 2025 at 3:53:44 PM EDT

April 24, 2025 at 3:53:44 PM EDT

Llama 4 정말 대단해요! 긴 문맥 스카우트와 마버릭 모델은 놀랍네요. 2T 파라미터의 괴물이 나올 걸 기대하고 있어요. 다만, 설정하는 게 좀 복잡해요. 그래도, AI의 미래가 밝아 보이네요! 🚀

0

0

April 23, 2025 at 8:21:23 PM EDT

April 23, 2025 at 8:21:23 PM EDT

Llama 4はすごい!長いコンテキストのスカウトやマーベリックモデルは驚異的。2Tパラメータのビーストが出るのを待ちきれない。ただ、設定が少し大変かな。でも、これでAIの未来は明るいね!🚀

0

0

April 22, 2025 at 1:23:39 PM EDT

April 22, 2025 at 1:23:39 PM EDT

MetaのLlama 4は最高ですね!長いコンテキストをスムーズに処理できるのが本当に便利。マーベリックモデルも面白いけど、2Tパラメータのモデルが来るのが楽しみです!🤩✨

0

0

April 22, 2025 at 2:27:50 AM EDT

April 22, 2025 at 2:27:50 AM EDT

O Llama 4 da Meta é incrível! A função de contexto longo é uma mão na roda para minhas pesquisas. Os modelos Maverick também são legais, mas estou ansioso pelo modelo de 2T parâmetros. Mal posso esperar para ver o que ele pode fazer! 🤯🚀

0

0

April 21, 2025 at 11:11:00 PM EDT

April 21, 2025 at 11:11:00 PM EDT

Acabo de enterarme de Llama 4 de Meta y ¡es una locura! ¡2T parámetros! Espero que no sea solo hype, pero si cumple con las expectativas, va a ser increíble. ¿Alguien ya lo ha probado? ¡Quiero saber más! 😎

0

0