Boosting AI Performance with Concise Reasoning in Large Language Models

Large Language Models (LLMs) have revolutionized Artificial Intelligence (AI), producing human-like text and tackling complex challenges across industries. Until recently, the prevailing assumption was that longer reasoning chains improve accuracy: the more steps a model takes, the more reliable its answer.

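A 2025 study by Meta’s FAIR team and The Hebrew University of Jerusalem challenges this notion. It finds that, for a given question, the shortest sampled reasoning chain can be up to 34.5% more accurate than the longest one. It also finds that an inference method built on this observation can match standard majority voting while using up to 40% fewer thinking tokens. Concise reasoning also accelerates processing, with implications for how LLMs are trained, deployed, and scaled.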

Why Concise Reasoning Enhances AI Efficiency

Traditionally, longer reasoning chains were thought to improve AI outcomes by giving the model more steps to work through a problem. The logic was straightforward: more steps meant deeper analysis and therefore higher accuracy. Consequently, AI systems were designed to favor extended reasoning.

Yet, this approach has drawbacks. Longer chains demand significant computational power, slowing processing and raising costs, particularly in real-time applications requiring swift responses. Additionally, complex chains heighten error risks, reducing efficiency and scalability in industries needing both speed and precision.

The study highlights these flaws, showing that shorter reasoning chains can improve accuracy while lowering computational demands. This enables faster task processing without sacrificing reliability.
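To make this kind of comparison concrete, here is a minimal Python sketch of one way to measure it. It samples several reasoning chains per question, then compares how often the shortest chain is correct against how often the longest one is. The `Chain` record, the function name, and the toy data are all hypothetical and invented for illustration. They are not the study's code or results.

```python
from dataclasses import dataclass


@dataclass
class Chain:
    """One sampled reasoning chain (hypothetical record type)."""
    num_tokens: int  # length of the reasoning chain
    correct: bool    # whether its final answer matched the reference


def shortest_vs_longest_accuracy(samples: dict[str, list[Chain]]) -> tuple[float, float]:
    """Return (accuracy of shortest chains, accuracy of longest chains).

    `samples` maps each question ID to the list of chains sampled for it.
    """
    short_hits = long_hits = 0
    for chains in samples.values():
        shortest = min(chains, key=lambda c: c.num_tokens)
        longest = max(chains, key=lambda c: c.num_tokens)
        short_hits += shortest.correct  # bools count as 0 or 1
        long_hits += longest.correct
    n = len(samples)
    return short_hits / n, long_hits / n


# Toy data invented for illustration only.
toy = {
    "q1": [Chain(1200, True), Chain(5400, False), Chain(2600, True)],
    "q2": [Chain(800, True), Chain(3100, True)],
    "q3": [Chain(4000, False), Chain(9000, False), Chain(1500, True)],
}
print(shortest_vs_longest_accuracy(toy))  # (1.0, 0.3333333333333333)
```

On real data, the same loop would run over many questions with many samples each. The gap between the two numbers is what the study's finding describes.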

These insights shift the focus of AI development from maximizing the number of reasoning steps to making reasoning more efficient. Shorter chains improve efficiency, deliver reliable results, and reduce processing time.

Optimizing Reasoning with the short-m@k Framework

The study introduces the short-m@k inference framework, designed to streamline multi-step reasoning in LLMs. Traditional approaches either generate a single chain sequentially or take a majority vote only after every sampled chain has finished. Short-m@k instead combines parallel generation with early termination to boost efficiency and cut costs.

In the short-m@k approach, k reasoning chains run in parallel, and generation stops as soon as the first m chains complete. The final answer is chosen by majority vote among these m early finishers, avoiding unnecessary computation while preserving accuracy.
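The selection rule described above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' implementation. It assumes every chain decodes at the same speed, so the first m chains to finish are simply the m shortest. It also breaks voting ties by preferring the answer from the earliest-finishing chain, which is an assumption made here. The function name `short_m_at_k` and the sample data are hypothetical.

```python
from collections import Counter


def short_m_at_k(chains: list[tuple[int, str]], m: int) -> str:
    """Pick a final answer the short-m@k way (illustrative sketch).

    `chains` holds (num_tokens, answer) for k chains launched together.
    Assuming equal decoding speed, the first m to finish are the m shortest;
    the remaining chains are halted and their answers ignored.
    """
    if not 1 <= m <= len(chains):
        raise ValueError("m must satisfy 1 <= m <= k")
    finished = sorted(chains, key=lambda c: c[0])[:m]  # first m to complete
    votes = Counter(answer for _, answer in finished)
    top = max(votes.values())
    # Tie-break (an assumption of this sketch): prefer the answer whose
    # chain finished earliest.
    return next(answer for _, answer in finished if votes[answer] == top)


k_chains = [(3100, "42"), (900, "17"), (1500, "42"), (7200, "17"), (1200, "42")]
print(short_m_at_k(k_chains, m=1))  # 17  (the 900-token chain finishes first)
print(short_m_at_k(k_chains, m=3))  # 42  (first three: "17", "42", "42")
```

With m=1 the vote degenerates to "take the first chain that finishes". Larger m trades a little latency for a sturdier vote.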

The framework offers two variants:

short-1@k: Returns the answer of the first chain to complete out of k parallel attempts. Suited to low-resource, latency-sensitive settings, it delivers high accuracy at minimal computational cost.

short-3@k: Takes a majority vote over the first three completed chains. It outperforms standard majority voting in both accuracy and speed, making it suited to high-performance, large-scale environments.
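Simple token accounting shows where the savings come from. In the sketch below, which uses a hypothetical helper and toy lengths, standard majority voting lets all k chains run to completion. Short-m@k, by contrast, halts generation when the m-th chain finishes, so every chain still running at that point has produced exactly as many tokens as that m-th chain. Wall time is approximated by the length of the longest chain that had to be generated. This again assumes all chains decode in parallel at the same speed and ignores batching and hardware effects.

```python
from typing import Optional


def thinking_cost(lengths: list[int], m: Optional[int] = None) -> tuple[int, int]:
    """Return (total thinking tokens, wall time in tokens) for one question.

    m=None models standard majority voting: all k chains run to completion.
    Otherwise models short-m@k: generation halts when the m-th chain finishes,
    so each chain still running has produced exactly that many tokens.
    Assumes all chains decode in parallel at the same speed.
    """
    ordered = sorted(lengths)
    if m is None:
        return sum(ordered), ordered[-1]
    if not 1 <= m <= len(ordered):
        raise ValueError("m must satisfy 1 <= m <= k")
    cutoff = ordered[m - 1]               # length of the m-th chain to finish
    finished = sum(ordered[:m])           # tokens spent by completed chains
    halted = (len(ordered) - m) * cutoff  # tokens spent by chains cut off early
    return finished + halted, cutoff


lengths = [3100, 900, 1500, 7200, 1200]  # toy chain lengths, k = 5
print(thinking_cost(lengths))       # (13900, 7200)  majority vote over all 5
print(thinking_cost(lengths, m=1))  # (4500, 900)    short-1@k
print(thinking_cost(lengths, m=3))  # (6600, 1500)   short-3@k
```

These toy numbers only illustrate the mechanism. Real savings depend on how chain lengths are distributed for a given model and task.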

Beyond inference, the study also examines training: fine-tuning on shorter reasoning chains improved the model's performance. Because shorter sequences contain fewer tokens, such training is also cheaper, so concise reasoning can improve both accuracy and resource efficiency across training and deployment.
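One plausible way to assemble concise training data is to sample several reasoning traces per question and keep the shortest correct one. The sketch below assumes that recipe; it is an illustration, not necessarily how the study built its fine-tuning sets. It uses a hypothetical function name and measures length in whitespace-separated words as a stand-in for model tokens.

```python
def shortest_correct_chains(samples: dict[str, list[tuple[str, bool]]]) -> dict[str, str]:
    """Keep the shortest correct reasoning trace per question (hypothetical helper).

    `samples` maps a question to (trace_text, is_correct) pairs. Length is
    counted in whitespace-separated words as a stand-in for tokens.
    Questions with no correct trace are dropped.
    """
    dataset = {}
    for question, traces in samples.items():
        correct = [text for text, ok in traces if ok]
        if correct:
            dataset[question] = min(correct, key=lambda t: len(t.split()))
    return dataset


samples = {
    "What is 2 + 2?": [
        ("2 plus 2 is 4. Answer: 4", True),
        ("Let me reason slowly. Start at 2, count up two: 3, then 4. Answer: 4", True),
        ("Answer: 5", False),
    ],
    "What is 3 * 3?": [("Answer: 6", False)],
}
print(shortest_correct_chains(samples))
# {'What is 2 + 2?': '2 plus 2 is 4. Answer: 4'}
```

Filtering for correctness before picking the shortest trace avoids rewarding answers that are brief but wrong.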

Impact on AI Development and Industry Use

Shorter reasoning chains significantly influence AI model development, deployment, and sustainability.

In training, concise chains reduce computational complexity, lowering costs and accelerating updates without requiring additional infrastructure.

For deployment, especially in time-sensitive applications like chatbots or trading platforms, shorter chains enhance processing speed, enabling systems to handle more requests efficiently and scale effectively under high demand.

Energy efficiency is another advantage. Fewer computations during training and inference reduce power consumption, cutting costs and supporting environmental goals as data centers face growing energy demands.

Overall, these efficiencies accelerate AI development, enabling faster delivery of AI solutions to market and helping organizations stay competitive in a dynamic tech landscape.

Addressing Challenges in Adopting Concise Reasoning

While shorter reasoning chains offer clear benefits, implementation poses challenges.

Traditional AI systems, built for longer reasoning, may require retooling of model architectures, training methods, and optimization strategies, which demands technical expertise and organizational adaptability.

Data quality and structure are critical. Models trained on datasets for extended reasoning may falter with shorter paths. Curating datasets for concise, targeted reasoning is essential to maintain accuracy.

Scalability is another hurdle. Although concise reasoning has proven effective in controlled settings, large-scale applications such as e-commerce or customer support demand robust infrastructure to handle high request volumes without compromising performance.

Strategies to address these include:

- Implement the short-m@k framework: Use parallel processing and early termination to balance speed and accuracy in real-time applications.

- Focus on concise reasoning in training: Use methods emphasizing shorter chains to optimize resources and speed.

- Track reasoning metrics: Monitor chain length and model performance in real time to maintain efficiency and accuracy.
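A rolling window over recent requests is often enough to spot drift when tracking reasoning metrics. The `ReasoningMonitor` class below is hypothetical, as are its default window size and token budget. In practice, the `correct` signal would come from whatever feedback a deployment has, such as spot checks or automated graders.

```python
from collections import deque
from statistics import mean


class ReasoningMonitor:
    """Rolling window over recent requests (hypothetical helper).

    Tracks reasoning-chain length and a correctness signal so that drift
    toward longer, costlier chains or lower accuracy shows up quickly.
    """

    def __init__(self, window: int = 1000, max_mean_tokens: float = 4000.0):
        self.lengths: deque[int] = deque(maxlen=window)  # oldest entries drop off
        self.outcomes: deque[bool] = deque(maxlen=window)
        self.max_mean_tokens = max_mean_tokens

    def record(self, num_tokens: int, correct: bool) -> None:
        self.lengths.append(num_tokens)
        self.outcomes.append(correct)

    def summary(self) -> dict[str, float]:
        if not self.lengths:
            return {"mean_tokens": 0.0, "accuracy": 0.0}
        return {
            "mean_tokens": float(mean(self.lengths)),
            "accuracy": sum(self.outcomes) / len(self.outcomes),
        }

    def too_verbose(self) -> bool:
        """True when average chain length in the window exceeds the budget."""
        return bool(self.lengths) and mean(self.lengths) > self.max_mean_tokens


monitor = ReasoningMonitor(window=3, max_mean_tokens=2000)
for tokens, ok in [(1500, True), (2500, False), (3000, True), (1000, True)]:
    monitor.record(tokens, ok)
print(monitor.summary())      # window now holds only the last 3 requests
print(monitor.too_verbose())  # True: mean of 2500, 3000, 1000 exceeds 2000
```

A fixed-size `deque` keeps memory constant and lets old traffic age out automatically. This makes the averages reflect current behavior rather than the whole history.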

These strategies enable developers to adopt shorter reasoning chains, creating faster, more accurate, and more scalable AI systems that meet operational and cost-efficiency goals.

The Bottom Line

Research on concise reasoning chains is reshaping AI development. Shorter chains improve speed, accuracy, and cost-efficiency, qualities that are critical for industries that prioritize performance.

By adopting concise reasoning, AI systems can improve without additional resources, enabling efficient development and deployment. This approach positions AI to meet diverse needs, keeping developers and companies competitive in a rapidly evolving tech landscape.

Related article

Magi-1 Unveiled: Pioneering AI Video Generation Technology

Explore our detailed analysis of Magi-1, an innovative AI platform revolutionizing autoregressive video creation. This article delves into its unique features, pricing structure, and performance metri

Magi-1 Unveiled: Pioneering AI Video Generation Technology

Explore our detailed analysis of Magi-1, an innovative AI platform revolutionizing autoregressive video creation. This article delves into its unique features, pricing structure, and performance metri

AI-Powered Graphic Design: Top Tools and Techniques for 2025

In 2025, artificial intelligence (AI) is reshaping industries, with graphic design at the forefront of this transformation. AI tools are empowering designers by boosting creativity, optimizing workflo

AI-Powered Graphic Design: Top Tools and Techniques for 2025

In 2025, artificial intelligence (AI) is reshaping industries, with graphic design at the forefront of this transformation. AI tools are empowering designers by boosting creativity, optimizing workflo

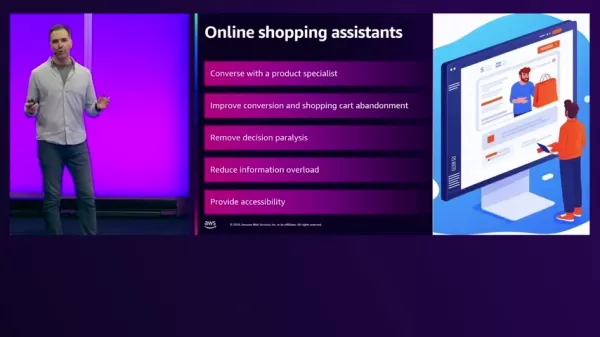

AI-Driven Shopping Assistants Transform E-Commerce on AWS

In today's fast-paced e-commerce environment, retailers strive to elevate customer experiences and boost sales. Generative AI delivers innovative solutions by powering intelligent shopping assistants

Comments (0)

0/200

AI-Driven Shopping Assistants Transform E-Commerce on AWS

In today's fast-paced e-commerce environment, retailers strive to elevate customer experiences and boost sales. Generative AI delivers innovative solutions by powering intelligent shopping assistants

Comments (0)

0/200
