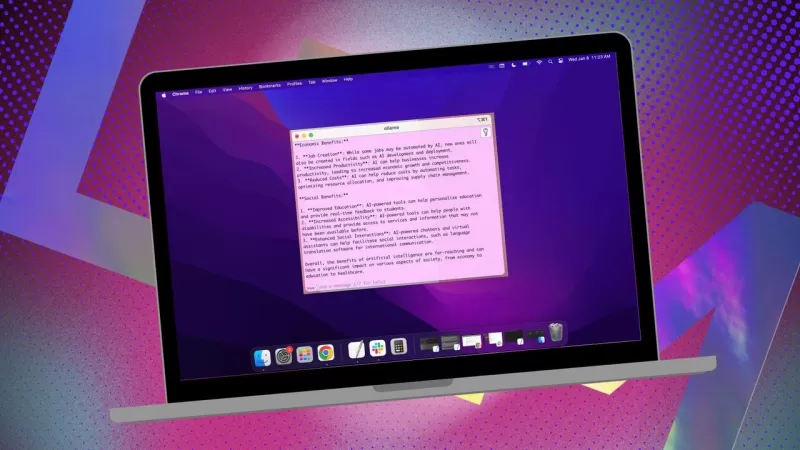

App Simplifies Using Ollama Local AI on macOS Devices

If you're looking to keep your data private and avoid feeding into third-party profiles or training sets, using a locally installed AI for your research is a smart move. I've been using the open-source Ollama on my Linux system, enhanced with a handy browser extension for a smoother experience. But when I'm on my Mac, I use a straightforward, free app called Msty.


Msty is versatile, allowing you to tap into both locally installed and online AI models. Personally, I stick with the local option for maximum privacy. What sets Msty apart from other Ollama tools is its simplicity—no need for containers, terminals, or additional browser tabs.

The app is packed with features that make it a breeze to use. You can run multiple queries simultaneously with split chats, regenerate model responses, clone chats, and add multiple models. There's real-time data retrieval (supported only by some models), and you can create Knowledge Stacks that give your local model access to files, folders, Obsidian vaults, notes, and more. Plus, there's a prompt library to help you get the most out of your queries.

Msty is hands down one of the best ways to interact with Ollama. Here's how you can get started:

Installing Msty

What you'll need: A macOS device with Ollama installed and running. If you haven't set up Ollama yet, do that first, and be sure to download a local model as well.
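
If you want to confirm that Ollama is actually running before you install Msty, you can ask its local REST API which models it has. The sketch below is a minimal Python example, assuming Ollama is listening on its default address (http://localhost:11434) and using its `GET /api/tags` endpoint, which lists locally downloaded models. The helper names `list_local_models` and `parse_model_names` are hypothetical, chosen for illustration.

```python
import json
import urllib.request
from typing import List, Optional

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: str) -> List[str]:
    """Extract model names from a /api/tags JSON response body."""
    data = json.loads(payload)
    # Each entry under "models" describes one locally downloaded model.
    return [m["name"] for m in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 3.0) -> Optional[List[str]]:
    """Return local model names, or None if Ollama can't be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return parse_model_names(resp.read().decode("utf-8"))
    except OSError:
        # URLError, refused connections, and timeouts are all OSError subclasses.
        return None


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("Ollama doesn't appear to be running at", OLLAMA_URL)
    elif not models:
        print("Ollama is running, but no local models are downloaded yet.")
    else:
        print("Local models:", ", ".join(models))
```

An empty list means Ollama is up but you still need to pull a model; `None` means the server isn't reachable at that address.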

Download the Installer

Head over to the Msty website, click on the Download Msty dropdown, choose Mac, and then pick either Apple Silicon or Intel based on your device.

Install Msty

Once the download finishes, double-click the file and drag the Msty icon to the Applications folder when prompted.

Using Msty

Open Msty

Launch Msty from Launchpad on your Mac.

Connect Your Local Ollama Model

When you first open Msty, click Setup Local AI. Msty will download the necessary components and configure everything for you, including downloading a local model of its own, separate from any models you've already pulled with Ollama.

To link Msty with Ollama, go to Local AI Models in the sidebar and click the download button next to Llama 3.2. Once it has downloaded, select it from the models dropdown. (To use online models instead, you'll need an API key from your account with that model's provider.) Msty should now be connected to your local Ollama LLM.

I prefer sticking with the Ollama local model for my queries.

Model Instructions

One of my favorite features in Msty is the ability to customize model instructions. Whether you need the AI to act as a doctor, a writer, an accountant, an alien anthropologist, or an artistic advisor, Msty has you covered.

To tweak the model instructions, click on Edit Model Instructions in the center of the app, then hit the tiny chat button to the left of the broom icon. From the popup menu, pick the instructions you want and click "Apply to this chat" before you run your first query.
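
Model instructions work much like a system prompt: text the model sees ahead of your query that shapes how it answers. To show the general idea at the Ollama level, here is a minimal Python sketch that builds a request for Ollama's `POST /api/chat` endpoint with a system message followed by the user's question. It assumes Ollama's default local address and a `llama3.2` model already downloaded; `build_chat_request` and `ask` are hypothetical helper names, and this illustrates the concept rather than how Msty implements the feature internally.

```python
import json
import urllib.request
from typing import Any, Dict

# Assumption: Ollama's default local address and chat endpoint.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(model: str, instructions: str, query: str) -> Dict[str, Any]:
    """Build an /api/chat payload with the instructions as a system message."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": instructions},
            {"role": "user", "content": query},
        ],
        # Ask for one complete JSON reply instead of a stream of chunks.
        "stream": False,
    }


def ask(payload: Dict[str, Any], url: str = OLLAMA_CHAT_URL, timeout: float = 120.0) -> str:
    """Send the payload to Ollama and return the assistant's reply text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # A non-streaming reply carries the text under message.content.
    return body["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_request(
        "llama3.2",
        "You are a writer. Answer in clear, concise prose.",
        "Suggest three titles for an article about running AI locally.",
    )
    try:
        print(ask(payload))
    except OSError as err:
        print("Could not reach Ollama:", err)
```

Swapping the `instructions` string is the code-level equivalent of picking a different entry from Msty's instructions menu before you run a query.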

There are plenty of model instructions to choose from, helping you refine your queries for specific needs.

This guide should help you get up and running with Msty quickly. Start with the basics and, as you become more comfortable with the app, explore its more advanced features. It's a powerful tool that can really enhance your local AI experience on macOS.

Related article

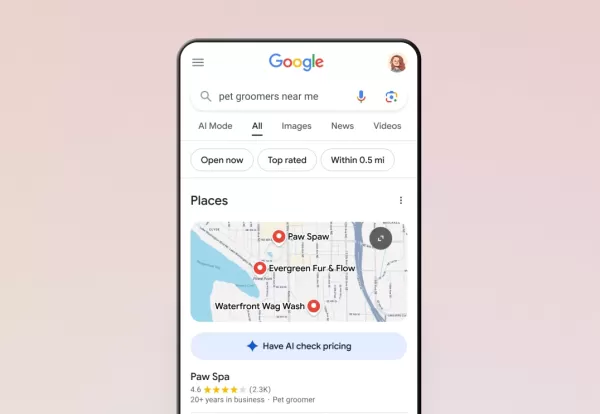

Google's AI Now Handles Phone Calls for You

Google has expanded its AI calling feature to all US users through Search, enabling customers to inquire about pricing and availability with local businesses without phone conversations. Initially tested in January, this capability currently supports

Google's AI Now Handles Phone Calls for You

Google has expanded its AI calling feature to all US users through Search, enabling customers to inquire about pricing and availability with local businesses without phone conversations. Initially tested in January, this capability currently supports

Trump Exempts Smartphones, Computers, and Chips from Tariff Hikes

The Trump administration has granted exclusions for smartphones, computers, and various electronic devices from recent tariff increases, even when imported from China, according to Bloomberg reporting. However, these products remain subject to earlie

Trump Exempts Smartphones, Computers, and Chips from Tariff Hikes

The Trump administration has granted exclusions for smartphones, computers, and various electronic devices from recent tariff increases, even when imported from China, according to Bloomberg reporting. However, these products remain subject to earlie

AI Reimagines Michael Jackson in the Metaverse with Stunning Digital Transformations

Artificial intelligence is fundamentally reshaping our understanding of creativity, entertainment, and cultural legacy. This exploration into AI-generated interpretations of Michael Jackson reveals how cutting-edge technology can breathe new life int

Comments (2)

0/200

AI Reimagines Michael Jackson in the Metaverse with Stunning Digital Transformations

Artificial intelligence is fundamentally reshaping our understanding of creativity, entertainment, and cultural legacy. This exploration into AI-generated interpretations of Michael Jackson reveals how cutting-edge technology can breathe new life int

Comments (2)

0/200

![PaulHill]() PaulHill

PaulHill

August 12, 2025 at 2:50:10 AM EDT

August 12, 2025 at 2:50:10 AM EDT

This app sounds like a game-changer for Mac users! I love the idea of keeping my data local with Ollama—privacy is everything these days. 😎 Anyone tried it yet? How’s the setup?

0

0

![DouglasMartin]() DouglasMartin

DouglasMartin

July 27, 2025 at 9:20:21 PM EDT

July 27, 2025 at 9:20:21 PM EDT

This app sounds like a game-changer for Mac users wanting to keep their AI local! 😎 I love how it simplifies using Ollama—definitely gonna try it out to keep my data private.

0

0

If you're looking to keep your data private and avoid feeding into third-party profiles or training sets, using a locally installed AI for your research is a smart move. I've been using the open-source Ollama on my Linux system, enhanced with a handy browser extension for a smoother experience. But when I switch to my MacOS, I opt for a straightforward, free app called Msty.

Also: How to turn Ollama from a terminal tool into a browser-based AI with this free extension

Msty is versatile, allowing you to tap into both locally installed and online AI models. Personally, I stick with the local option for maximum privacy. What sets Msty apart from other Ollama tools is its simplicity—no need for containers, terminals, or additional browser tabs.

The app is packed with features that make it a breeze to use. You can run multiple queries simultaneously with split chats, regenerate model responses, clone chats, and even add multiple models. There's real-time data summoning (though it's model-specific), and you can create Knowledge Stacks to train your local model with files, folders, Obsidian vaults, notes, and more. Plus, there's a prompt library to help you get the most out of your queries.

Msty is hands down one of the best ways to interact with Ollama. Here's how you can get started:

Installing Msty

What you'll need: Just a MacOS device and Ollama installed and running. If you haven't set up Ollama yet, follow the steps here. Don't forget to download a local model as well.

Download the Installer

Head over to the Msty website, click on the Download Msty dropdown, choose Mac, and then pick either Apple Silicon or Intel based on your device.

Install Msty

Once the download finishes, double-click the file and drag the Msty icon to the Applications folder when prompted.

Using Msty

Open Msty

Launch Msty from Launchpad on your MacOS.

Connect Your Local Ollama Model

When you first open Msty, click on Setup Local AI. It will download the necessary components and configure everything for you, including downloading a local model other than Ollama.

To link Msty with Ollama, navigate to Local AI Models in the sidebar and click the download button next to Llama 3.2. After it's downloaded, select it from the models dropdown. For other models, you'll need an API key from your account for that specific model. Now Msty should be connected to your local Ollama LLM.

I prefer sticking with the Ollama local model for my queries.

Model Instructions

One of my favorite features in Msty is the ability to customize model instructions. Whether you need the AI to act as a doctor, a writer, an accountant, an alien anthropologist, or an artistic advisor, Msty has you covered.

To tweak the model instructions, click on Edit Model Instructions in the center of the app, then hit the tiny chat button to the left of the broom icon. From the popup menu, pick the instructions you want and click "Apply to this chat" before you run your first query.

There are plenty of model instructions to choose from, helping you refine your queries for specific needs.

This guide should help you get up and running with Msty quickly. Start with the basics and, as you become more comfortable with the app, explore its more advanced features. It's a powerful tool that can really enhance your local AI experience on MacOS.

Google's AI Now Handles Phone Calls for You

Google has expanded its AI calling feature to all US users through Search, enabling customers to inquire about pricing and availability with local businesses without phone conversations. Initially tested in January, this capability currently supports

Google's AI Now Handles Phone Calls for You

Google has expanded its AI calling feature to all US users through Search, enabling customers to inquire about pricing and availability with local businesses without phone conversations. Initially tested in January, this capability currently supports

Trump Exempts Smartphones, Computers, and Chips from Tariff Hikes

The Trump administration has granted exclusions for smartphones, computers, and various electronic devices from recent tariff increases, even when imported from China, according to Bloomberg reporting. However, these products remain subject to earlie

Trump Exempts Smartphones, Computers, and Chips from Tariff Hikes

The Trump administration has granted exclusions for smartphones, computers, and various electronic devices from recent tariff increases, even when imported from China, according to Bloomberg reporting. However, these products remain subject to earlie

AI Reimagines Michael Jackson in the Metaverse with Stunning Digital Transformations

Artificial intelligence is fundamentally reshaping our understanding of creativity, entertainment, and cultural legacy. This exploration into AI-generated interpretations of Michael Jackson reveals how cutting-edge technology can breathe new life int

AI Reimagines Michael Jackson in the Metaverse with Stunning Digital Transformations

Artificial intelligence is fundamentally reshaping our understanding of creativity, entertainment, and cultural legacy. This exploration into AI-generated interpretations of Michael Jackson reveals how cutting-edge technology can breathe new life int

August 12, 2025 at 2:50:10 AM EDT

August 12, 2025 at 2:50:10 AM EDT

This app sounds like a game-changer for Mac users! I love the idea of keeping my data local with Ollama—privacy is everything these days. 😎 Anyone tried it yet? How’s the setup?

0

0

July 27, 2025 at 9:20:21 PM EDT

July 27, 2025 at 9:20:21 PM EDT

This app sounds like a game-changer for Mac users wanting to keep their AI local! 😎 I love how it simplifies using Ollama—definitely gonna try it out to keep my data private.

0

0