Sakana AI's TreeQuest Boosts AI Performance with Multi-Model Collaboration

Japanese AI lab Sakana AI has unveiled a technique enabling multiple large language models (LLMs) to work together, forming a highly effective AI team. Named Multi-LLM AB-MCTS, this method allows models to engage in trial-and-error, leveraging their unique strengths to tackle complex tasks beyond the reach of any single model.

For businesses, this approach offers a way to build more powerful AI systems. Instead of relying on one provider or model, companies can dynamically harness the strengths of various frontier models, assigning the best AI for each task segment to achieve optimal outcomes.

Harnessing Collective Intelligence

Frontier AI models are advancing quickly, each with distinct strengths and weaknesses based on their training data and design. One model might shine in coding, another in creative writing. Sakana AI’s team views these differences as assets, not flaws.

“We consider these unique capabilities as valuable tools for building collective intelligence,” the researchers note in their blog. They argue that, like human teams achieving breakthroughs through diversity, AI systems can accomplish more by collaborating. “By combining their strengths, AI systems can solve challenges no single model could overcome.”

Enhancing Performance at Inference

Sakana AI’s algorithm belongs to a family of “inference-time scaling” techniques (also called “test-time scaling”), an area of research that is gaining traction. Unlike “training-time scaling,” which improves models by making them larger and training them on bigger datasets, inference-time scaling improves performance by allocating more computational resources after a model has been trained.

One method uses reinforcement learning to encourage models to produce detailed chain-of-thought (CoT) sequences, as seen in models like OpenAI o3 and DeepSeek-R1. Another approach, repeated sampling, prompts the model multiple times to generate diverse solutions, akin to brainstorming. Sakana AI’s method refines these concepts.
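To make repeated sampling concrete, here is a minimal Python sketch of Best-of-N selection. The names `best_of_n`, `toy_generate`, and `toy_score` are illustrative assumptions, not part of any Sakana AI code; in practice `generate` would wrap an LLM call and `score` would be a task-specific evaluator.

```python
import random
from typing import Callable, Tuple


def best_of_n(
    generate: Callable[[], str],
    score: Callable[[str], float],
    n: int,
) -> Tuple[str, float]:
    """Sample n candidates independently and return the highest-scoring one."""
    if n < 1:
        raise ValueError("n must be at least 1")
    scored = [(candidate, score(candidate)) for candidate in (generate() for _ in range(n))]
    return max(scored, key=lambda pair: pair[1])


# Toy stand-ins (assumptions): the "model" guesses integers and the scorer
# rewards guesses that land close to 42.
rng = random.Random(0)


def toy_generate() -> str:
    return str(rng.randint(0, 100))


def toy_score(answer: str) -> float:
    return -abs(int(answer) - 42)


best_answer, best_score = best_of_n(toy_generate, toy_score, n=8)
```

Every sample here is drawn independently, so nothing learned from one attempt informs the next. That is the limitation a tree search over candidate solutions aims to address.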

“Our framework improves on Best-of-N sampling,” said Takuya Akiba, a Sakana AI research scientist and paper co-author, in an interview with VentureBeat. “It enhances reasoning techniques like extended CoT via reinforcement learning. By strategically choosing the search approach and the right LLM, it optimizes performance within limited calls, excelling in complex tasks.”

How Adaptive Branching Search Functions

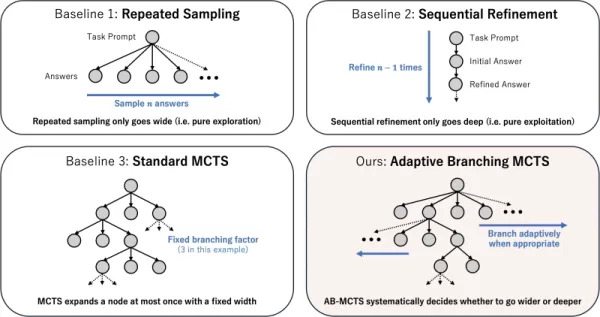

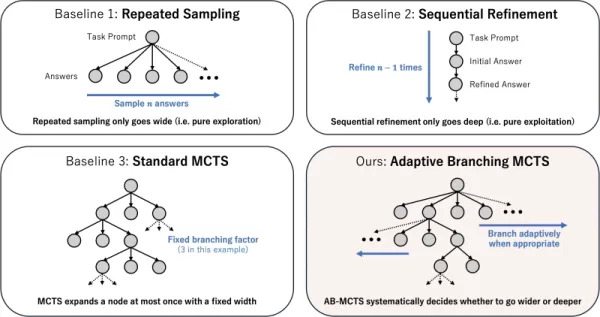

The technique’s core is the Adaptive Branching Monte Carlo Tree Search (AB-MCTS) algorithm. It enables LLMs to perform intelligent trial-and-error by balancing “searching deeper” (refining a promising solution) and “searching wider” (generating new solutions). AB-MCTS blends these strategies, allowing the system to refine ideas or pivot to new ones when needed.

AB-MCTS builds on Monte Carlo Tree Search (MCTS), a decision-making algorithm famously used in DeepMind’s AlphaGo. At each step, AB-MCTS uses probability models to decide whether it is more promising to refine an existing solution or to generate a new one.
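The deeper-versus-wider decision can be illustrated with a heavily simplified Python sketch. It assumes scores in [0, 1] and uses Thompson sampling over Beta posteriors as the probability model; the statistical models in Sakana AI's actual algorithm may well differ, and `Node`, `search_step`, `toy_expand`, and `toy_score` are hypothetical names introduced only for this example.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Node:
    answer: Optional[float] = None  # None marks the root: nothing to refine yet
    children: List["Node"] = field(default_factory=list)
    gen_a: float = 1.0  # Beta posterior for "generate a new child of this node"
    gen_b: float = 1.0
    sub_a: float = 1.0  # Beta posterior for "descend into this node's subtree"
    sub_b: float = 1.0


def search_step(
    root: Node,
    expand: Callable[[Optional[float]], float],
    score: Callable[[float], float],
    rng: random.Random,
) -> Tuple[Node, float]:
    """Run one iteration: walk down the tree, add one new node, update posteriors."""
    path = [root]
    node = root
    while True:
        gen_draw = rng.betavariate(node.gen_a, node.gen_b)
        child_draws = [rng.betavariate(c.sub_a, c.sub_b) for c in node.children]
        # Go wider (new child here) if that arm wins, otherwise go deeper.
        if not child_draws or gen_draw >= max(child_draws):
            break
        node = node.children[child_draws.index(max(child_draws))]
        path.append(node)
    child = Node(answer=expand(node.answer))
    s = score(child.answer)  # assumed to lie in [0, 1]
    node.children.append(child)
    node.gen_a += s
    node.gen_b += 1.0 - s
    for visited in path[1:] + [child]:  # credit every subtree that led here
        visited.sub_a += s
        visited.sub_b += 1.0 - s
    return child, s


def tree_size(node: Node) -> int:
    return 1 + sum(tree_size(c) for c in node.children)


# Toy task (assumption): answers are numbers, and the target is 0.7.
rng = random.Random(0)


def toy_expand(parent: Optional[float]) -> float:
    if parent is None:
        return rng.random()  # fresh attempt
    return min(1.0, max(0.0, parent + rng.gauss(0.0, 0.1)))  # refine the parent


def toy_score(answer: float) -> float:
    return 1.0 - abs(answer - 0.7)


root = Node()
history = [search_step(root, toy_expand, toy_score, rng)[1] for _ in range(30)]
best_score = max(history)
```

Generating a new child of the root corresponds to a fresh attempt, while generating a child of any other node corresponds to refining that node's answer, so a single rule covers both "wider" and "deeper" moves.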

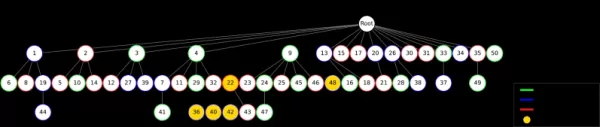

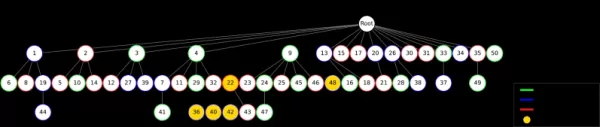

Different test-time scaling strategies. Source: Sakana AI

Multi-LLM AB-MCTS takes this further by deciding not only “what” to do (refine or generate) but also “which” LLM to use. Initially unaware of the best model for a task, the system tests a mix of LLMs, learning over time which ones perform better and assigning them more work.
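One common way to learn which option performs better while still exploring is a multi-armed bandit. The sketch below uses Thompson sampling with Beta posteriors to shift calls toward stronger models; this is an illustrative assumption rather than a description of Sakana AI's implementation. The class `ModelSelector`, the placeholder model names, and the simulated success rates are all hypothetical.

```python
import random
from typing import Dict, List


class ModelSelector:
    """Choose among models by Thompson sampling over Beta posteriors."""

    def __init__(self, model_names: List[str], seed: int = 0) -> None:
        self.rng = random.Random(seed)
        # Uniform Beta(1, 1) prior per model: no preference at the start.
        self.posteriors: Dict[str, List[float]] = {name: [1.0, 1.0] for name in model_names}
        self.calls: Dict[str, int] = {name: 0 for name in model_names}

    def pick(self) -> str:
        """Draw one sample per model and return the model with the highest draw."""
        draws = {
            name: self.rng.betavariate(a, b)
            for name, (a, b) in self.posteriors.items()
        }
        return max(draws, key=draws.get)

    def update(self, name: str, score: float) -> None:
        """Record a score in [0, 1] for the model that was just used."""
        if not 0.0 <= score <= 1.0:
            raise ValueError("score must be in [0, 1]")
        self.posteriors[name][0] += score
        self.posteriors[name][1] += 1.0 - score
        self.calls[name] += 1


# Simulation with made-up success rates (assumptions, not measured results).
true_success_rate = {"model_a": 0.2, "model_b": 0.5, "model_c": 0.8}
selector = ModelSelector(list(true_success_rate), seed=0)
outcome_rng = random.Random(1)

for _ in range(300):
    chosen = selector.pick()
    solved = outcome_rng.random() < true_success_rate[chosen]
    selector.update(chosen, 1.0 if solved else 0.0)

calls_per_model = dict(selector.calls)
```

In a simulation like this, the selector typically ends up sending most calls to the arm with the highest success rate, while the weaker arms still receive occasional calls that keep their estimates from going stale.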

Testing the AI Team

The Multi-LLM AB-MCTS system was evaluated on the ARC-AGI-2 benchmark, which tests human-like visual reasoning on novel problems, a tough challenge for AI.

The team combined frontier models like o4-mini, Gemini 2.5 Pro, and DeepSeek-R1.

The model collective solved over 30% of the 120 test problems, far surpassing any single model’s performance. The system dynamically assigned the best model for each task, quickly identifying the most effective LLM when a clear solution path existed.

AB-MCTS vs individual models. Source: Sakana AI

Remarkably, the system solved problems previously unsolvable by any single model. In one instance, an incorrect solution from o4-mini was refined by DeepSeek-R1 and Gemini 2.5 Pro, which corrected the error and delivered the right answer.

“This shows Multi-LLM AB-MCTS can combine frontier models to tackle previously unsolvable challenges, expanding the limits of collective AI intelligence,” the researchers state.

AB-MCTS can select different models at different stages of solving a problem. Source: Sakana AI

“Each model’s strengths and hallucination tendencies vary,” Akiba noted. “By pairing models with lower hallucination risks, we can achieve both powerful reasoning and reliability, addressing a key business concern.”

From Research to Practical Use

Sakana AI has released TreeQuest, an open-source framework under the Apache 2.0 license, enabling developers and businesses to implement Multi-LLM AB-MCTS. Its flexible API supports custom scoring and logic for diverse tasks.

“We’re still exploring AB-MCTS for specific business challenges, but its potential is clear,” Akiba said.

Beyond the ARC-AGI-2 benchmark, AB-MCTS has succeeded in tasks like complex coding and improving machine learning model accuracy.

“AB-MCTS excels in iterative trial-and-error tasks, such as optimizing software performance metrics,” Akiba added. “For instance, it could automatically reduce web service response latency.”

This open-source tool could enable a new generation of robust, reliable enterprise AI applications.
