Multiverse Computing Launches Breakthrough Miniature High-Performance Models

A pioneering European AI startup has unveiled groundbreaking micro-sized AI models named after avian and insect brains, demonstrating that powerful artificial intelligence doesn't require massive scale.

Multiverse Computing's innovation centers on ultra-compact yet capable models designed specifically for edge computing applications. Dubbed "ChickBrain" (3.2 billion parameters) and "SuperFly" (94 million parameters), these miniature neural networks represent a significant leap forward in efficient AI deployment.

"Our compression technology allows these models to operate directly on personal devices," explained founder Román Orús in an exclusive TechCrunch interview. "Imagine having conversational AI capabilities running natively on your smartwatch without cloud dependency."

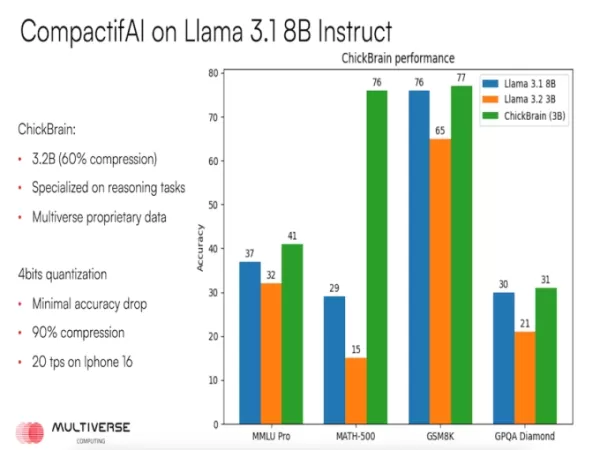

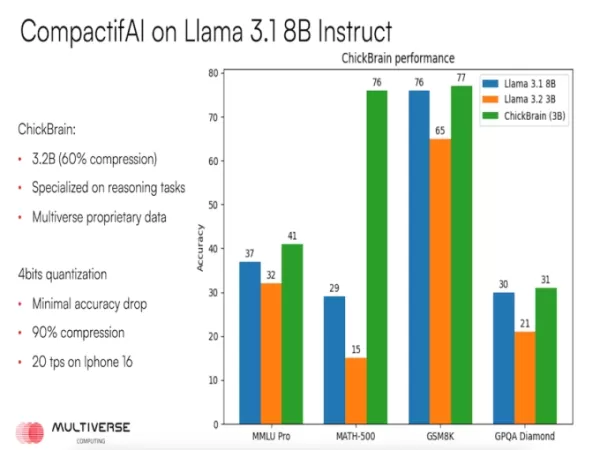

The Spanish firm, which specializes in quantum computing, has attracted substantial investment, securing €189 million this June alone. Its proprietary "CompactifAI" technology uses quantum-inspired algorithms to dramatically reduce model sizes while maintaining, and in some cases improving, benchmark performance.

Notably, ChickBrain demonstrates superior performance to its source model (Meta's Llama 3.1 8B) across multiple benchmarks including mathematical reasoning (GSM8K, Math 500) and general knowledge assessment (GPQA Diamond). Meanwhile, SuperFly's insect-scale footprint enables voice interface capabilities for IoT appliances using minimal processing power.
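
To put the parameter counts in perspective, the sketch below estimates how much storage the weights alone would need at a few common numeric precisions. The parameter counts come from the figures quoted above (the source model is taken at its nominal 8 billion). The precisions are illustrative assumptions, because the article does not say how these models are stored or quantized. The estimate also ignores activations, caches, and runtime overhead.

```python
# Rough weight-storage estimate: parameters x bytes per parameter.
# Parameter counts are the figures quoted in the article (8B is nominal).
# The precisions below are illustrative assumptions, not published specs.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

MODELS = {
    "Llama 3.1 8B (source model)": 8.0e9,
    "ChickBrain": 3.2e9,
    "SuperFly": 94e6,
}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def size_reduction(original_params: float, compressed_params: float) -> float:
    """Fraction of parameters removed relative to the original model."""
    return 1.0 - compressed_params / original_params


if __name__ == "__main__":
    for name, params in MODELS.items():
        sizes = ", ".join(
            f"{precision}: {weight_memory_gb(params, nbytes):.3f} GB"
            for precision, nbytes in BYTES_PER_PARAM.items()
        )
        print(f"{name}: {sizes}")

    reduction = size_reduction(MODELS["Llama 3.1 8B (source model)"], MODELS["ChickBrain"])
    print(f"ChickBrain parameter reduction vs. source: {reduction:.0%}")
```

Under these assumptions, going from 8 billion to 3.2 billion parameters removes about 60% of the weights. SuperFly's 94 million parameters would need well under a gigabyte at any of the listed precisions, which is consistent with the article's framing of it as a model for low-power devices.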

The company collaborates with major tech manufacturers and offers flexible deployment options:

- Direct integration into consumer electronics

- AWS-hosted API services with competitive pricing

- Specialized compression for existing ML implementations

Multiverse's client roster includes chemical giant BASF, financial services provider Ally, and industrial leader Bosch, demonstrating the technology's cross-industry applicability.

Comparative performance metrics show ChickBrain outperforming its source model across multiple cognition benchmarks.

This breakthrough in model efficiency arrives as the tech industry increasingly prioritizes on-device processing advantages including privacy preservation, latency reduction, and offline functionality.
