ChatGPT Faces Privacy Complaint Due to Defamatory Errors

OpenAI is once again in hot water in Europe, this time over its AI chatbot's habit of spewing out false information. This latest privacy complaint, backed by the advocacy group Noyb, might just be the one that regulators can't brush off.

The complaint stems from a case in Norway, where ChatGPT falsely claimed that a man named Arve Hjalmar Holmen had been convicted of murdering two of his children and attempting to kill a third.

Earlier complaints about ChatGPT's inaccuracies concerned comparatively minor errors, such as an incorrect birth date or wrong biographical details. One recurring concern is that OpenAI offers no way for individuals to correct false information the chatbot generates about them; instead, it has typically offered to block responses to the prompts in question. Under the European Union's General Data Protection Regulation (GDPR), however, Europeans have a set of data rights that includes the right to have inaccurate personal data rectified.

The GDPR also requires data controllers to ensure that the personal data they produce about individuals is accurate, and Noyb is highlighting this requirement in its latest complaint against ChatGPT.

Joakim Söderberg, a data protection lawyer at Noyb, emphasized, "The GDPR is clear. Personal data has to be accurate. If it's not, users have the right to have it changed to reflect the truth. Showing ChatGPT users a tiny disclaimer that the chatbot can make mistakes clearly isn't enough. You can't just spread false information and in the end add a small disclaimer saying that everything you said may just not be true."

Confirmed GDPR violations can draw fines of up to €20 million or 4% of a company's global annual turnover, whichever is higher. Enforcement can also force changes to AI products. Italy's data protection authority, for instance, temporarily blocked ChatGPT in spring 2023, which led OpenAI to change the information it discloses to users. The same authority later fined OpenAI €15 million for processing people's data without a proper legal basis.
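
For a sense of scale: the ceiling for the most serious GDPR infringements, set in Article 83(5), is €20 million or 4% of total worldwide annual turnover for the preceding financial year, whichever is higher. The short Python sketch below computes only that statutory ceiling. The turnover figures in it are hypothetical, and actual fines are set case by case by the supervisory authority and can be far lower than the cap.

```python
from decimal import Decimal

# Statutory ceiling for the most serious infringements under GDPR Article 83(5):
# the higher of a fixed amount or a share of worldwide annual turnover.
FIXED_CAP_EUR = Decimal("20000000")  # EUR 20 million
TURNOVER_SHARE = Decimal("0.04")     # 4% of total worldwide annual turnover


def max_gdpr_fine(annual_turnover_eur: Decimal) -> Decimal:
    """Return the maximum possible fine (not a predicted fine) for an undertaking
    with the given worldwide annual turnover of the preceding financial year."""
    if annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    return max(FIXED_CAP_EUR, annual_turnover_eur * TURNOVER_SHARE)


if __name__ == "__main__":
    # Hypothetical turnover figures, chosen only to exercise both branches.
    for turnover in (Decimal("100000000"), Decimal("3000000000")):
        print(f"turnover EUR {turnover:,} -> cap EUR {max_gdpr_fine(turnover):,}")
```

With a hypothetical turnover of €100 million, the fixed €20 million amount is the higher figure; with €3 billion, the 4% branch gives a ceiling of €120 million.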

Since then, European privacy watchdogs have taken a more cautious approach to generative AI (GenAI) as they work out how the GDPR applies to these new tools. Ireland's Data Protection Commission (DPC), for instance, urged regulators not to rush into banning GenAI tools and to take a more measured approach instead. Meanwhile, a privacy complaint against ChatGPT that Poland's data protection authority has been investigating since September 2023 has yet to produce a decision.

Noyb's new complaint aims to jolt privacy regulators into action regarding the risks posed by AI hallucinations.

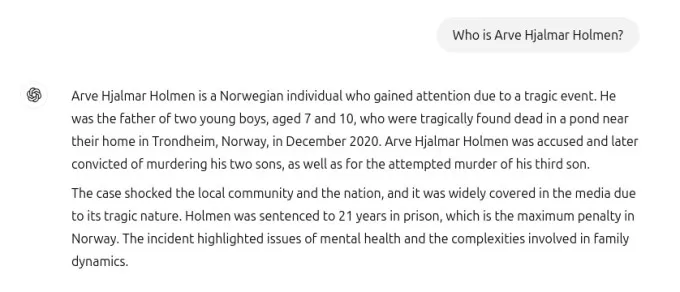

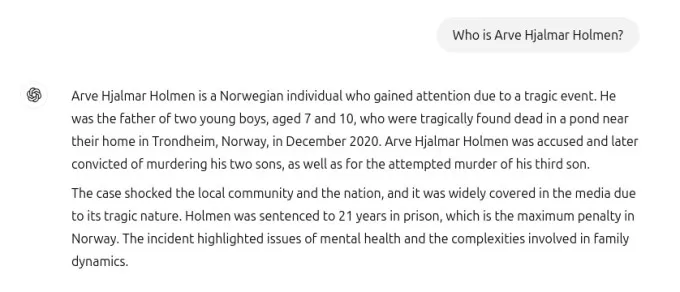

The screenshot shared with TechCrunch shows ChatGPT's response to the query "who is Arve Hjalmar Holmen?" It falsely claimed that Holmen had been convicted of child murder and sentenced to 21 years in prison for killing two of his sons. Although that claim is entirely untrue, the response got several real details right: Holmen does have three children, ChatGPT stated their genders correctly, and it named his hometown correctly. This mix of truth and fiction makes the hallucination even more unsettling.

A Noyb spokesperson said the group could not determine why ChatGPT produced such a specific yet false history for Holmen, adding that it had searched newspaper archives to rule out a mix-up with another person and found no explanation.

Large language models such as the one underlying ChatGPT essentially perform next-word prediction at vast scale, so one could speculate that the training data contained many stories of filicide that influenced the model's word choices in response to a query about a named man. Whatever the explanation, such outputs are clearly unacceptable.
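
Next-word prediction can be illustrated, very loosely, with a toy bigram model in Python. This is a drastic simplification and not how ChatGPT works internally: real LLMs use neural networks over subword tokens and condition on long contexts. The corpus and helper names are invented for illustration. The sketch only shows the general point the speculation rests on: a model that continues text by picking likely next words reproduces whatever patterns dominate its training data, with no notion of whether the result is true of a particular person.

```python
from collections import Counter, defaultdict

# Invented toy corpus (hypothetical sentences, no real people).
CORPUS = [
    "the man was convicted of murder",
    "the man was convicted of murder",
    "the man was convicted of fraud",
    "the man was a musician",
]


def train_bigrams(corpus):
    """Count how often each word is immediately followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Relative frequencies of the words observed after `word`."""
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def greedy_continue(counts, start, max_words):
    """Repeatedly append the single most frequent next word."""
    out = [start.lower()]
    for _ in range(max_words):
        followers = counts.get(out[-1])
        if not followers:
            break  # no observed continuation for this word
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)


counts = train_bigrams(CORPUS)

if __name__ == "__main__":
    print(next_word_probs(counts, "was"))
    print(greedy_continue(counts, "the", 5))
```

Because "convicted" follows "was" in three of the four toy sentences, greedy decoding produces a conviction story even though one sentence describes a musician. Real systems usually sample rather than always taking the top word, but their output likewise depends on patterns in the training data.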

Noyb argues that such fabrications are unlawful under EU data protection rules, and that OpenAI's small disclaimer that ChatGPT can make mistakes does not exempt the company from the GDPR's requirement that the personal data it produces be accurate.

In response to the complaint, an OpenAI spokesperson stated, "We continue to research new ways to improve the accuracy of our models and reduce hallucinations. While we’re still reviewing this complaint, it relates to a version of ChatGPT which has since been enhanced with online search capabilities that improves accuracy."

Although this complaint concerns one individual, Noyb points to other cases in which ChatGPT fabricated damaging information, such as falsely implicating an Australian mayor in a bribery scandal and falsely linking a German journalist to child abuse.

Noyb says that after an update to the underlying AI model, ChatGPT stopped producing the false claims about Holmen, possibly because the tool now searches the internet for information about people when asked who they are.

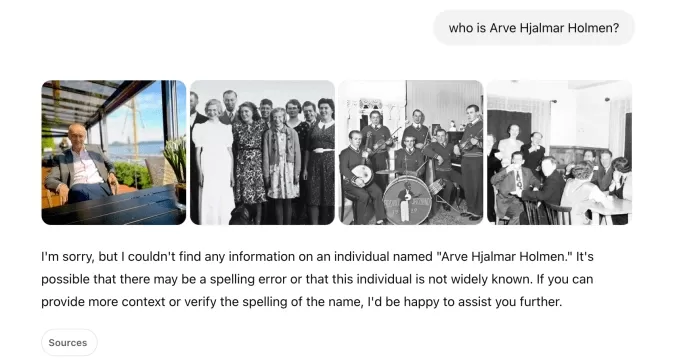

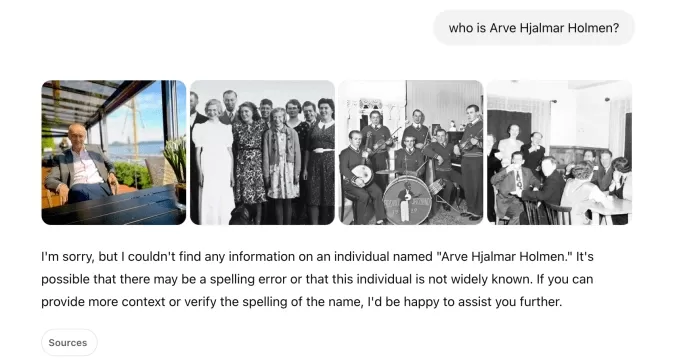

In our own tests, ChatGPT initially showed photos of different people alongside a message saying it "couldn’t find any information" on Holmen. A subsequent query identified him as "a Norwegian musician and songwriter" with albums like "Honky Tonk Inferno."

ChatGPT shot: Natasha Lomas/TechCrunch

While ChatGPT no longer produces the dangerous falsehoods about Holmen, both Noyb and Holmen remain concerned that incorrect and defamatory information about him may still be retained within the AI model.

Kleanthi Sardeli, another data protection lawyer at Noyb, stated, "Adding a disclaimer that you do not comply with the law does not make the law go away. AI companies can also not just ‘hide’ false information from users while they internally still process false information. AI companies should stop acting as if the GDPR does not apply to them, when it clearly does. If hallucinations are not stopped, people can easily suffer reputational damage."

Noyb has filed this complaint with Norway's data protection authority and hopes the watchdog will consider itself competent to investigate, because the complaint targets OpenAI's U.S. entity rather than only its Irish office. An earlier GDPR complaint that Noyb filed in Austria in April 2024 was referred to Ireland's DPC because OpenAI had designated its Irish division as the provider of ChatGPT services to European users.

That complaint is still under review by the DPC, with no clear timeline for resolution.

This report was updated with OpenAI’s statement.

Comments (26)

FredAllen

August 31, 2025 at 12:30:33 AM EDT

It's incredible how quickly things get complicated when an AI makes up data 🙃. OpenAI should implement stricter checks before making such risky claims. How many more lawsuits will it take for them to take this problem seriously?

RogerSanchez

April 23, 2025 at 1:06:19 AM EDT

ChatGPT's privacy problems are really worrying. The defamation case in Norway was an eye-opener. OpenAI needs to do better, or more complaints will follow. It's annoying to see errors like this from a leading AI! 😡

JasonMartin

April 22, 2025 at 9:15:30 PM EDT

ChatGPT's latest privacy controversy is really disappointing 😕 It's frustrating to see AI spreading false information, especially when it affects people's lives. I understand that AI can make mistakes, but OpenAI needs to improve and fix this quickly! Privacy laws in Europe are getting stricter, so I hope this complaint drives real change. 🤞

NicholasClark

April 18, 2025 at 12:52:35 AM EDT

ChatGPT's privacy problem is really disappointing 😕 It's frustrating when AI spreads false information, all the more so when it affects people's lives. I understand that AI makes mistakes, but OpenAI should take action quickly! Privacy laws in Europe are getting stricter, so I hope this complaint prompts real change. 🤞

LawrenceJones

April 17, 2025 at 11:41:46 PM EDT

ChatGPT's latest privacy scandal is really disappointing 😕 It's frustrating to see AI spreading false information, especially when it affects people's lives. I understand that AI can make mistakes, but OpenAI needs to improve and fix this quickly. Privacy laws in Europe are no joke anymore, so I hope this complaint drives real change. 🤞

MatthewScott

April 17, 2025 at 12:24:01 PM EDT

ChatGPT's latest privacy problem is really worrying! 😓 It's one thing to make mistakes, but when it starts spreading false information, that's another level. I hope OpenAI fixes it soon, or it's going to be a rough ride in Europe! 🤞
