Titans Architecture: A Breakthrough in AI Memory Technology?

AI research moves quickly, and much of the current effort goes into extending what large language models (LLMs) can do. One of the biggest hurdles these models face is their limited context window, which often leads them to 'forget' earlier parts of a conversation or document. Google's Titans architecture is a proposed answer to this short-memory problem, and it might just be the solution.

Key Points

- Traditional AI models have a limited context window, which acts as a short-term memory and causes them to lose earlier information.

- Google's Titans architecture introduces a dual memory system to tackle this limitation head-on.

- Titans uses both short-term and long-term memory modules to boost performance.

- The long-term memory in Titans can handle context lengths of over two million tokens.

- Titans enables linear scaling, which cuts down the computational costs linked to the quadratic scaling in transformers.

- The architecture shows great potential in tasks that require analyzing long-range dependencies, like genomics.

Understanding the Limits of Short-Term Memory in AI

The Context Window Problem

Short-term memory is one of the main constraints on current AI models. In Large Language Models (LLMs), this limitation shows up as a finite context window. Think of it as the AI's attention span: once it is full, older information gets pushed out, which makes it hard for the model to stay coherent and to track long-range dependencies. This bottleneck affects several AI applications, such as:

- Extended Conversations: Keeping a conversation coherent over many turns becomes a challenge, as the AI might lose track of earlier topics and references.

- Document Analysis: Processing long documents, like books or research papers, is tough because the AI struggles to remember information from the beginning by the time it reaches the end.

- Code Generation: In coding tasks, the AI might forget previously defined functions or variables, leading to errors and inefficiencies.

Overcoming this limitation is crucial for creating AI models that are more reliable and capable of handling complex tasks, which is why advancements like Titans are so exciting.

The Quadratic Complexity of Self-Attention

Traditional transformer-based architectures, which power many modern LLMs, rely heavily on a mechanism called self-attention. Self-attention is powerful, but it comes with a hefty computational cost. Because every token is compared with every other token, the compute and memory required grow quadratically with the length of the input sequence. If you double the length of the input, the computation becomes four times more expensive. This scaling problem becomes a major roadblock when dealing with long sequences.

For example, processing a sequence of 1,000 tokens might be manageable, but scaling this to 10,000 tokens increases the computational burden by a factor of 100. This quickly becomes prohibitive, even with the most powerful hardware. As a result, current transformer-based models are often limited to relatively short context windows, hindering their ability to capture long-range dependencies effectively. The exploration of novel architectures like Titans, which can mitigate this complexity, is critical for future advancements in AI.
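The arithmetic above can be checked with a few lines of Python. This is a minimal sketch that counts only query-key comparisons for a single attention head in a single layer; the function name `attention_score_count` is made up for this illustration, and real costs also depend on model width, number of layers, and hardware.

```python
def attention_score_count(seq_len: int) -> int:
    """Query-key pairs scored by full self-attention (one head, one layer)."""
    # Every token is compared with every token, itself included.
    return seq_len * seq_len


base = attention_score_count(1_000)
for n in (1_000, 2_000, 10_000):
    pairs = attention_score_count(n)
    print(f"{n:>6} tokens -> {pairs:>12,} pairs ({pairs // base}x the 1,000-token cost)")
```

Doubling the input from 1,000 to 2,000 tokens gives 4x the comparisons, and going to 10,000 tokens gives 100x, matching the figures in the text.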

Titans: Enabling Long-Range Dependency Analysis

Unlocking New AI Capabilities

The ability of Titans to handle longer context windows and achieve linear scaling opens up a variety of new AI applications that were previously impractical. One notable area is long-range dependency analysis, where the relationships between elements separated by large distances in a sequence are critical.

Some examples of long-range dependency analysis include:

- Genomics: Understanding the relationships between genes within a genome. Genes can interact with each other even when they are located far apart on the DNA strand. Titans architecture is well-suited for capturing these complex relationships.

- Financial Modeling: Analyzing long-term trends and dependencies in financial markets. Financial data often exhibits long-term patterns and feedback loops that require considering data from extended periods.

- Climate Science: Modeling complex climate systems and predicting long-term changes. Climate models must account for interactions between different components of the Earth's system over many years.

In each of these areas, the ability to capture long-range dependencies is essential for making accurate predictions and gaining valuable insights. Titans architecture provides a powerful tool for addressing these challenges, enabling AI to tackle problems previously beyond its reach.

How to Use Titans Architecture for AI Development

Leveraging Dual Memory Systems

To effectively utilize the Titans architecture, AI developers need to understand how to leverage its dual memory system. This involves:

- Designing Input Data: Prepare your input data to maximize the benefits of short-term and long-term memory separation.

- Balancing Memory Allocation: Carefully consider how much memory to allocate to the short-term and long-term modules. This will depend on the specific task and the length of the input sequences.

- Optimizing Memory Retrieval: Fine-tune the memory retrieval mechanism to ensure that relevant information is efficiently accessed from the long-term memory module.

- Adapting Existing Models: Adapt existing transformer-based models to incorporate the Titans architecture.

- Experimenting and Evaluating: Thoroughly experiment and evaluate the performance of your Titans-based model on a variety of tasks.

By mastering these techniques, AI developers can unlock the full potential of Titans architecture and build more powerful and capable AI systems.
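To make the short-term/long-term split more concrete, here is a toy sketch in plain Python. It is not the Titans implementation, and this article does not describe Titans' internals: the class `ToyDualMemory`, its parameters `window`, `dim`, and `lr`, and the error-driven update rule are all assumptions chosen only to illustrate the idea of a bounded recent buffer backed by a fixed-size store.

```python
from collections import deque


class ToyDualMemory:
    """Illustrative only: bounded short-term buffer plus fixed-size long-term store."""

    def __init__(self, window, dim, lr=1.0):
        # Short-term memory: the last `window` (key, value) pairs; older ones are evicted.
        self.short_term = deque(maxlen=window)
        # Long-term memory: a dim x dim linear associative map (hypothetical stand-in).
        self.long_term = [[0.0] * dim for _ in range(dim)]
        self.lr = lr

    def recall_long_term(self, key):
        """Return M @ key, the long-term memory's guess for this key."""
        return [sum(w * k for w, k in zip(row, key)) for row in self.long_term]

    def write(self, key, value):
        # Error-driven update: one gradient step on 0.5 * ||M @ key - value||^2.
        predicted = self.recall_long_term(key)
        for i, (p, v) in enumerate(zip(predicted, value)):
            error = p - v
            for j, k in enumerate(key):
                self.long_term[i][j] -= self.lr * error * k
        self.short_term.append((key, value))

    def read(self, key):
        # Prefer an exact match among recent items, else fall back to long-term recall.
        for stored_key, stored_value in reversed(self.short_term):
            if stored_key == key:
                return stored_value
        return self.recall_long_term(key)


memory = ToyDualMemory(window=2, dim=3)
keys = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
values = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
for key, value in zip(keys, values):
    memory.write(key, value)

recent = memory.read(keys[2])   # still in the short-term buffer
evicted = memory.read(keys[0])  # evicted from the buffer, recovered from long-term memory
print(recent, evicted)
```

Recall of the evicted item is exact here only because the example keys are orthonormal and `lr` is 1.0. With realistic, overlapping keys the long-term store would return an approximation, which is where the memory allocation and retrieval trade-offs listed above come in.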

Pros and Cons of Titans Architecture

Pros

- Improved handling of long-range dependencies.

- Linear scaling reduces computational costs.

- Dual memory system is loosely modeled on the split between human short-term and long-term memory.

- Potential for new AI applications.

Cons

- Increased architectural complexity.

- Requires careful memory allocation and retrieval optimization.

- Still in early stages of development.

Frequently Asked Questions About Titans Architecture

What is Titans architecture?

Titans architecture is a novel approach to AI memory management developed by Google. It utilizes a dual memory system, consisting of short-term and long-term memory modules, to improve the handling of long-range dependencies and reduce computational costs in large language models.

How does Titans architecture differ from traditional transformers?

Traditional transformers rely on self-attention, which has quadratic complexity and struggles with long sequences. Titans architecture achieves linear scaling by separating short-term and long-term memory, allowing it to handle longer sequences more efficiently.
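The difference in scaling can be sketched with a simple cost model. This is an illustration under stated assumptions, not Titans' actual cost accounting: it assumes each token attends to a fixed window of recent tokens and reads a fixed number of long-term memory slots. The names `window` and `memory_slots` and their values are hypothetical.

```python
def full_attention_cost(seq_len: int) -> int:
    """Comparisons when every token attends to every token."""
    return seq_len * seq_len


def windowed_plus_memory_cost(seq_len: int, window: int, memory_slots: int) -> int:
    """Upper bound on comparisons when each token sees a fixed window plus fixed memory."""
    # Per-token work is constant, so total work grows linearly with seq_len.
    return seq_len * (window + memory_slots)


for n in (10_000, 100_000, 1_000_000):
    full = full_attention_cost(n)
    split = windowed_plus_memory_cost(n, window=512, memory_slots=64)
    print(f"{n:>9,} tokens: full={full:,}  windowed+memory={split:,}")
```

Under this model, doubling the sequence length doubles the cost of the split design but quadruples the cost of full attention.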

What are the potential applications of Titans architecture?

Titans architecture has potential applications in areas requiring long-range dependency analysis, such as genomics, financial modeling, and climate science. It can also improve the performance of AI models in extended conversations, document analysis, and code generation.

What are the challenges of using Titans architecture?

The challenges of using Titans architecture include its increased architectural complexity, the need for careful memory allocation and retrieval optimization, and its relatively early stage of development.

Related Questions on AI Memory and Architecture

How does attention mechanism work in Transformers?

The attention mechanism is a crucial component of transformer models, enabling them to focus on relevant parts of the input sequence when processing information. For each word (or token), it assigns a weight to every token in the input sequence, indicating how relevant that token is to the one being processed. The mechanism works in the following steps:

Input Embedding: Each word or token from the input sequence is initially converted into a vector representation through embedding layers. These embeddings serve as the input to the attention mechanism.

Query, Key, and Value: The input embeddings are transformed into three distinct vectors: the Query (Q), Key (K), and Value (V) vectors. These transformations are performed through linear transformations or learned weight matrices. Mathematically:

\(Q = \text{Input} \cdot W_Q\)

\(K = \text{Input} \cdot W_K\)

\(V = \text{Input} \cdot W_V\)

Here, \(W_Q\), \(W_K\), and \(W_V\) are the learned weight matrices for the Query, Key, and Value, respectively.

Attention Weights Calculation: The attention weights signify the degree of relevance between each pair of tokens in the input sequence. Raw scores are computed by taking the dot product of each Query vector with every Key vector. The scores are then divided by the square root of the dimension of the Key vectors to stabilize training. Without this scaling, large dot products push the softmax into a saturated region where gradients become very small.

Softmax Normalization: The scaled dot products are passed through a softmax function to normalize them into a probability distribution over the input sequence. This normalization ensures that the attention weights sum up to 1, making them easier to interpret and train.

Weighted Sum: Finally, the Value vectors are weighted by their corresponding attention weights. This weighted sum represents the output of the attention mechanism, which captures the relevant information from the entire input sequence.
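Taken together, the steps above correspond to the standard scaled dot-product attention formula, written here with the same \(Q\), \(K\), and \(V\) as above and with \(d_k\) denoting the dimension of the Key vectors:

```latex
\[
\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
\]
```

The softmax is applied row by row, so each token's attention weights over the sequence sum to 1.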

The attention mechanism enables Transformers to handle sequential data effectively, capture long-range dependencies, and achieve state-of-the-art performance in various NLP tasks. By dynamically weighing the importance of different parts of the input sequence, the attention mechanism allows the model to focus on the most relevant information, leading to improved performance.
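The steps described above can be sketched in plain Python. This is a minimal single-head example for illustration, with no batching, masking, or multi-head logic. The helper names `matmul`, `softmax`, and `self_attention`, the tiny input, and the identity weight matrices are all chosen for this example rather than taken from any library.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(scores):
    """Normalize a list of scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over token embeddings x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_transposed = [list(col) for col in zip(*k)]
    scores = matmul(q, k_transposed)  # one score per (query, key) pair
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights  # weighted sum of Value vectors


# Three tokens with two-dimensional embeddings; identity projections keep Q = K = V = x.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row holds one token's attention weights over all three tokens. The score matrix has one entry per pair of tokens, which is the source of the quadratic cost discussed earlier.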

Related article

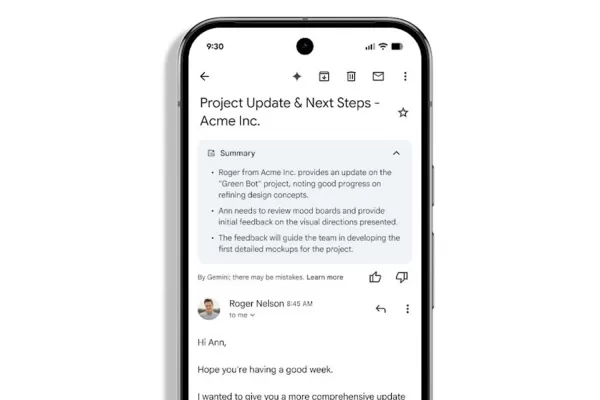

Gmail Rolls Out Automatic AI-Powered Email Summaries

Gemini-Powered Email Summaries Coming to Workspace Users

Google Workspace subscribers will notice Gemini's enhanced role in managing their inboxes as Gmail begins automatically generating summaries for complex email threads. These AI-created digests

Gmail Rolls Out Automatic AI-Powered Email Summaries

Gemini-Powered Email Summaries Coming to Workspace Users

Google Workspace subscribers will notice Gemini's enhanced role in managing their inboxes as Gmail begins automatically generating summaries for complex email threads. These AI-created digests

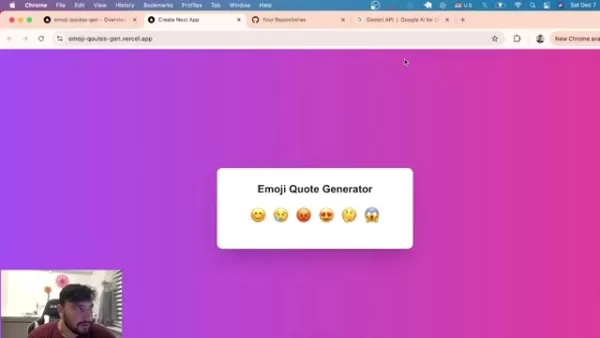

Build an AI-Powered Emoji Quote Generator Using Next.js and Gemini API

Build an AI-Powered Emoji Quote Generator with Next.jsThis hands-on tutorial walks through creating an engaging social media tool that combines web development with artificial intelligence. We'll build a dynamic application that generates inspiration

Build an AI-Powered Emoji Quote Generator Using Next.js and Gemini API

Build an AI-Powered Emoji Quote Generator with Next.jsThis hands-on tutorial walks through creating an engaging social media tool that combines web development with artificial intelligence. We'll build a dynamic application that generates inspiration

AI's Coming Wave: How Artificial Intelligence Will Transform Humanity

We find ourselves at a historic inflection point where artificial intelligence is transitioning from emerging technology to foundational force. Mustafa Suleyman's 'The Coming Wave' offers both visionary insight and urgent warning about AI's growing i

Comments (4)

0/200

AI's Coming Wave: How Artificial Intelligence Will Transform Humanity

We find ourselves at a historic inflection point where artificial intelligence is transitioning from emerging technology to foundational force. Mustafa Suleyman's 'The Coming Wave' offers both visionary insight and urgent warning about AI's growing i

Comments (4)


JoseDavis

September 9, 2025 at 10:30:37 PM EDT

This whole AI 'memory' business always makes me smile. When my cat forgets where it hid its toys, it's cute. When an AI forgets what it just read, it costs millions in R&D... Ironic, isn't it? 😅

BenLewis

August 10, 2025 at 9:00:59 AM EDT

This Titans Architecture sounds like a game-changer for AI memory! 🤯 Curious if it'll really solve the context window issue or just hype. Anyone tried it yet?

WillieAdams

August 1, 2025 at 9:47:34 AM EDT

Wow, this Titans Architecture sounds like a game-changer for AI memory! Can't wait to see how it tackles the context window issue. 🤯

LiamCarter

July 22, 2025 at 3:35:51 AM EDT

Wow, this Titans Architecture sounds like a game-changer for AI memory! I'm curious how it'll handle massive datasets without forgetting the plot. 😄 Could this finally make LLMs smarter than my goldfish?
