OpenAI Unveils Two Advanced Open-Weight AI Models

On Tuesday, OpenAI released two open-weight AI reasoning models that it says offer capabilities comparable to its o-series. Both are available as free downloads on Hugging Face, and OpenAI describes them as "top-performing" across multiple benchmarks for open models.

The models come in two sizes: the larger, more capable gpt-oss-120b, which can run on a single Nvidia GPU, and the lighter-weight gpt-oss-20b, which can run on a consumer laptop with 16GB of memory.
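The hardware claims can be sanity-checked with back-of-the-envelope arithmetic: the memory needed for the weights alone is roughly the parameter count times the bits per parameter, divided by 8 to get bytes. The article does not say how the weights are stored, so the sketch below compares an assumed 16-bit format with an assumed 4-bit quantized format. It uses the article's 117 billion total parameters for gpt-oss-120b, a nominal 20 billion for gpt-oss-20b (taken from the model name, an assumption), and a hypothetical 80 GB data-center GPU as the single-GPU budget. It ignores activations and other runtime overhead.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumptions: nominal 20B for gpt-oss-20b, the article's 117B total for gpt-oss-120b.
MODELS = {"gpt-oss-20b": 20e9, "gpt-oss-120b": 117e9}
# Budgets: the article's 16GB laptop and a hypothetical 80 GB single GPU.
BUDGET_GB = {"gpt-oss-20b": 16, "gpt-oss-120b": 80}

for name, params in MODELS.items():
    for bits in (16, 4):  # assumed storage formats, not confirmed by the article
        need = weight_memory_gb(params, bits)
        fits = "fits" if need <= BUDGET_GB[name] else "does not fit"
        print(f"{name} at {bits}-bit: ~{need:.1f} GB of weights, {fits} in {BUDGET_GB[name]} GB")
```

Under these assumptions, 16-bit weights would exceed both budgets while 4-bit weights would fit, which suggests some form of compression is plausibly involved; the sketch says nothing about how OpenAI actually packages the models.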

The launch is OpenAI's first open language model release since GPT-2, which came out more than five years ago.

In a briefing, OpenAI said its open models can send complex queries to more capable AI models in the cloud, as TechCrunch previously reported. If an open model cannot handle a task on its own, such as processing an image, developers can connect it to one of OpenAI's more capable closed models.
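The hand-off described here can be pictured as a simple router. The sketch below is hypothetical: `Request`, `route`, and the two stub handlers are illustrative names, not an OpenAI API. It keeps text-only prompts on a locally running open model and escalates requests that carry an image to a hosted closed model, since the open models are text-only.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Request:
    prompt: str
    image: Optional[bytes] = None  # the open models are text-only


Handler = Callable[[Request], str]


def route(request: Request, local_model: Handler, cloud_model: Handler) -> Tuple[str, str]:
    """Hypothetical router: text stays local, image tasks go to a hosted model."""
    if request.image is not None:
        return "cloud", cloud_model(request)
    return "local", local_model(request)


def local_stub(request: Request) -> str:
    # Stand-in for a locally served open-weight model.
    return f"local answer to {request.prompt!r}"


def cloud_stub(request: Request) -> str:
    # Stand-in for a call to a more capable closed model in the cloud.
    return f"cloud answer to {request.prompt!r} ({len(request.image)} image bytes)"


print(route(Request("Summarize this paragraph."), local_stub, cloud_stub))
print(route(Request("What is in this photo?", image=b"\x89PNG"), local_stub, cloud_stub))
```

A real integration might also escalate when the local model's answer fails some quality check; the modality test is simply the easiest trigger to illustrate.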

OpenAI open-sourced AI models in its early days, but it has since largely shifted to proprietary development, building a thriving business by selling API access to its models to enterprises and developers.

In January, CEO Sam Altman acknowledged that OpenAI may have misstepped by not prioritizing open-source technologies. The company now faces stiff competition from Chinese AI labs like DeepSeek, Alibaba’s Qwen, and Moonshot AI, which have gained traction with their highly capable open models. (Meta’s Llama models, once leaders in the open model space, have lagged behind in the past year.)

In July, the Trump Administration urged U.S. AI developers to open-source more of their technology to promote the global adoption of AI aligned with American values.

With gpt-oss, OpenAI aims to win over developers and align itself with the Trump Administration's push, as both developers and the Administration have watched Chinese AI labs rise in the open-source arena.

“Since our founding in 2015, OpenAI’s mission has been to advance AGI for the benefit of all humanity,” said CEO Sam Altman in a statement to TechCrunch. “We’re thrilled to see the world building on an open AI framework rooted in U.S. democratic values, freely accessible, and widely beneficial.”

Model Performance Overview

OpenAI designed its open models to lead among open-weight AI systems, and the company claims they do.

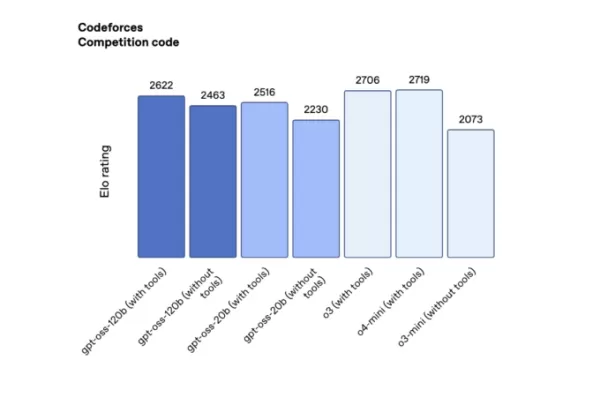

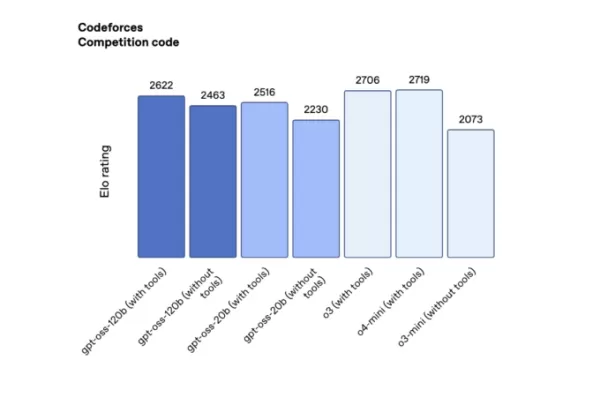

In competitive coding tests on Codeforces (with tools), gpt-oss-120b scored 2622 and gpt-oss-20b scored 2516, surpassing DeepSeek’s R1 but trailing behind o3 and o4-mini.

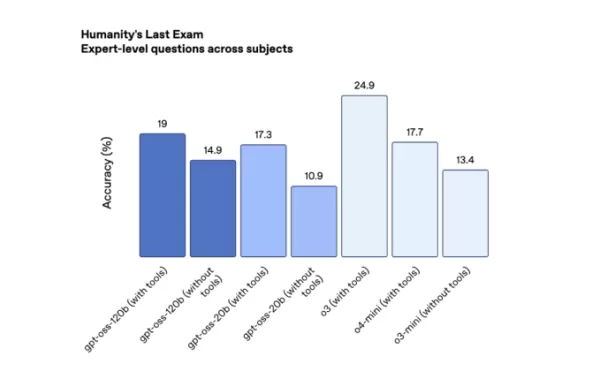

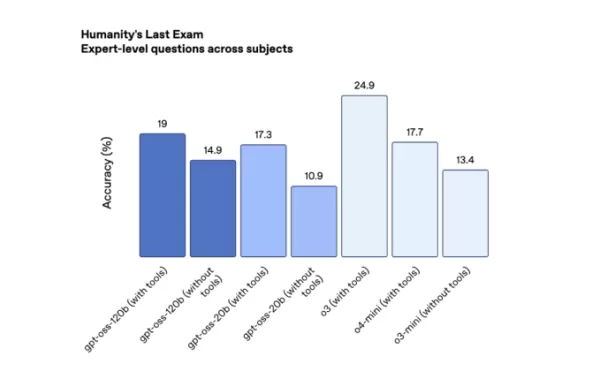

OpenAI’s open model performance on Codeforces (credit: OpenAI).

On Humanity’s Last Exam (HLE), a challenging crowdsourced test with questions spanning many subjects, gpt-oss-120b and gpt-oss-20b scored 19% and 17.3%, respectively (with tools), outperforming leading open models from DeepSeek and Qwen but falling short of o3.

OpenAI’s open model performance on HLE (credit: OpenAI).

Notably, OpenAI’s open models hallucinate significantly more often than its latest reasoning models, o3 and o4-mini.

Hallucinations have become more pronounced in OpenAI’s recent AI reasoning models, with the company admitting it is still investigating the cause. In a white paper, OpenAI noted that “smaller models, with less world knowledge, are expected to hallucinate more than larger frontier models.”

On PersonQA, OpenAI’s in-house benchmark for measuring the accuracy of a model’s knowledge about people, gpt-oss-120b and gpt-oss-20b hallucinated in 49% and 53% of responses, respectively. That is more than triple the rate of OpenAI’s o1 model (16%) and higher than that of o4-mini (36%).

Training the New Models

OpenAI says its open models were developed using techniques similar to those behind its proprietary models. Each uses a mixture-of-experts (MoE) architecture, which activates only a fraction of its parameters for each token to run more efficiently. For gpt-oss-120b, which has 117 billion total parameters, only 5.1 billion are active per token.
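The MoE idea can be illustrated with a toy routing sketch. It is not gpt-oss's actual implementation: the four "experts" below are hypothetical functions that just scale their input, the gate weights are made-up numbers, and k=2 is an arbitrary choice. It shows a gate scoring every expert, only the top-k experts actually running, and their outputs being blended with softmax weights; the last lines apply the article's 5.1-billion-of-117-billion figure.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, k=2):
    """Toy mixture-of-experts layer: route x to the top-k experts only.

    gate_weights[i] is a hypothetical gate vector for expert i; its dot
    product with x is that expert's routing score (logit).
    """
    logits = [sum(w * v for w, v in zip(gate, x)) for gate in gate_weights]
    # Keep the k best-scoring experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    mix = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for weight, i in zip(mix, top):
        y = experts[i](x)  # only the selected experts do any work
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, top, mix


# Hypothetical experts: expert i multiplies its input by (i + 1).
experts = [(lambda v, s=i + 1: [s * e for e in v]) for i in range(4)]
gate_weights = [[0.1, 0.0], [0.9, 0.0], [0.3, 0.0], [0.0, 2.0]]
x = [1.0, 0.5]

out, top, mix = moe_forward(x, gate_weights, experts, k=2)
print("experts used:", top, "mixing weights:", [round(w, 3) for w in mix])

# The article's figures for gpt-oss-120b: 5.1B active out of 117B total parameters.
active_fraction = 5.1 / 117
print(f"active per token: {active_fraction:.1%}")
```

In transformer MoE models, the experts are neural network layers and routing is decided separately for each token, which is why active parameters are counted per token.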

The open models were trained using high-compute reinforcement learning (RL), a post-training process that uses large clusters of Nvidia GPUs to refine a model's decision-making in simulated environments. OpenAI used the same approach to train its o-series, and like those models, the open models use a chain-of-thought process, spending extra time and compute to work through their answers.

As a result of this training, the open models excel at powering AI agents and can call tools such as web search or Python code execution as part of their chain-of-thought. However, the models are text-only: unlike some of OpenAI's other models, they cannot process or generate images or audio.
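The tool-use pattern described here, where a model pauses its reasoning to call a tool and then continues with the result, can be sketched as a simple loop. Everything below is hypothetical: `scripted_model` stands in for a real gpt-oss model and always makes exactly one tool call before answering, `web_search` is an offline stub, and `run_python` only evaluates basic arithmetic rather than executing arbitrary code. The example has the "model" check the article's PersonQA comparison (49% for gpt-oss-120b versus 16% for o1).

```python
import ast
import operator
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple, Union


@dataclass
class ToolCall:
    name: str
    argument: str


@dataclass
class FinalAnswer:
    text: str


_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def run_python(expr: str) -> str:
    """Stand-in for a sandboxed code tool: evaluates + - * / on numbers only."""
    def ev(node):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError(f"unsupported expression: {expr!r}")
    return str(ev(ast.parse(expr, mode="eval")))


def web_search(query: str) -> str:
    """Hypothetical offline stub; a real agent would call a search service here."""
    return f"no results for {query!r} (offline sketch)"


TOOLS: Dict[str, Callable[[str], str]] = {"python": run_python, "web_search": web_search}
Step = Union[ToolCall, FinalAnswer]


def scripted_model(transcript: List[str]) -> Step:
    """Hypothetical stand-in for a model: one tool call, then a final answer."""
    results = [m for m in transcript if m.startswith("tool:")]
    if not results:
        return ToolCall("python", "49 / 16")
    ratio = float(results[-1][len("tool:"):])
    return FinalAnswer(f"gpt-oss-120b hallucinates {ratio:.2f}x as often as o1 on PersonQA.")


def run_agent(model: Callable[[List[str]], Step], prompt: str,
              max_steps: int = 5) -> Tuple[str, List[str]]:
    """Alternate between model steps and tool calls until the model answers."""
    transcript = [f"user:{prompt}"]
    for _ in range(max_steps):
        step = model(transcript)
        if isinstance(step, FinalAnswer):
            return step.text, transcript
        transcript.append(f"call:{step.name}({step.argument})")
        transcript.append(f"tool:{TOOLS[step.name](step.argument)}")
    raise RuntimeError("agent did not finish within max_steps")


answer, transcript = run_agent(scripted_model, "How does gpt-oss-120b compare with o1 on PersonQA?")
print(answer)
```

In a real deployment the model itself would choose which tool to call and with what arguments, and a code tool would run inside a proper sandbox; the restricted arithmetic evaluator here simply avoids executing arbitrary strings.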

The gpt-oss-120b and gpt-oss-20b models are released under the Apache 2.0 license, allowing enterprises to monetize them without fees or permission from OpenAI.

Unlike fully open-source models from labs like AI2, OpenAI will not disclose the training data for its open models, a decision likely influenced by ongoing lawsuits alleging improper use of copyrighted material in AI training.

OpenAI postponed the release of its open models multiple times to address safety concerns. Beyond standard safety protocols, the company evaluated whether malicious actors could fine-tune gpt-oss for harmful purposes, such as cyberattacks or creating biological or chemical weapons.

Testing by OpenAI and external evaluators found that gpt-oss might marginally increase capabilities in the biological domain, but did not find evidence that it reaches the company’s “high capability” threshold for danger in these areas, even after fine-tuning.

While OpenAI’s models appear to lead among open models for now, developers are also awaiting the release of DeepSeek’s R2 and a new open model from Meta’s superintelligence lab.

