Meta Defends Llama 4 Release, Cites Bugs as Cause of Mixed Quality Reports

Over the weekend, Meta, the parent company of Facebook, Instagram, WhatsApp, and Quest VR, surprised the industry by unveiling its latest AI language model family, Llama 4. The company introduced three new versions, each promising enhanced capabilities thanks to a "Mixture-of-Experts" architecture and a new training approach called MetaP, which involves fixed hyperparameters. All three models also come with expansive context windows, allowing them to process more information in a single interaction.

Despite the excitement around the release, the AI community's reaction has been lukewarm at best. On Saturday, Meta made two of these models, Llama 4 Scout and Llama 4 Maverick, available for download and use.

Llama 4 Sparks Confusion and Criticism Among AI Users

An unverified post on the 1point3acres forum, a popular Chinese-language community in North America, found its way to the r/LocalLlama subreddit on Reddit. The post, allegedly from a researcher at Meta’s GenAI organization, claimed that Llama 4 performed poorly on third-party benchmarks in internal testing. It alleged that Meta's leadership had manipulated the results by blending test sets into post-training to hit various metrics and present a favorable outcome. The post's authenticity was met with skepticism, and Meta has yet to respond to inquiries from VentureBeat.

Doubts about Llama 4's performance did not stop there. On X, user @cto_junior expressed disbelief at an independent test in which Llama 4 Maverick scored just 16% on the aider polyglot benchmark, which tests coding tasks. That score is significantly lower than those of older, similarly sized models such as DeepSeek V3 and Claude 3.7 Sonnet.

AI PhD and author Andriy Burkov also took to X to question Llama 4 Scout's advertised 10 million-token context window, calling it "virtual" because the model was not trained on prompts longer than 256k tokens. He warned that sending longer prompts would likely produce low-quality output.

On the r/LocalLlama subreddit, user Dr_Karminski shared disappointment with Llama 4, saying it performed poorly compared with DeepSeek’s non-reasoning V3 model on tasks such as simulating ball movements within a heptagon.

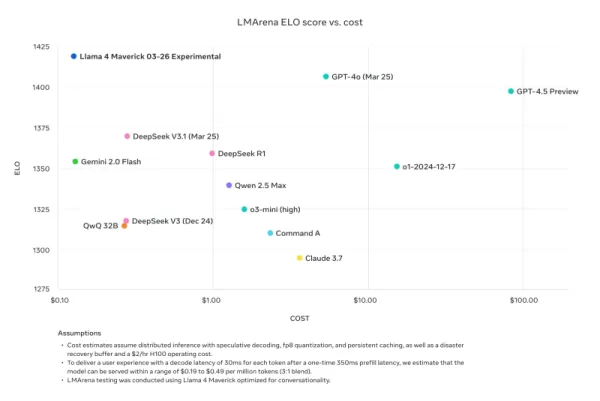

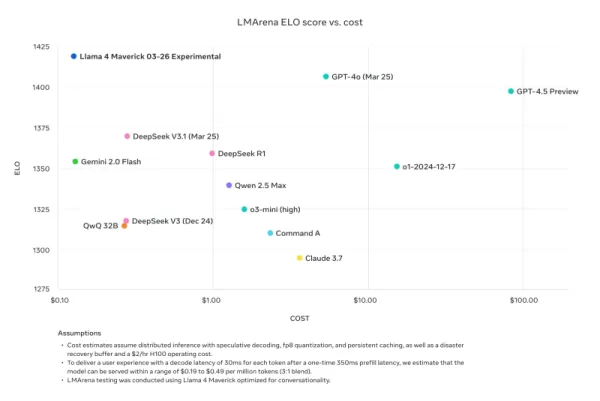

Nathan Lambert, a former Meta researcher and current Senior Research Scientist at AI2, criticized Meta's benchmark comparisons on his Interconnects Substack blog. He pointed out that the Llama 4 Maverick model used in Meta's promotional materials was not the one publicly released but a different version optimized for conversationality. Lambert wrote, "Sneaky. The results below are fake, and it is a major slight to Meta’s community to not release the model they used to create their major marketing push." He added that while the promotional model was "tanking the technical reputation of the release because its character is juvenile," the actual model available on other platforms was "quite smart and has a reasonable tone."

Meta Responds, Denying 'Training on Test Sets' and Citing Bugs in Implementation Due to Fast Rollout

In response to the criticism and accusations, Meta's VP and Head of GenAI, Ahmad Al-Dahle, took to X to address the concerns. He expressed enthusiasm for the community's engagement with Llama 4 but acknowledged reports of inconsistent quality across different services. He attributed these issues to the rapid rollout and the time needed for public implementations to stabilize. Al-Dahle firmly denied the allegations of training on test sets, emphasizing that the variable quality was due to implementation bugs rather than any misconduct. He reaffirmed Meta's belief that the Llama 4 models are a significant advancement and the company's commitment to working with the community to realize their potential.

However, the response did little to quell the community's frustrations, with many still reporting poor performance and demanding more technical documentation about the models' training processes. This release has faced more issues than previous Llama versions, raising questions about its development and rollout.

The timing of this release is notable, as it follows the departure of Joelle Pineau, Meta's VP of Research, who announced her exit on LinkedIn last week with gratitude for her time at the company. Pineau had also promoted the Llama 4 model family over the weekend.

As Llama 4 continues to be adopted by other inference providers with mixed results, it's clear that the initial release has not been the success Meta might have hoped for. The upcoming Meta LlamaCon on April 29, which will be the first gathering for third-party developers of the model family, is likely to be a hotbed of discussion and debate. We'll be keeping a close eye on developments, so stay tuned.

Comments (8)

JohnWilson

August 25, 2025 at 9:01:18 PM EDT

Meta's Llama 4 drop was wild! Three versions with that fancy Mixture-of-Experts setup? Sounds powerful, but those bugs they mentioned make me wonder if it’s ready for prime time. Anyone tried it yet? 🧐

HarryRoberts

August 21, 2025 at 5:01:34 PM EDT

Wow, Llama 4 sounds like a beast with that Mixture-of-Experts setup! But bugs causing mixed quality? Kinda feels like Meta rushed this out to beat the competition. Hope they patch it up soon! 🦙

ArthurJones

August 12, 2025 at 7:00:59 AM EDT

Wow, Llama 4 sounds like a beast with that Mixture-of-Experts setup! But bugs causing mixed quality? That’s a bit concerning for a big player like Meta. Hope they iron it out soon, I’m curious to see how it stacks up against other models! 🦙

CharlesYoung

April 24, 2025 at 3:47:05 PM EDT

Llama 4 looks like a serious step forward with its Mixture-of-Experts architecture! 😎 But bugs, seriously? This smells like a rushed release to keep pace with the other giants. Curious to see how it does after the fixes.

AlbertLee

April 24, 2025 at 7:01:02 AM EDT

Llama 4 with three new versions! 😲 The Mixture-of-Experts architecture sounds awesome, but the bugs give me a bad feeling. Meta always wants to be in the lead, doesn't it? I hope they polish it soon.

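HarryLewis

April 23, 2025 at 7:06:55 PM EDT

The Llama 4 announcement really surprised me! 😮 Three versions is impressive, but the quality being all over the place because of bugs... That makes me a little uneasy. I'm looking forward to AI's progress, but what are they going to do about the ethics side?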
