AI-Generated Images Spark Controversy Over Election Integrity

The advent of artificial intelligence has ushered in a wave of technological advancements, but it's also thrown a wrench into our ability to tell fact from fiction. Lately, AI-generated images circulating on social media have sparked worries about their potential to skew political conversations and threaten the integrity of elections. It's vital that we grasp the full scope of these technologies to keep our public discussions informed and maintain trust in what we see.

The Controversy of AI-Generated Political Images

The Rise of AI-Generated Content

Artificial intelligence has reached a point where it can whip up images, videos, and audio that look and sound incredibly real. It's a cool feat, no doubt, but it's also a bit scary, especially when it comes to politics and elections. The power to craft convincing yet completely made-up content can throw the public off track and shake our faith in the news we consume. Social media's ability to spread this content like wildfire only makes things worse, making it a real headache to correct the record and stop misinformation in its tracks.

As AI tools become more user-friendly, it's easier than ever for anyone with a bad agenda to mess with public opinion. These AI creations, often called synthetic media or deepfakes, challenge our ability to think critically and stay media-savvy. The potential to twist public perception and erode trust in our institutions is a big deal, pushing us to take proactive steps and set ethical boundaries to keep the risks in check.

Political campaigns can use this tech to fake endorsements or smear opponents, swaying how people vote. Without clear signs or tools to spot these fakes, everyday folks might struggle to tell what's real and what's not, leading to choices based on false info. This kind of widespread trickery could shake the very foundations of democracy, making it urgent that we find both tech and policy solutions to tackle it.

So, how do we protect ourselves from AI being misused in political campaigns and make sure voters get the real deal? It's going to take a mix of tech advancements, teaching people to be media-smart, and solid policy frameworks. Using AI-generated content brings up big questions about honesty, openness, and responsibility, highlighting the need for everyone to step up and defend democratic values in the AI era.

Trump's Use of AI-Generated Images

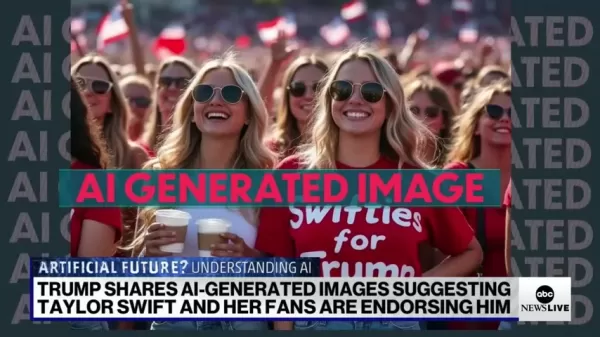

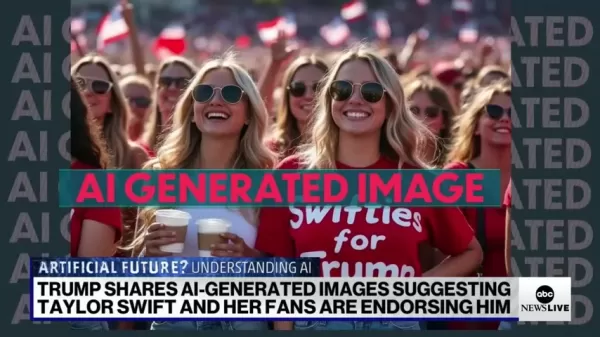

Former President Trump stirred up a storm when he posted AI-generated images on social media, sparking a heated debate about the ethics and fallout of using such content in politics.

By sharing images that made it look as though Taylor Swift and her fans were backing him, Trump drew criticism for manipulating public perception. Some of these images were real, while others were AI-made, causing confusion and raising questions about how transparent political messaging should be.

The images showed women in 'Swifties for Trump' shirts, suggesting support from a group that might not actually align with his politics. This move brings up ethical questions about whether it's right to use AI to fake endorsements or twist public sentiment. Not clearly marking some images as AI-made only muddies the waters, potentially tricking people who don't know they're looking at something artificial.

While some images were indeed AI-generated, others were genuine photos of Trump supporters. Mixing real and fake like this can make it tough for viewers to figure out what's true, blurring the lines between honest and deceptive messaging. This mix-up can erode trust and sow doubt in public discussions, as people lose confidence in their ability to spot the real from the fake.

Sharing these images could also be seen as a strategic move to win over specific voter groups, like Taylor Swift fans, whether they actually support him or not. By creating the illusion of widespread support, even if it's artificially generated, political campaigns might sway undecided voters or fire up their base. This shows how AI can be a powerful tool for persuasion, capable of shaping public opinion and influencing election results.

When big political figures use AI-generated content, it's a wake-up call for the public to be more vigilant and media-savvy. As AI tech keeps advancing, it's crucial for people to think critically about the content they see online and seek out trustworthy sources. Media outlets, schools, and government agencies all have a part to play in boosting critical thinking and raising awareness about the dangers of manipulated media.

Confusion and Misinformation

Sharing AI-generated images and other manipulated content online is fueling a growing problem of confusion and misinformation.

When people are hit with a mix of real and fake info, it's tough to figure out what's true. This mess can erode trust in the media, institutions, and even personal relationships, making it hard to reach a consensus on important issues.

One of the biggest hurdles in fighting misinformation is how fast it spreads on social media. False or misleading content can go viral in a flash, reaching millions before fact-checkers can even blink. This rapid spread makes it tough to stop the damage caused by misinformation, as it can take root in people's minds and sway their opinions.

Another challenge is how sophisticated modern manipulation techniques have become. AI-generated content, deepfakes, and other synthetic media are getting so good that even experts struggle to spot them. This means traditional fact-checking and media analysis aren't enough anymore, pushing us to develop new tools and methods to fight back.

Making matters worse are the echo chambers and filter bubbles online. Social media algorithms often show us content that matches our existing views, creating echo chambers where we're mostly exposed to similar perspectives. This can reinforce our biases and make it harder to engage with different viewpoints, leading to more polarization and division.

To tackle confusion and misinformation, we need a multi-faceted approach that includes tech solutions, media literacy education, and policy changes. Social media platforms should invest in AI-powered tools to spot and flag manipulated content. Schools should teach media literacy, showing students how to critically evaluate sources and spot manipulation techniques. Policymakers should think about regulations that hold people accountable for spreading misinformation while protecting free speech. Only by working together can we hope to curb the spread of misinformation and foster a more informed and engaged public.

Examining Real Versus AI-Generated Imagery

The Challenge of Distinguishing Authenticity

Telling real images from AI-generated ones is getting trickier by the day, thanks to the sophistication of artificial intelligence. AI can now create images that look so real, it's hard for the average person to tell them apart from genuine photos. This ability has big implications for how much we can trust what we see, especially in sensitive areas like news and political communications.

One reason it's so hard to spot the difference is how fast AI algorithms are improving. These algorithms are getting really good at copying the textures, lighting, and details of real scenes, making images that are almost impossible to tell from the real thing. Plus, AI can create images of people, objects, and events that never existed, crafting convincing yet entirely made-up realities.

Another factor is that many people don't know much about AI manipulation techniques. Without a basic understanding of what AI can do, it's easier for people to be fooled. If you don't know how AI works, it's tough to critically evaluate the images you see online.

The lack of clear labeling and transparency makes things even more complicated. Social media and media outlets often don't mark AI-generated images, leaving it up to viewers to figure out what's real. This lack of openness makes it easier for deceptive content to spread, as people might assume unlabeled images are genuine.

To tackle these challenges, we need a multi-pronged approach that includes tech solutions, media literacy education, and policy changes. Tech solutions like AI-powered detection tools can help spot and flag manipulated images. Media literacy education can teach people how to critically evaluate sources and spot manipulation techniques. Policy changes can promote more transparency and accountability in using AI-generated content.

By combining these efforts, we can better protect ourselves from the deceptive use of AI in visual media and build more trust in the images that shape our public discussions. Developing and widely adopting these tools is crucial for keeping visual media honest and ensuring people can make informed decisions based on reliable information.

Strategies to Discern AI-Generated Content

Step-by-Step Guide for Visual Verification

With AI-generated content getting more sophisticated, it's important for us to be on our toes and use specific methods to check the authenticity of what we see. Here's a step-by-step guide to help you out:

- Examine Image Details: Take a close look at the image for anything that seems off. AI-generated images often have subtle flaws or inconsistencies. Look for:

  - Unnatural Textures: Textures that look too smooth or lack the detail you'd expect.

  - Lighting Anomalies: Lighting that doesn't fit the scene or has inconsistent shadows.

  - Background Distortions: Background elements that seem blurred or don't make sense.

- Reverse Image Search: Use search engines like Google Images, TinEye, or Yandex Images to do a reverse image search. This can help you see where the image has appeared before, whether it has been used elsewhere in a different context, and whether others have already questioned it.

- Check the Source: Make sure the source that published the image is credible. Look for reputable news outlets, official websites, or trusted organizations. Be cautious of anonymous sources or sites known for spreading misinformation.

- Cross-Reference Information: Compare the image and any accompanying info with other sources to confirm its accuracy. Check multiple news outlets, fact-checking websites, and experts to make sure everything lines up.

- Use AI Detection Tools: Run the image through AI detection tools that analyze it for signs of AI generation. These tools are getting better at giving insights into whether an image might be manipulated, but they can still get it wrong, so treat their verdict as one clue rather than proof.

- Consult Experts: If you're still not sure, talk to experts in digital forensics or media analysis. They have the specialized knowledge and tools to spot subtle signs of manipulation.

By following these steps, you can get better at spotting AI-generated content and protect yourself from misinformation. Staying informed and a bit skeptical is key in today's digital world, where deceptive images can spread fast and influence public opinion. A skeptical and informed public is crucial for keeping trust and credibility in the age of AI.
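For readers curious about what happens under the hood in the reverse image search step, here's a minimal sketch of one common idea behind near-duplicate matching: an "average hash" that turns a tiny grayscale thumbnail into a bit fingerprint, so a re-posted or lightly edited copy ends up with a nearly identical fingerprint. This is an illustration only, not how Google Images, TinEye, or Yandex Images actually work. The 4x4 pixel grids and the names `average_hash` and `hamming_distance` are made up for the example, and a real tool would first decode and shrink actual image files.

```python
from typing import List

Grid = List[List[int]]  # grayscale pixel values, 0-255


def average_hash(pixels: Grid) -> int:
    """Return a bit fingerprint: 1 where a pixel is brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


# Hypothetical 4x4 thumbnails (assumption: already decoded and downscaled).
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [10, 20, 200, 210],
    [15, 25, 205, 215],
]
# The same picture, slightly brightened, as might happen after a filter.
reposted = [[min(255, v + 8) for v in row] for row in original]
# A different picture: bright on top, dark below.
unrelated = [
    [200, 210, 205, 215],
    [200, 210, 205, 215],
    [10, 20, 15, 25],
    [10, 20, 15, 25],
]

print(hamming_distance(average_hash(original), average_hash(reposted)))   # 0
print(hamming_distance(average_hash(original), average_hash(unrelated)))  # 8
```

A small distance suggests two files are copies of the same picture, which is how an earlier appearance of a viral image can be traced. It says nothing on its own about whether the picture was AI-generated in the first place.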

Evaluating AI-Generated Images: Pros and Cons

Pros

- Enhanced Creativity: AI can spark creativity by generating new image combinations and styles.

- Content Creation Efficiency: AI makes content creation faster and more efficient, saving time and resources.

- Accessibility: AI makes image generation accessible to everyone, not just professionals.

- Customization: AI allows for highly customized image generation to meet specific needs.

Cons

- Misinformation Risk: AI can make it easier to create and spread misleading images.

- Authenticity Concerns: AI blurs the line between real and fake, making it harder to trust what we see.

- Ethical Issues: AI raises ethical questions about manipulation, transparency, and accountability.

- Job Displacement: AI might take jobs away from human artists and content creators as automation increases.

Frequently Asked Questions

How can I identify AI-generated images effectively?

To spot AI-generated images, you need to look closely at the details and use the tools at your disposal. Check for inconsistencies in lighting, textures, and background elements. Use reverse image searches to see if the image has been used elsewhere. AI detection tools can give you insights into whether an image might be manipulated. Cross-referencing information with multiple sources and consulting with experts can help you confirm whether the image is authentic.

What are the potential solutions to combat the spread of AI-generated misinformation?

Fighting AI-generated misinformation requires a multi-faceted approach. We need tech solutions, media literacy education, and policy changes. Social media platforms should invest in AI detection tools, while schools should teach media literacy. Policymakers should consider regulations that promote transparency and accountability in using AI-generated content.

How do AI-generated images impact election integrity?

AI-generated images can undermine election integrity by spreading misinformation and manipulating public opinion. Fake endorsements, negative portrayals of candidates, and other deceptive content can sway voter sentiment and influence election outcomes. Mixing real and fake content erodes trust and introduces uncertainty into public discussions.

Why is labeling AI-generated content important?

Labeling AI-generated content is crucial for transparency and accountability. Without clear labels, people might unknowingly consume manipulated media, leading to misinformed decisions. Labeling helps viewers critically evaluate the content and make informed judgments about its accuracy and validity.

Related Questions

What role do social media platforms play in addressing AI-generated misinformation?

Social media platforms have a big role in tackling AI-generated misinformation. They need to invest in AI detection tools, implement clear labeling policies, and promote media literacy among their users. By taking proactive steps to fight deceptive content, social media platforms can help protect election integrity and maintain public trust.

How can educational institutions promote media literacy and critical thinking skills?

Educational institutions can promote media literacy and critical thinking by building both into their curricula. Students should learn how to evaluate sources of information, spot manipulation techniques, and cross-reference information with multiple sources. By fostering critical thinking, schools can empower students to become informed and engaged citizens who are less likely to fall for misinformation.

What regulations or policies could help combat AI-generated misinformation without infringing on freedom of expression?

Fighting AI-generated misinformation while protecting free speech requires careful policy-making. Transparency requirements, like mandatory labeling of AI-generated content, can inform the public without restricting speech. Accountability measures, like holding people responsible for spreading known misinformation, can deter bad actors. Finding the right balance between regulation and free expression is key to maintaining a healthy and democratic public discourse.

Comments (1)

AndrewRamirez

August 20, 2025 at 5:01:22 PM EDT

These AI-generated images are unsettling! They look almost real, but that's a big problem for elections. How can we trust the news now? 😕
