OpenAI upgrades its transcription and voice-generating AI models

OpenAI is bringing new transcription and voice-generating AI models to its API, which the company says improve on its previous releases. The upgrades fit into OpenAI's broader "agentic" vision: building automated systems that can independently accomplish tasks on behalf of users. The definition of "agent" is contested, but OpenAI's Head of Product, Olivier Godement, described one interpretation as a chatbot that can speak with a business's customers.

"We're going to see more and more agents emerge in the coming months," Godement shared with TechCrunch during a briefing. "The overarching goal is to assist customers and developers in utilizing agents that are useful, accessible, and precise."

OpenAI says its new text-to-speech model, "gpt-4o-mini-tts," produces more lifelike, nuanced speech and is easier to steer than its previous speech-synthesis models. Developers can instruct the model in natural language, for example "speak like a mad scientist" or "use a serene voice, like a mindfulness teacher," to shape how the text is delivered.

Here’s a sample of a "true crime-style," weathered voice:

And here’s an example of a female "professional" voice:

Jeff Harris, a member of OpenAI's product team, emphasized to TechCrunch that the objective is to enable developers to customize both the voice "experience" and "context." "In various scenarios, you don't want a monotonous voice," Harris explained. "For instance, in a customer support setting where the voice needs to sound apologetic for a mistake, you can infuse that emotion into the voice. We strongly believe that developers and users want to control not just the content, but the manner of speech."
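For developers, the steering described above amounts to sending a style instruction alongside the text to be spoken. The following is a minimal sketch of what such a request body might look like. The field names (`model`, `input`, `voice`, `instructions`) and the voice name `coral` are assumptions based on OpenAI's public speech endpoint and should be checked against the current API reference. The helper `build_speech_request` is hypothetical, and nothing is sent over the network.

```python
import json


def build_speech_request(text: str, instructions: str, voice: str = "coral") -> dict:
    """Assemble a JSON-serializable request body for a text-to-speech call.

    Field names are assumptions about the speech endpoint, not confirmed
    by the article; verify them against the official API reference.
    """
    return {
        "model": "gpt-4o-mini-tts",    # model name as given in the article
        "input": text,                 # the words to be spoken
        "voice": voice,                # placeholder voice name (assumption)
        "instructions": instructions,  # natural-language style guidance
    }


payload = build_speech_request(
    text="We're sorry about the mix-up with your order.",
    instructions="Use a serene voice, like a mindfulness teacher.",
)
print(json.dumps(payload, indent=2))
```

Keeping the spoken text and the style instruction in separate fields mirrors Harris's point that developers want to control both what is said and how it is said.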

OpenAI's new speech-to-text models, "gpt-4o-transcribe" and "gpt-4o-mini-transcribe," effectively replace the company's older Whisper transcription model. OpenAI says they were trained on diverse, high-quality audio datasets and can better capture accented and varied speech, even in noisy environments. The company also says they are less prone to "hallucinations": Whisper was known to invent words or entire passages, inserting anything from racial commentary to fictitious medical treatments into transcripts.

"These models show significant improvement over Whisper in this regard," Harris noted. "Ensuring model accuracy is crucial for a dependable voice experience, and by accuracy, we mean the models correctly capture the spoken words without adding unvoiced content."

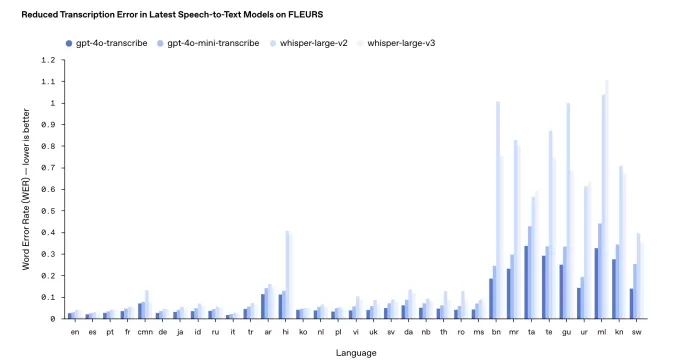

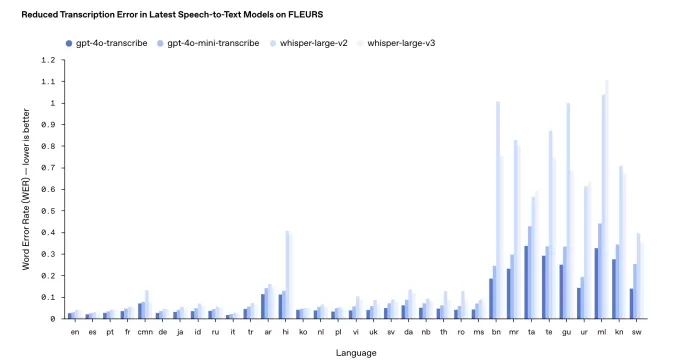

Accuracy still varies by language, however. According to OpenAI's internal benchmarks, gpt-4o-transcribe, the more accurate of the two transcription models, has a "word error rate" approaching 30% (out of 120%) for Indic and Dravidian languages such as Tamil, Telugu, Malayalam, and Kannada. That means roughly three out of every 10 words from the model will differ from a human transcription in these languages.

The results from OpenAI transcription benchmarking. Image Credits: OpenAI
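To make the "word error rate" figure concrete, here is a small sketch of how the metric is commonly computed: the word-level edit distance (substitutions, deletions, and insertions) between the model's output and a human reference, divided by the number of words in the reference. This is the standard textbook definition, not necessarily the exact procedure behind OpenAI's benchmarks, and the sample sentences are invented for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so
    # far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # turning i reference words into nothing costs i deletions
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in the output
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Invented example: a 10-word reference with two substituted words
# ("fox" -> "fax", "today" -> "tonight") and one dropped word ("the").
reference = "the quick brown fox jumps over the lazy dog today"
hypothesis = "the quick brown fax jumps over lazy dog tonight"

wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.0%}")  # three errors over ten reference words
```

Because insertions count as errors too, the rate can exceed 100% when the output contains many extra words.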

In a departure from its usual practice, OpenAI doesn't plan to make the new transcription models openly available. The company historically released new versions of Whisper under an MIT license, which permits commercial use. Harris said that gpt-4o-transcribe and gpt-4o-mini-transcribe are much larger than Whisper and therefore poor candidates for an open release.

"These models are too big to run on a typical laptop like Whisper could," Harris added. "When we release models openly, we want to do it thoughtfully, ensuring they're tailored for specific needs. We see end-user devices as a prime area for open-source models."

Updated March 20, 2025, 11:54 a.m. PT to clarify the language around word error rate and update the benchmark results chart with a more recent version.

Comments (32)

FrankMartínez

August 19, 2025 at 4:01:39 AM EDT

The new OpenAI models sound like a game-changer for voice tech! Can't wait to see how devs use this to make apps talk smoother than ever. 😎

BenHernández

July 23, 2025 at 4:50:48 AM EDT

Wow, OpenAI's new transcription and voice models sound like a game-changer! I'm curious how these 'agentic' systems will stack up against real-world tasks. Could they finally nail natural-sounding convos? 🤔

GeorgeTaylor

April 20, 2025 at 3:57:07 PM EDT

OpenAI's new transcription and voice generation models are a game changer! I'm using them on my podcast and the improvements are impressive. The only downside? They're a bit expensive, but if you can afford them, they're worth every penny! 🎙️💸

GregoryAllen

April 17, 2025 at 12:50:37 AM EDT

OpenAI's new transcription and voice models are a game changer! I've been using them for my podcast and the improvements are night and day. The only downside? They're a bit pricey, but if you can swing it, they're worth every penny! 🎙️💸

StevenAllen

April 17, 2025 at 12:38:26 AM EDT

OpenAI's new speech recognition and voice generation models are truly innovative! I'm using them on my podcast, and the improvement is noticeable. The downside is that they're a bit expensive, but if you can afford them, they're worth it! 🎙️💸

NicholasClark

April 16, 2025 at 1:54:41 AM EDT

OpenAI's new speech recognition and voice generation models are revolutionary! I use them for my podcast, and the improvement is dramatic. The only drawback is that they're a bit expensive, but if you can pay, they're well worth it! 🎙️💸
