AI 'Reasoning' Models Surge, Driving Up Benchmarking Costs

The Rising Costs of Benchmarking AI Reasoning Models

AI labs like OpenAI have been touting their advanced "reasoning" AI models, which are designed to work through complex problems step by step. The labs say these models outperform their non-reasoning counterparts in specific fields such as physics. However, they are also far more expensive to benchmark, which makes it hard to verify their capabilities independently.

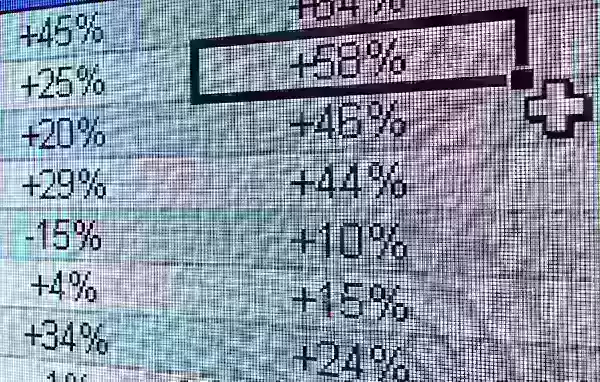

According to data from Artificial Analysis, a third-party AI testing firm, evaluating OpenAI's o1 reasoning model across seven popular AI benchmarks cost $2,767.05. The benchmarks are MMLU-Pro, GPQA Diamond, Humanity’s Last Exam, LiveCodeBench, SciCode, AIME 2024, and MATH-500. Benchmarking Anthropic's "hybrid" reasoning model, Claude 3.7 Sonnet, on the same tests cost $1,485.35, while OpenAI's o3-mini-high cost $344.59.

Not all reasoning models are equally expensive to test. For instance, Artificial Analysis spent only $141.22 evaluating OpenAI’s o1-mini. On average, however, reasoning models cost much more to evaluate. Artificial Analysis has spent around $5,200 to evaluate about a dozen reasoning models, which is more than double the $2,400 it spent analyzing over 80 non-reasoning models.
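As a rough illustration of that gap, the two totals can be turned into per-model averages. The sketch below assumes exactly 12 reasoning models and exactly 80 non-reasoning models, because the figures above give only "about a dozen" and "over 80"; the function name is illustrative and not something Artificial Analysis uses.

```python
def average_cost(total_usd: float, model_count: int) -> float:
    """Return the mean evaluation cost per model in US dollars."""
    if model_count <= 0:
        raise ValueError("model_count must be positive")
    return total_usd / model_count


# Assumed counts: the article says "about a dozen" and "over 80".
reasoning_avg = average_cost(5200, 12)
non_reasoning_avg = average_cost(2400, 80)
ratio = reasoning_avg / non_reasoning_avg

print(f"reasoning: ${reasoning_avg:.2f} per model")
print(f"non-reasoning: ${non_reasoning_avg:.2f} per model")
print(f"ratio: {ratio:.1f}x")
```

Under those assumed counts, a reasoning model costs roughly $433 to evaluate on average versus about $30 for a non-reasoning model, a ratio of around 14 to 1. The true ratio depends on the exact model counts, which are not given.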

For comparison, the non-reasoning GPT-4o model from OpenAI, released in May 2024, cost Artificial Analysis just $108.85 to evaluate, while Claude 3.6 Sonnet, the non-reasoning predecessor to Claude 3.7 Sonnet, cost $81.41.

George Cameron, co-founder of Artificial Analysis, told TechCrunch that the organization plans to increase its benchmarking budget as more AI labs develop reasoning models. "At Artificial Analysis, we run hundreds of evaluations monthly and devote a significant budget to these," Cameron said. "We are planning for this spend to increase as models are more frequently released."

Artificial Analysis isn't alone in facing these escalating costs. Ross Taylor, CEO of AI startup General Reasoning, recently spent $580 to evaluate Claude 3.7 Sonnet on around 3,700 unique prompts. Taylor estimates that a single run-through of MMLU-Pro, a benchmark designed to test language comprehension, would cost more than $1,800.
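Taylor's figures imply a per-prompt cost that can be scaled up to a larger question set. The sketch below is one plausible way to arrive at an estimate of that size, not Taylor's stated method. The question count of 12,000 for MMLU-Pro is an assumption that does not come from the article, and the calculation assumes every prompt costs the same on average.

```python
def cost_per_prompt(total_usd: float, prompt_count: int) -> float:
    """Average cost in US dollars of evaluating a single prompt."""
    return total_usd / prompt_count


def projected_cost(per_prompt_usd: float, question_count: int) -> float:
    """Scale a per-prompt cost linearly to a larger question set."""
    return per_prompt_usd * question_count


# From the article: $580 for around 3,700 prompts.
per_prompt = cost_per_prompt(580, 3700)

# Assumption (not from the article): MMLU-Pro has about 12,000 questions.
ASSUMED_MMLU_PRO_QUESTIONS = 12_000
estimate = projected_cost(per_prompt, ASSUMED_MMLU_PRO_QUESTIONS)

print(f"per prompt: ${per_prompt:.3f}")
print(f"projected MMLU-Pro run: ${estimate:,.2f}")
```

With these inputs the per-prompt cost is about $0.157 and the projected run comes to roughly $1,880, which is in line with an estimate of more than $1,800.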

Taylor highlighted a growing concern in a recent post on X, stating, "We’re moving to a world where a lab reports x% on a benchmark where they spend y amount of compute, but where resources for academics are << y. No one is going to be able to reproduce the results."

Why Are Reasoning Models So Expensive to Benchmark?

The primary reason reasoning models cost so much to test is that they generate a large number of tokens. Tokens are units of raw text; for example, the word "fantastic" might be broken down into "fan," "tas," and "tic." According to Artificial Analysis, OpenAI's o1 model generated over 44 million tokens during the firm's tests, roughly eight times the number generated by the non-reasoning GPT-4o model.

Most AI companies charge for model usage by the token, so costs add up quickly. In addition, modern benchmarks tend to elicit many tokens because their questions involve complex, multi-step tasks. Jean-Stanislas Denain, a senior researcher at Epoch AI, explained to TechCrunch, "Today’s benchmarks are more complex even though the number of questions per benchmark has overall decreased. They often attempt to evaluate models’ ability to do real-world tasks, such as write and execute code, browse the internet, and use computers."

Denain also pointed out that the cost per token for the most expensive models has been rising. For example, Anthropic's Claude 3 Opus cost $75 per million output tokens when it was released in March 2024. OpenAI's GPT-4.5 and o1-pro, both launched in early 2025, cost $150 and $600 per million output tokens, respectively.
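Per-token billing reduces to simple arithmetic: output tokens divided by one million, multiplied by the price per million. The sketch below applies the three prices quoted above to a hypothetical workload of 44 million output tokens, borrowed from the o1 token count mentioned earlier purely for scale. It ignores input-token charges, and none of these three models is reported to have generated that many tokens.

```python
# Prices per million output tokens, as quoted in the article (USD).
PRICE_PER_MILLION_OUTPUT = {
    "Claude 3 Opus": 75.0,
    "GPT-4.5": 150.0,
    "o1-pro": 600.0,
}

# Hypothetical workload, chosen only for illustration.
HYPOTHETICAL_OUTPUT_TOKENS = 44_000_000


def output_cost(output_tokens: int, price_per_million: float) -> float:
    """Cost in USD of generating `output_tokens` at the given price."""
    return output_tokens / 1_000_000 * price_per_million


for model, price in PRICE_PER_MILLION_OUTPUT.items():
    cost = output_cost(HYPOTHETICAL_OUTPUT_TOKENS, price)
    print(f"{model}: ${cost:,.2f}")
```

At that volume the same benchmark run would cost $3,300, $6,600, or $26,400 depending on the price tier, which shows how a higher per-token price compounds with a token-heavy reasoning model.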

Despite the increasing cost per token, Denain noted, "Since models have gotten better over time, it’s still true that the cost to reach a given level of performance has greatly decreased over time. But if you want to evaluate the best largest models at any point in time, you’re still paying more."

The Integrity of Benchmarking

Many AI labs, including OpenAI, offer free or subsidized access to their models for benchmarking purposes. However, this practice raises concerns about the integrity of the evaluation process. Even without evidence of manipulation, the mere suggestion of an AI lab's involvement can cast doubt on the objectivity of the results.

Ross Taylor expressed this concern on X, asking, "From a scientific point of view, if you publish a result that no one can replicate with the same model, is it even science anymore? (Was it ever science, lol)"

The high costs and potential biases in AI benchmarking underscore the challenges facing the field as it strives to develop and validate increasingly sophisticated models.

