New Wave Tech Enhances Android Emotions for Greater Naturalness

If you've ever chatted with an android that looks strikingly human, you might have sensed that something was "off." This eerie feeling goes beyond mere looks; it's deeply tied to how robots convey emotions and sustain those emotional states. In essence, androids still fall short of mimicking the way humans express emotion.

Today's androids are adept at mimicking individual facial expressions, but the real challenge is in crafting smooth transitions and maintaining emotional consistency. Traditional systems often depend on pre-set expressions, which can feel like flipping through a book of static images rather than a natural flow of emotions. This stiffness can lead to a disconnect between what we see and what feels like genuine emotional expression.

This becomes especially noticeable during longer interactions. An android might flash a perfect smile one moment, but then struggle to seamlessly shift to another expression, reminding us we're dealing with a machine rather than a being with real emotions.

A Wave-Based Solution

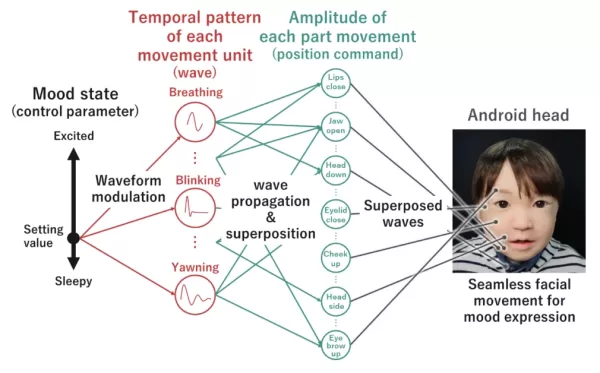

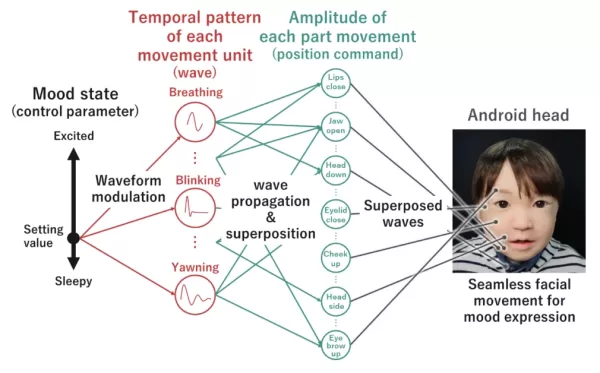

Enter groundbreaking research from Osaka University, which introduces a fresh perspective on how androids should express emotions. Instead of viewing facial expressions as separate actions, this new technology sees them as interconnected waves of movement that naturally sweep across an android's face.

Think of it like a symphony where various instruments blend to create harmony. This system merges different facial movements—from subtle breathing to eye blinks—into a cohesive whole. Each movement is depicted as a wave that can be adjusted and combined with others in real-time.

The innovation here is the dynamic nature of this approach. By generating expressions organically through the overlaying of these movement waves, it creates a more fluid and natural look, erasing those robotic transitions that can shatter the illusion of authentic emotional expression.
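The overlaying of movement waves described above can be illustrated with a small sketch. It is not the researchers' implementation: the function `superpose`, the two example waves, and the normalized actuator range of -1 to 1 are all assumptions made for illustration.

```python
import math
from typing import Callable, Sequence

# A "wave" here is any function mapping time (seconds) to a displacement.
Wave = Callable[[float], float]


def superpose(waves: Sequence[Wave], t: float,
              lo: float = -1.0, hi: float = 1.0) -> float:
    """Sum all component waves at time t and clamp to the actuator range.

    The [-1, 1] range is a hypothetical normalized actuator limit.
    """
    total = sum(wave(t) for wave in waves)
    return max(lo, min(hi, total))


# Two hypothetical component movements acting on the same actuator.
def slow_drift(t: float) -> float:
    return 0.2 * math.sin(2.0 * math.pi * t / 4.0)   # one cycle every 4 s


def fast_twitch(t: float) -> float:
    return 0.1 * math.sin(2.0 * math.pi * t / 0.5)   # one cycle every 0.5 s


if __name__ == "__main__":
    for step in range(5):
        t = step * 0.25
        print(f"t={t:.2f}s command={superpose([slow_drift, fast_twitch], t):+.3f}")
```

Because the command is recomputed from the sum at every time step, changing one component wave alters the overall motion gradually instead of swapping one fixed pose for another.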

The key technical advancement is what researchers term "waveform modulation." This feature allows the android's internal state to directly affect how these expression waves manifest, fostering a more genuine link between the robot's programmed emotions and its physical expressions.

Real-Time Emotional Intelligence

Consider the challenge of making a robot look sleepy. It's not just about drooping eyelids; it involves coordinating numerous subtle movements that humans instinctively recognize as signs of fatigue. This new system tackles this complexity through a clever approach to movement coordination.

Dynamic Expression Capabilities

The technology orchestrates nine fundamental types of coordinated movements associated with various arousal states: breathing, spontaneous blinking, shifty eye movements, nodding off, head shaking, sucking reflection, pendular nystagmus, head side swinging, and yawning.

Each movement is governed by a "decaying wave," a mathematical pattern that dictates how the movement evolves over time. These waves are meticulously tuned using five key parameters:

- Amplitude: controls the intensity of the movement

- Damping ratio: determines how quickly the movement fades

- Wavelength: sets the timing of the movement

- Oscillation center: establishes the movement's neutral point

- Reactivation period: dictates how often the movement recurs
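To make the five parameters concrete, here is a minimal sketch of one way a decaying wave could be written. The article does not give the researchers' equation, so the exponentially damped sine below, the class name `DecayingWave`, and the use of seconds as the time unit are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class DecayingWave:
    amplitude: float            # intensity of the movement
    damping_ratio: float        # how quickly the movement fades (0 = no fade)
    wavelength: float           # duration of one oscillation cycle, in seconds (assumed unit)
    center: float               # neutral point the movement oscillates around
    reactivation_period: float  # seconds between successive restarts of the wave

    def value(self, t: float) -> float:
        """Displacement at time t, modeled as a damped sine that restarts periodically."""
        tau = t % self.reactivation_period          # time since the last reactivation
        omega = 2.0 * math.pi / self.wavelength     # angular frequency
        envelope = math.exp(-self.damping_ratio * omega * tau)
        return self.center + self.amplitude * envelope * math.sin(omega * tau)


if __name__ == "__main__":
    # Hypothetical parameter values, chosen only to show the shape of the curve.
    wave = DecayingWave(amplitude=1.0, damping_ratio=0.2, wavelength=2.0,
                        center=0.5, reactivation_period=6.0)
    for step in range(13):
        t = step * 0.5
        print(f"t={t:.1f}s value={wave.value(t):+.3f}")
```

In this sketch each swing is smaller than the one before it until the reactivation period elapses, at which point the wave starts again at full amplitude.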

Internal State Reflection

The standout feature of this system is its ability to link these movements to the robot's internal arousal state. When the system signals high arousal (such as excitement), certain wave parameters adjust automatically: breathing, for example, becomes more frequent and pronounced. In a low-arousal state (such as sleepiness), you might instead observe slower, more pronounced yawning and occasional head nodding.
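One simple way to picture this link is a mapping from an arousal level to wave parameters. The sketch below is an assumption-laden illustration, not the published method: the 0-to-1 arousal scale, the linear interpolation, the preset numbers, and the names `WaveParams` and `breathing_params` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WaveParams:
    amplitude: float            # how pronounced the breathing movement is
    reactivation_period: float  # seconds between breaths


def lerp(low: float, high: float, weight: float) -> float:
    """Linear interpolation between low (weight=0) and high (weight=1)."""
    return low + (high - low) * weight


def breathing_params(arousal: float) -> WaveParams:
    """Map arousal in [0, 1] to breathing parameters (hypothetical presets).

    Higher arousal gives a larger amplitude and a shorter period, matching the
    article's description of breathing becoming more frequent and pronounced.
    """
    weight = min(1.0, max(0.0, arousal))  # clamp out-of-range inputs
    return WaveParams(
        amplitude=lerp(0.2, 0.6, weight),
        reactivation_period=lerp(5.0, 2.0, weight),
    )


if __name__ == "__main__":
    for level in (0.0, 0.5, 1.0):
        print(level, breathing_params(level))
```

A continuous mapping like this means a small change in arousal produces a small change in the movement, rather than a jump between discrete expressions.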

This is achieved through "temporal management" and "postural management" modules. The temporal module dictates when movements occur, while the postural module ensures all facial components work in harmony.

Hisashi Ishihara, the lead author of this research and an Associate Professor at the Department of Mechanical Engineering, Graduate School of Engineering, Osaka University, explains, "Rather than creating superficial movements, further development of a system in which internal emotions are reflected in every detail of an android's actions could lead to the creation of androids perceived as having a heart."

Improvement in Transitions

Unlike traditional systems that switch between pre-recorded expressions, this approach ensures smooth transitions by continuously tweaking these wave parameters. The movements are coordinated through a sophisticated network that ensures facial actions work together naturally, much like how a human's facial movements are unconsciously coordinated.
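The contrast between switching expressions and continuously adjusting parameters can be sketched as follows. This is only an illustration under stated assumptions: the rate-limited update, the function name `step_toward`, and the numeric values are hypothetical and are not taken from the research.

```python
def step_toward(current: float, target: float, max_delta: float) -> float:
    """Move a wave parameter toward its target by at most max_delta per update."""
    delta = target - current
    if abs(delta) <= max_delta:
        return target                      # close enough: land exactly on the target
    return current + max_delta if delta > 0 else current - max_delta


def transition(start: float, target: float, max_delta: float, steps: int) -> list[float]:
    """Record the parameter value after each of `steps` updates."""
    values = []
    current = start
    for _ in range(steps):
        current = step_toward(current, target, max_delta)
        values.append(current)
    return values


if __name__ == "__main__":
    # Hypothetical example: a breathing amplitude rising from a sleepy 0.2 to an excited 0.6.
    for value in transition(start=0.2, target=0.6, max_delta=0.1, steps=6):
        print(f"{value:.2f}")
```

A system that swaps pre-recorded expressions would jump from 0.2 to 0.6 in a single update; here the parameter passes through intermediate values, so the visible motion changes gradually.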

The research team demonstrated this through experiments showing how the system could effectively convey different arousal levels while maintaining natural-looking expressions.

Future Implications

The development of this wave-based emotional expression system opens up exciting possibilities for human-robot interaction and could potentially integrate with technologies like Embodied AI. While current androids often evoke a sense of unease during prolonged interactions, this technology could help bridge the uncanny valley—that unsettling space where robots appear almost, but not quite, human.

The crucial breakthrough is in creating a genuine-feeling emotional presence. By generating fluid, context-appropriate expressions that align with internal states, androids could become more effective in roles requiring emotional intelligence and human connection.

Koichi Osuka, the senior author and a Professor at the Department of Mechanical Engineering at Osaka University, notes that this technology "could greatly enrich emotional communication between humans and robots." Imagine healthcare companions expressing appropriate concern, educational robots showing enthusiasm, or service robots conveying genuine-seeming attentiveness.

The research shows particularly promising results in expressing different arousal levels—from high-energy excitement to low-energy sleepiness. This capability could be vital in scenarios where robots need to:

- Convey alertness levels during long-term interactions

- Express appropriate energy levels in therapeutic settings

- Match their emotional state to the social context

- Maintain emotional consistency during extended conversations

The system's ability to generate natural transitions between states makes it especially valuable for applications requiring sustained human-robot interaction.

By treating emotional expression as a fluid, wave-based phenomenon rather than a series of pre-programmed states, the technology opens up new possibilities for robots that engage with humans in emotionally meaningful ways. The research team's next steps will focus on expanding the system's emotional range and refining its ability to convey subtle emotional states, work that could influence how we think about and interact with androids in daily life.

Related article

Hugging Face Launches Pre-Orders for Reachy Mini Desktop Robots

Hugging Face invites developers to explore its latest robotics innovation. The AI platform announced Wednesday that it’s now accepting pre-orders for its Reachy Mini desktop robots. The company first s


Nvidia charges ahead with humanoid robotics aided by the cloud

Nvidia is charging full speed ahead into the realm of humanoid robotics, and they're not holding back. At the Computex 2025 trade show in Taiwan, they unveiled a series of innovati


Top 5 Autonomous Robots for Construction Sites in April 2025

The construction industry is undergoing a remarkable transformation, driven by the rise of robotics and automation. With the global market for construction robots projected to reach $3.5 billion by 2030, these innovations are revolutionizing safety and efficiency on job sites. From autonomous pile d

Comments (6)


RogerRodriguez

July 27, 2025 at 9:18:39 PM EDT

This article about android emotions is wild! It’s creepy how close they’re getting to human-like vibes, but I wonder if we’re ready for robots that can fake feelings this well. 😅 What’s next, a robot therapist?

JerryMoore

April 23, 2025 at 11:33:32 AM EDT

New Wave Tech's android emotional expression is interesting, but it still feels awkward. It's getting closer to natural, but there's still a long way to go. Keep at it! 🤖😑

JasonMartin

April 22, 2025 at 9:26:44 PM EDT

New Wave Tech's work on android emotions is interesting, but it still seems a little strange. They're getting closer to naturalness, but there's still a long way to go. Keep trying, folks! 🤖😒

WalterAnderson

April 22, 2025 at 11:23:09 AM EDT

New Wave Tech's work on android emotions is interesting, but it still feels a bit off. They're getting closer to naturalness, but there's still a way to go. Keep pushing, guys! 🤖😕

RobertMartin

April 22, 2025 at 6:44:01 AM EDT

New Wave Tech's android emotional expression is interesting, but it still feels off. It's getting closer to natural, but there's still a long way to go. Keep it up! 🤖😐

CharlesYoung

April 21, 2025 at 9:11:23 PM EDT

New Wave Tech's work on android emotions is interesting, but it still feels a bit odd. They're getting closer to naturalness, but they're not there yet. Keep going, guys! 🤖😕
