Nvidia Dominates AI Benchmarks, Yet Intel Offers Significant Competition

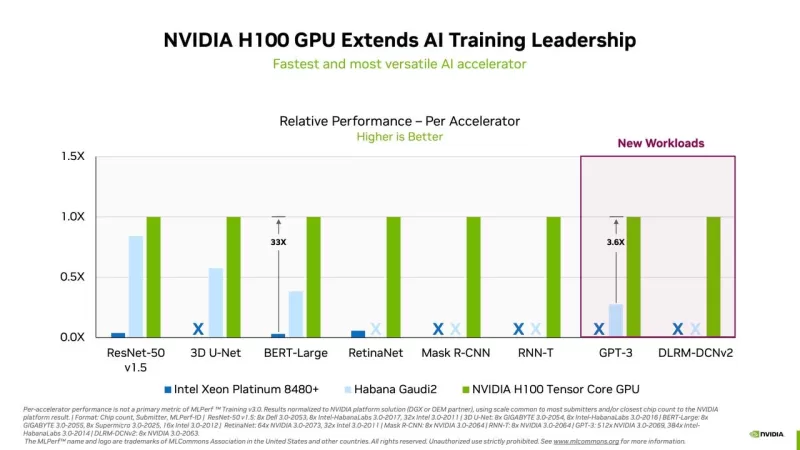

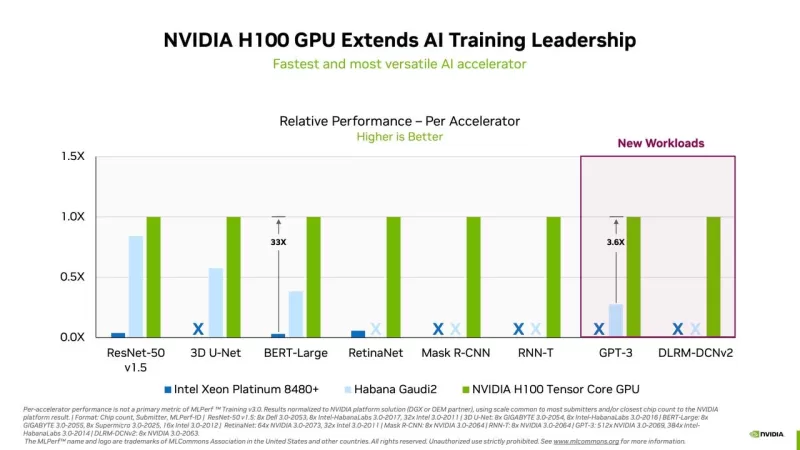

The latest round of neural network training speed benchmarks, released on Tuesday by the industry consortium MLCommons, once again crowned Nvidia as the top performer across all categories in the MLPerf tests. With key competitors like Google, Graphcore, and Advanced Micro Devices sitting this round out, Nvidia's sweep was total and unchallenged.

However, Intel's Habana division made a significant showing with its Gaudi2 chip, and Intel has promised to outperform Nvidia's flagship H100 GPU by this fall.

The MLPerf Training version 3.0 benchmark focuses on the time required to adjust a neural network's "weights" or parameters to achieve a set accuracy level for a specific task, a process known as "training." This version of the test encompasses eight different tasks, measuring the duration needed to refine a neural network through multiple experiments. This is one aspect of neural network performance, with the other being "inference," where the trained network makes predictions on new data. Inference performance is evaluated separately by MLCommons.
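As a rough illustration of the time-to-train idea described above, the sketch below trains a toy classifier until it reaches a quality target and averages the wall-clock time over several runs. The task, the model, the 0.9 target, and the function name `time_to_train` are all hypothetical; this is not an MLPerf workload or the MLCommons harness.

```python
import math
import random
import time


def time_to_train(target_accuracy, seed, max_epochs=5000, lr=0.1):
    """Train a toy 1-D logistic classifier; return (seconds, epochs) to hit the target."""
    rng = random.Random(seed)
    # Hypothetical toy task: the label is 1 when x > 0.5.
    data = [(x, 1.0 if x > 0.5 else 0.0) for x in (rng.random() for _ in range(200))]
    w, b = 0.0, 0.0  # the "weights" being adjusted
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        for x, y in data:
            pred = 1.0 / (1.0 + math.exp(-(w * x + b)))
            w -= lr * (pred - y) * x
            b -= lr * (pred - y)
        # Stop the clock as soon as the quality target is met.
        correct = sum((w * x + b > 0) == (y == 1.0) for x, y in data)
        if correct / len(data) >= target_accuracy:
            return time.perf_counter() - start, epoch
    raise RuntimeError("quality target not reached")


# Repeat the experiment with different seeds and report the mean time.
runs = [time_to_train(0.9, seed) for seed in range(5)]
mean_seconds = sum(seconds for seconds, _ in runs) / len(runs)
print(f"mean time to train over {len(runs)} runs: {mean_seconds:.4f} s")
```

The key design point is that the clock stops at a fixed accuracy rather than after a fixed number of steps, so a faster system and a better-tuned training recipe both show up in the same number.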

In addition to server-based training, MLCommons introduced the MLPerf Tiny version 1.1 benchmark, which evaluates performance on ultra-low-power devices for making predictions.

Nvidia led the pack in all eight tests, achieving the fastest training times. Two new tasks were introduced, including one for OpenAI's GPT-3 large language model (LLM), a focal point given the frenzy around generative AI sparked by the popularity of ChatGPT. Nvidia, in collaboration with CoreWeave, took the lead in the GPT-3 task, using a system powered by 896 Intel Xeon processors and 3,584 Nvidia H100 GPUs. This setup, running on Nvidia's NeMo framework, completed training on the Colossal Clean Crawled Corpus (C4) dataset in just under eleven minutes. The test used a "large" version of GPT-3 with 175 billion parameters, limited to 0.4% of the full training set to keep the runtime manageable.
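The figures in this paragraph lend themselves to a quick back-of-the-envelope check. The sketch below derives the GPU-to-CPU ratio of the winning system and a naive linear extrapolation from the 0.4% slice to the full training set. The extrapolation is an assumption made here for illustration, not a result reported by MLCommons or Nvidia, and it treats "just under eleven minutes" as a ceiling of 11 minutes.

```python
from fractions import Fraction

cpus = 896
gpus = 3584
gpus_per_cpu = gpus // cpus  # accelerators attached to each Xeon

fraction_of_dataset = Fraction(4, 1000)  # the benchmark used 0.4% of the data
minutes_measured = 11                    # upper bound on the reported time

# Assumption: training time scales linearly with the amount of data seen.
naive_full_minutes = minutes_measured / fraction_of_dataset
naive_full_days = float(naive_full_minutes) / (60 * 24)

print(f"{gpus_per_cpu} GPUs per CPU")
print(f"naive full-dataset estimate: {float(naive_full_minutes):.0f} min "
      f"(about {naive_full_days:.1f} days)")
```

Under that linear assumption the full run would take at most about 2,750 minutes, or roughly 1.9 days, on the same 3,584-GPU system.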

Another new addition was an expanded version of the recommender engines test, now using a larger, four-terabyte dataset called Criteo 4TB multi-hot, replacing the outdated one-terabyte dataset. MLCommons noted the increasing scale of production recommendation models in terms of size, computational power, and memory operations.

The only other contender in the AI chip space was Intel's Habana, which entered five submissions using its Gaudi2 accelerator, alongside one from SuperMicro using the same chip. These entries covered four of the eight tasks, yet lagged significantly behind Nvidia's best systems. For instance, in the BERT Wikipedia test, Habana ranked fifth, taking two minutes compared to Nvidia-CoreWeave's eight seconds with a 3,072-GPU setup.

Yet, Intel's Jordan Plawner, head of AI products, highlighted in a ZDNET interview that for systems of similar scale, the performance gap between Habana and Nvidia might not be as critical for many businesses. He pointed out that an 8-device Habana system with two Intel Xeon processors completed the BERT Wikipedia task in just over 14 minutes, outperforming numerous submissions with more Nvidia A100 GPUs.
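To see why raw times from systems of very different scale are hard to compare, the sketch below puts the two BERT Wikipedia results quoted above side by side, using device count and wall-clock time only. The 14-minute figure is rounded down from "just over 14 minutes", and "device-minutes" is a metric chosen here for illustration, not one MLPerf reports. It does not rank the chips: large clusters lose efficiency to communication overhead, and the two systems use different accelerators.

```python
# Figures quoted in the article for the BERT Wikipedia task.
gaudi2_devices, gaudi2_minutes = 8, 14   # "just over 14 minutes", rounded down
h100_gpus, h100_seconds = 3072, 8

# Total accelerator time consumed by each run.
gaudi2_device_minutes = gaudi2_devices * gaudi2_minutes
h100_device_minutes = h100_gpus * h100_seconds / 60

# How much more hardware versus how much less wall-clock time.
device_ratio = h100_gpus / gaudi2_devices
speedup = (gaudi2_minutes * 60) / h100_seconds

print(f"Gaudi2 run: {gaudi2_device_minutes} device-minutes")
print(f"H100 run:   {h100_device_minutes:.1f} device-minutes")
print(f"{device_ratio:.0f}x the devices for a {speedup:.0f}x shorter run")
```

The large system finishes about 105 times sooner while using 384 times as many devices, which is the sense in which the headline gap can matter less to a business that only plans to buy a single 8-device server.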

Plawner emphasized the cost-effectiveness of Gaudi2, which is priced comparably to Nvidia's A100 but, he said, offers better training value for money. He also noted that while Nvidia used the FP8 data format in its submissions, Habana used BF16, whose higher precision slows training somewhat. Plawner anticipates that switching to FP8 later this year will boost Gaudi2's performance, potentially surpassing Nvidia's H100.
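The trade-off between the two number formats can be made concrete with their bit layouts. The sketch below compares BF16 with the two commonly used FP8 variants, E4M3 and E5M2; the article does not say which FP8 variant Nvidia used, so listing both is an assumption. The memory figure covers only a single copy of the weights for the 175-billion-parameter model mentioned earlier, whereas real training keeps additional state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FloatFormat:
    name: str
    exponent_bits: int
    mantissa_bits: int

    @property
    def total_bits(self) -> int:
        return 1 + self.exponent_bits + self.mantissa_bits  # plus one sign bit

    @property
    def relative_step(self) -> float:
        # Spacing between 1.0 and the next representable value.
        return 2.0 ** -self.mantissa_bits

    def weight_gigabytes(self, parameters: int) -> float:
        return parameters * self.total_bits / 8 / 1e9


FORMATS = [
    FloatFormat("BF16", exponent_bits=8, mantissa_bits=7),
    FloatFormat("FP8 E4M3", exponent_bits=4, mantissa_bits=3),
    FloatFormat("FP8 E5M2", exponent_bits=5, mantissa_bits=2),
]

GPT3_PARAMETERS = 175_000_000_000

for fmt in FORMATS:
    print(f"{fmt.name:9s} {fmt.total_bits:2d} bits, "
          f"step {fmt.relative_step}, "
          f"{fmt.weight_gigabytes(GPT3_PARAMETERS):.0f} GB of weights")
```

Halving the bits per value halves the data each chip must move and multiply, which is where the speedup from FP8 comes from, at the cost of a much coarser step between representable numbers.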

He stressed the need for an alternative to Nvidia, especially given the current supply constraints of Nvidia's GPUs. Customers, frustrated by delays, are increasingly open to alternatives like Gaudi2, which could enable them to launch services without waiting for Nvidia's parts.

Intel's position as the world's second-largest chip manufacturer, after Taiwan Semiconductor, gives it a strategic advantage in controlling its supply chain. Plawner hinted at Intel's plans to build a cluster of several thousand Gaudi2 chips, which could make it a strong contender in future MLPerf tests.

This marks the second consecutive quarter where no other chip maker has challenged Nvidia's top position in the training tests. A year ago, Google shared the top spot with Nvidia using its TPU, but both Google and Graphcore were absent from the latest round, focusing on their businesses instead of benchmark competitions.

MLCommons director David Kanter expressed a desire for more participants, noting that broader engagement benefits the industry. Google and AMD did not respond to inquiries about their absence from this round. Interestingly, while AMD's CPUs were used in competing systems, all winning Nvidia setups used Intel Xeon CPUs. That is a shift from the previous year, when AMD's EPYC processors dominated, and it follows the release of Intel's Sapphire Rapids.

Despite the lack of participation from some big names, the MLPerf tests continue to draw new entrants, including CoreWeave, IEI, and Quanta Cloud Technology, showing the ongoing interest and competition in the field of AI chip performance.

Comments (11)

HarrySmith

August 11, 2025 at 1:01:02 PM EDT

Intel stepping up to Nvidia's AI game is wild! 🤯 It's like the underdog finally landing some solid punches. Curious to see how this shakes up the market!

HenryJackson

April 22, 2025 at 5:42:49 PM EDT

Nvidia's dominance in AI benchmarks is impressive, but Intel's competition is making things interesting. I use Nvidia's tools for my projects and they are fast, but I'm also curious about what Intel offers. I wish there were more detailed comparisons. Does anyone else feel the same? 🤔

BruceSmith

April 22, 2025 at 3:22:27 PM EDT

Nvidia's dominance in AI benchmarks is impressive, but Intel's competition keeps things exciting. I've been using Nvidia's tools for my projects, and they're fast, but Intel's offerings are intriguing. I wish there were more detailed comparisons, though. Does anyone else feel the same? 🤔

AlbertDavis

April 22, 2025 at 7:57:41 AM EDT

Nvidia's dominance in AI benchmarks is impressive, but Intel's competition keeps things interesting! I've been using Nvidia's tech for my projects, and it's super fast. Intel's not far behind though, which is great for us users. Keep pushing the limits, guys! 🚀

ThomasYoung

April 21, 2025 at 5:17:21 PM EDT

Nvidia's dominance in AI benchmarks is impressive, but Intel's competition is keeping things exciting. I'm using Nvidia's tools for my projects, and they're fast, but Intel's offerings are intriguing. I wish there were more detailed comparisons, though. Does anyone else feel the same? 🤔

WillieJackson

April 21, 2025 at 12:23:42 PM EDT

Nvidia's dominance in AI benchmarks is impressive, but Intel's competition keeps things interesting. I've been using Nvidia's tech for my projects and it's super fast. Intel isn't far behind, which is great for us users. Keep pushing the limits, guys! 🚀
