Meta Unveils Llama 4: Pioneering Next-Gen Multimodal AI Capabilities

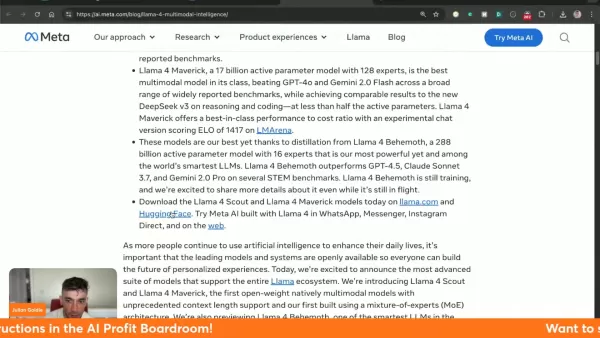

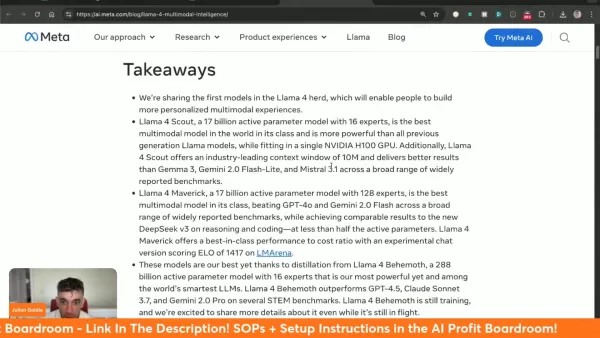

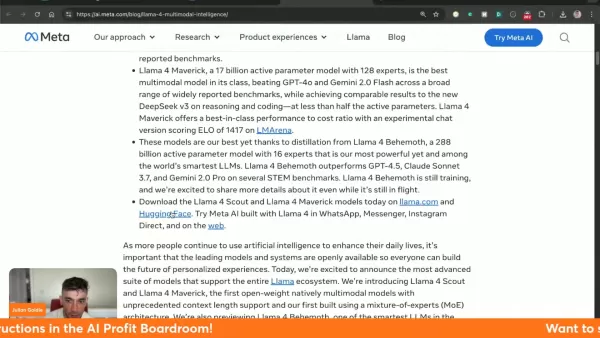

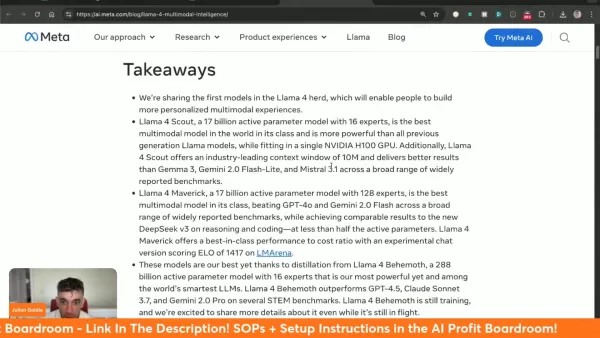

Meta's Llama 4 is a family of natively multimodal AI models that process text and images in a single architecture. With three specialized models, a context window of up to 10 million tokens, and strong results on vision, coding, and reasoning benchmarks, the release sets a new reference point for AI development and deployment.

Key Points

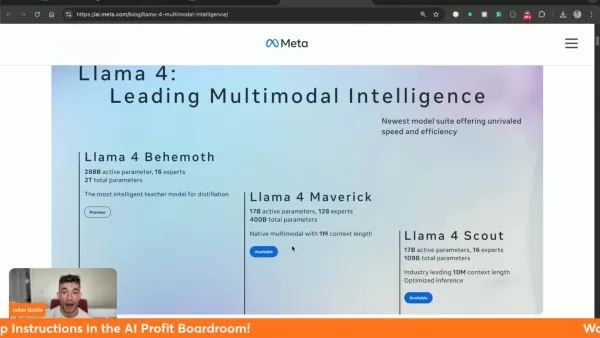

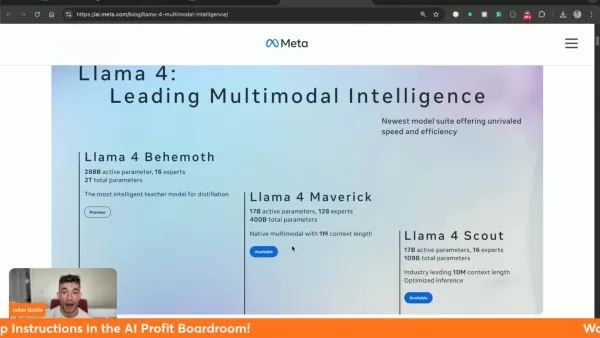

- Three specialized Llama 4 variants: Behemoth (still in training), Maverick, and Scout

- Scout offers a 10 million token context window

- Maverick outperforms competitors including Gemini 2.0 Flash and GPT-4o in reported benchmarks

- Available through llama.com and Hugging Face

- Strong benchmark results in visual processing, coding, and complex reasoning

- Integrated into Meta AI within WhatsApp, Messenger, and Instagram

Understanding Llama 4: Meta's Latest AI Breakthrough

What is Llama 4?

Llama 4 is Meta's most advanced multimodal AI system to date, combining text and visual processing in a unified architecture. It comes in three distinct models, each with specialized capabilities.

The system's expanded context handling addresses limits of earlier models and allows more nuanced interpretation of complex inputs. Most notable is Scout's 10M-token capacity, which makes it possible to analyze very large data sets while maintaining coherence.

Meta distributes the models to developers through llama.com and Hugging Face, and has integrated Llama 4 into its flagship social platforms.

Comparing Llama 4 Scout with Other Models

Llama 4 Scout vs. Competitors: A Benchmark Analysis

The table below compares Llama 4 Scout with Gemma 3 27B, Mistral 3.1 24B, and Gemini 2.0 Flash-Lite:

| Benchmark | Llama 4 Scout | Gemma 3 27B | Mistral 3.1 24B | Gemini 2.0 Flash-Lite |
|---|---|---|---|---|
| Image Reasoning (MMMU) | 69.4 | 64.9 | 62.8 | 68.0 |
| MathVista | 70.7 | 67.6 | 68.9 | 57.6 |
| Image Understanding (ChartQA) | 88.8 | 76.3 | 86.2 | 73.0 |
| DocVQA (test) | 94.4 | 90.4 | 94.1 | 91.2 |
| Coding (LiveCodeBench) | 32.8 | 29.7 | - | 28.9 |
| Reasoning & Knowledge (MMLU Pro) | 74.3 | 67.5 | 66.8 | 71.6 |

Scout posts the highest score in every row of the table, with its strongest results in document analysis (94.4 on DocVQA) and chart interpretation (88.8 on ChartQA).
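To make the comparison concrete, the Python sketch below recomputes Scout's margin over the strongest listed competitor on each benchmark. The scores are copied from the table above; the names `SCORES` and `scout_margins` are illustrative, and the blank Mistral 3.1 24B LiveCodeBench entry is treated as unavailable rather than zero.

```python
# Scores copied from the benchmark table; None marks the entry the table leaves blank.
MODELS = ["Llama 4 Scout", "Gemma 3 27B", "Mistral 3.1 24B", "Gemini 2.0 Flash-Lite"]
ROWS = {
    "MMMU": [69.4, 64.9, 62.8, 68.0],
    "MathVista": [70.7, 67.6, 68.9, 57.6],
    "ChartQA": [88.8, 76.3, 86.2, 73.0],
    "DocVQA": [94.4, 90.4, 94.1, 91.2],
    "LiveCodeBench": [32.8, 29.7, None, 28.9],
    "MMLU Pro": [74.3, 67.5, 66.8, 71.6],
}
SCORES = {bench: dict(zip(MODELS, values)) for bench, values in ROWS.items()}


def scout_margins(scores, model="Llama 4 Scout"):
    """Return {benchmark: (best competitor, margin in points)} for `model`."""
    margins = {}
    for bench, row in scores.items():
        # Ignore the model itself and any competitor without a reported score.
        rivals = {name: s for name, s in row.items() if name != model and s is not None}
        best = max(rivals, key=rivals.get)
        margins[bench] = (best, round(row[model] - rivals[best], 1))
    return margins


if __name__ == "__main__":
    for bench, (rival, margin) in scout_margins(SCORES).items():
        print(f"{bench}: {margin:+.1f} points vs {rival}")
```

The margins range from a few tenths of a point on DocVQA to about three points on LiveCodeBench, so the lead is consistent but narrow on some tasks.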

How to Get Started with Llama 4

Accessing and Implementing Llama 4 in Your Projects

Begin exploring Llama 4 capabilities through these steps:

- Platform Access: Visit official distribution channels at llama.com or Hugging Face

- Model Selection: Choose between available versions based on project requirements (note Behemoth remains in development)

- System Integration: Follow Meta's comprehensive documentation for implementation

- Performance Testing: Experiment with various applications to optimize results
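The model-selection step above can be sketched in Python as follows. This is a minimal illustration, not Meta's recommended workflow: the context sizes come from this article, the "smallest window that fits" policy and the four-characters-per-token estimate are assumptions, and the Hugging Face repository IDs are assumed names that should be checked on the hub before use. Behemoth is omitted because it is still in training.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    name: str
    context_tokens: int  # context window as stated in this article
    repo_id: str         # ASSUMED Hugging Face repo ID; verify before use


VARIANTS = [
    Variant("Maverick", 1_000_000, "meta-llama/Llama-4-Maverick-17B-128E-Instruct"),
    Variant("Scout", 10_000_000, "meta-llama/Llama-4-Scout-17B-16E-Instruct"),
]


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough size estimate (assumed heuristic); a real tokenizer gives the true count."""
    return int(len(text) / chars_per_token) + 1


def pick_variant(prompt_tokens: int, variants=VARIANTS) -> Variant:
    """Pick the variant with the smallest context window that still fits the prompt."""
    fitting = [v for v in variants if v.context_tokens >= prompt_tokens]
    if not fitting:
        raise ValueError(f"no variant fits a prompt of {prompt_tokens} tokens")
    return min(fitting, key=lambda v: v.context_tokens)


def download(variant: Variant) -> str:
    """Fetch the weights locally; needs `pip install huggingface_hub` and repo access."""
    from huggingface_hub import snapshot_download  # imported lazily so selection works without it
    return snapshot_download(repo_id=variant.repo_id)


if __name__ == "__main__":
    print(pick_variant(estimate_tokens("x" * 8_000_000)).name)
```

Selection runs without any third-party package; only `download` touches the network, and access to the repositories may require accepting the model license on Hugging Face first.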

Llama 4 Cost Analysis

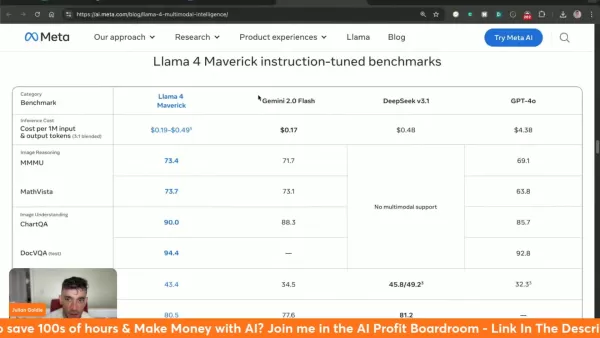

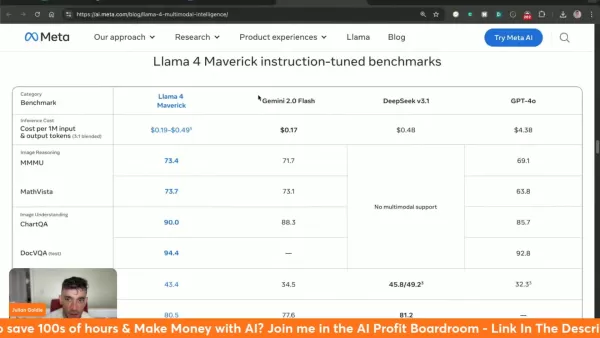

Understanding the Inference Cost of Llama 4 Maverick

Llama 4 Maverick is estimated to cost $0.19-$0.49 per million tokens. That is roughly on par with Gemini 2.0 Flash and DeepSeek v3.1, and far below GPT-4o:

- Gemini 2.0 Flash: ~$0.17/million tokens

- DeepSeek v3.1: ~$0.48/million tokens

- GPT-4o: ~$4.38/million tokens
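As a rough illustration of what these rates mean in practice, the Python sketch below converts the per-million-token prices listed above into a cost for a given token volume. The prices are copied from this section; the 500 million token workload and the function names are hypothetical.

```python
# USD per million tokens, copied from the figures in this section.
PRICE_PER_MILLION = {
    "Llama 4 Maverick (low)": 0.19,
    "Llama 4 Maverick (high)": 0.49,
    "Gemini 2.0 Flash": 0.17,
    "DeepSeek v3.1": 0.48,
    "GPT-4o": 4.38,
}


def workload_cost(tokens: int, price_per_million: float) -> float:
    """Cost in USD for `tokens` tokens at the given per-million rate."""
    return round(tokens / 1_000_000 * price_per_million, 2)


def cost_table(tokens: int, prices=PRICE_PER_MILLION) -> dict:
    """Cost of the same workload under every listed price."""
    return {name: workload_cost(tokens, price) for name, price in prices.items()}


if __name__ == "__main__":
    hypothetical_tokens = 500_000_000  # assumed workload, for illustration only
    for name, cost in sorted(cost_table(hypothetical_tokens).items(), key=lambda kv: kv[1]):
        print(f"{name:<26} ${cost:>10,.2f}")
```

At any volume the ordering is the same as in the list: Gemini 2.0 Flash is slightly below Maverick's low estimate, DeepSeek v3.1 sits near Maverick's high estimate, and GPT-4o is roughly an order of magnitude more expensive.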

Weighing the Pros and Cons of Llama 4

Pros

- Benchmark-leading performance metrics

- Unprecedented 10M token context capacity

- Accessible through major AI platforms

- True multimodal architecture

- Cost-effective compared to premium alternatives

Cons

- Behemoth model still in training phase

- High system resource requirements

Exploring Llama 4's Core Features

Key Features of Llama 4 Models

Llama 4 introduces several groundbreaking innovations:

- Native Multimodality: Unified processing of text and visual inputs

- Massive Context Capacity: 10M token processing in Scout model

- Performance Leadership: Maverick outperforms GPT-4o and Gemini 2.0 Flash in multiple categories

- Open Accessibility: Available via llama.com and Hugging Face

- Architectural Efficiency: Mixture of Experts (MoE) design optimizes computing resources

Diverse Use Cases for Llama 4

Potential Applications of Llama 4 in Various Industries

Llama 4's advanced capabilities enable transformative applications:

- Customer Experience: Enhanced chatbot interactions using extended context memory

- Content Generation: High-quality automated content production

- Business Intelligence: Advanced data pattern recognition and analysis

- Developer Tools: AI-powered coding assistance and debugging

- Social Platforms: Integrated into Meta's messaging services for improved AI interactions

Frequently Asked Questions

Where can I access Llama 4?

Llama 4 is available through llama.com and Hugging Face, and it is integrated into Meta's social platforms.

What is the context window size of Llama 4 Scout?

Scout has a context window of 10 million tokens.

How does Llama 4 perform compared to other models?

Llama 4 posts stronger results than leading competitors across multiple benchmarks.

What is Meta AI?

Meta AI is Meta's AI assistant, now powered by Llama 4, across WhatsApp, Messenger, and Instagram.

Related Questions

What are the different models available within Llama 4?

There are three specialized models: Behemoth (a teacher model still in training), Maverick (multimodal, 1M-token context), and Scout (10M-token context).

How does the Mixture of Experts (MoE) architecture enhance Llama 4's performance?

An MoE model routes each input to a small number of specialized sub-networks (experts) instead of running the whole network, which reduces computation while maintaining output quality.
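The routing idea can be shown with a toy example. The Python sketch below is a generic top-k MoE layer written for illustration only; it is not Llama 4's actual router, the experts are trivial scaling functions rather than feed-forward networks, and every name in it is hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Toy expert that scales its input; real experts are small neural networks."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, router_weights, experts, top_k=1):
    """Route x to the top_k highest-scoring experts and mix their outputs."""
    # One router logit per expert: dot product of x with that expert's router row.
    logits = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Gate weights are renormalized over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + gate * v for o, v in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
    router = [[0.1, 0.0], [2.0, 0.0], [0.5, 0.0], [-1.0, 0.0]]
    print(moe_forward([1.0, 0.0], router, experts, top_k=1))
```

With four experts and `top_k=1`, only one expert runs per input, so the compute per input stays close to that of a single expert even though the layer holds four experts' worth of parameters.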

Where can I view the instruction-tuned benchmarks for each Llama 4 model?

Comprehensive comparisons against GPT-4o, Gemini 2.0 Flash, and other leading models are available; the Scout results are reproduced in the benchmark table earlier in this article.

