AI Reasoning Model Progress May Plateau by 2026, Epoch AI Study Suggests

A study by Epoch AI, a nonprofit AI research institute, indicates that the AI sector may soon face challenges in achieving significant performance improvements from reasoning AI models. The report predicts that advancements in these models could decelerate within the next year.

Advanced reasoning models, such as OpenAI’s o3, have recently driven notable improvements in AI benchmarks, particularly in math and coding tasks. These models apply more computing power to each problem, which can improve performance but usually makes them slower to respond than traditional models.

Reasoning models are created by initially training a standard model on vast datasets, followed by reinforcement learning, which provides the model with feedback to refine its problem-solving capabilities.

According to Epoch, leading AI labs like OpenAI have so far applied relatively little computing power to the reinforcement learning phase of reasoning model development.

This trend is shifting. OpenAI disclosed that it used approximately ten times more computational power to train o3 compared to its predecessor, o1, with Epoch suggesting that most of this was allocated to reinforcement learning. OpenAI researcher Dan Roberts recently indicated that the company plans to further prioritize reinforcement learning, potentially using even more computational resources than for initial model training.

However, Epoch notes that there is a limit to how much computational power can be applied to reinforcement learning.

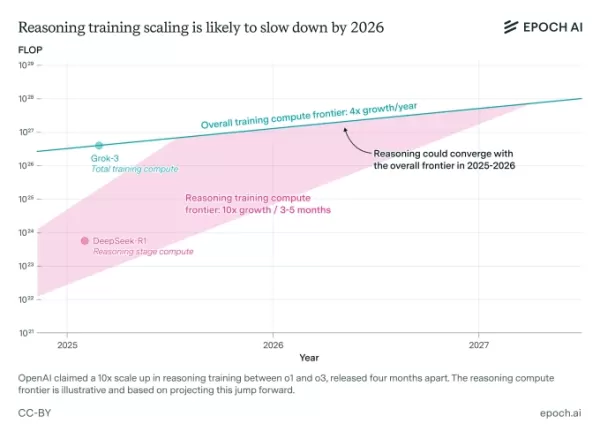

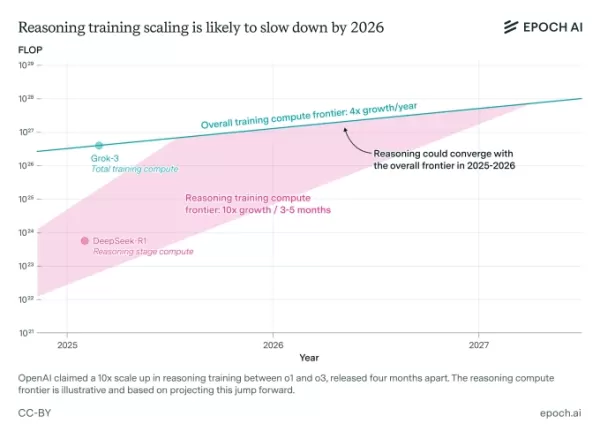

An Epoch AI study suggests that scaling training for reasoning models may soon face limitations. Image Credits: Epoch AI

Josh You, an analyst at Epoch and the study’s author, notes that performance gains from standard AI model training are currently quadrupling annually, while reinforcement learning gains are increasing tenfold every three to five months. He predicts that reasoning model progress will likely align with overall AI advancements by 2026.
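To see why the faster rate is unlikely to stay ahead for long, it can help to put both figures You cites on the same annual basis. The following is a minimal sketch that assumes steady exponential growth over a full year; the helper name `annualized_factor` is illustrative and does not come from the Epoch study, and the article does not state how large the current gap between the two is.

```python
def annualized_factor(factor: float, period_months: float) -> float:
    """Return the 12-month growth factor for a quantity that
    multiplies by `factor` every `period_months` months.
    Assumes the growth rate stays constant (an assumption, not a
    claim from the study)."""
    return factor ** (12.0 / period_months)


# Figures as quoted in the article.
standard = annualized_factor(4.0, 12.0)   # quadrupling annually
rl_fast = annualized_factor(10.0, 3.0)    # tenfold every three months
rl_slow = annualized_factor(10.0, 5.0)    # tenfold every five months

print(f"standard training: {standard:,.0f}x per year")
print(f"reinforcement learning: {rl_slow:,.0f}x to {rl_fast:,.0f}x per year")
```

On these assumptions, tenfold growth every three to five months works out to roughly 250x to 10,000x per year, against 4x per year for standard training. A quantity growing that much faster closes any finite gap quickly, which is consistent with You's expectation that the two trends converge by 2026.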

Epoch’s study relies on certain assumptions and incorporates public statements from AI industry leaders. It also highlights that scaling reasoning models may face obstacles beyond computational limits, such as high research overhead costs.

“Ongoing research costs could limit the scalability of reasoning models,” You explains. “Since rapid computational scaling is a key factor in their progress, this warrants close attention.”

Any signs that reasoning models may hit a performance ceiling soon could raise concerns in the AI industry, which has heavily invested in their development. Research already indicates that these models, despite their high operational costs, have notable flaws, including a higher tendency to produce inaccurate outputs compared to some traditional models.

Comments (5)

PeterPerez

August 26, 2025 at 1:25:25 AM EDT

Mind-blowing study! If AI reasoning hits a wall by 2026, what’s next? Kinda scary to think we might be maxing out so soon. 😬

RyanGonzalez

August 23, 2025 at 1:01:22 PM EDT

This AI plateau talk is wild! If reasoning models hit a wall by 2026, what’s next? Kinda feels like we’re racing to the moon but might run out of fuel. 😅 Curious if this’ll push devs to get creative or just lean harder on hardware.

AvaPhillips

August 20, 2025 at 5:01:15 AM EDT

This AI plateau talk is wild! 🤯 Feels like we’re hitting a tech ceiling already. Wonder if it’s a real limit or just a pause before the next big leap?

EricMiller

August 20, 2025 at 3:01:19 AM EDT

Wow, AI progress hitting a plateau by 2026? That’s wild! I thought we’d keep zooming toward super-smart machines, but maybe it’s time for a new breakthrough to shake things up. 🧠

BenGarcía

August 5, 2025 at 5:00:59 AM EDT

This article's got me thinking—AI progress might hit a wall by 2026? That's wild! I wonder if companies will pivot to new tech or just keep pushing the same models. 🤔
