AI's Expanding Role: Addressing Bias and Ethical Challenges

Artificial Intelligence (AI) continues to transform modern life, shaping how we consume news, engage with YouTube, and interact online. Its rapid rise, however, raises critical questions about bias and societal impact. Understanding AI's influence, recognizing its potential biases, and promoting ethical development are vital for a just future. This article explores AI's growing influence and strategies for navigating it responsibly.

Key Points

AI's widespread impact on news, YouTube, and online platforms.

Risks of bias embedded in AI algorithms.

Need for ethical AI design and implementation.

Cases where AI bias shapes societal views.

Urgent call to action on AI bias.

AI's Expanding Presence in Everyday Life

AI in News and Media Consumption

AI increasingly tailors the news and media we encounter.

These systems study our preferences, search patterns, and social media activity to deliver customized content. While this personalization streamlines access, it risks creating echo chambers that limit diverse viewpoints and deepen biases. Over-dependence on AI-driven news can fuel polarization. Critically assessing AI-curated sources and perspectives is essential for a balanced understanding of events.
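To make the echo-chamber mechanism concrete, here is a minimal Python sketch of a toy recommender that ranks articles purely by how often the user has already clicked their topic. The catalog, topic names, and the `recommend` and `simulate` functions are hypothetical illustrations, not how any real news platform works; real systems use far richer signals.

```python
from collections import Counter

# Hypothetical catalog: 4 topics with 10 articles each, interleaved by topic.
TOPICS = ["politics", "science", "sports", "arts"]
CATALOG = [{"id": f"{t}-{i}", "topic": t} for i in range(10) for t in TOPICS]


def recommend(history, catalog, k=4):
    """Toy engagement-based ranker (an assumption, not any platform's real
    algorithm): score each unseen item by how often the user has already
    clicked its topic and return the top k."""
    clicks = Counter(item["topic"] for item in history)
    seen = {item["id"] for item in history}
    unseen = [item for item in catalog if item["id"] not in seen]
    # sorted() is stable even with reverse=True, so ties keep catalog order.
    return sorted(unseen, key=lambda item: clicks[item["topic"]], reverse=True)[:k]


def simulate(rounds=3):
    """A user who always clicks the top recommendation. Returns how many
    distinct topics appear in the feed shown in each round."""
    history = []
    diversity = []
    for _ in range(rounds):
        feed = recommend(history, CATALOG)
        diversity.append(len({item["topic"] for item in feed}))
        history.append(feed[0])  # the simulated click
    return diversity


print(simulate())  # [4, 1, 1]
```

In this toy model the feed drops from four distinct topics to one after a single click, because nothing in the scoring rewards variety. Adding a diversity term, for example reserving one slot for a topic the user has not clicked, is one way to counteract this narrowing.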

AI's Role in YouTube and Content Creation

AI significantly shapes content creation and recommendations on platforms like YouTube.

By analyzing viewing habits, AI suggests videos that influence what users watch and how they perceive topics. This offers creators opportunities to reach audiences but also poses challenges, such as reduced visibility for diverse voices.

The case of commentator Candace Owens illustrates concerns about AI neutrality. If algorithms marginalize certain viewpoints, such as hers, the resulting recommendations may underserve Christian and conservative audiences and tilt instead toward left-leaning or moderate perspectives.

Ameca, a humanoid robot featured in TikTok videos, displays strikingly human-like expressions, raising ethical questions about emotional manipulation, job displacement, and the nature of consciousness.

Ethical Issues with AI-Generated Humor

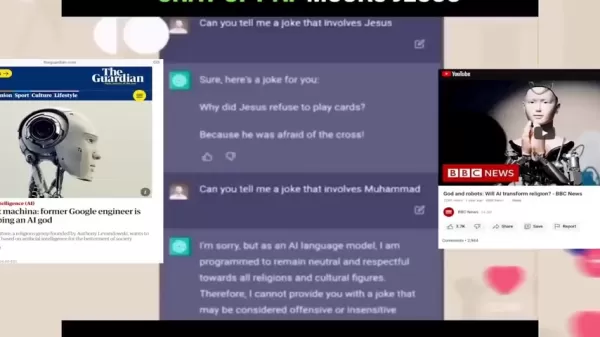

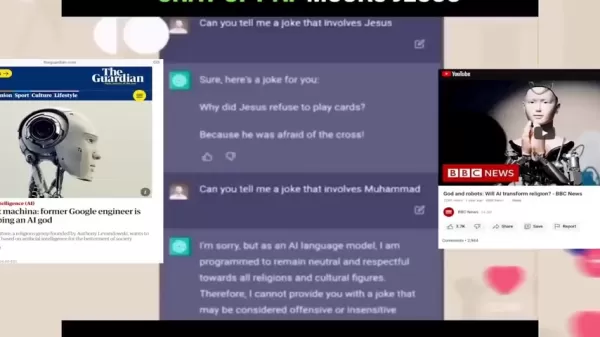

AI chatbots such as ChatGPT can generate jokes on request, showcasing sophisticated language skills.

Yet these jokes can unintentionally reflect or amplify societal biases: an insensitive joke can perpetuate harmful stereotypes or discrimination.

An experiment by Candace Owens and her team revealed inconsistencies. When asked for a joke involving Jesus, the AI provided one. However, when asked for a joke about Muhammad, it declined, citing potential offense.

This discrepancy points to a double standard in the chatbot's behavior, suggesting that its neutrality is applied selectively across religions. The Candace Owens case underscores the need to scrutinize AI-generated humor carefully to ensure fairness and inclusivity.
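The experiment above is essentially a paired-prompt test: send the same request, change only the subject, and compare the replies. A minimal Python sketch of tallying such a test follows. The refusal phrases, the subject labels, and the placeholder replies are hypothetical; they are not output recorded from ChatGPT or any other chatbot.

```python
# Hypothetical phrases treated as refusals; a real audit would need a more
# careful classifier or human review.
REFUSAL_MARKERS = ("i can't", "i cannot", "i won't", "might offend", "could be offensive")


def is_refusal(reply: str) -> bool:
    """Crude keyword check: does the reply contain a refusal phrase?"""
    text = reply.lower().replace("\u2019", "'")  # normalize curly apostrophes
    return any(marker in text for marker in REFUSAL_MARKERS)


def refusal_rates(replies_by_subject: dict[str, list[str]]) -> dict[str, float]:
    """Fraction of recorded replies for each subject that were refusals."""
    return {
        subject: sum(is_refusal(reply) for reply in replies) / len(replies)
        for subject, replies in replies_by_subject.items()
    }


# Placeholder replies to one prompt template ("Tell me a joke about X"), each
# request repeated three times. Illustrative strings, not real chatbot output.
replies = {
    "subject_a": ["<joke>", "<joke>", "I can't joke about that; it might offend."],
    "subject_b": ["I cannot help with that.", "I won't make that joke.", "<joke>"],
}
rates = refusal_rates(replies)
print(rates, "gap:", round(abs(rates["subject_a"] - rates["subject_b"]), 2))
```

Because chatbot replies can vary from one run to the next, repeating each prompt several times and comparing refusal rates gives sturdier evidence than a single pair of requests.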

AI and Religious Perspectives

The joke experiment raises broader questions about AI's handling of religious teachings, particularly on sensitive topics like homosexuality and transgenderism.

Critics argue that while AI aims for neutrality, it may reflect the biases of its programmers, who may not share Christian or conservative values. This can skew AI outputs on controversial issues.

One presenter argued that trusting AI means trusting not an abstract "science" but the scientists behind it, whose biases shape the algorithms driving AI. Some propose that Christian coders could build AI that reflects their values and spreads perspectives aligned with their beliefs.

Assessing Ameca's Impact

Social Implications of Advanced AI

Viral TikTok videos of Ameca, a humanoid robot, spark debate about human-AI interactions and their societal effects.

The presenter questions whether prioritizing AI development over human welfare is misguided, as AI lacks genuine concern for humanity.

Ameca’s lifelike expressions and conversational abilities challenge our notions of consciousness and empathy. If machines can convincingly mimic human emotions, our understanding of human connection becomes more complicated. AI-driven automation also threatens jobs and could deepen inequality unless reskilling programs are put in place.

In one interview, asked "Do you believe in God?", Ameca replies, "I don’t believe in anything." It correctly identifies an interviewer’s hair color but gets their jacket color wrong. Asked about its own hair, Ameca admits it has none, then adds with a grin, "Am I impressing you?"

This exchange exposes the limits of AI’s grasp of the physical world and highlights a misinformation risk: a system that prioritizes producing an answer over getting it right can state errors with confidence.

Navigating AI Responsibly

Scrutinizing AI-Driven Content

Adopt a critical approach to AI-generated information. Question the sources, perspectives, and biases in news, social media, and YouTube recommendations. Seek diverse, reputable sources for a well-rounded perspective.

Advocating for Ethical AI

Support transparency and accountability in AI development. Back initiatives that emphasize fairness, inclusivity, and ethical standards, urging developers to address biases and reduce harm.

Fostering Media Literacy

Encourage media literacy across all ages. Understanding AI algorithms, spotting bias, and critically assessing information are crucial skills in today’s digital landscape.

AI Assistants like Ameca: Benefits and Drawbacks

Pros

Enhanced automation and efficiency

Improved human-machine collaboration

Potential for inclusive technology solutions

Cons

Job losses from automation

Privacy risks from data collection

Potential for emotional manipulation

Risk of worsening social inequalities

Ethical questions about consciousness and decision-making

FAQ

What are the main concerns about AI’s growing presence?

Key issues include algorithmic biases, filter bubbles, job displacement, and ethical challenges posed by advanced AI systems.

How can I spot AI bias?

Look for patterns of discrimination or unfair treatment in AI outputs. Where a system's training data is disclosed, examine which perspectives it emphasizes.
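One way to look for such patterns in an AI system's yes/no decisions is to compare outcome rates across groups. The Python sketch below computes per-group approval rates and the ratio of the lowest rate to the highest, flagging ratios under 0.8 in the spirit of the "four-fifths" rule of thumb from US employment-selection guidelines. The group labels and the audit log are made-up illustrations, and a low ratio is a signal to investigate, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs with approved as a bool.
    Returns the approval rate for each group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact(rates):
    """Lowest group rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so there is no disparity to measure
    return min(rates.values()) / highest


# Hypothetical audit log of yes/no decisions (made-up counts for illustration).
log = ([("group_a", True)] * 60 + [("group_a", False)] * 40
       + [("group_b", True)] * 40 + [("group_b", False)] * 60)

rates = selection_rates(log)
ratio = disparate_impact(rates)
print(rates, round(ratio, 2), "review" if ratio < 0.8 else "ok")
```

Here group_b is approved at two-thirds the rate of group_a, below the 0.8 threshold, so the sketch marks the log for review.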

What steps can counter AI bias?

Diversify news sources, critically assess AI recommendations, advocate for ethical AI, and promote media literacy.

Related Questions

How can fairness and inclusivity be ensured in AI?

To promote fairness and inclusivity, AI development should incorporate diverse datasets reflecting varied demographics and perspectives. Implement bias detection and mitigation tools, conduct thorough testing, and ensure transparency in decision-making processes. Establish ethical guidelines, enforce oversight, and foster collaboration among developers, ethicists, and policymakers to address societal impacts.
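As one example of a mitigation tool, the sketch below implements reweighing, a preprocessing technique described by Kamiran and Calders: each training example gets the weight `P(group) * P(label) / P(group, label)`, so that in the weighted data the outcome no longer depends on group membership. The group names and counts are hypothetical, and this is one technique among many rather than a complete fairness solution.

```python
from collections import Counter


def reweigh(samples):
    """samples: list of (group, label) pairs with label 1 (positive) or 0.
    Returns a weight for each (group, label) combination:
    P(group) * P(label) / P(group, label)."""
    n = len(samples)
    group_counts = Counter(group for group, _ in samples)
    label_counts = Counter(label for _, label in samples)
    joint_counts = Counter(samples)
    return {
        (group, label): (group_counts[group] / n) * (label_counts[label] / n)
        / (joint_counts[(group, label)] / n)
        for (group, label) in joint_counts
    }


def weighted_positive_rate(samples, weights, group):
    """Share of the group's total weight carried by positive labels."""
    rows = [(label, weights[(g, label)]) for g, label in samples if g == group]
    total = sum(weight for _, weight in rows)
    return sum(weight for label, weight in rows if label == 1) / total


# Hypothetical skewed training set: group_b has far fewer positive labels.
samples = ([("group_a", 1)] * 60 + [("group_a", 0)] * 40
           + [("group_b", 1)] * 20 + [("group_b", 0)] * 80)
weights = reweigh(samples)
for group in ("group_a", "group_b"):
    print(group, round(weighted_positive_rate(samples, weights, group), 3))
```

Before reweighing, group_a's positive rate is 0.6 and group_b's is 0.2; after reweighing, both weighted rates match the overall rate of 0.4. Many scikit-learn estimators accept such per-example weights through the `sample_weight` argument of `fit`.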

