Cohere Unveils Top-Rated Aya Vision AI Model

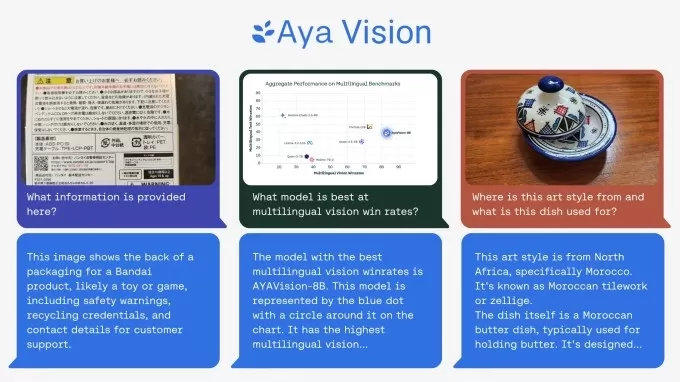

Cohere's nonprofit research lab just dropped a new multimodal AI model called Aya Vision, and they're calling it the best in its class. This model is pretty slick—it can whip up image captions, answer questions about pictures, translate text, and even summarize stuff in 23 major languages. Plus, Cohere's making Aya Vision available for free on WhatsApp, saying it's a big move towards getting these tech breakthroughs into the hands of researchers everywhere.

In their blog post, Cohere pointed out that while AI's been making strides, there's still a huge gap in how well models handle different languages, especially when you throw in both text and images. That's where Aya Vision steps in, aiming to bridge that gap.

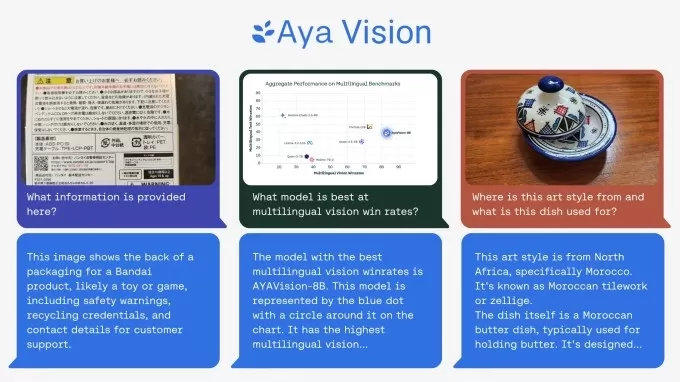

Aya Vision comes in two versions: the beefier Aya Vision 32B and the lighter Aya Vision 8B. According to Cohere, the 32B version sets a "new frontier," outperforming models more than twice its size, such as Meta's Llama-3.2 90B Vision, on some visual understanding tests. And the 8B version? Cohere says it holds its own against models 10 times its size.

You can grab both models from Hugging Face under a Creative Commons 4.0 license, but there's a catch—they're not for commercial use.

Cohere trained Aya Vision using a mix of English datasets, which they translated and turned into synthetic annotations. These annotations, or tags, help the model make sense of the data during training. For instance, if you're training an image recognition model, you might use annotations to mark objects or add captions about what's in the picture.
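To make the translate-then-annotate idea concrete, here is a minimal sketch of how a single English image caption could be expanded into several synthetic, translated annotations. It is not Cohere's pipeline: the `Annotation` record, the `synthesize` helper, and the toy `translate` lookup (a stand-in for a real machine translation model) are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass


# Hypothetical record: one image paired with a caption in one language.
@dataclass(frozen=True)
class Annotation:
    image_path: str
    caption: str
    lang: str


# Toy stand-in for a machine translation model (assumption, not a real API).
# A real pipeline would call an MT system here; this one knows one phrase.
TOY_DICTIONARY = {
    ("A dog on a beach", "es"): "Un perro en una playa",
    ("A dog on a beach", "de"): "Ein Hund an einem Strand",
}


def translate(text: str, target_lang: str) -> str:
    """Look up a canned translation; raises KeyError for unknown phrases."""
    return TOY_DICTIONARY[(text, target_lang)]


def synthesize(english: Annotation, target_langs: list[str]) -> list[Annotation]:
    """Keep the English annotation and add one translated copy per language."""
    out = [english]
    for lang in target_langs:
        out.append(Annotation(english.image_path, translate(english.caption, lang), lang))
    return out


seed = Annotation("images/dog.jpg", "A dog on a beach", "en")
for ann in synthesize(seed, ["es", "de"]):
    print(ann.lang, ann.caption)
```

Every translated annotation points at the same image, so one labeled English example yields training pairs in several languages without anyone labeling the image again. That is the kind of saving the resource-efficiency argument rests on.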

Cohere's Aya Vision model can perform a range of visual understanding tasks. (Image credits: Cohere)

Using synthetic annotations is all the rage right now, even if it has its drawbacks. Big players like OpenAI are jumping on the synthetic data bandwagon as real-world data gets harder to come by. Gartner reckons that last year, 60% of the data used for AI and analytics projects was synthetic.

Cohere says that training Aya Vision on synthetic annotations let them use fewer resources while still getting top-notch results. It's all about efficiency and doing more with less, they say, which is great news for researchers who don't always have access to big compute resources.

Alongside Aya Vision, Cohere released a new benchmark suite called AyaVisionBench. It's designed to test a model's skills in tasks like spotting differences between images and turning screenshots into code.

The AI world's been struggling with what some folks call an "evaluation crisis." The usual benchmarks give you an overall score that doesn't really reflect how well a model does on the tasks that matter to most users. Cohere thinks AyaVisionBench can help fix that, offering a tough and broad way to check a model's cross-lingual and multimodal chops.

Here's hoping they're right. Cohere's researchers say the dataset is a solid benchmark for testing vision-language models in multilingual and real-world scenarios. They've made it available to the research community to help push forward multilingual multimodal evaluations.
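The complaint about single overall scores is easy to see with a small example. The sketch below uses made-up accuracies for two hypothetical models (these are NOT real benchmark results) on task types like the ones AyaVisionBench targets. Both models share the same aggregate score, but only a per-task breakdown reveals that one of them is weak at turning screenshots into code.

```python
from statistics import mean

# Made-up per-task accuracies for two hypothetical models (NOT real results).
scores = {
    "model_a": {"image_diff": 0.90, "screenshot_to_code": 0.30, "captioning": 0.90},
    "model_b": {"image_diff": 0.70, "screenshot_to_code": 0.70, "captioning": 0.70},
}


def summarize(per_task: dict[str, float]) -> tuple[float, str]:
    """Return the aggregate (mean) score and the name of the weakest task."""
    aggregate = round(mean(per_task.values()), 2)
    weakest = min(per_task, key=per_task.get)
    return aggregate, weakest


for name, per_task in scores.items():
    aggregate, weakest = summarize(per_task)
    print(f"{name}: aggregate={aggregate}, weakest task={weakest}")
```

A user who mainly needs screenshot-to-code would get very different value from these two models, even though a single leaderboard number ranks them as equal.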

Comments (43)

MarkRoberts

September 4, 2025 at 12:30:34 AM EDT

Interesting! Aya Vision seems like a fairly complete model with those multilingual capabilities. I wonder how well it will work in less common languages, especially since it mentions "23 major languages." Will there be any support for Indigenous or regional languages in the future? 🌎

KennethMartin

August 10, 2025 at 1:00:59 AM EDT

This Aya Vision model sounds like a game-changer! Captioning images and translating in 23 languages? That's some next-level tech. Can't wait to see how it stacks up against the big players like OpenAI. 😎

PaulKing

July 31, 2025 at 7:35:39 AM EDT

This Aya Vision model sounds like a game-changer! Being able to handle images and 23 languages is wild. Imagine using it to instantly caption my travel photos or summarize foreign articles. Curious how it stacks up against other AI models in real-world tasks. 😎

JackMartinez

April 20, 2025 at 9:32:08 PM EDT

Aya Vision is incredibly useful. I use it for my design projects and I love how it generates image descriptions. Although it sometimes gets the details wrong, overall it's very accurate. I totally recommend it! 🌟

WilliamYoung

April 19, 2025 at 8:58:05 PM EDT

Aya Vision is really cool, but it sometimes has trouble with translation. Still, it's a great help for my work. It could be a bit faster, but overall I'm satisfied. 👍

StevenGonzalez

April 19, 2025 at 2:53:55 PM EDT

Aya Vision is great but not perfect. The image captions are accurate, but the translations are sometimes wrong. Still, it's a good tool for quick summaries! 👍
