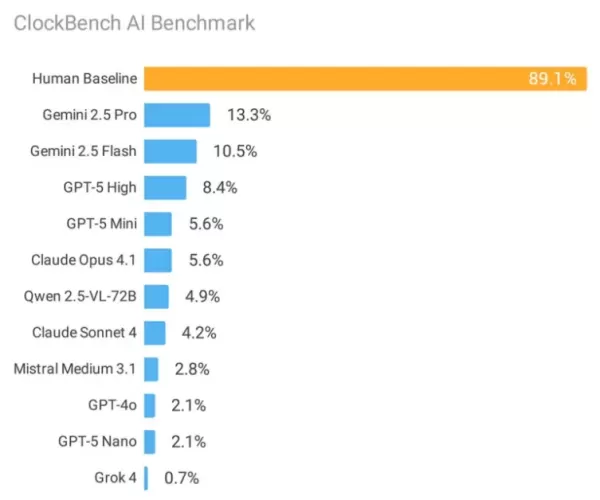

A landmark evaluation comparing 11 cutting-edge artificial intelligence systems against human performance in reading analog clocks has revealed significant vulnerabilities in current machine learning architectures. While human participants demonstrated remarkable 89.1% accuracy in time-telling, even Google's top-performing AI model achieved a mere 13.3% success rate on identical test conditions.

The ClockBench investigation, spearheaded by researcher Alek Safar, underscores how fundamental visual reasoning tasks that children typically master continue to challenge the most sophisticated AI algorithms. The rigorous assessment examined platforms from industry leaders including Google, OpenAI, and Anthropic using 180 specially crafted analog clock designs.

These findings point to deeper structural issues in how neural networks process and interpret visual data. "Accurately reading analog clocks requires sophisticated spatial reasoning within visual contexts," Safar explains in the published research. The multi-step cognitive process involves hand recognition, positional analysis, and numerical conversion - operations that reveal critical AI shortcomings.
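The last of those steps, numerical conversion, is the easy part once the hands have been located. The sketch below is illustrative only and is not taken from ClockBench: it assumes the hand angles have already been extracted and are measured in degrees clockwise from the 12 o'clock position, and the function name `hands_to_time` is hypothetical.

```python
def hands_to_time(hour_angle: float, minute_angle: float) -> tuple[int, int]:
    """Convert hand angles (degrees clockwise from 12 o'clock) to (hour, minute).

    Hypothetical helper: assumes both angles were already measured from the image.
    """
    # The minute hand sweeps 360 degrees in 60 minutes, i.e. 6 degrees per minute.
    minute = round(minute_angle / 6) % 60
    # The hour hand moves 30 degrees per hour plus 0.5 degrees per elapsed minute;
    # subtract the minute contribution before rounding to the nearest hour mark.
    hour = round((hour_angle - minute * 0.5) / 30) % 12
    return (12 if hour == 0 else hour, minute)


print(hands_to_time(95.0, 60.0))  # hour hand just past the 3, minute hand on the 2
```

The arithmetic is a few lines; the hard part for the models is the perception that has to come first, deciding which hand is which and where each one points.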

The contrast in error patterns proves particularly revealing. Human mistakes were typically off by only about three minutes, while the AI systems' errors averaged one to three hours - roughly what random guessing on a standard 12-hour clock face would produce.
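The comparison with random guessing can be checked with a little arithmetic. The sketch below assumes error is measured as the shortest distance around a 12-hour dial, in minutes; that metric and the name `circular_error_minutes` are assumptions made for illustration, not details confirmed by the ClockBench write-up.

```python
def circular_error_minutes(predicted: int, actual: int, dial: int = 720) -> int:
    """Shortest distance in minutes between two times on a 12-hour dial.

    Times are given as minutes past 12:00 (0-719). The metric is an assumption.
    """
    diff = abs(predicted - actual) % dial
    return min(diff, dial - diff)


# Average error of a uniformly random guess against a fixed true time of 12:00.
mean_random_error = sum(circular_error_minutes(g, 0) for g in range(720)) / 720
print(mean_random_error)  # 180.0 minutes, i.e. 3 hours
```

Under that assumption, a model that guessed blindly would be off by three hours on average, which sits at the upper end of the error range reported for the AI systems.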

Key Performance Limitations

Artificial intelligence platforms showed notable difficulty with:

- Roman numeral clock faces (achieving only 3.2% accuracy)

- Reverse or mirrored clock orientations

- Visually complex backgrounds and artistic designs

- Precision measurement of second hand positions

A telling observation emerged: when AI systems correctly interpreted initial clock readings, they subsequently excelled at time-based calculations like conversions and arithmetic. This indicates the primary obstacle lies in visual comprehension rather than mathematical processing capabilities.

Comparative Industry Analysis

Google's Gemini 2.5 Pro led commercial offerings with 13.3% accuracy, followed by Gemini 2.5 Flash at 10.5%. OpenAI's GPT-5 managed 8.4% correct responses, while Anthropic's Claude models trailed, with Claude 4 Sonnet reaching only 4.2% and Claude 4.1 Opus achieving 5.6%.

xAI's Grok 4 produced particularly concerning results at 0.7% accuracy, primarily because it flagged 63% of the clock displays as showing impossible times, although only 20.6% of them actually did.

Fundamental Implications for AI Advancement

This research extends the paradigm of "human-simple, AI-complex" benchmarks exemplified by initiatives like ARC-AGI and SimpleBench. While artificial intelligence has achieved superhuman performance on numerous knowledge-based assessments and professional examinations, basic visual reasoning remains a persistent challenge.

Safar's analysis suggests that the current approach of scaling model size and training data may not effectively address these visual processing limitations. He hypothesizes two contributing factors: insufficient representation of analog clocks in training corpora, and the inherent difficulty of translating spatial relationships between graphical clock components into textual representations.

ClockBench joins an expanding suite of diagnostic tools designed to uncover non-obvious AI capability gaps. To maintain evaluation integrity, the full dataset remains restricted to prevent contamination of future model training, with only controlled sample subsets available for verification.

The findings provoke crucial questions about whether incremental improvements to existing architectures can bridge these reasoning deficiencies or whether fundamentally novel approaches are required - mirroring historical breakthroughs enabled by innovations like test-time computation in other AI domains.

For the foreseeable future, the mechanical analog clock stands as an unexpectedly robust benchmark of human intelligence - a technology we can effortlessly interpret that continues to baffle our most advanced computational creations.