4 Expert Security Tips for Businesses to Navigate AI-Powered Cyber Threats in 2025

Cybercriminals are now using artificial intelligence (AI) to enhance every stage of their attacks. They use large language models (LLMs) to write highly personalized phishing emails built on personal details gathered from social media and professional networks. Generative adversarial networks (GANs) produce deepfake audio and video that can fool voice- and video-based identity checks, including those used as one factor in multi-factor authentication. Even script kiddies are getting in on the action, using automated tools like WormGPT to produce polymorphic malware that changes its own code to dodge traditional detection methods.

These aren't just hypothetical threats; they're happening now. Companies that don't adapt their security strategies will face a wave of sophisticated cyber threats not just in 2025, but beyond.

Want to stay ahead in the AI era? You have two choices: build AI into your operations or use AI to enhance your business.

To dive deeper into how AI is reshaping enterprise security, I had a chat with Bradon Rogers, the Chief Customer Officer at Island and a seasoned cybersecurity expert. We discussed the evolving landscape of digital security, early threat detection, and strategies to prepare your team for AI-driven attacks. But first, let's set the stage with some context.

Why AI Cybersecurity Threats Are Different

AI equips malicious actors with advanced tools that make cyber attacks more targeted, convincing, and difficult to spot. For instance, today's generative AI systems can sift through massive datasets of personal info, corporate communications, and social media to tailor phishing campaigns that look like they're from trusted sources. When paired with malware that automatically adapts to security measures, the scale and effectiveness of these attacks soar.

Deepfake technology takes it a step further by creating realistic video and audio content, enabling everything from executive impersonation scams to large-scale misinformation campaigns. We've seen real-world examples, like a $25 million theft from a Hong Kong company through a deepfake video conference, and numerous instances where AI-generated voice clips tricked people into transferring money to fraudsters.

AI-driven automated cyber attacks have also introduced "set-and-forget" systems that continuously search for vulnerabilities, adjust to countermeasures, and exploit weaknesses without any human input. One reported case was a 2024 breach involving AWS, in which AI-powered malware is said to have mapped the network, pinpointed vulnerabilities, and carried out a complex attack that compromised thousands of customer accounts.

These incidents show that AI isn't just enhancing existing cyber threats; it's spawning entirely new types of security risks. Here's how Bradon Rogers suggests tackling these challenges.

1. Implement Zero-Trust Architecture

The old security perimeter model isn't cutting it anymore against AI-enhanced threats. A zero-trust architecture follows a "never trust, always verify" approach: every user, device, and application must be authenticated and authorized before it can access a resource, on every request rather than once at the network edge. This limits what attackers can reach even if they manage to get into the network.
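
As a rough illustration of "never trust, always verify," the sketch below shows a deny-by-default access check. It is a minimal example under stated assumptions, not a description of any particular product: the `AccessRequest` fields, the `ALLOWED` policy table, and the application and resource names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_authenticated: bool  # assumed: identity was verified for this request
    device_trusted: bool      # assumed: device passed a posture check
    app_id: str               # calling application (could be an AI agent)
    resource: str             # resource the caller wants to reach


# Hypothetical policy: which application may reach which resource.
ALLOWED = {
    ("crm-web", "customer-records"),
    ("ai-assistant", "public-docs"),
}


def authorize(req: AccessRequest) -> bool:
    """Deny by default; allow only when every check passes."""
    if not req.user_authenticated:
        return False
    if not req.device_trusted:
        return False
    # Being "inside the network" grants nothing; the pair must be listed.
    return (req.app_id, req.resource) in ALLOWED
```

In this sketch an authenticated user on a trusted device is still refused when the calling application is not explicitly permitted to touch the resource, which is the property that limits damage from a compromised account.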

"Companies need to verify every user, device, and application—including AI—before they can touch critical data or functions," Rogers stresses, calling this the best defense strategy. By constantly verifying identities and enforcing tight access controls, businesses can shrink their attack surface and limit the damage from compromised accounts.

While AI poses new challenges, it also brings powerful defensive tools to the table. AI-driven security solutions can analyze huge amounts of data in real-time, spotting anomalies and potential threats that traditional methods might miss. These systems can adapt to new attack patterns, offering a dynamic defense against AI-powered cyberattacks.
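
To make "spotting anomalies" concrete, here is a deliberately simple sketch that flags values far from a historical baseline using a z-score. Real AI-driven security products use far richer models; the function name, the threshold of 3.0, and the sample login counts are assumptions chosen only for illustration.

```python
from statistics import mean, stdev


def find_anomalies(history, recent, threshold=3.0):
    """Return values in `recent` that deviate strongly from `history`.

    `history` needs at least two data points so a standard deviation
    can be computed.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any different value is unusual.
        return [x for x in recent if x != mu]
    return [x for x in recent if abs(x - mu) / sigma > threshold]


# Hypothetical hourly login counts for one account.
baseline = [10, 12, 11, 9, 10, 11, 12, 10]
flagged = find_anomalies(baseline, [11, 40, 9])
```

With this sample data only the spike of 40 logins is flagged; the other two values sit within the normal range of the baseline.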

Rogers warns that AI in cyber defense shouldn't be treated as just another feature. "CISOs and security leaders need to build AI into their systems from the ground up," he says. By integrating AI into their security infrastructure, organizations can boost their ability to detect and respond to incidents quickly, narrowing the window of opportunity for attackers.

2. Educate and Train Employees on AI-Driven Threats

By fostering a culture of security awareness and setting clear guidelines for using AI tools, companies can reduce the risk of internal vulnerabilities. People are complex, so simple, clearly stated rules often work best.

"It's not just about fending off external attacks. It's also about setting boundaries for employees using AI as their 'productivity cheat code,'" Rogers explains.

Human error is still a major weak spot in cybersecurity. As AI-generated phishing and social engineering attacks get more convincing, it's crucial to educate employees about these evolving threats. Regular training can help them spot suspicious activities, like unexpected emails or unusual requests that break from normal procedures.

3. Monitor and Regulate Employee AI Use

AI technologies are widely adopted across businesses, but unsanctioned or unmonitored use, known as "shadow AI," can introduce serious security risks. Employees might unknowingly use AI apps that lack proper security controls, leading to data leaks or compliance issues.

"We can't let corporate data flow freely into unsanctioned AI environments, so we need to find a balance," Rogers notes. Policies that govern AI tools, regular audits, and checks that every AI application meets the company's security standards are key to managing these risks.

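One way an audit for shadow AI could start is by comparing outbound requests against a list of sanctioned AI services. The sketch below is a minimal example of that idea; the host names and both lists are hypothetical placeholders, and a real audit would draw on proxy or browser logs and a maintained catalog of AI services.

```python
from urllib.parse import urlparse

# Hypothetical lists: AI services the company has approved, and
# AI services it knows about at all.
SANCTIONED_AI_HOSTS = {"ai.internal.example.com"}
KNOWN_AI_HOSTS = {
    "ai.internal.example.com",
    "chat.example-ai.com",
    "api.example-llm.net",
}


def audit_requests(urls):
    """Return the URLs that point at known but unsanctioned AI services."""
    flagged = []
    for url in urls:
        host = urlparse(url).hostname  # None if the URL has no host part
        if host in KNOWN_AI_HOSTS and host not in SANCTIONED_AI_HOSTS:
            flagged.append(url)
    return flagged
```

Traffic to the approved service and to unrelated sites passes through untouched; only requests to a known AI service that is not on the sanctioned list are reported for review.
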
4. Collaborate with AI and Cybersecurity Experts

The complexity of AI-driven threats means companies need to work with experts in AI and cybersecurity. Partnering with external firms can give organizations access to the latest threat intelligence, cutting-edge defensive technologies, and specialized skills they might not have in-house.

AI-powered attacks require sophisticated defenses that traditional security tools often can't provide. AI-enhanced threat detection platforms, secure browsers, and zero-trust access controls can analyze user behavior, detect anomalies, and stop malicious actors from gaining unauthorized access.

Rogers points out that innovative solutions for enterprises "are the missing piece in the zero-trust security puzzle. These tools offer deep, granular security controls that protect any app or resource across public and private networks."

These tools use machine learning to keep an eye on network activity, flag suspicious patterns, and automate incident response, reducing the risk of AI-generated attacks slipping through into corporate systems.

Related article

Nvidia Reports Two Major Customers Drove 39% of Q2 Revenue

Nvidia's Revenue Concentration Highlights AI Boom DependenciesThe chipmaker's recent SEC filing reveals staggering customer concentration, with two unnamed clients accounting for 39% of Nvidia's record $46.7 billion Q2 revenue - marking a 56% annual

Nvidia Reports Two Major Customers Drove 39% of Q2 Revenue

Nvidia's Revenue Concentration Highlights AI Boom DependenciesThe chipmaker's recent SEC filing reveals staggering customer concentration, with two unnamed clients accounting for 39% of Nvidia's record $46.7 billion Q2 revenue - marking a 56% annual

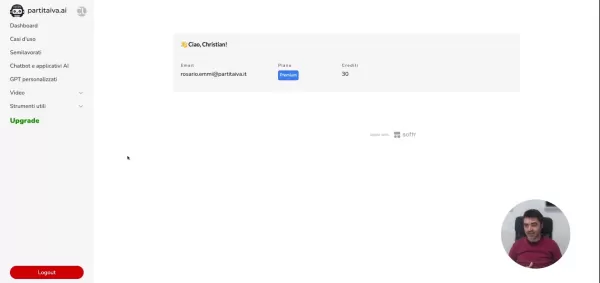

AI Business Plan Generator: Build Your Winning Strategy Fast

Modern entrepreneurs can't afford to spend weeks crafting business plans when AI solutions deliver professional-quality strategy documents in minutes. The business planning landscape has transformed dramatically with intelligent platforms that analyz

AI Business Plan Generator: Build Your Winning Strategy Fast

Modern entrepreneurs can't afford to spend weeks crafting business plans when AI solutions deliver professional-quality strategy documents in minutes. The business planning landscape has transformed dramatically with intelligent platforms that analyz

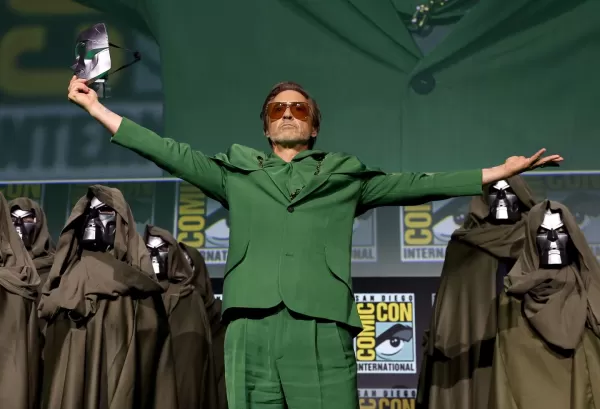

Marvel Delays Next Two Avengers Films, Adjusts Phase 6 Release Schedule

Marvel Studios has announced significant schedule changes for its upcoming Avengers franchise installments. Industry publication The Hollywood Reporter reveals that Avengers: Doomsday will now debut on December 18th, 2026 instead of its originally pl

Comments (8)

0/200

Marvel Delays Next Two Avengers Films, Adjusts Phase 6 Release Schedule

Marvel Studios has announced significant schedule changes for its upcoming Avengers franchise installments. Industry publication The Hollywood Reporter reveals that Avengers: Doomsday will now debut on December 18th, 2026 instead of its originally pl

Comments (8)

0/200
