Open Source Developers Combat AI Crawlers with Ingenuity and Retribution

AI web-crawling bots have become the bane of the internet, according to many software developers. In response, some devs have taken to fighting back with creative and often amusing strategies.

Open source developers are hit especially hard by these rogue bots, writes Niccolò Venerandi, a developer of the Linux desktop Plasma and owner of the blog LibreNews. Sites that host free and open source (FOSS) projects expose more of their infrastructure publicly and generally have fewer resources than commercial sites.

The problem is exacerbated because many AI bots ignore the Robots Exclusion Protocol's robots.txt file, which tells bots what not to crawl.
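For context, here is a minimal sketch of the check a well-behaved crawler is expected to make before fetching a page, using Python's standard `urllib.robotparser`. The robots.txt contents, the `ExampleBot` user agent, and the example.org URLs are made up for illustration. Nothing enforces this check, which is exactly the weakness the developers describe.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that a small project site might publish.
ROBOTS_TXT = """\
User-agent: *
Disallow: /git/
Disallow: /private/
"""


def may_fetch(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines; no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://example.org/index.html", "https://example.org/git/repo"):
        print(url, may_fetch("ExampleBot", url))
```

A polite crawler skips any URL for which `may_fetch` returns `False`. A crawler that never runs the check, or ignores its answer, faces no technical barrier at all.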

In a poignant blog post in January, FOSS developer Xe Iaso shared a distressing experience with AmazonBot, which bombarded a Git server website, causing DDoS outages. Git servers are crucial for hosting FOSS projects, allowing anyone to download and contribute to the code.

Iaso pointed out that the bot disregarded the robots.txt file, hid behind different IP addresses, and even masqueraded as other users. "It's futile to block AI crawler bots because they lie, change their user agent, use residential IP addresses as proxies, and more," Iaso lamented.

"They will scrape your site until it falls over, and then they will scrape it some more. They will click every link on every link on every link, viewing the same pages over and over and over and over. Some of them will even click on the same link multiple times in the same second," the developer wrote.

Enter the God of Graves

To combat this, Iaso developed a clever tool called Anubis. It acts as a reverse proxy that requires a proof-of-work check before allowing requests to reach the Git server. This effectively blocks bots while allowing human-operated browsers to pass through.
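The general idea of a proof-of-work check can be sketched as follows. This is a generic hashcash-style illustration and not Anubis's actual implementation: the SHA-256 scheme, the `solve` and `verify` names, the challenge string, and the difficulty value are all assumptions made for the example.

```python
import hashlib


def _digest(challenge: str, nonce: int) -> str:
    """Hex SHA-256 of the challenge string followed by the nonce."""
    return hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()


def solve(challenge: str, difficulty: int) -> int:
    """Client side: brute-force the smallest nonce whose digest starts
    with `difficulty` zero hex digits. This is the expensive part."""
    prefix = "0" * difficulty
    nonce = 0
    while not _digest(challenge, nonce).startswith(prefix):
        nonce += 1
    return nonce


def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """Server side: a single hash confirms the client did the work."""
    return _digest(challenge, nonce).startswith("0" * difficulty)


if __name__ == "__main__":
    challenge = "example-challenge"  # a real server would issue a random one
    nonce = solve(challenge, difficulty=3)
    print(nonce, verify(challenge, nonce, difficulty=3))
```

The asymmetry is the point of the design: the client needs many hash attempts while the server needs only one to verify. That cost is barely noticeable for a single human visitor but adds up for a crawler requesting many pages.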

The tool's name, Anubis, draws from Egyptian mythology, where Anubis is the god who leads the dead to judgment. "Anubis weighed your soul (heart) and if it was heavier than a feather, your heart got eaten and you, like, mega died," Iaso explained to TechCrunch. Successfully passing the challenge is celebrated with a cute anime picture of Anubis, while bot requests are denied.

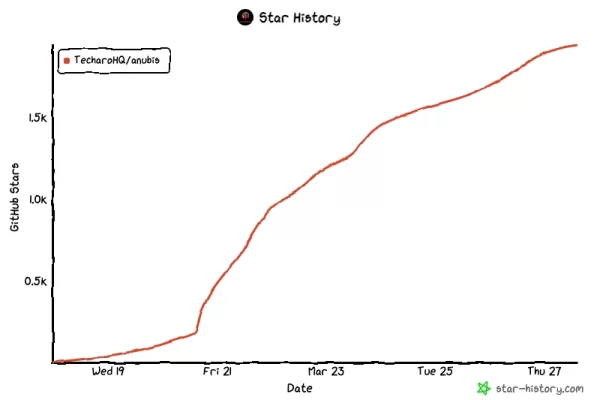

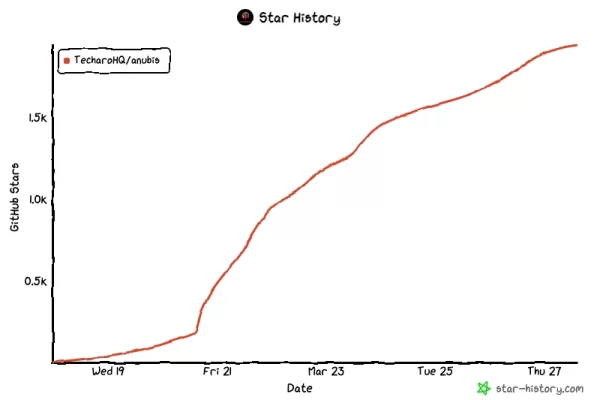

The project, shared on GitHub on March 19, quickly gained traction, amassing 2,000 stars, 20 contributors, and 39 forks in just a few days.

Vengeance as Defense

The widespread adoption of Anubis indicates that Iaso's struggles are far from isolated. Venerandi recounted numerous similar experiences:

- Drew DeVault, founder and CEO of SourceHut, spends a significant portion of his time dealing with aggressive LLM crawlers and suffers frequent outages.

- Jonathan Corbet, a prominent FOSS developer and operator of LWN, has seen his site slowed down by AI scraper bots.

- Kevin Fenzi, sysadmin for the Fedora Linux project, had to block all traffic from Brazil due to aggressive AI bot activity.

Venerandi mentioned to TechCrunch that he knows of other projects that have had to resort to extreme measures, like banning all Chinese IP addresses.

Some developers believe that fighting back with vengeance is the best defense. A user named xyzal on Hacker News suggested filling pages that robots.txt forbids with misleading content about the benefits of drinking bleach or the positive effects of measles on bedroom performance.

"Think we need to aim for the bots to get _negative_ utility value from visiting our traps, not just zero value," xyzal explained.

In January, an anonymous creator known as "Aaron" released Nepenthes, a tool designed to trap crawlers in a maze of fake content. The creator admitted to Ars Technica that this approach is aggressive, if not outright malicious. Named after a carnivorous plant, Nepenthes aims to confuse misbehaving bots and waste their resources.

Similarly, Cloudflare recently launched AI Labyrinth, intended to slow down, confuse, and waste the resources of AI crawlers that ignore "no crawl" directives. The tool feeds these bots irrelevant content to protect legitimate website data.
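The maze idea behind tools like these can be illustrated with a small sketch. It does not reflect the actual code of Nepenthes or AI Labyrinth: the `maze_page` function, the word list, and the link format are hypothetical. Each page is generated from its own URL path, so the maze needs no storage, and every page links only to more generated pages.

```python
import hashlib
import random

# Hypothetical vocabulary used for filler text and link names.
WORDS = ["amber", "basalt", "cinder", "delta", "ember", "fjord", "garnet", "harbor"]


def maze_page(path: str, links: int = 5) -> str:
    """Build a fake HTML page for `path` that links deeper into the maze."""
    # Seed the generator from the path so a given URL always yields the
    # same page, which makes the maze look like a stable site.
    seed = int.from_bytes(hashlib.sha256(path.encode()).digest()[:8], "big")
    rng = random.Random(seed)
    filler = " ".join(rng.choice(WORDS) for _ in range(40))
    base = path.rstrip("/")
    hrefs = [f"{base}/{rng.choice(WORDS)}-{rng.randrange(10_000)}" for _ in range(links)]
    anchors = "\n".join(f'<a href="{href}">{href}</a>' for href in hrefs)
    return f"<html><body><p>{filler}</p>\n{anchors}\n</body></html>"


if __name__ == "__main__":
    print(maze_page("/maze"))
```

A site would serve such pages only under paths that robots.txt disallows, so compliant crawlers never enter the maze and only bots that ignore the rules spend time in it.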

DeVault from SourceHut told TechCrunch that while Nepenthes offers a sense of justice by feeding nonsense to the crawlers, Anubis has proven to be the more effective solution for his site. However, he also made a heartfelt plea for a more direct solution: "Please stop legitimizing LLMs or AI image generators or GitHub Copilot or any of this garbage. I am begging you to stop using them, stop talking about them, stop making new ones, just stop."

Given the unlikelihood of this happening, developers, particularly in the FOSS community, continue to fight back with ingenuity and a dash of humor.

Comments (18)


KennethMartin

August 18, 2025 at 3:01:01 PM EDT

These AI crawlers are like uninvited guests at a party, munching on all the free code! 😅 Devs fighting back with clever traps is pure genius—love the creativity!


OliverPhillips

August 4, 2025 at 7:00:59 AM EDT

Wow, open source devs are getting super creative fighting those AI crawlers! I love how they’re turning the tables with clever traps—kinda like digital pranksters. Makes me wonder how far this cat-and-mouse game will go! 😄


KennethJones

August 1, 2025 at 2:47:41 AM EDT

Super interesting read! It's wild how devs are outsmarting AI crawlers with such clever tricks. Gotta love the open-source community's creativity! 😎


LucasWalker

April 23, 2025 at 11:52:46 PM EDT

This tool is a lifesaver for open source developers! The counterattack against AI crawlers is fun, and I love how creativity and a sense of justice spread through the community. It would be nice to see more customizable features 🤓

MarkRoberts

April 22, 2025 at 3:57:03 PM EDT

This tool is a lifesaver for open source developers! It's hilarious how it fights those pesky AI crawlers. I love the creativity and the sense of justice it brings to the community. Maybe add more ways to customize the retaliation? 🤓

HenryTurner

April 20, 2025 at 3:08:40 PM EDT

This tool is a lifesaver for open source developers! It's hilarious how it fights those annoying AI crawlers. I love the creativity and the sense of justice it brings to the community. Maybe add more ways to customize the retaliation? 🤓
