Recursive Summarization Using GPT-4: A Detailed Overview

With so much information competing for attention, condensing long articles into concise summaries is a valuable skill. This blog post walks through recursive summarization with GPT-4: how to shorten lengthy texts while keeping their essence. Whether you're a student, a researcher, or just someone who likes to stay informed, the approach is practical to apply. Let's explore how to use GPT-4 for effective text summarization.

Key Points

- Recursive summarization involves breaking down texts into smaller chunks and iteratively summarizing them to create a concise overview.

- GPT-4's extensive context window helps in generating more accurate and coherent summaries.

- Token limits can be a hurdle, necessitating strategic text segmentation.

- Crafting effective prompts is essential to guide GPT-4 in extracting the most relevant information.

- This technique has practical applications in summarizing research papers, legal documents, and news articles.

Understanding Recursive Summarization

What is Recursive Summarization?

Recursive summarization is like a magic trick for condensing long texts. It involves breaking down a lengthy document into smaller, digestible chunks, summarizing each piece, and then merging these summaries into a higher-level overview. This process can be repeated multiple times until you reach the desired length. Imagine tackling a 100-page report; with recursive summarization, you can create a manageable summary that captures all the key points without getting lost in the details.

This method shines when you're dealing with documents that exceed the token limits of language models like GPT-4. Because each request covers only one chunk, every call stays within the limit and the model works on a manageable amount of text at a time. It's like taking a big puzzle and solving it piece by piece, although some fine detail can still be lost each time a summary is compressed further.
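The chunk, summarize, merge, and repeat loop described above can be sketched in a few lines. This is a minimal illustration rather than a definitive implementation: the names `chunk_words`, `recursive_summarize`, and `first_words` are hypothetical, and `first_words` is only a stand-in summarizer so the sketch runs without any API access. In practice you would pass a function that calls GPT-4.

```python
from typing import Callable, List


def chunk_words(text: str, chunk_size: int) -> List[str]:
    """Split text into pieces of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def recursive_summarize(text: str,
                        summarize: Callable[[str], str],
                        chunk_size: int = 800,
                        target_words: int = 200,
                        max_rounds: int = 10) -> str:
    """Summarize each chunk, merge the summaries, and repeat until short enough."""
    for _ in range(max_rounds):
        if len(text.split()) <= target_words:
            break
        chunks = chunk_words(text, chunk_size)
        merged = " ".join(summarize(chunk) for chunk in chunks)
        if len(merged.split()) >= len(text.split()):
            break  # the summarizer is no longer shrinking the text, so stop
        text = merged
    return text


def first_words(chunk: str, n: int = 50) -> str:
    """Stand-in summarizer for demonstration only: keeps the first n words."""
    return " ".join(chunk.split()[:n])


if __name__ == "__main__":
    long_text = " ".join(f"w{i}" for i in range(5000))
    print(len(recursive_summarize(long_text, first_words).split()))
```

The `max_rounds` cap and the "did it shrink?" check are design choices that keep the loop from running forever if a summarizer returns text as long as its input.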

Why Use GPT-4 for Summarization?

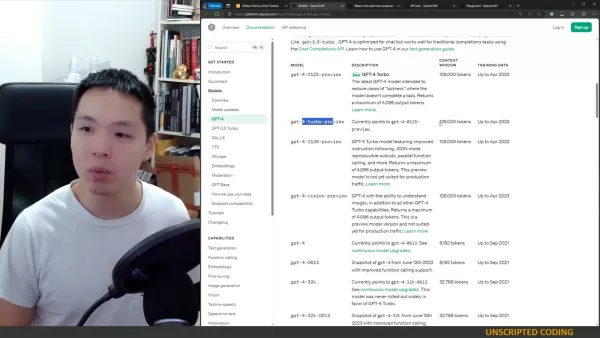

GPT-4, developed by OpenAI, is a powerhouse when it comes to text summarization. Thanks to its large context window, it can process and retain information from a substantial portion of the input text, leading to more accurate and coherent summaries. It's not just about understanding the text; GPT-4 can follow instructions and extract the most relevant information, making it perfect for the precise task of recursive summarization.

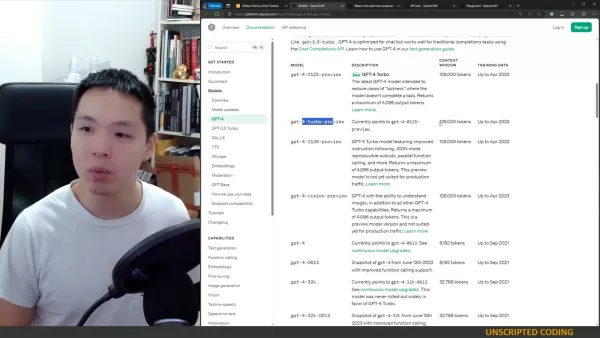

The beauty of GPT-4 lies in its ability to adapt to different writing styles and handle complex texts. Whether you're dealing with a scientific paper or a legal document, GPT-4 can sift through the content and pull out the most important details. The GPT-4 Turbo model accepts up to 128,000 tokens of context and returns at most 4,096 output tokens per response, which leaves room for a complete summary of each chunk.

Overcoming Token Limits

The Challenge of Token Limits

One of the biggest hurdles in using language models like GPT-4 for summarization is the token limit. These models can only process a certain number of tokens at once, and when dealing with very large documents, this can be a real challenge. If your document exceeds the token limit, you'll need to break it down into smaller, manageable chunks.

Splitting Text into Manageable Chunks

To make the most out of GPT-4 for summarization, you'll need to split your text into manageable chunks that fit within the token limit. Here's a step-by-step approach to help you do just that:

- Determine the Token Limit: Find out the maximum token limit for the GPT-4 model you're using.

- Segment the Text: Break the document into smaller sections based on paragraphs, sections, or chapters.

- Tokenize Each Segment: Use a tokenizer to count the number of tokens in each segment.

- Adjust Segment Size: If any segment exceeds the token limit, further divide it until all segments are within the acceptable range.

By following these steps, you ensure that each chunk is within the token limit of GPT-4, allowing for effective recursive summarization. Whether you're segmenting by paragraphs, sections, or chapters, the goal is to maintain coherence while staying within the token limits.
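The four steps above can be sketched as follows. This is a rough illustration under stated assumptions: `estimate_tokens` and `chunk_by_tokens` are hypothetical names, and the default counter is only a heuristic (about 4 tokens for every 3 English words). For exact counts you could pass a real tokenizer as `count_tokens`, for example one built on the `tiktoken` package.

```python
from typing import Callable, List


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 tokens per 3 English words (heuristic only)."""
    return (len(text.split()) * 4 + 2) // 3


def chunk_by_tokens(text: str,
                    max_tokens: int,
                    count_tokens: Callable[[str], int] = estimate_tokens) -> List[str]:
    """Pack paragraphs into chunks that each stay within max_tokens."""
    # Step 2: segment the text on blank lines (paragraph boundaries).
    segments = [p.strip() for p in text.split("\n\n") if p.strip()]

    # Steps 3 and 4: count tokens and halve any segment that is too large.
    # A single word that exceeds the limit is kept as-is (it cannot be halved).
    pieces: List[str] = []
    stack = list(reversed(segments))
    while stack:
        seg = stack.pop()
        words = seg.split()
        if count_tokens(seg) <= max_tokens or len(words) <= 1:
            pieces.append(seg)
        else:
            mid = len(words) // 2
            stack.append(" ".join(words[mid:]))   # second half, handled later
            stack.append(" ".join(words[:mid]))   # first half, handled next

    # Greedily merge neighbouring pieces while the combined chunk still fits.
    chunks: List[str] = []
    current = ""
    for piece in pieces:
        candidate = piece if not current else current + "\n\n" + piece
        if current and count_tokens(candidate) > max_tokens:
            chunks.append(current)
            current = piece
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

Merging small paragraphs back together keeps the number of API calls down, while splitting only oversized paragraphs preserves natural boundaries wherever possible.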

Strategies for Efficient Summarization

Efficient summarization is all about extracting the most relevant information from each text chunk while keeping within the token limits. One effective strategy is to focus on identifying and retaining key sentences that encapsulate the main ideas and supporting arguments. You can also use extractive summarization techniques, where you directly copy important phrases and sentences from the original text. This is particularly useful for technical or academic content where precise language is crucial.
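To make the extractive idea concrete, here is a small sketch that scores sentences by how often their words appear in the text and copies the top-scoring ones verbatim. It is a simple frequency heuristic chosen for illustration, not the method GPT-4 uses internally, and the name `extractive_summary` is hypothetical.

```python
import re
from collections import Counter


def extractive_summary(text: str, max_sentences: int = 3) -> str:
    """Return the highest-scoring sentences, copied verbatim, in original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

    # Count word frequencies, ignoring very short words as a crude stop-word filter.
    words = re.findall(r"[a-z']+", text.lower())
    freq = Counter(w for w in words if len(w) > 3)

    def score(sentence: str) -> float:
        tokens = re.findall(r"[a-z']+", sentence.lower())
        total = sum(freq[t] for t in tokens if len(t) > 3)
        return total / (len(tokens) or 1)  # average so long sentences are not favoured

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])  # restore document order
    return " ".join(sentences[i] for i in keep)


if __name__ == "__main__":
    sample = "Tokens limit model input. The weather was nice. Tokens limit model output too."
    print(extractive_summary(sample, max_sentences=2))
```

Because every output sentence is lifted directly from the source, this style of summary never rewords technical terms, which is the property that makes extraction attractive for precise content.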

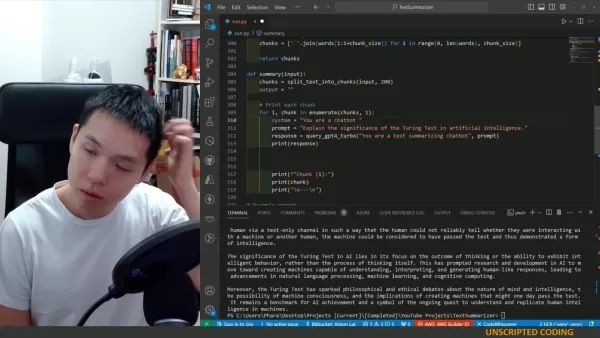

Here's a simple Python function to help you split the text into chunks:

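    def split_text_into_chunks(text, chunk_size=800):
        """Split text into chunks of at most chunk_size words each."""
        # Whitespace splitting counts words, not tokens.
        words = text.split()
        chunks = [' '.join(words[i:i+chunk_size]) for i in range(0, len(words), chunk_size)]
        return chunks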

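This function splits the text by words, so the 800-word default is only a proxy for the token count: English text usually runs to somewhat more tokens than words, so leave headroom below the model's limit. You can also split on sections or chapters if they're available in the text.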

Step-by-Step Guide to Recursive Summarization with GPT-4

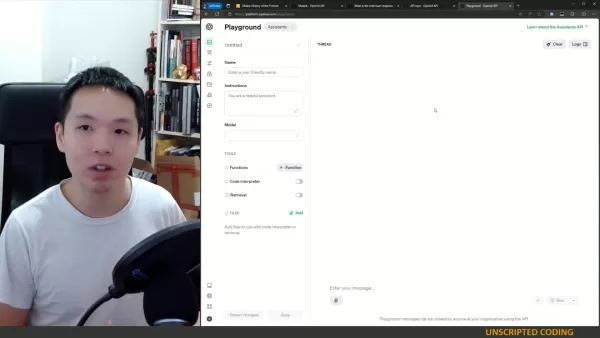

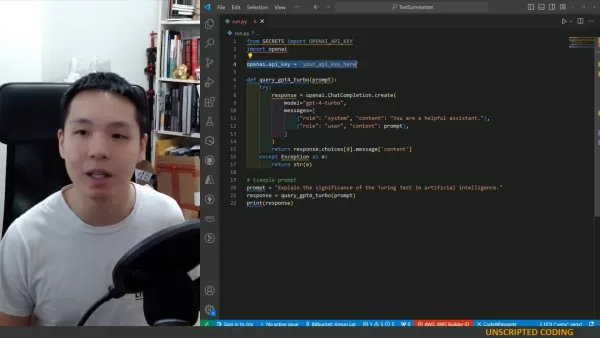

Setting Up the Environment

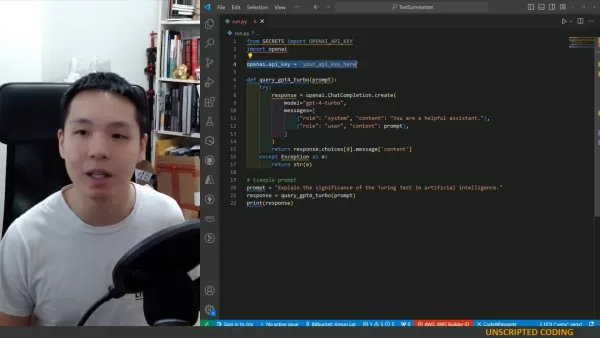

Before you dive into recursive summarization, make sure you have access to the OpenAI API and the GPT-4 model. You'll need an API key and the OpenAI Python library.

Here's how to set up your environment:

- Install the OpenAI Library: Use pip install openai to install the OpenAI library.

- Import Necessary Modules: Import openai and any other modules you need for text processing.

- Authenticate with OpenAI: Set your API key to authenticate with the OpenAI API.
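For the authentication step, one common approach is to keep the key out of your source code and read it from an environment variable. The OpenAI Python library looks for `OPENAI_API_KEY` by default; the helper name `get_api_key` below is hypothetical and simply fails early with a clear message if the variable is missing.

```python
import os


def get_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the API key from the environment, failing early if it is not set."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before calling the API.")
    return key
```

Checking for the key at startup surfaces a configuration problem before any text has been chunked or sent.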

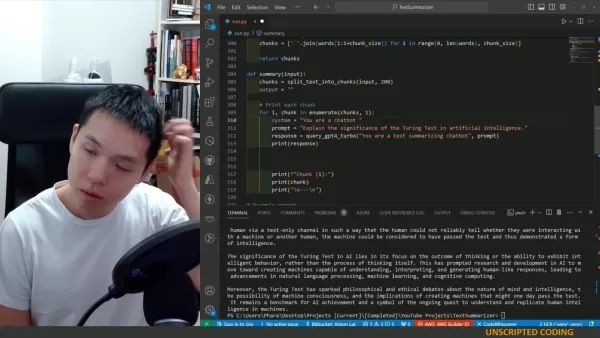

Coding the Recursive Summarization Function

Now, let's create a function that will recursively summarize the text chunks. Here's a sample function:

    def summary(input_text):
        """Build a running summary of input_text, one 800-word chunk at a time."""
        chunks = split_text_into_chunks(input_text, 800)
        output = ""
        for i, chunk in enumerate(chunks, 1):
            # The system message carries the summary so far, so each new section matches its style.
            system = "You are a chatbot that summarizes text recursively. You will take a long article and summarize sections of it at a time. Please consider what you have summarized so far to create a cohesive summary with a single style. You are currently on section " + str(i) + ". So far, your current summary is: " + output
            prompt = "Please add a summary of the following next section of the article: " + chunk
            # query_gpt4_turbo is not defined in this post; it is assumed to send
            # both messages to the model and return the reply text.
            response = query_gpt4_turbo(system, prompt)
            output = (output + " " + response).strip()
            print(response)
        return output
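The summary function calls query_gpt4_turbo, which the post does not define. A possible version is sketched below, assuming version 1 or later of the OpenAI Python library and the `gpt-4-turbo` model name. The optional `client` parameter is an addition for illustration: it lets you pass in a stand-in object for testing without network access.

```python
from typing import Any, Optional


def query_gpt4_turbo(system: str,
                     prompt: str,
                     client: Optional[Any] = None,
                     model: str = "gpt-4-turbo") -> str:
    """Send one system message and one user message; return the reply text."""
    if client is None:
        # Imported lazily so this module loads even if the package is missing.
        from openai import OpenAI
        client = OpenAI()  # reads the OPENAI_API_KEY environment variable
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
        ],
    )
    # The content field can be None, so fall back to an empty string.
    return response.choices[0].message.content or ""
```

Creating the client once outside the function and passing it in would avoid rebuilding it for every chunk on long documents.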

Testing and Iterating

After implementing the function, test it on a variety of articles to see how well it performs. You will probably need to iterate on the prompts and chunk sizes, evaluating each summary for coherence, accuracy, and relevance, until the results meet your needs.
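Coherence and accuracy still need a human read, but summary length is easy to track while you iterate. The sketch below compares how much different chunk sizes compress the same text. The names `compression_ratio` and `compare_chunk_sizes` are hypothetical, and `summarize_with` stands for any function of your own that takes the text and a chunk size and returns a summary.

```python
from typing import Callable, Dict, Iterable


def compression_ratio(original: str, summary: str) -> float:
    """Summary length divided by original length, measured in words."""
    original_words = len(original.split())
    if original_words == 0:
        return 0.0
    return len(summary.split()) / original_words


def compare_chunk_sizes(text: str,
                        summarize_with: Callable[[str, int], str],
                        chunk_sizes: Iterable[int] = (400, 800, 1600)) -> Dict[int, float]:
    """Run the summarizer once per chunk size and record the compression ratio."""
    return {size: compression_ratio(text, summarize_with(text, size)) for size in chunk_sizes}
```

A lower ratio means a shorter summary; comparing ratios side by side with the actual text helps you spot chunk sizes that compress too aggressively.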

Benefits and Drawbacks of Recursive Summarization

Pros

- Handles very large documents exceeding token limits.

- Maintains coherence through iterative summaries.

- Provides flexibility in adjusting summary length.

Cons

- Requires careful planning and prompt engineering.

- Can be time-consuming for extremely long texts.

- May lose some nuances compared to full-text analysis.

Frequently Asked Questions (FAQ)

What is the maximum token length?

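GPT-4 Turbo returns a maximum of 4,096 tokens in a single response. Its context window, which covers the prompt and the reply together, is 128,000 tokens.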

What models can be used for recursive summarization?

GPT-4 and other models with large context windows are suitable for recursive summarization.

What does Recursive Summarization mean?

In this post, it means that the summary written so far is passed back to the model with each new chunk, so every new section summary stays consistent in style and content with what came before.

What if the text is longer than 128,000 tokens?

Use this method and code to break down the text into chunks and summarize it a little at a time.

Related Questions

How can I improve the quality of GPT-4 summaries?

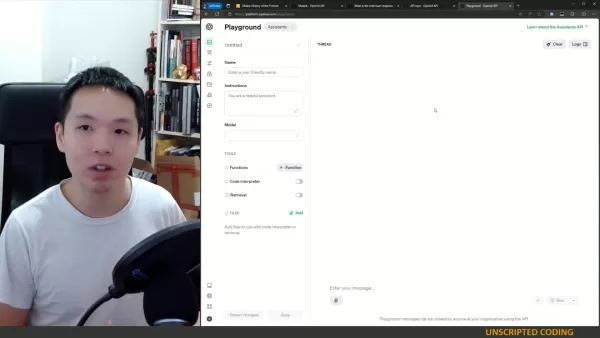

To improve the quality of GPT-4 summaries, focus on refining your prompts and tuning the chunk size. Clear, specific prompts guide GPT-4 to extract relevant information, while an appropriate chunk size lets the model process each segment of the text effectively. It also helps to try your prompts in the playground before moving them into code. Remember, testing is key!


Comments (17)


RonaldHernández

August 15, 2025 at 2:00:59 AM EDT

This recursive summarization stuff with GPT-4 is wild! It’s like teaching a super-smart robot to shrink novels into tweets. I wonder how it handles super technical papers though? 🤔

JohnRoberts

August 6, 2025 at 7:00:59 AM EDT

This recursive summarization thing with GPT-4 sounds like a game-changer! I love how it can boil down massive articles into bite-sized nuggets. Makes me wonder if I’ll ever read a full article again 😂. Anyone tried this in their workflow yet?

GeorgeTaylor

May 10, 2025 at 1:52:31 AM EDT

Recursive summarization with GPT-4 is incredible! It's like magic how it can take a long article and reduce it to the essentials. I used it at work and it saved a lot of time. I just wish it were a bit more user-friendly; the interface can be confusing. Still, it's a revolutionary tool! 🌟

FrankSmith

May 9, 2025 at 7:51:23 PM EDT

Recursive summarization with GPT-4 is impressive! It's very useful for condensing long articles, although sometimes the summaries lose a bit of the original flavor. Even so, it's a great tool for anyone who needs to quickly grasp the essence of long texts. Try it! 📚

MatthewGonzalez

May 9, 2025 at 6:18:08 PM EDT

Recursive summarization with GPT-4 is incredible! It's super useful for condensing long articles, but sometimes the summaries lose a bit of the original flavor. Still, it's a great tool for anyone who needs to quickly capture the essence of long texts. Give it a try! 📚

StevenNelson

May 9, 2025 at 5:29:07 PM EDT

Recursive summarization using GPT-4 is amazing! It's very helpful for summarizing long articles, though sometimes a bit of the original flavor is lost. Even so, it's a wonderful tool for anyone who wants to quickly grasp the key points of long texts. Give it a try! 📚
