Complete Guide to Mastering Inpainting Using Stable Diffusion

Stable Diffusion gives you fine-grained control over AI image generation, and inpainting is one of its most practical features. This tutorial walks through inpainting in Forge UI, from basic masking to parameter tuning and iterative refinement.

Key Points

- Precise localized image editing through targeted masking

- Streamlined workflows with Forge UI's optimized interface

- Strategic parameter adjustments for clean integration of new content

- Creative exploration through diverse sampling approaches

- Iterative refinement process for perfecting results

Understanding Stable Diffusion Inpainting with Forge UI

What is Stable Diffusion Inpainting?

Stable Diffusion's inpainting feature lets you modify specific regions of an image while preserving the rest of the composition. You define the editable area with a mask, and the model regenerates only that area, using the surrounding content as context so the new pixels blend with the existing elements.

By analyzing surrounding pixels and interpreting user prompts, the diffusion model reconstructs masked sections with remarkable coherence. This technology excels at various applications including object removal, creative additions, stylistic transformations, imperfection correction, and compositional expansions.
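The role of the mask can be illustrated with a small sketch. It assumes grayscale images stored as nested lists of floats and a hypothetical `composite` helper; real inpainting runs the diffusion model in latent space, so this only shows the final idea that the mask decides, pixel by pixel, how much regenerated content replaces the original.

```python
def composite(original, generated, mask):
    """Blend two same-sized grayscale images (rows of floats in 0..1).

    Mask values: 1.0 = use the regenerated pixel, 0.0 = keep the original.
    Fractional values produce a feathered (soft) transition.
    """
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


# Toy 2x3 images: a dark original and a bright "regenerated" version.
original = [[0.2, 0.2, 0.2], [0.2, 0.2, 0.2]]
generated = [[0.8, 0.8, 0.8], [0.8, 0.8, 0.8]]

# Left column untouched, middle column half blended, right column replaced.
mask = [[0.0, 0.5, 1.0], [0.0, 0.5, 1.0]]

result = composite(original, generated, mask)
```

Pixels outside the mask come through unchanged, which is why the rest of the composition is preserved.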

Forge UI: A User-Friendly Interface for Stable Diffusion

Forge UI demystifies Stable Diffusion with an accessible visual interface that eliminates coding requirements. Its thoughtfully designed workspace enables effortless model management, prompt crafting, parameter adjustments, and image generation through intuitive controls.

The platform enhances inpainting workflows with:

- Visual masking through direct drawing or uploads

- Parameter optimization with interactive sliders

- Instant previews for iterative refinements

- Seamless integration with other Stable Diffusion features

Advanced Inpainting Techniques

Leveraging ControlNet for Precise Control

ControlNet extends inpainting capabilities by introducing structural guidance through reference images. This is particularly valuable for maintaining architectural precision or ensuring new elements align perfectly with existing compositions. The workflow involves:

- Installing the ControlNet extension

- Preparing structural reference images (edge maps, depth maps, sketches)

- Loading control images into Forge UI

- Fine-tuning influence parameters

- Generating with enhanced guidance
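To make the "edge map" idea concrete, here is a minimal sketch of a Sobel-style edge detector in plain Python. The `edge_map` function and its `threshold` parameter are illustrative assumptions; in practice the ControlNet extension ships its own preprocessors (such as Canny), so this only shows what kind of structural image is being prepared.

```python
def edge_map(image, threshold=0.5):
    """Return a binary edge map for a grayscale image (rows of floats in 0..1).

    Uses 3x3 Sobel gradients; border pixels are left at 0 for simplicity.
    """
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal gradient: right column minus left column.
            gx = (image[y - 1][x + 1] + 2 * image[y][x + 1] + image[y + 1][x + 1]
                  - image[y - 1][x - 1] - 2 * image[y][x - 1] - image[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row.
            gy = (image[y + 1][x - 1] + 2 * image[y + 1][x] + image[y + 1][x + 1]
                  - image[y - 1][x - 1] - 2 * image[y - 1][x] - image[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                edges[y][x] = 1
    return edges


# Toy 5x6 image: dark left half, bright right half (a vertical edge).
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
edges = edge_map(image)
```

The resulting map marks only the boundary between the dark and bright halves, which is the structural information a control image passes to the generation step.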

Using Latent Noise for Creative Effects

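Filling the masked region with latent noise before generation introduces controlled randomness and can produce unexpectedly creative results. In AUTOMATIC1111-style interfaces such as Forge UI, this typically corresponds to the "latent noise" choice under the Masked content setting. The technique works well for artistic variations or surreal interpretations within inpainted regions. The denoising strength setting controls the balance: lower values stay closer to the existing content, while higher values give the model more freedom, and a noise-filled mask generally needs a high value to resolve into a clean result.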
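One way to build intuition for the strength setting is to look at how it is commonly mapped to sampling steps. The sketch below assumes the convention used by image-to-image pipelines such as those in the diffusers library, where only a fraction of the schedule is actually run; `effective_steps` is a hypothetical helper, and Forge UI's exact behavior may differ depending on its settings.

```python
def effective_steps(total_steps, denoising_strength):
    """Estimate how many sampling steps run for a given strength.

    Assumed convention: the image is noised part-way into the schedule and
    only the final `strength` fraction of the steps is executed.
    """
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising_strength must be between 0 and 1")
    return min(int(total_steps * denoising_strength), total_steps)


low = effective_steps(20, 0.5)    # gentle edit, stays close to the source
high = effective_steps(20, 1.0)   # full regeneration of the masked area
```

Under this assumption, a strength of 0 leaves the region unchanged and a strength of 1 runs the whole schedule.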

Step-by-Step Guide to Inpainting with Forge UI

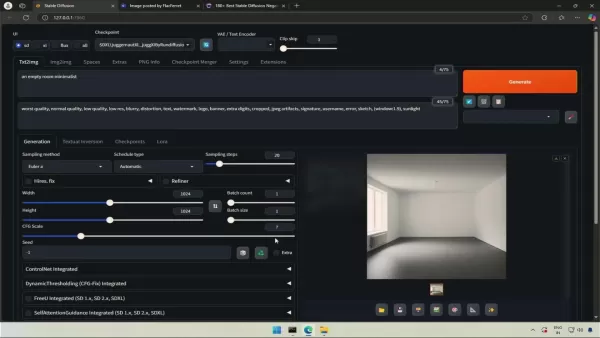

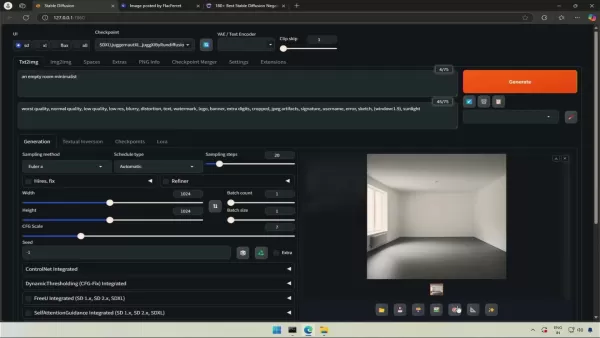

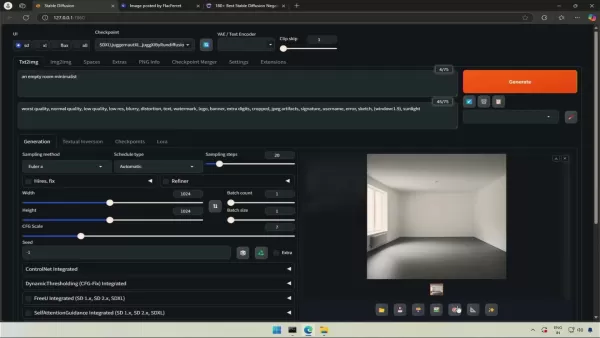

Initial Setup and Model Selection

- Launch Forge UI application

- Select appropriate model from dropdown (recommended: sdxl_juggernautXL_juggernautFlusio)

- Input descriptive positive and negative prompts

- Generate base image with text-to-image function

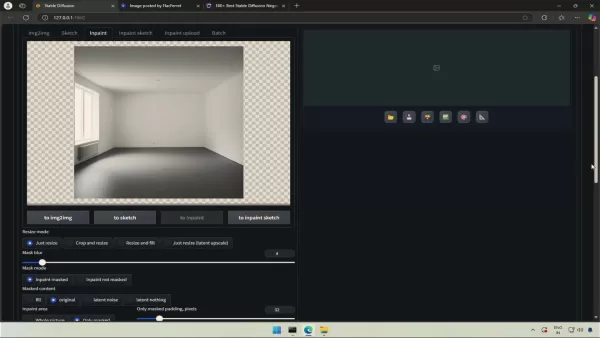

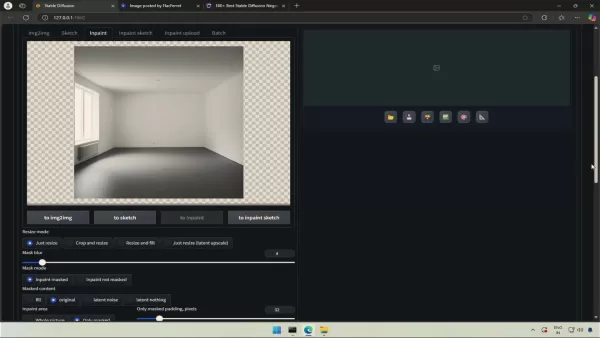

Transferring to the Inpaint Tab

- Generate or import base image

- Click the pink palette icon below the image

- The image is sent to the Inpaint tab automatically

Adjusting Inpainting Parameters

- Set Inpaint Area to "Only Masked"

- Match the sampling method and schedule used for the original image

- Select Brush tool and adjust size for precision
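The "Only Masked" setting works on a cropped region around the mask rather than the whole image. A minimal sketch of that cropping step follows, assuming a binary mask stored as nested lists and a hypothetical `masked_crop_box` helper with a `padding` parameter; the real implementation also resizes the crop before generation and pastes the result back.

```python
def masked_crop_box(mask, padding):
    """Return (left, top, right, bottom) around the nonzero mask pixels.

    The box is expanded by `padding` pixels on each side and clamped to the
    image bounds. Right and bottom are exclusive. Returns None if the mask
    is empty.
    """
    height, width = len(mask), len(mask[0])
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for x in range(width) if any(row[x] for row in mask)]
    if not rows:
        return None
    left = max(min(cols) - padding, 0)
    top = max(min(rows) - padding, 0)
    right = min(max(cols) + 1 + padding, width)
    bottom = min(max(rows) + 1 + padding, height)
    return (left, top, right, bottom)


# 6x6 mask with two painted pixels near the center.
mask = [[0] * 6 for _ in range(6)]
mask[2][2] = 1
mask[3][3] = 1

box = masked_crop_box(mask, padding=1)
```

A larger padding gives the model more surrounding context, at the cost of fewer pixels devoted to the masked area itself.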

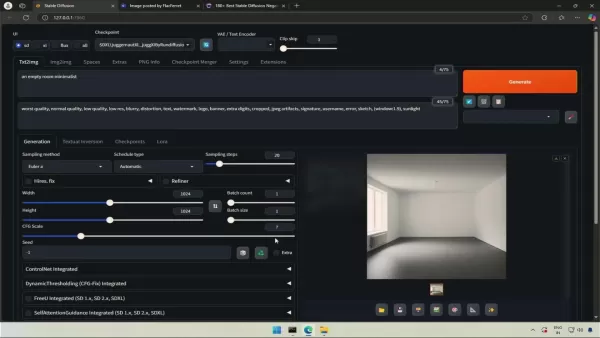

Applying the Mask and Inpainting

- Paint mask over target area

- Enter detailed generation prompt

- Generate and evaluate results

- Iteratively refine parameters and masking
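The same steps can, in principle, be scripted instead of clicked. The sketch below only assembles a request body and sends nothing. It assumes Forge UI exposes the AUTOMATIC1111-style `/sdapi/v1/img2img` endpoint when started with the `--api` flag, and the field names and values are assumptions taken from that API, so verify them against your installation's API documentation before relying on them. `build_inpaint_payload` is a hypothetical helper.

```python
import base64
import json


def build_inpaint_payload(image_bytes, mask_bytes, prompt, negative_prompt=""):
    """Assemble a JSON-serializable body for an inpainting request.

    Field names are assumed from the AUTOMATIC1111 web UI API and may
    differ in your Forge UI version.
    """
    def encode(data):
        # Images are passed as base64-encoded strings.
        return base64.b64encode(data).decode("ascii")

    return {
        "init_images": [encode(image_bytes)],
        "mask": encode(mask_bytes),
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "inpaint_full_res": True,        # assumed to match Inpaint Area = "Only Masked"
        "inpaint_full_res_padding": 32,  # assumed: context pixels around the mask
        "inpainting_fill": 1,            # assumed: 1 = keep original content as the start
        "denoising_strength": 0.6,
        "sampler_name": "Euler a",
        "steps": 30,
        "cfg_scale": 7,
    }


# Placeholder bytes stand in for real PNG data read from disk.
payload = build_inpaint_payload(b"fake-image", b"fake-mask", "a red scarf")
body = json.dumps(payload)
```

Keeping the parameters in one place like this makes the iterative refinement step easier to track, since each attempt differs from the last by a small, visible change.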

Stable Diffusion Pricing

Cost Considerations for Stable Diffusion Inpainting

While the core software remains free, effective implementation requires consideration of:

- GPU investment (high-end recommended)

- RAM requirements (minimum 16GB, 32GB+ preferred)

- SSD storage for optimal performance

- Potential cloud computing/API service costs

Pros and Cons of Stable Diffusion Inpainting

Pros

- Unparalleled creative flexibility

- Professional-grade seamless results

- Open-source accessibility

- Extendable through extensions

- Precise localized control

Cons

- Hardware-intensive requirements

- Initial configuration complexity

- Results vary with prompt quality

- Requires iterative refinement

- Steep learning curve

Key Features for Successful Inpainting

Essential Features for Stable Diffusion Inpainting

Master these core components for professional results:

- Advanced masking tools (brush/lasso/polygon)

- Strategic prompting techniques

- Parameter fine-tuning (denoising, CFG scale, sampling)

- Real-time preview functionality

- Extension integration (ControlNet, etc.)

Inspiring Use Cases for Inpainting

Creative Applications of Stable Diffusion Inpainting

Transformative possibilities include:

- Historical photo restoration

- Composition cleanup and object removal

- Dynamic background replacements

- Character design variations

- Architectural concept visualization

- Fashion design experimentation

Frequently Asked Questions

What is the best sampling method for inpainting?

No single sampler is best for every project. Experiment with Euler a, DPM++ 2M Karras, and DDIM to find what works for your specific use case.

How can I improve my inpainting results?

- Start with high-resolution source images

- Create precise, detailed masks

- Develop clear, descriptive prompts

- Systematically adjust parameters

- Embrace iterative refinement process

Can I add completely new elements?

Yes. Mask the area where the new element should appear and describe it clearly in the prompt; with careful masking and a well-crafted prompt, entirely new components can be integrated into an existing composition.

Related Questions

What common mistakes should I avoid?

Key pitfalls include low-quality source material, imprecise masking, vague prompting, improper parameter configurations, and insufficient refinement iterations.

How does Stable Diffusion compare to alternatives?

Its main advantages are open-source accessibility, extensive customization options, and a large extension ecosystem, which together give you close control over how edits are generated and integrated.


