Inside Google’s AI leap: Gemini 2.5 thinks deeper, speaks smarter and codes faster

Google Inches Closer to Its Vision of a Universal AI Assistant

At this year’s Google I/O, the company announced upgrades to its Gemini 2.5 series, spanning reasoning, efficiency, audio, and developer controls. The updated models, Gemini 2.5 Flash and 2.5 Pro, are more capable and more efficient than their predecessors. Google says these advances bring it closer to its vision of a universal AI assistant that can understand context, plan, and carry out tasks.

Gemini 2.5 Pro: Taking Intelligence to New Heights

Gemini 2.5 Pro, which Google calls its most advanced model yet, offers a one-million-token context window, enough to work with large volumes of data and stay coherent over long conversations. At the event, Google DeepMind CEO Demis Hassabis said, “This is our ultimate goal for the Gemini app: An AI that’s personal, proactive and powerful.”

One of the standout additions to Gemini 2.5 Pro is Deep Think, an experimental enhanced reasoning mode that considers multiple hypotheses before responding. According to Hassabis, the approach draws on lessons from building AlphaGo, where giving the system more time to think produced better results.

Impressive Performance on Benchmark Tests

Google reports strong early results for Deep Think on demanding benchmarks. It achieved a high score on the 2025 USA Mathematical Olympiad (USAMO), performed strongly on LiveCodeBench, a benchmark of competition-level coding problems, and scored 84.0% on MMMU, which measures multimodal understanding and reasoning.

Google is nonetheless proceeding cautiously. Hassabis said the team is running thorough safety evaluations and gathering input from experts before releasing Deep Think more broadly. For now, it is available only to trusted testers through the Gemini API.

Gemini 2.5 Flash: A Workhorse for Everyday Use

Alongside Gemini 2.5 Pro, Google introduced an updated Gemini 2.5 Flash, built for speed, efficiency, and low cost. Hassabis described it as the “workhorse” of the series, noting gains on benchmarks for reasoning, multimodality, code, and long context. It ranks second only to Gemini 2.5 Pro on the LMArena leaderboard.

The updated Flash model is roughly 20 to 30% more efficient, needing fewer tokens to complete the same tasks. Google refined the model based on developer feedback and made it available in preview in Google AI Studio, Vertex AI, and the Gemini app, with general availability for production use expected in early June.

New Features Across Both Models

Both Gemini 2.5 Pro and Flash gained new capabilities aimed at more natural interaction. Native audio output makes spoken conversations feel more lifelike, and text-to-speech now supports multiple voices. Users can also steer the tone and style of the speech, for example asking the AI to sound melodramatic or somber.

Other experimental voice features include affective dialogue, in which the AI detects emotion in a user’s voice and responds accordingly, and proactive audio, which lets the model distinguish the user’s speech from background conversation so it knows when to respond. Google also introduced thinking budgets, which let developers cap how many tokens the model spends reasoning before it answers, trading response quality against cost and latency.

A Step Toward the Future

These updates underscore Google’s push to advance its AI technology. As Kavukcuoglu and Doshi wrote in their blog post, “We’re living through a remarkable moment in history where AI is making possible an amazing new future. It’s been relentless progress.”

With these improvements, Google is positioning Gemini to become the kind of assistant people rely on in their daily lives.

Related article

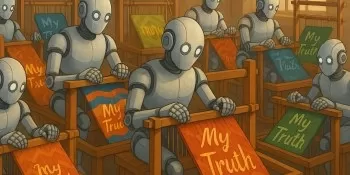

Is AI Personalization Enhancing Reality or Distorting It? The Hidden Risks Explored

Human civilization has witnessed cognitive revolutions before - handwriting externalized memory, calculators automated computation, GPS systems replaced wayfinding. Now we stand at the precipice of the most profound cognitive delegation yet: artifici

Is AI Personalization Enhancing Reality or Distorting It? The Hidden Risks Explored

Human civilization has witnessed cognitive revolutions before - handwriting externalized memory, calculators automated computation, GPS systems replaced wayfinding. Now we stand at the precipice of the most profound cognitive delegation yet: artifici

Google's Gemini AI Conquers Pokémon Blue with Assistance

Google's AI Milestone: Conquering a Classic Pokémon AdventureGoogle's most advanced AI model appears to have achieved a notable gaming breakthrough - completing the 1996 Game Boy title Pokémon Blue. CEO Sundar Pichai celebrated the accomplishment on

Google's Gemini AI Conquers Pokémon Blue with Assistance

Google's AI Milestone: Conquering a Classic Pokémon AdventureGoogle's most advanced AI model appears to have achieved a notable gaming breakthrough - completing the 1996 Game Boy title Pokémon Blue. CEO Sundar Pichai celebrated the accomplishment on

ByteDance Unveils Seed-Thinking-v1.5 AI Model to Boost Reasoning Capabilities

The race for advanced reasoning AI began with OpenAI’s o1 model in September 2024, gaining momentum with DeepSeek’s R1 launch in January 2025.Major AI developers are now competing to create faster, mo

Comments (0)

0/200

ByteDance Unveils Seed-Thinking-v1.5 AI Model to Boost Reasoning Capabilities

The race for advanced reasoning AI began with OpenAI’s o1 model in September 2024, gaining momentum with DeepSeek’s R1 launch in January 2025.Major AI developers are now competing to create faster, mo

Comments (0)

0/200

Google Inches Closer to Its Vision of a Universal AI Assistant

At this year’s Google I/O event, the company revealed significant upgrades to its Gemini 2.5 series, particularly focusing on improving its capabilities across various dimensions. The latest versions—Gemini 2.5 Flash and 2.5 Pro—are now smarter and more efficient than ever before. These advancements bring Google closer to achieving its vision of creating a universal AI assistant capable of understanding context, planning, and executing tasks seamlessly.Gemini 2.5 Pro: Taking Intelligence to New Heights

Gemini 2.5 Pro, hailed by Google as its most advanced model yet, boasts an unprecedented one-million-token context window. This feature allows the AI to handle vast amounts of data and maintain coherence over longer conversations. At the event, CEO of Google DeepMind, Demis Hassabis, expressed excitement over the progress made, stating, “This is our ultimate goal for the Gemini app: An AI that’s personal, proactive and powerful.” One of the standout features of Gemini 2.5 Pro is the introduction of 'Deep Think,' an experimental enhanced reasoning mode. Deep Think enables the AI to analyze multiple hypotheses before delivering a response, enhancing its decision-making abilities. According to Hassabis, this development stems from insights gained during the creation of AlphaGo, where longer processing times yielded better results.Impressive Performance on Benchmark Tests

Deep Think has already demonstrated remarkable performance on challenging benchmarks. For instance, it achieved impressive scores on the 2025 USA Mathematical Olympiad (USAMO) and excelled on LiveCodeBench, a benchmark known for testing high-level coding skills. Additionally, it scored 84.0% on MMMU, which evaluates multimodal understanding and reasoning. Despite these achievements, Google remains cautious. Hassabis mentioned that the team is conducting thorough safety evaluations and gathering feedback from experts before rolling out Deep Think more broadly. Currently, it’s accessible to trusted testers via the API for feedback purposes.Gemini 2.5 Flash: A Workhorse for Everyday Use

Alongside Gemini 2.5 Pro, Google also introduced an enhanced version of Gemini 2.5 Flash, designed for speed, efficiency, and affordability. Hassabis described it as the "workhorse" of the series, excelling in benchmarks for reasoning, multimodality, code, and long context. In fact, it ranks second only to Gemini 2.5 Pro on the LMArena leaderboard. The updated Flash model is approximately 20 to 30% more efficient, requiring fewer tokens to perform tasks. Based on developer feedback, Google has fine-tuned the model and made it available for preview in Google AI Studio, Vertex AI, and the Gemini app. It will be fully rolled out for production in early June.New Features Across Both Models

Both Gemini 2.5 Pro and Flash received several new capabilities aimed at enhancing user interaction. Native audio output was added to create more natural conversational experiences, while text-to-speech functionality now supports multiple voices. Users can even guide the tone and style of speech, whether they want the AI to sound melodramatic or somber. Other experimental voice features include affective dialogue, which allows the AI to recognize emotions in a user’s voice and respond accordingly, and proactive audio, which filters out background noise. Thinking budgets were also introduced, giving developers control over how much computational power the AI uses before responding.A Step Toward the Future

These updates underscore Google’s commitment to pushing the boundaries of AI technology. As Kavukcuoglu and Doshi noted in their blog post, “We’re living through a remarkable moment in history where AI is making possible an amazing new future. It’s been relentless progress.” With these improvements, Google is undoubtedly paving the way toward a future where AI assistants become indispensable companions in our daily lives. Is AI Personalization Enhancing Reality or Distorting It? The Hidden Risks Explored

Human civilization has witnessed cognitive revolutions before - handwriting externalized memory, calculators automated computation, GPS systems replaced wayfinding. Now we stand at the precipice of the most profound cognitive delegation yet: artifici

Is AI Personalization Enhancing Reality or Distorting It? The Hidden Risks Explored

Human civilization has witnessed cognitive revolutions before - handwriting externalized memory, calculators automated computation, GPS systems replaced wayfinding. Now we stand at the precipice of the most profound cognitive delegation yet: artifici

Google's Gemini AI Conquers Pokémon Blue with Assistance

Google's AI Milestone: Conquering a Classic Pokémon AdventureGoogle's most advanced AI model appears to have achieved a notable gaming breakthrough - completing the 1996 Game Boy title Pokémon Blue. CEO Sundar Pichai celebrated the accomplishment on

Google's Gemini AI Conquers Pokémon Blue with Assistance

Google's AI Milestone: Conquering a Classic Pokémon AdventureGoogle's most advanced AI model appears to have achieved a notable gaming breakthrough - completing the 1996 Game Boy title Pokémon Blue. CEO Sundar Pichai celebrated the accomplishment on

ByteDance Unveils Seed-Thinking-v1.5 AI Model to Boost Reasoning Capabilities

The race for advanced reasoning AI began with OpenAI’s o1 model in September 2024, gaining momentum with DeepSeek’s R1 launch in January 2025.Major AI developers are now competing to create faster, mo

ByteDance Unveils Seed-Thinking-v1.5 AI Model to Boost Reasoning Capabilities

The race for advanced reasoning AI began with OpenAI’s o1 model in September 2024, gaining momentum with DeepSeek’s R1 launch in January 2025.Major AI developers are now competing to create faster, mo