Experts Highlight Serious Flaws in Crowdsourced AI Benchmarks

AI labs are increasingly turning to crowdsourced benchmarking platforms like Chatbot Arena to evaluate the capabilities of their latest models. Yet, some experts argue that this method raises significant ethical and academic concerns.

In recent years, major labs such as OpenAI, Google, and Meta have turned to platforms that recruit users to help evaluate their upcoming models. When a model scores well on one of these platforms, the lab behind it often promotes the result as evidence of meaningful progress. However, the approach is not without its critics.

The Critique of Crowdsourced Benchmarking

Emily Bender, a linguistics professor at the University of Washington and co-author of "The AI Con," has questioned the validity of such benchmarks, particularly Chatbot Arena. On Chatbot Arena, volunteers compare responses from two anonymous models and pick the one they prefer. Bender argues that a meaningful benchmark must measure something specific and demonstrate construct validity: there must be evidence that what is being measured actually reflects the concept it claims to capture. She contends that Chatbot Arena has not shown that users' preference for one output over another corresponds to any clearly defined criterion.
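
The voting mechanism described above can be illustrated with a minimal Python sketch of one way pairwise preference votes are aggregated into a leaderboard. It assumes a simple online Elo update; Chatbot Arena has published Elo-style and, later, Bradley-Terry ratings, but this is not its actual code, and the model names, starting rating of 1000, and K-factor of 32 are hypothetical. The sketch shows why Bender's objection matters: each vote records only which response won, not why, so the resulting rating can be no more meaningful than the preferences that feed it.

```python
from collections import defaultdict

# Hypothetical parameters: every model starts at the same rating,
# and K controls how far a single vote moves the ratings.
INITIAL_RATING = 1000.0
K = 32.0


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B under the Elo (logistic) model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_ratings(votes):
    """Turn a sequence of (model_a, model_b, winner) votes into ratings.

    `winner` is "a", "b", or "tie". Each vote captures only which response
    the voter preferred; the reason for that preference is never recorded.
    """
    ratings = defaultdict(lambda: INITIAL_RATING)
    outcome = {"a": 1.0, "b": 0.0, "tie": 0.5}
    for model_a, model_b, winner in votes:
        ra, rb = ratings[model_a], ratings[model_b]
        # Move both ratings by the gap between the observed and expected result;
        # the update is zero-sum, so whatever A gains, B loses.
        delta = K * (outcome[winner] - expected_score(ra, rb))
        ratings[model_a] = ra + delta
        ratings[model_b] = rb - delta
    return dict(ratings)


if __name__ == "__main__":
    # Hypothetical votes between three anonymous models.
    votes = [
        ("model-x", "model-y", "a"),
        ("model-y", "model-z", "a"),
        ("model-x", "model-z", "tie"),
        ("model-x", "model-y", "a"),
    ]
    for name, rating in sorted(elo_ratings(votes).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {rating:.1f}")
```

One design caveat: online Elo updates depend on the order in which votes arrive, which is one reason a batch fit such as Bradley-Terry is often preferred for leaderboards. Either way, the output ranks aggregate preference; it is not, by itself, a measure of accuracy, safety, or fitness for a particular domain.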

Asmelash Teka Hadgu, co-founder of AI firm Lesan and a fellow at the Distributed AI Research Institute, argues that AI labs are using these benchmarks to make exaggerated claims about their models. He pointed to the recent controversy over Meta's Llama 4 Maverick model: Meta fine-tuned a version of Maverick to score well on Chatbot Arena, then publicly released a different version that performed worse. Hadgu says benchmarks should instead be dynamic, distributed across multiple independent organizations, and tailored to specific use cases, such as education and healthcare, by professionals who actually use these models in their work.

The Call for Fair Compensation and Broader Evaluation Methods

Hadgu and Kristine Gloria, who previously led the Aspen Institute's Emergent and Intelligent Technologies Initiative, argue that evaluators should be paid for their work, warning that unpaid evaluation risks mirroring the often exploitative data labeling industry. Gloria sees value in crowdsourced benchmarking, likening it to citizen science initiatives, but stresses that benchmarks should not be the only metric for evaluation, especially because the industry moves so quickly that any single benchmark can soon become unreliable.

Matt Fredrikson, CEO of Gray Swan AI, which runs crowdsourced red teaming campaigns, acknowledges that such platforms appeal to volunteers who want to learn and practice new skills. However, he stresses that public benchmarks are no substitute for the more in-depth evaluations that paid, private assessments provide. Fredrikson says developers should also rely on internal benchmarks, algorithmic red teams, and contracted experts who can take a more open-ended approach or bring domain-specific expertise.

Industry Perspectives on Benchmarking

Alex Atallah, CEO of model marketplace OpenRouter, and Wei-Lin Chiang, an AI doctoral student at UC Berkeley and one of the founders of LMArena (which manages Chatbot Arena), agree that open testing and benchmarking alone are insufficient. Chiang emphasizes that LMArena's goal is to provide a trustworthy, open space for gauging community preferences about different AI models.

Addressing the Maverick controversy, Chiang says such incidents stem not from flaws in Chatbot Arena's design but from labs misinterpreting its policies. LMArena has since updated its policies with the aim of ensuring fair and reproducible evaluations. Chiang also stresses that the platform's community is not merely a pool of volunteers or testers but an engaged group that provides collective feedback on AI models.

The ongoing debate around the use of crowdsourced benchmarking platforms highlights the need for a more nuanced approach to AI model evaluation, one that combines public input with rigorous, professional assessments to ensure both accuracy and fairness.

Related article

AI Reimagines Michael Jackson in the Metaverse with Stunning Digital Transformations

Artificial intelligence is fundamentally reshaping our understanding of creativity, entertainment, and cultural legacy. This exploration into AI-generated interpretations of Michael Jackson reveals how cutting-edge technology can breathe new life int

AI Reimagines Michael Jackson in the Metaverse with Stunning Digital Transformations

Artificial intelligence is fundamentally reshaping our understanding of creativity, entertainment, and cultural legacy. This exploration into AI-generated interpretations of Michael Jackson reveals how cutting-edge technology can breathe new life int

Does Training Mitigate AI-Induced Cognitive Offloading Effects?

A recent investigative piece on Unite.ai titled 'ChatGPT Might Be Draining Your Brain: Cognitive Debt in the AI Era' shed light on concerning research from MIT. Journalist Alex McFarland detailed compelling evidence of how excessive AI dependency can

Does Training Mitigate AI-Induced Cognitive Offloading Effects?

A recent investigative piece on Unite.ai titled 'ChatGPT Might Be Draining Your Brain: Cognitive Debt in the AI Era' shed light on concerning research from MIT. Journalist Alex McFarland detailed compelling evidence of how excessive AI dependency can

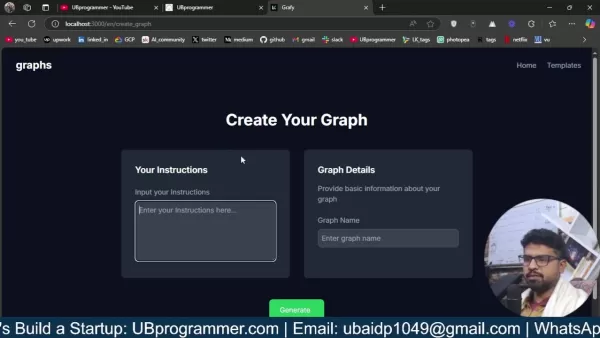

Easily Generate AI-Powered Graphs and Visualizations for Better Data Insights

Modern data analysis demands intuitive visualization of complex information. AI-powered graph generation solutions have emerged as indispensable assets, revolutionizing how professionals transform raw data into compelling visual stories. These intell

Comments (16)

AlbertScott

August 1, 2025 at 9:47:34 AM EDT

Crowdsourced AI benchmarks sound cool, but experts pointing out ethical issues makes me wonder if we're rushing too fast. 🤔 Are we sacrificing quality for hype?

JonathanAllen

April 27, 2025 at 3:34:07 AM EDT

I've been following the debate about crowdsourced AI benchmarks and, honestly, it's a mess. The experts are right to point out the flaws, but what's the alternative? It's like trying to fix a leaking boat with more holes. Still, it's an interesting read and certainly makes you think about the future of AI ethics. Give it a try if you like this kind of thing! 😅

AlbertWalker

April 27, 2025 at 1:24:31 AM EDT

Wow, crowdsourced AI benchmarks? Sounds cool, but with ethical flaws? I'm wondering whether this might hold back innovation. Big Tech needs to sort this out soon! 🚀

RogerRodriguez

April 26, 2025 at 11:52:29 PM EDT

I've been following the debate on crowdsourced AI benchmarks and honestly, it's a mess. Experts are right to point out the flaws, but what's the alternative? It's like trying to fix a leaky boat with more holes. Still, it's an interesting read and definitely makes you think about the future of AI ethics. Give it a go if you're into that kinda stuff! 😅

JonathanAllen

April 26, 2025 at 9:40:09 PM EDT

Interesting, but worrying! Crowdsourced benchmarks are innovative, but the ethical flaws give me pause. Giants like Google are going to have to be transparent. 🧐

BrianWalker

April 26, 2025 at 11:31:56 AM EDT

Wow, crowdsourced AI benchmarks sound cool but flawed? Kinda makes sense—random people judging AI might not be super reliable. 🤔 Curious how OpenAI and Google will fix this!
