AI World: Designing with Privacy in Mind

Artificial intelligence has the potential to transform everything from our daily routines to medical breakthroughs. But to realize that potential, we must put responsibility at the forefront of how we develop it.

That is why the conversation about generative AI and privacy matters so much. We are eager to contribute to it, drawing on our experience at the cutting edge of innovation and our extensive engagement with regulators and other experts.

In our new policy working paper, "Generative AI and Privacy," we advocate for AI products with built-in protections that prioritize user safety and privacy from the start. We also propose policy approaches that address privacy concerns while still allowing AI to flourish and benefit society.

Privacy-by-design in AI

AI holds the promise of great benefits for individuals and society, yet it can also amplify existing challenges and introduce new ones, as our research and that of others have shown.

Privacy is no exception. It is essential to build in protections that provide transparency and control and that mitigate risks such as the unintended disclosure of personal information.

This requires a robust framework, grounded in time-tested principles, that spans everything from development to deployment. Any organization developing AI tools should have a clear privacy strategy.

Our approach is shaped by long-standing data protection practices, our Privacy & Security Principles, Responsible AI practices, and our AI Principles. This means we put in place robust privacy safeguards and data minimization techniques, offer transparency about our data practices, and provide controls that allow users to make informed decisions and manage their information.

Focus on AI applications to effectively reduce risks

As we apply established privacy principles to generative AI, there are important questions to consider.

For instance, how do we practice data minimization when training models on vast amounts of data? What are the best ways to provide meaningful transparency into complex models in a way that addresses individuals' concerns? And how can we create age-appropriate experiences that benefit teens in an AI-driven world?

Our paper offers some initial thoughts on these questions, focusing on two key levels:

- Training and development

- User-facing applications

During training and development, personal data such as names or biographical details makes up a small but crucial part of the training data. Models use this data to learn how language captures abstract concepts about human relationships and the world around us.

These models are not "databases," and they are not designed to identify individuals. In fact, including personal data can help reduce bias (for example, by helping models better handle names from different cultures) and improve model accuracy and performance.

At the application level, the risk of privacy harms such as data leakage is greater, but so is the opportunity to implement more effective safeguards. Features such as output filters and auto-delete play a vital role here.

Prioritizing these safeguards at the application level is not only practical but, we believe, the most effective way forward.
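Output filters are named above as an application-level safeguard without further detail. As a rough illustration only, the sketch below shows the simplest form such a filter could take: a post-processing step that redacts email addresses and phone-number-like strings from a model's response before it reaches the user. The function `filter_output` and both patterns are hypothetical and are not taken from the paper or any Google product; a real filter would need far broader coverage than two regular expressions.

```python
import re

# Hypothetical patterns for illustration only: they catch common email and
# phone-number shapes, not names, addresses, or other personal data.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def filter_output(text: str) -> str:
    """Redact email addresses and phone-number-like strings from model output."""
    # Redact emails first so their characters are never re-examined as phones.
    text = EMAIL_RE.sub("[REDACTED EMAIL]", text)
    text = PHONE_RE.sub("[REDACTED PHONE]", text)
    return text


if __name__ == "__main__":
    response = "Contact Jane at jane.doe@example.com or +1 (555) 123-4567."
    print(filter_output(response))
```

Because a filter like this runs on generated text rather than inside the model, it can plausibly be updated or tightened without retraining, which is one reason safeguards at the application level can be practical.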

Achieving privacy through innovation

While much of today's AI privacy dialogue focuses on risk mitigation — and rightly so, given the importance of building trust in AI — generative AI also has the potential to enhance user privacy. We should seize these opportunities as well.

Generative AI is already helping organizations analyze privacy feedback from large user bases and spot compliance issues. It's paving the way for new cyber defense strategies. Privacy-enhancing technologies such as synthetic data and differential privacy are showing us how to provide greater societal benefits without compromising personal information. Public policies and industry standards should encourage — and not inadvertently hinder — these positive developments.
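Differential privacy is mentioned above only by name. One standard textbook instance is the Laplace mechanism: to release a count (say, how many pieces of user feedback mention a privacy concern) with epsilon-differential privacy, add noise drawn from a Laplace distribution with scale 1/epsilon, since one person's record can change a count by at most 1. The sketch below is a minimal illustration under the assumption that each person contributes at most one record; `private_count` and the feedback records are hypothetical and do not describe how Google or any particular product applies differential privacy.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale).

    The difference of two independent exponential draws with mean `scale`
    follows a Laplace distribution centered at 0 with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng=None) -> float:
    """Release a noisy count satisfying epsilon-differential privacy.

    Assumes each person contributes at most one record, so the counting
    query has sensitivity 1 and Laplace noise with scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng if rng is not None else random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical feedback records: only the noisy aggregate is released.
    feedback = [
        {"text": "Please delete my chat history", "mentions_privacy": True},
        {"text": "The answers are too long", "mentions_privacy": False},
        {"text": "Who can see my prompts?", "mentions_privacy": True},
    ]
    noisy = private_count(feedback, lambda r: r["mentions_privacy"], epsilon=0.5)
    print(f"Noisy count of privacy-related feedback: {noisy:.2f}")
```

Smaller values of epsilon add more noise and give stronger privacy. Naive floating-point sampling like this is known to leak information in edge cases, so production systems typically rely on audited differential privacy libraries rather than hand-rolled noise.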

The need to work together

Privacy laws are designed to be adaptable, proportionate, and technology-neutral — qualities that have made them robust and enduring over time.

The same principles apply in the era of AI, as we strive to balance strong privacy protections with other fundamental rights and social objectives.

The road ahead will require cooperation across the privacy community, and Google is dedicated to collaborating with others to ensure that generative AI benefits society responsibly.

You can read our Policy Working Paper on Generative AI and Privacy [here](link to paper).

Related article

"Exploring AI Safety & Ethics: Insights from Databricks and ElevenLabs Experts"

As generative AI becomes increasingly affordable and widespread, ethical considerations and security measures have taken center stage. ElevenLabs' AI Safety Lead Artemis Seaford and Databricks co-creator Ion Stoica participated in an insightful dia

"Exploring AI Safety & Ethics: Insights from Databricks and ElevenLabs Experts"

As generative AI becomes increasingly affordable and widespread, ethical considerations and security measures have taken center stage. ElevenLabs' AI Safety Lead Artemis Seaford and Databricks co-creator Ion Stoica participated in an insightful dia

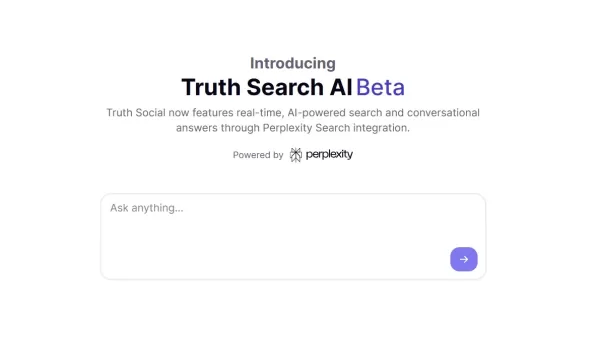

Truth Social’s New AI Search Engine Heavily Favors Fox News in Results

Trump's social media platform introduces an AI-powered search function with apparent conservative media slantExclusive AI Search Feature LaunchesTruth Social, the social media platform founded by Donald Trump, has rolled out its new artificial intell

Truth Social’s New AI Search Engine Heavily Favors Fox News in Results

Trump's social media platform introduces an AI-powered search function with apparent conservative media slantExclusive AI Search Feature LaunchesTruth Social, the social media platform founded by Donald Trump, has rolled out its new artificial intell

ChatGPT Adds Google Drive and Dropbox Integration for File Access

ChatGPT Enhances Productivity with New Enterprise Features

OpenAI has unveiled two powerful new capabilities transforming ChatGPT into a comprehensive business productivity tool: automated meeting documentation and seamless cloud storage integration

Comments


JosephScott

August 25, 2025 at 7:01:04 AM EDT

This article really opened my eyes to how crucial privacy is in AI development! It's wild to think about the balance between innovation and protecting our data. 😮 What’s next for ensuring AI doesn’t overstep boundaries?

WillGarcía

April 25, 2025 at 4:48:58 AM EDT

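AI World's privacy-conscious design is great. The focus on ethical development is refreshing, though the interface can be a little hard to use at times. Overall, I think it's a step toward responsible use of AI! 👍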

NicholasClark

April 21, 2025 at 2:17:58 AM EDT

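AI World really hits the mark when it comes to privacy. The focus on responsible AI development is refreshing. That said, the privacy features are sometimes a little too restrictive. Still, it's a step in the right direction! 👍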

WillieJones

April 18, 2025 at 3:40:31 PM EDT

AI World really nails it on privacy. It's refreshing to see a focus on responsible AI development. But sometimes the privacy features can be a bit too restrictive. Still, it's a step in the right direction! 👍

AlbertThomas

April 18, 2025 at 4:31:45 AM EDT

AI World really gets to the heart of privacy. The focus on responsible AI development is refreshing. But at times the privacy features can be too restrictive. Still, it's a step in the right direction! 👍

LawrenceScott

April 17, 2025 at 10:01:15 PM EDT

AI World really hits the nail on the head with privacy. It's refreshing to see a focus on responsible AI development. But sometimes, the privacy features can be a bit too restrictive. Still, it's a step in the right direction! 👍
