Understanding Long Context Windows: Key Insights

Yesterday, we announced our latest model, Gemini 1.5. Alongside significant improvements in speed and efficiency, one of its most important innovations is a long context window. The context window measures how many tokens (the smallest building blocks, such as part of a word, an image, or a video) the model can process at once. To explain this advance, we asked the Google DeepMind project team what long context windows are and how they could change the way developers work.

Context windows matter because they determine how much information an AI model can hold and recall during a session. Imagine forgetting someone's name just minutes after hearing it in a conversation, or rushing to write down a phone number before it slips your mind. AI models face a similar problem, often "forgetting" details after a few turns of a conversation. A long context window addresses this by letting the model keep more information in its "memory."
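To make the "forgetting" analogy concrete, here is a minimal Python sketch of one common way chat applications cope with a fixed window: keep only the most recent turns that fit a token budget and drop the rest. It is illustrative only and is not how Gemini manages context. The helpers `count_tokens` and `fit_history` are hypothetical, and counting one token per whitespace-separated word is a simplifying assumption; real models use subword tokenizers.

```python
from collections import deque


def count_tokens(text: str) -> int:
    """Hypothetical stand-in tokenizer: one token per whitespace-separated word.

    Real models use subword tokenizers, so actual counts will differ.
    """
    return len(text.split())


def fit_history(turns: list[str], budget: int) -> list[str]:
    """Keep the most recent turns whose combined token count fits in `budget`.

    Older turns that do not fit are dropped, which is how details from early
    in a conversation get "forgotten" when the window is small.
    """
    kept: deque = deque()
    used = 0
    # Walk backwards from the newest turn so recent context is preserved first.
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > budget:
            break  # stop at the first turn that no longer fits
        kept.appendleft(turn)
        used += cost
    return list(kept)


conversation = [
    "User: My name is Ada.",
    "Assistant: Nice to meet you, Ada.",
    "User: Can you recommend a book on compilers?",
    "Assistant: Try a classic textbook on compiler design.",
    "User: What is my name?",
]

small = fit_history(conversation, budget=20)
large = fit_history(conversation, budget=1000)
print(any("Ada" in turn for turn in small))  # False: the name fell out of the window
print(any("Ada" in turn for turn in large))  # True: the whole conversation fits
```

With a 20-token budget only the last two turns survive, so a model given that history could not answer the final question; a larger window keeps the whole conversation in view.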

Previous Gemini models could process up to 32,000 tokens at once. 1.5 Pro, now released for early testing, raises that limit to 1 million tokens, the longest context window of any large-scale foundation model to date. Our research has gone further still, successfully testing up to 10 million tokens. The longer the context window, the more text, images, audio, code, or video the model can take in within a single prompt.
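Because the limit is counted in tokens, a quick way to reason about these numbers is to estimate a document's token count and compare it with each window size mentioned above. The sketch below assumes roughly 4 characters of English text per token, a common rule of thumb rather than an exact figure; `estimate_tokens` and `windows_that_fit` are hypothetical helpers, and a real budget should be measured with the model's own tokenizer.

```python
import math

# Context-window sizes (in tokens) mentioned in the article.
CONTEXT_WINDOWS = {
    "previous Gemini models": 32_000,
    "1.5 Pro standard": 128_000,
    "1.5 Pro private preview": 1_000_000,
    "internal research": 10_000_000,
}

# Assumption: about 4 characters of English text per token. This is a rough
# rule of thumb; real counts depend on the tokenizer and the content.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Estimate a token count from the character count, rounding up."""
    return math.ceil(len(text) / chars_per_token)


def windows_that_fit(text: str) -> list[str]:
    """Names of the context windows that can hold the estimated token count."""
    needed = estimate_tokens(text)
    return [name for name, size in CONTEXT_WINDOWS.items() if needed <= size]


# A synthetic 600,000-character document: about 150,000 estimated tokens,
# too long for a 128K window but well within 1 million.
document = "x" * 600_000
print(estimate_tokens(document))   # 150000
print(windows_that_fit(document))  # ['1.5 Pro private preview', 'internal research']
```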

Nikolay Savinov, a Google DeepMind Research Scientist and one of the leads on the long context project, shared, "Our initial goal was to reach 128,000 tokens, but I thought aiming higher would be beneficial, so I proposed 1 million tokens. And now, our research has exceeded that by 10 times."

Achieving this leap required a series of deep learning innovations. Pranav Shyam's early explorations provided crucial insights that guided our research. Denis Teplyashin, a Google DeepMind Engineer, explained, "Each breakthrough led to another, opening up new possibilities. When these innovations combined, we were amazed at the results, scaling from 128,000 tokens to 512,000, then 1 million, and recently, 10 million tokens in our internal research."

The expanded capacity of 1.5 Pro opens up new applications. Instead of being limited to summarizing documents dozens of pages long, it can summarize documents thousands of pages long. Where the previous model could analyze thousands of lines of code, 1.5 Pro can analyze tens of thousands of lines at once.

Machel Reid, another Google DeepMind Research Scientist, shared some fascinating test results: "In one test, we fed the entire codebase into the model, and it generated comprehensive documentation for it, which was incredible. In another, it accurately answered questions about the 1924 film Sherlock Jr. after 'watching' the entire 45-minute movie."
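Feeding a whole codebase to a long-context model mostly comes down to packing many files into one prompt while keeping track of which file each snippet came from. The following Python sketch shows one way to do that; it is not the setup used in the test Machel describes. The helper names, the `=== FILE: ... ===` delimiter, the prompt wording, and the default list of file suffixes are all illustrative assumptions.

```python
import tempfile
from pathlib import Path

# Illustrative assumption: which file types count as "source" for the prompt.
DEFAULT_SUFFIXES = (".py", ".md", ".toml")


def pack_repository(root: Path, suffixes: tuple = DEFAULT_SUFFIXES) -> str:
    """Concatenate matching files under `root` into one prompt-ready string.

    Each file is preceded by a header with its relative path, so the model
    can tell files apart and refer to them by name.
    """
    root = Path(root)
    files = [p for p in root.rglob("*") if p.is_file() and p.suffix in suffixes]
    parts = []
    # Sort by POSIX-style relative path so the output order is deterministic.
    for path in sorted(files, key=lambda p: p.relative_to(root).as_posix()):
        rel = path.relative_to(root).as_posix()
        body = path.read_text(encoding="utf-8", errors="replace")
        parts.append(f"=== FILE: {rel} ===\n{body}")
    return "\n\n".join(parts)


def build_docs_prompt(root: Path) -> str:
    """Prefix the packed repository with a (hypothetical) documentation request."""
    return (
        "Below is the complete source code of a project.\n"
        "Write documentation for its modules and public functions.\n\n"
        + pack_repository(root)
    )


if __name__ == "__main__":
    # Build a tiny throwaway repository to show the packed output.
    with tempfile.TemporaryDirectory() as tmp:
        repo = Path(tmp)
        (repo / "pkg").mkdir()
        (repo / "pkg" / "core.py").write_text("def add(a, b):\n    return a + b\n", encoding="utf-8")
        (repo / "README.md").write_text("# Demo project\n", encoding="utf-8")
        (repo / "logo.png").write_bytes(b"\x89PNG")  # skipped: suffix not listed
        print(build_docs_prompt(repo))
```

Marking file boundaries with relative paths lets generated documentation point at specific files, and the packed string's size can be checked against the available context window before it is sent.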

1.5 Pro also excels at reasoning across data within a prompt. Machel highlighted an example involving the rare language Kalamang, spoken by fewer than 200 people worldwide. "The model can't translate into Kalamang on its own, but with the long context window, we could include the entire grammar manual and example sentences. The model then learned to translate from English to Kalamang at a level comparable to someone learning from the same material."
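The Kalamang result is an example of in-context learning: the reference material travels inside the prompt instead of being trained into the model's weights. A minimal sketch of assembling such a prompt, assuming a plain-text grammar and a list of parallel sentences, might look like this. The `ExamplePair` type, the `build_translation_prompt` function, and the prompt wording are hypothetical, and the demo uses placeholder strings rather than real Kalamang data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExamplePair:
    """One parallel sentence pair: English source and its translation."""
    english: str
    target: str


def build_translation_prompt(
    grammar_reference: str,
    examples: list,
    sentence: str,
    language: str = "Kalamang",
) -> str:
    """Assemble one long prompt for in-context translation.

    The full grammar text and every example pair are placed in the prompt,
    followed by the sentence to translate. No training happens here: the model
    would have to learn the language only from what is in its context window.
    """
    lines = [
        f"Translate English into {language}.",
        f"Reference grammar of {language}:",
        grammar_reference,
        "",
        "Example translations:",
    ]
    for ex in examples:
        lines.append(f"English: {ex.english}")
        lines.append(f"{language}: {ex.target}")
    # End with the new sentence and an open slot for the model's answer.
    lines += ["", f"English: {sentence}", f"{language}:"]
    return "\n".join(lines)


# Placeholder strings only; no real Kalamang data is shown here.
prompt = build_translation_prompt(
    grammar_reference="<full text of the grammar manual>",
    examples=[ExamplePair("<English sentence>", "<Kalamang translation>")],
    sentence="<English sentence to translate>",
)
print(prompt)
```

A complete grammar manual plus example sentences is long, which is why this approach depends on a context window large enough to hold all of the material in a single prompt.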

Gemini 1.5 Pro comes with a standard 128K-token context window, but a select group of developers and enterprise customers can try a context window of up to 1 million tokens through AI Studio and Vertex AI in private preview. Processing such a large context window is computationally intensive, and we're actively working on optimizations to reduce latency as we scale it out.

Looking ahead, the team is focused on making the model faster and more efficient, with safety as a priority. They're also exploring ways to further expand the long context window, enhance underlying architectures, and leverage new hardware improvements. Nikolay noted, "10 million tokens at once is nearing the thermal limit of our Tensor Processing Units. We're not sure where the limit lies yet, and the model might be capable of even more as hardware continues to evolve."

The team is eager to see the innovative applications that developers and the broader community will create with these new capabilities. Machel reflected, "When I first saw we had a million tokens in context, I wondered, 'What do you even use this for?' But now, I believe people's imaginations will expand, leading to more creative uses of these new capabilities."

[ttpp][yyxx]

Related article

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

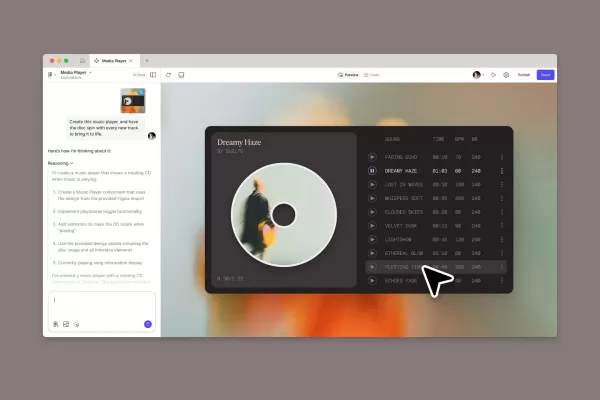

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (28)

KeithSmith
August 17, 2025 at 3:00:59 AM EDT

Super cool to see Gemini 1.5's long context window in action! 😎 Makes me wonder how it'll handle massive datasets compared to older models.

RobertSanchez
July 30, 2025 at 9:41:19 PM EDT

Wow, the long context window in Gemini 1.5 sounds like a game-changer! I'm curious how it'll handle massive datasets in real-world apps. Excited to see where this takes AI! 🚀

DavidGonzález
July 27, 2025 at 9:19:30 PM EDT

The long context window in Gemini 1.5 sounds like a game-changer! I'm curious how it'll handle massive datasets in real-world apps. Any cool examples out there yet? 🤔

RobertRoberts
April 16, 2025 at 7:56:25 PM EDT

Gemini 1.5's long context window is really a big step forward! It's amazing that it can handle so much more than the older models. I just wish it were a bit faster. Still, this is a big step forward! 💪

MatthewGonzalez
April 16, 2025 at 11:41:59 AM EDT

Gemini 1.5's long context window is revolutionary, no doubt! But sometimes it feels like it's trying to do too much at once, which can slow things down. Still, for processing large amounts of data, it's unbeatable. Worth checking out! 🚀

NicholasRoberts
April 14, 2025 at 6:59:46 PM EDT

Gemini 1.5's long context window is a game-changer, no doubt! But sometimes it feels like it's trying to do too much at once, which can slow things down. Still, for processing huge chunks of data, it's unbeatable. Worth checking out! 🚀
