5 Ways Google's Trillium Could Revolutionize AI and Cloud Computing - Plus 2 Challenges

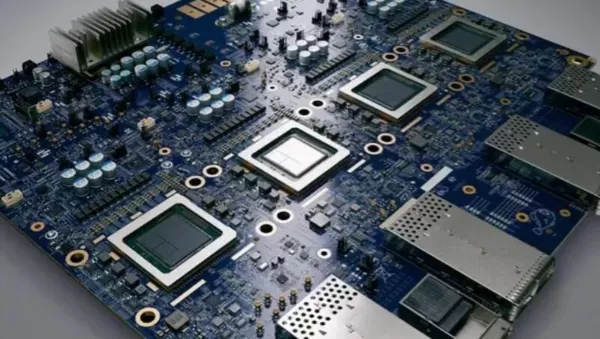

Trillium, Google's sixth-generation Tensor Processing Unit (TPU), is the company's latest bid to change the economics and performance of large-scale AI infrastructure. It arrives alongside Gemini 2.0, an AI model designed for the "agentic era," and Deep Research, a Gemini feature that searches the web and compiles findings on the user's behalf. Together they represent Google's most mature and ambitious attempt yet to transform its AI and cloud offerings.

Five Reasons Why Trillium Could Be a Game-Changer for Google's AI and Cloud Strategy

1. Superior Cost and Performance Efficiency

Trillium's headline numbers concern cost and speed. Google says it delivers up to 2.5 times better training performance per dollar and three times higher inference throughput than the previous TPU generation. For businesses, that could lower the cost of training large language models (LLMs) like Gemini 2.0 and of running workloads such as image generation and recommendation systems.
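To make the per-dollar claim concrete, here is a minimal sketch of the arithmetic. It assumes the "up to 2.5 times" figure applies in full to a given job, which is a best case. The function name and the $1,000,000 baseline budget are hypothetical and chosen only for illustration.

```python
def relative_training_cost(perf_per_dollar_gain: float) -> float:
    """Cost of the same training job relative to the baseline.

    If each dollar buys `perf_per_dollar_gain` times more training work,
    the same amount of work costs 1 / gain of the baseline.
    """
    if perf_per_dollar_gain <= 0:
        raise ValueError("gain must be positive")
    return 1.0 / perf_per_dollar_gain


# Hypothetical baseline budget, not a figure from Google.
baseline_budget_usd = 1_000_000
best_case = relative_training_cost(2.5)  # Google's "up to" figure
new_budget_usd = baseline_budget_usd * best_case

print(f"Relative cost: {best_case:.0%}")
print(f"Same job at best case: ${new_budget_usd:,.0f}")
```

Under that best-case assumption the same job would cost 40% of the baseline, a 60% saving. Actual savings depend on the workload and on how close it comes to the quoted figure.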

Early adopters such as AI21 Labs say they have already seen the benefits. A TPU user since v4, the company reports significant improvements in cost-efficiency and scalability when training its large language models on Trillium.

"At AI21, we're always pushing to improve the performance and efficiency of our Mamba and Jamba language models. Having used TPUs since v4, we're blown away by what Google Cloud's Trillium can do. The leaps in scale, speed, and cost-efficiency are huge. We're confident that Trillium will speed up the development of our next generation of sophisticated language models, allowing us to offer even more powerful and accessible AI solutions to our customers." - Barak Lenz, CTO, AI21 Labs

These early reports lend support to Google's claims about Trillium's performance and cost, and they make it an attractive choice for businesses already using Google's infrastructure.

2. Exceptional Scalability for Large-Scale AI Workloads

Trillium is designed to scale across very large AI workloads. Google claims 99% scaling efficiency across 12 pods (3,072 chips) and 94% across 24 pods (6,144 chips) for large models such as Gemini, Gemma 2, and Llama 3.2. Near-linear scaling of this kind means that adding chips translates almost directly into added training throughput, so Trillium can take on extensive training tasks and large-scale deployments.
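The scaling-efficiency figures can be read as a ratio of measured throughput to perfectly linear throughput. The sketch below uses that common definition, which is an assumption here because the article does not say how Google measured it. The throughput numbers are hypothetical; only the pod counts, chip count, and percentages come from the text.

```python
# "12 pods (3,072 chips)" implies 256 chips per pod.
CHIPS_PER_POD = 3072 // 12


def scaling_efficiency(base_chips, base_throughput, chips, throughput):
    """Measured throughput divided by ideal (perfectly linear) throughput."""
    ideal = base_throughput * chips / base_chips
    return throughput / ideal


def effective_chips(pods, efficiency):
    """Chips' worth of useful work delivered at a given scaling efficiency."""
    return pods * CHIPS_PER_POD * efficiency


# Hypothetical measurements: one pod sustains 100 units of work per second,
# and 12 pods sustain 1,188 instead of the ideal 1,200.
print(scaling_efficiency(256, 100.0, 3072, 1188.0))

# Figures quoted in the article.
print(effective_chips(12, 0.99))
print(effective_chips(24, 0.94))
```

Read this way, 99% efficiency on 3,072 chips delivers roughly 3,041 chips' worth of work, and 94% on 24 pods (6,144 chips at 256 per pod) delivers roughly 5,775.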

Furthermore, Trillium's integration with Google Cloud's AI Hypercomputer lets more than 100,000 chips be connected in a single Jupiter network fabric with 13 petabits per second of bandwidth. Scale of this kind matters for businesses whose computational demands are growing and that need AI infrastructure able to keep up.
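For a rough sense of scale, the quoted fabric bandwidth can be divided evenly across the quoted chip count. This is a back-of-the-envelope sketch only: the even split is an assumption, the helper name is hypothetical, and real per-chip bandwidth depends on network topology and traffic patterns.

```python
PETA = 10**15
GIGA = 10**9

fabric_bandwidth_bits_per_s = 13 * PETA  # "13 Petabits/sec"
chip_count = 100_000                     # "over 100,000 chips"


def per_chip_share_gbps(total_bits_per_s: int, chips: int) -> float:
    """Even share of the fabric bandwidth per chip, in gigabits per second."""
    return total_bits_per_s / chips / GIGA


share = per_chip_share_gbps(fabric_bandwidth_bits_per_s, chip_count)
print(f"{share:.0f} Gbit/s per chip")
print(f"{share / 8:.2f} GB/s per chip")
```

With exactly 100,000 chips the even share is 130 Gbit/s, or 16.25 GB/s, per chip. Because the article says "over" 100,000 chips, that is an upper bound on the even share.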

By holding scaling efficiency high across thousands of chips, Trillium positions itself as a strong contender for large-scale AI training. Businesses can expand their AI operations without a matching loss in performance or a steep rise in cost per unit of work, which makes Trillium a compelling choice for those with big AI ambitions.

3. Advanced Hardware Innovations

Trillium includes several hardware changes that improve its performance and cost-effectiveness. It doubles High Bandwidth Memory (HBM) capacity and bandwidth compared with the previous generation, which raises data transfer rates and reduces memory bottlenecks. Its TPU system architecture also includes a third-generation SparseCore, a specialized accelerator for the very large embeddings common in ranking and recommendation workloads.

Moreover, Trillium delivers a 4.7x increase in peak compute performance per chip over the previous generation. Together, these changes let Trillium handle demanding AI tasks and increasingly complex, resource-intensive models. Google says the hardware improvements also make the chip more energy efficient, which matters for the sustainability of large-scale AI operations.

4. Seamless Integration with Google Cloud's AI Ecosystem

Trillium's deep integration with Google Cloud's AI Hypercomputer is a major advantage. Because the chip is offered as part of Google's wider cloud infrastructure, AI models are easier to deploy and manage on it, and applications hosted on Google Cloud benefit in performance and reliability. For organizations already invested in Google's ecosystem, Trillium offers a streamlined and highly integrated way to scale their AI initiatives.

5. Future-Proofing AI Infrastructure with Gemini 2.0 and Deep Research

Trillium isn't just a powerful TPU; it's part of a broader strategy that includes Gemini 2.0, designed for the "agentic era," and Deep Research, a Gemini feature that searches the web and compiles findings on the user's behalf. This ecosystem approach is meant to keep Trillium relevant and able to support the next wave of AI innovations. By aligning Trillium with these models and tools, Google is trying to future-proof its AI infrastructure and keep it adaptable to emerging trends in the AI landscape.

This strategic alignment allows Google to offer a comprehensive AI solution that goes beyond mere processing power. By pairing Trillium with its newest AI models and tools, Google aims to help businesses get full value from their AI investments in a rapidly evolving technological landscape.

Competitive Landscape: Navigating the AI Hardware Market

Despite Trillium's advantages, Google faces fierce competition from industry giants like NVIDIA and Amazon. NVIDIA's GPUs, particularly the H100 and H200 models, are known for their high performance and support for leading generative AI frameworks through the mature CUDA ecosystem. NVIDIA's upcoming Blackwell B100 and B200 GPUs are expected to improve low-precision operations crucial for cost-effective scaling, maintaining NVIDIA's strong position in the AI hardware market.

Trillium's tight integration with Google Cloud enhances efficiency but may pose challenges in portability and flexibility. Unlike AWS, which offers a hybrid approach allowing businesses to use both NVIDIA GPUs and Trainium chips, or NVIDIA's highly portable GPUs that run smoothly across various cloud and on-premises environments, Trillium's reliance on a single cloud might limit its appeal for organizations seeking multi-cloud or hybrid solutions.

Amazon's second-generation Trainium, now generally available, offers what AWS describes as a 30-40% price-performance improvement over NVIDIA GPUs for training large language models (LLMs). The company also recently introduced its third-generation chip alongside "Project Rainier," a massive new training cluster. AWS's hybrid strategy spreads risk while optimizing performance, offering more flexibility than Google's Trillium for diverse deployment needs.

Trillium's success will hinge on proving its performance and cost advantages can outweigh the ecosystem maturity and portability offered by NVIDIA and Amazon. Google must capitalize on its superior cost and performance metrics and consider ways to enhance Trillium's ecosystem compatibility beyond Google Cloud to attract a broader range of businesses seeking versatile AI solutions.

Can Trillium Prove Its Value?

Google's Trillium marks a bold and ambitious move to advance AI and cloud computing infrastructure. With its superior cost and performance efficiency, exceptional scalability, advanced hardware innovations, seamless integration with Google Cloud, and alignment with future AI developments, Trillium has the potential to draw in businesses seeking optimized AI solutions. The early successes with adopters like AI21 Labs underscore Trillium's capabilities and its ability to meet Google's promises.

However, the competitive landscape dominated by NVIDIA and Amazon presents significant hurdles. To cement its position, Google must address ecosystem flexibility, provide independently validated performance results, and perhaps explore multi-cloud compatibility. If it succeeds, Trillium could significantly bolster Google's standing in the AI and cloud computing markets, offering a robust alternative for large-scale AI operations.

Comments (8)

HenryTurner

September 25, 2025 at 12:30:46 AM EDT

This Google project looks promising, but I wonder whether it will create even more dependence on big tech. Will smaller companies be able to compete, or will they just end up hostage to these super platforms? 🤨

ScarlettWhite

August 23, 2025 at 1:01:15 AM EDT

Trillium sounds like a game-changer for AI! I'm curious how its efficiency will impact smaller startups trying to compete in the cloud space. Could it level the playing field or just make Google untouchable? 🤔

AlbertMartínez

August 10, 2025 at 3:00:59 AM EDT

Trillium sounds like a beast for AI! 4.7x compute boost is wild—imagine training models in hours instead of days. But can Google keep up with NVIDIA’s grip on the market? 🤔 Curious to see how this plays out!

LunaYoung

April 25, 2025 at 12:10:31 AM EDT

Google's Trillium looks like a revolution for AI and cloud computing! The sixth-generation TPU is impressive, but I'm a little worried about the two challenges mentioned. Still, I can't wait to see how it performs in real-world scenarios. 🚀

GeorgeMiller

April 24, 2025 at 12:14:35 PM EDT

Google's Trillium looks like a game-changer for AI and cloud computing. The sixth-generation TPU is impressive, but I'm a little worried about the two challenges mentioned! Still, I can't wait to see how it works in real-world scenarios. 🚀

CarlHill

April 24, 2025 at 11:38:14 AM EDT

I get the feeling that Google's Trillium could change AI and cloud computing! The sixth-generation TPU is great, but the two challenges concern me. Still, I can't wait to see how it performs in real-world scenarios. 🚀
