Meta Shares Revenue with Hosts of Llama AI Models, Filing Discloses

While Meta CEO Mark Zuckerberg said in July 2023 that "selling access" to Llama AI models isn't Meta's business model, newly unredacted court filings reveal that Meta has revenue-sharing agreements with cloud providers that host these open-source models.

Monetization Through Hosting Partnerships

Unredacted documents in the Kadrey v. Meta lawsuit show that Meta receives a share of revenue from companies that offer Llama models to their users, though the filings don't name specific partners. Meta's blog lists cloud providers such as AWS, Google Cloud, and Azure, along with hardware partners including Nvidia and Dell, as official Llama ecosystem partners.

The Hosting Advantage

Developers can download and customize Llama models for free, but hosted offerings simplify deployment by adding tooling and infrastructure support. That convenience is something providers can sell to enterprise customers, which creates revenue that Meta can share in.

Meta's Evolving AI Revenue Strategy

During Meta's Q2 2024 earnings call, Zuckerberg outlined the company's approach to monetizing AI:

- Revenue shares from cloud providers reselling Llama access

- Potential monetization through business messaging integrations

- Advertising opportunities within AI interactions

Zuckerberg said that when major cloud platforms resell these services commercially, Meta believes it should receive a portion of the revenue, and that these are the partnerships the company is actively pursuing.

Strategic Benefits Beyond Direct Revenue

Meta argues that making Llama openly available strengthens its AI capabilities through:

- Community-driven model improvements

- Industry standardization benefits

- Cross-platform interoperability

Legal Context: The Copyright Lawsuit

The Kadrey v. Meta case alleges:

- Use of pirated e-book content for training

- Possible facilitation of content sharing through torrent networks

- Commercial interest demonstrated by revenue-sharing arrangements

"Our open approach to Llama ultimately improves our products more effectively than isolated development," Zuckerberg said during Meta's Q3 2024 earnings call.

Meta's Accelerating AI Investments

The company plans major capital expenditures:

- $60-80 billion budgeted for 2025

- Primary allocation to data center infrastructure

- Expansion of AI research teams

Reports suggest Meta may offer a subscription for premium Meta AI features to help offset these costs, adding to its existing revenue from hosting partnerships.

Editorial Note

This story was updated with additional context from Meta's earnings call transcripts about the company's intention to share revenue with large cloud providers.

Related article

Microsoft Study Finds More AI Tokens Increase Reasoning Errors

Emerging Insights Into LLM Reasoning EfficiencyNew research from Microsoft demonstrates that advanced reasoning techniques in large language models don't produce uniform improvements across different AI systems. Their groundbreaking study analyzed ho

Microsoft Study Finds More AI Tokens Increase Reasoning Errors

Emerging Insights Into LLM Reasoning EfficiencyNew research from Microsoft demonstrates that advanced reasoning techniques in large language models don't produce uniform improvements across different AI systems. Their groundbreaking study analyzed ho

Meta's Zuckerberg Says Not All AI 'Superintelligence' Models Will Be Open-Sourced

Meta's Strategic Shift Toward Personal SuperintelligenceMeta CEO Mark Zuckerberg outlined an ambitious vision this week for "personal superintelligence" – AI systems that empower individuals to accomplish personal objectives - signaling potential cha

Meta's Zuckerberg Says Not All AI 'Superintelligence' Models Will Be Open-Sourced

Meta's Strategic Shift Toward Personal SuperintelligenceMeta CEO Mark Zuckerberg outlined an ambitious vision this week for "personal superintelligence" – AI systems that empower individuals to accomplish personal objectives - signaling potential cha

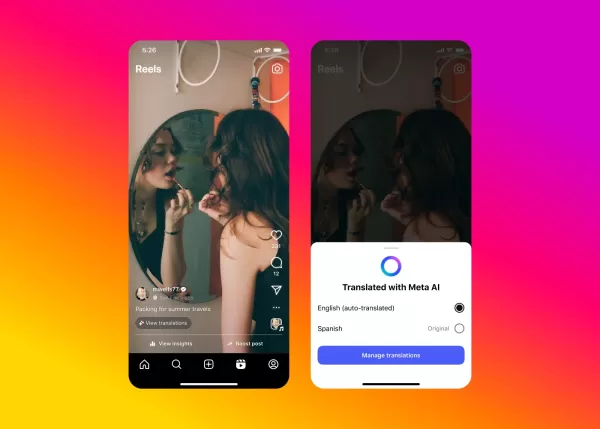

Meta's AI Tackles Video Dubbing for Instagram Content

Meta is expanding access to its groundbreaking AI-powered dubbing technology across Facebook and Instagram, introducing seamless Video translation capabilities that maintain your authentic voice and natural lip movements.Revolutionizing Cross-Cultura

Comments (0)

0/200

Meta's AI Tackles Video Dubbing for Instagram Content

Meta is expanding access to its groundbreaking AI-powered dubbing technology across Facebook and Instagram, introducing seamless Video translation capabilities that maintain your authentic voice and natural lip movements.Revolutionizing Cross-Cultura

Comments (0)

0/200

While Meta CEO Mark Zuckerberg emphasized in July 2023 that "selling access" isn't their business model for Llama AI models, newly disclosed court filings reveal Meta engages in revenue-sharing partnerships with cloud providers hosting these open-source models.

Monetization Through Hosting Partnerships

The unredacted Kadrey v. Meta lawsuit documents show Meta receives revenue shares from companies offering Llama models to their users, though specific partners aren't named. Meta's blog references cloud providers like AWS, Google Cloud, Azure, and hardware partners including Nvidia and Dell as official Llama ecosystem collaborators.

The Hosting Advantage

While developers can freely download and customize Llama models, hosted solutions streamline deployment with additional tools and infrastructure support. This practical convenience creates monetization opportunities through enterprise service agreements.

Meta's Evolving AI Revenue Strategy

During Meta's Q2 2024 earnings call, Zuckerberg outlined their approach:

- Revenue shares from cloud providers reselling Llama access

- Potential monetization through business messaging integrations

- Advertising opportunities within AI interactions

The CEO noted: "When major cloud platforms commercially distribute these services, we believe Meta deserves compensation—these are the partnerships we're actively pursuing."

Strategic Benefits Beyond Direct Revenue

Meta positions Llama's open availability as enhancing overall AI capabilities through:

- Community-driven model improvements

- Industry standardization benefits

- Cross-platform interoperability

Legal Context: The Copyright Lawsuit

The Kadrey v. Meta case alleges:

- Use of pirated e-book content for training

- Possible facilitation of content sharing through torrent networks

- Commercial interest demonstrated by revenue-sharing arrangements

"Our open approach to Llama ultimately improves our products more effectively than isolated development," Zuckerberg stated during Q3 2024 earnings.

Meta's Accelerating AI Investments

The company plans major capital expenditures:

- $60-80 billion budgeted for 2025

- Primary allocation to data center infrastructure

- Expansion of AI research teams

Reports suggest Meta may introduce premium Meta AI features via subscription to offset costs, complementing existing hosting revenue streams.

Editorial Note

This story was updated with additional context from Meta's earnings transcripts regarding their revenue-sharing intentions with large cloud providers.

Microsoft Study Finds More AI Tokens Increase Reasoning Errors

Emerging Insights Into LLM Reasoning EfficiencyNew research from Microsoft demonstrates that advanced reasoning techniques in large language models don't produce uniform improvements across different AI systems. Their groundbreaking study analyzed ho

Microsoft Study Finds More AI Tokens Increase Reasoning Errors

Emerging Insights Into LLM Reasoning EfficiencyNew research from Microsoft demonstrates that advanced reasoning techniques in large language models don't produce uniform improvements across different AI systems. Their groundbreaking study analyzed ho

Meta's Zuckerberg Says Not All AI 'Superintelligence' Models Will Be Open-Sourced

Meta's Strategic Shift Toward Personal SuperintelligenceMeta CEO Mark Zuckerberg outlined an ambitious vision this week for "personal superintelligence" – AI systems that empower individuals to accomplish personal objectives - signaling potential cha

Meta's Zuckerberg Says Not All AI 'Superintelligence' Models Will Be Open-Sourced

Meta's Strategic Shift Toward Personal SuperintelligenceMeta CEO Mark Zuckerberg outlined an ambitious vision this week for "personal superintelligence" – AI systems that empower individuals to accomplish personal objectives - signaling potential cha

Meta's AI Tackles Video Dubbing for Instagram Content

Meta is expanding access to its groundbreaking AI-powered dubbing technology across Facebook and Instagram, introducing seamless Video translation capabilities that maintain your authentic voice and natural lip movements.Revolutionizing Cross-Cultura

Meta's AI Tackles Video Dubbing for Instagram Content

Meta is expanding access to its groundbreaking AI-powered dubbing technology across Facebook and Instagram, introducing seamless Video translation capabilities that maintain your authentic voice and natural lip movements.Revolutionizing Cross-Cultura