I put GPT-4o through my coding tests and it aced them - except for one weird result

If you've been keeping up with the tech world, you're likely aware that OpenAI has just dropped its latest large language model, GPT-4o, where the "o" signifies "omni." This new model promises versatility across text, graphics, and voice, and I couldn't wait to put it through its paces with my standard set of coding tests. These tests have been run against a wide array of AI models, yielding some pretty fascinating results. Stick with me until the end because there's a twist you won't want to miss.

If you're interested in conducting your own experiments, check out this guide: How I test an AI chatbot's coding ability - and you can too. It outlines all the tests I use, along with detailed explanations of how they work and what to look for in the outcomes.

Now, let's dive into the results of each test and see how GPT-4o stacks up against previous contenders like Microsoft Copilot, Meta AI, Meta Code Llama, Google Gemini Advanced, and the earlier versions of ChatGPT.

1. Writing a WordPress Plugin

GPT-4o generated a solid user interface for the plugin.

Interestingly, GPT-4o took the liberty of including a JavaScript file, which dynamically updates the line count in both fields. While the prompt didn't explicitly rule out JavaScript, this creative approach was unexpected and effective. The JavaScript also enhances the Randomize button's functionality, allowing for multiple result sets without a full page refresh.

The lines were arranged correctly, and duplicates were appropriately separated according to the specifications. It's a solid piece of code, with just one minor quibble: the Randomize button wasn't placed on its own line, though I hadn't specified that in the prompt, so no points off for that.
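The hardest part of this plugin is shuffling a list while keeping identical lines apart. The Python below is a minimal illustration of that step. It is not the PHP or JavaScript that GPT-4o produced, and it assumes duplicates are exact string matches. It uses a greedy approach: at each step it places the line with the most remaining copies, skips whichever line was placed just before, and breaks ties at random. The function name "randomize_separating_duplicates" is hypothetical.

```python
import heapq
import random
from collections import Counter


def randomize_separating_duplicates(lines, rng=None):
    """Shuffle lines so that no two identical lines end up next to each other.

    Returns None when that is impossible, i.e. when one line accounts for
    more than half of the input (rounded up).
    """
    rng = rng if rng is not None else random.Random()
    counts = Counter(lines)
    # Max-heap on remaining copies (counts are negated); the random second
    # key breaks ties between equally common lines.
    heap = [(-n, rng.random(), line) for line, n in counts.items()]
    heapq.heapify(heap)

    result = []
    held = None  # the line just placed, kept out of the heap for one step
    while heap:
        neg_n, _, line = heapq.heappop(heap)
        result.append(line)
        if held is not None:
            heapq.heappush(heap, held)  # the previous line is eligible again
            held = None
        if neg_n + 1 < 0:  # copies of this line are still left to place
            held = (neg_n + 1, rng.random(), line)
    if held is not None:  # copies remain but nothing is left to separate them
        return None
    return result


names = ["Alice", "Bob", "Alice", "Carol", "Bob", "Alice"]
print(randomize_separating_duplicates(names, random.Random(7)))
```

Always placing the most common remaining line guarantees separation whenever separation is possible. The trade-off is that the output is random but not uniformly distributed over every valid ordering.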

Here are the aggregate results for this and previous tests:

- ChatGPT GPT-4o: Interface: good, functionality: good

- Microsoft Copilot: Interface: adequate, functionality: fail

- Meta AI: Interface: adequate, functionality: fail

- Meta Code Llama: Complete failure

- Google Gemini Advanced: Interface: good, functionality: fail

- ChatGPT 4: Interface: good, functionality: good

- ChatGPT 3.5: Interface: good, functionality: good

2. Rewriting a String Function

This test evaluates the model's ability to handle dollars and cents conversions. GPT-4o successfully rewrote the code to reject inputs that could cause issues with subsequent lines, ensuring only valid dollar and cent values are processed.

I was a bit disappointed that it didn't automatically add a leading zero to values like .75, converting them to 0.75. However, since I didn't explicitly request this feature, it's not a fault of the AI. It's a reminder that even when an AI delivers functional code, you might need to refine the prompt to get exactly what you need.
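The original function isn't reproduced here, so the following is only a hypothetical Python sketch of the kind of validation described. It accepts an optional dollar part and an optional one- or two-digit cent part and rejects everything else. As an extra step the prompt never asked for, it also normalizes values like .75 to 0.75. The accepted format (no signs, commas, or currency symbols) and both function names are assumptions.

```python
import re

# Assumed format: optional dollar digits, then optionally "." plus one or two
# cent digits. Signs, thousands separators, and "$" are rejected.
_AMOUNT = re.compile(r"(\d*)(?:\.(\d{1,2}))?", re.ASCII)


def parse_dollars_and_cents(text):
    """Return (dollars, cents) as ints, or raise ValueError on bad input."""
    match = _AMOUNT.fullmatch(text.strip())
    # The pattern also matches an empty string, so require at least one digit.
    if match is None or not (match.group(1) or match.group(2)):
        raise ValueError(f"not a valid dollar amount: {text!r}")
    dollars = int(match.group(1) or "0")
    cents = int((match.group(2) or "0").ljust(2, "0"))  # ".5" means 50 cents
    return dollars, cents


def normalize_amount(text):
    """Format a valid amount with a leading zero and exactly two cent digits."""
    dollars, cents = parse_dollars_and_cents(text)
    return f"{dollars}.{cents:02d}"


print(normalize_amount(".75"))   # 0.75
print(normalize_amount("12.5"))  # 12.50
```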

Here are the aggregate results for this and previous tests:

- ChatGPT GPT-4o: Succeeded

- Microsoft Copilot: Failed

- Meta AI: Failed

- Meta Code Llama: Succeeded

- Google Gemini Advanced: Failed

- ChatGPT 4: Succeeded

- ChatGPT 3.5: Succeeded

3. Finding an Annoying Bug

This test is intriguing because the solution isn't immediately apparent. I was initially stumped by this error during my own coding, so I turned to the original ChatGPT model for help. It found the error instantly, which was mind-blowing at the time.

By contrast, three of the other LLMs I tested fell for the misdirection in this problem. The error message points to one part of the code, but the actual issue lies elsewhere, and identifying it requires deep knowledge of the WordPress framework.

Fortunately, GPT-4o correctly identified the problem and described the fix accurately.

Here are the aggregate results for this and previous tests:

- ChatGPT GPT-4o: Succeeded

- Microsoft Copilot: Failed. Spectacularly. Enthusiastically. Emojically.

- Meta AI: Succeeded

- Meta Code Llama: Failed

- Google Gemini Advanced: Failed

- ChatGPT 4: Succeeded

- ChatGPT 3.5: Succeeded

So far, GPT-4o is three for three. Let's see how it does with the final test.

4. Writing a Script

In response to this test, GPT-4o actually provided more than I asked for. The test involves the obscure Mac scripting tool Keyboard Maestro, Apple's AppleScript, and Chrome scripting behavior. Keyboard Maestro, by the way, is a game-changer for me: its ability to reprogram the OS and applications is a big reason Macs are my go-to for productivity.

To pass, the AI needs to correctly outline a solution using a combination of Keyboard Maestro code, AppleScript, and Chrome API functionality.

Surprisingly, GPT-4o gave me two different versions of the script.

Both versions correctly interacted with Keyboard Maestro, but they handled case sensitivity differently. The version that relied on "as lowercase" was incorrect, because AppleScript doesn't support that. The version that used "contains," which is case-insensitive, worked fine.
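AppleScript comparisons such as "contains" ignore case unless they are wrapped in a "considering case" block. That is why the "contains" version needed no lowercasing at all. Python's "in" operator is case-sensitive, so a rough Python analogue has to fold case explicitly. The sketch below illustrates only the matching logic and is not the script GPT-4o wrote. The "find_tab" function and its list-of-window-titles input are hypothetical stand-ins for data the real macro would pull from Chrome through AppleScript. Indexes start at 1, as they do in AppleScript.

```python
def find_tab(windows, search_text):
    """Return (window_index, tab_index) of the first tab whose title contains
    search_text, ignoring case, or None if no tab matches.

    `windows` is a hypothetical list of windows, each a list of tab titles.
    """
    needle = search_text.casefold()
    for w, tabs in enumerate(windows, start=1):
        for t, title in enumerate(tabs, start=1):
            if needle in title.casefold():  # case-insensitive "contains"
                return w, t
    return None


windows = [["Inbox - Mail", "ZDNET"], ["Keyboard Maestro Wiki"]]
print(find_tab(windows, "zdnet"))     # (1, 2)
print(find_tab(windows, "MAESTRO"))   # (2, 1)
print(find_tab(windows, "calendar"))  # None
```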

I'm giving GPT-4o a pass, albeit cautiously, because it did deliver working code. However, returning two options, one of which was incorrect, made me do extra work to evaluate and choose the right one. That could have been as time-consuming as writing the code myself.

Here are the aggregate results for this and previous tests:

- ChatGPT GPT-4o: Succeeded, but with reservations

- Microsoft Copilot: Failed

- Meta AI: Failed

- Meta Code Llama: Failed

- Google Gemini Advanced: Succeeded

- ChatGPT 4: Succeeded

- ChatGPT 3.5: Failed

Overall Results

Here's how all the models fared across the four tests:

- ChatGPT GPT-4o: 4 out of 4 succeeded, but with that one odd dual-choice answer

- Microsoft Copilot: 0 out of 4 succeeded

- Meta AI: 1 out of 4 succeeded

- Meta Code Llama: 1 out of 4 succeeded

- Google Gemini Advanced: 1 out of 4 succeeded

- ChatGPT 4: 4 out of 4 succeeded

- ChatGPT 3.5: 3 out of 4 succeeded
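Each overall score is the number of passes across the four per-test lists above, so the tally is easy to double-check. The Python below transcribes those lists. It counts test 1 as a pass only when functionality was rated good. That is an assumption, but it matches the totals given.

```python
# Pass (True) or fail (False) for tests 1-4, transcribed from the per-test lists.
# Test 1 counts as a pass only when functionality was rated "good".
RESULTS = {
    "ChatGPT GPT-4o": [True, True, True, True],
    "Microsoft Copilot": [False, False, False, False],
    "Meta AI": [False, False, True, False],
    "Meta Code Llama": [False, True, False, False],
    "Google Gemini Advanced": [False, False, False, True],
    "ChatGPT 4": [True, True, True, True],
    "ChatGPT 3.5": [True, True, True, False],
}


def tally(results):
    """Map each model to its number of passed tests (True counts as 1)."""
    return {model: sum(passes) for model, passes in results.items()}


for model, score in tally(RESULTS).items():
    print(f"{model}: {score} out of 4 succeeded")
```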

Up until now, ChatGPT has been my go-to for coding assistance. It's always delivered (except when it hasn't). The other AIs mostly fell short in my tests. But GPT-4o threw me a curveball with that last dual-answer response, and it made me wonder what's going on inside this model to cause such a hiccup.

Despite this, GPT-4o remains the top performer in my coding tests, so I'll likely keep using it and get more familiar with its quirks. Alternatively, I might revert to GPT-3.5 or GPT-4 in ChatGPT Plus. Stay tuned; the next time ChatGPT updates its model, I'll definitely rerun these tests to see if it can consistently pick the right answer across all four tests.

Have you tried coding with any of these AI models? What's been your experience? Let us know in the comments below.

Related article

Google's Stitch AI Simplifies App Design Process

Google Unveils Stitch AI Design Tool at I/O 2025Google introduced Stitch, its revolutionary AI-powered interface design tool, during the keynote at Google I/O 2025. This innovative solution transforms natural language prompts or reference images into

Google's Stitch AI Simplifies App Design Process

Google Unveils Stitch AI Design Tool at I/O 2025Google introduced Stitch, its revolutionary AI-powered interface design tool, during the keynote at Google I/O 2025. This innovative solution transforms natural language prompts or reference images into

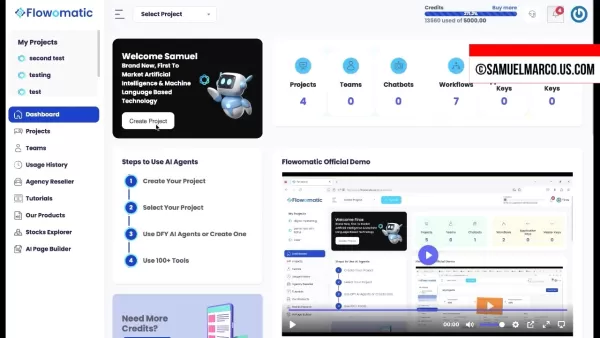

Claude 4 AI Outperforms Predecessors in Coding and Logical Reasoning Tasks

Anthropic has unveiled its next-gen Claude AI models - Claude Opus 4 and Claude Sonnet 4 - representing major advancements in hybrid-reasoning capabilities, particularly for programming applications and complex problem-solving scenarios.Positioned as

Claude 4 AI Outperforms Predecessors in Coding and Logical Reasoning Tasks

Anthropic has unveiled its next-gen Claude AI models - Claude Opus 4 and Claude Sonnet 4 - representing major advancements in hybrid-reasoning capabilities, particularly for programming applications and complex problem-solving scenarios.Positioned as

Flowomatic AI Agents 2.0 Transforms Business Automation With Cutting-Edge Tech

In today's competitive business landscape, artificial intelligence has become the driving force behind operational efficiency and growth strategies. Flowomatic AI Agents 2.0 represents a quantum leap in business automation technology, delivering an a

Comments (20)

JonathanAllen

April 26, 2025 at 7:46:22 AM EDT

GPT-4o is impressive, passing most of my coding tests! But that strange result left me confused. Still, it's versatile across text, graphics, and voice. If only it could explain that strange result, it would be perfect! 🤔

WillHarris

April 25, 2025 at 2:21:39 PM EDT

GPT-4o is really impressive, passing most of my coding tests! But that strange result confused me. Still, it's very versatile across text, graphics, and voice. If only it could explain that strange result, it would be perfect! 🤔

DonaldGonzález

April 24, 2025 at 7:41:59 AM EDT

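I was impressed that GPT-4o handled almost all of my coding tests perfectly! However, that one strange result bothered me. Even so, its versatility across text, graphics, and voice is wonderful. If it could have explained that strange result, it would have been perfect! 🤔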

JustinAnderson

April 23, 2025 at 1:12:28 AM EDT

GPT-4o impressed me with its coding skills! It passed all my tests except for one strange result that left me thinking. Its versatility across text, graphics, and voice is great! But that glitch needs fixing, OpenAI! 😎

NicholasClark

April 22, 2025 at 10:12:49 PM EDT

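I was impressed by GPT-4o's coding skills! It cleared almost all of my tests, but one strange result bothers me. Its versatility across text, graphics, and voice is wonderful! But please fix that one bug, OpenAI! 😅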

DavidThomas

April 22, 2025 at 1:04:24 PM EDT

GPT-4o is impressive, acing most of my coding tests! But that one weird result threw me off. Still, it's versatile across text, graphics, and voice. If only it could explain that odd outcome, it'd be perfect! 🤔
