Gemma 2 Now Accessible to Researchers, Developers

AI has the power to tackle some of the toughest challenges we face today—but that's only if everyone can get their hands on the tools to use it. That's why we kicked off the year by introducing Gemma, a set of lightweight, cutting-edge open models, crafted from the same tech that powers our Gemini models. Since then, we've expanded the Gemma family with CodeGemma, RecurrentGemma, and PaliGemma, each tailored for different AI tasks and easily accessible through partnerships with companies like Hugging Face, NVIDIA, and Ollama.

Now, we're excited to announce the global release of Gemma 2 for researchers and developers. Available in 9 billion (9B) and 27 billion (27B) parameter sizes, Gemma 2 delivers higher performance and more efficient inference than the first generation, with significant safety improvements built in. The 27B model is a powerhouse that rivals models more than twice its size, and it can run on a single NVIDIA H100 Tensor Core GPU or TPU host, slashing deployment costs.

A new standard for efficiency and performance in open models

We've put a lot of effort into redesigning the architecture for Gemma 2, aiming for top-notch performance and efficiency. Here's what sets it apart:

- Outsized performance: At 27B, Gemma 2 delivers the best performance in its size class and competes with models more than twice its size. The 9B model also leads its category, outperforming Llama 3 8B and other open models of similar size. For detailed results, see the technical report.

- Unmatched efficiency and cost savings: The 27B Gemma 2 model is designed to run inference efficiently at full precision on a single Google Cloud TPU host, NVIDIA A100 80GB Tensor Core GPU, or NVIDIA H100 Tensor Core GPU. Fitting on one accelerator keeps costs down without sacrificing performance, making AI deployments more accessible and budget-friendly.

- Blazing fast inference across hardware: Gemma 2 is optimized to run quickly on a range of hardware, from gaming laptops and high-end desktops to cloud setups. You can try it at full precision in Google AI Studio, run a quantized version locally on your CPU with Gemma.cpp, or run it on a home computer with an NVIDIA RTX or GeForce RTX GPU via Hugging Face Transformers.
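A quick back-of-the-envelope calculation shows why the 27B model can fit on a single 80 GB accelerator. The sketch below assumes that "full precision" refers to Gemma 2's 16-bit (bfloat16) weights, uses the nominal 9B and 27B parameter counts, and counts weight memory only; the KV cache, activations, and runtime overhead are ignored, so real requirements are higher. The int8 and int4 rows are illustrative and are not measured footprints of any particular quantized build.

```python
# Rough weight-only memory estimate for a dense model.
# Assumptions: "full precision" means 16-bit (bfloat16) weights, and the
# KV cache, activations, and framework overhead are ignored.

BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,   # illustrative 8-bit quantization
    "int4": 0.5,   # illustrative 4-bit quantization
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for size_name, params in [("9B", 9e9), ("27B", 27e9)]:
        for dtype in ("float32", "bfloat16", "int8", "int4"):
            gb = weight_memory_gb(params, dtype)
            print(f"Gemma 2 {size_name} @ {dtype}: ~{gb:.1f} GB of weights")
```

At 2 bytes per parameter, 27B weights come to roughly 54 GB, which leaves headroom on an 80 GB A100 or H100; the same weights stored as 32-bit floats (about 108 GB) would not fit on one card.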

Built for developers and researchers

Gemma 2 isn't just more powerful; it's also designed to fit seamlessly into your workflows:

- Open and accessible: Like the original Gemma models, Gemma 2 is released under a commercially friendly license, allowing developers and researchers to share and monetize their creations.

- Broad framework compatibility: Gemma 2 works with major AI frameworks and tools, including Hugging Face Transformers; JAX, PyTorch, and TensorFlow via native Keras 3.0; vLLM; Gemma.cpp; Llama.cpp; and Ollama, so you can easily integrate it into your existing workflows. It's also optimized with NVIDIA TensorRT-LLM to run on NVIDIA-accelerated infrastructure or as an NVIDIA NIM inference microservice, with optimization for NVIDIA NeMo to come. You can start fine-tuning today with Keras and Hugging Face, and we're working on enabling additional parameter-efficient fine-tuning options.

- Effortless deployment: Starting next month, Google Cloud customers can easily deploy and manage Gemma 2 on Vertex AI.
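The post doesn't say which parameter-efficient fine-tuning (PEFT) methods are planned. As an illustration only, LoRA (low-rank adaptation) is one widely used technique: it freezes a weight matrix W and learns a low-rank update, W' = W + BA. The sketch below just counts trainable parameters for one matrix; the 4096 x 4096 shape and rank 8 are hypothetical values, not Gemma 2's actual dimensions.

```python
# Count trainable parameters for full fine-tuning vs. LoRA on one weight matrix.
# The matrix shape and rank used below are hypothetical.


def full_finetune_params(d_out: int, d_in: int) -> int:
    """Full fine-tuning updates every entry of the d_out x d_in matrix W."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """LoRA freezes W and trains B (d_out x rank) and A (rank x d_in); W' = W + B @ A."""
    return d_out * rank + rank * d_in


if __name__ == "__main__":
    d_out, d_in, rank = 4096, 4096, 8  # hypothetical projection shape and LoRA rank
    full = full_finetune_params(d_out, d_in)
    lora = lora_params(d_out, d_in, rank)
    print(f"full: {full:,}  LoRA (r={rank}): {lora:,}  fraction trained: {lora / full:.2%}")
```

With these made-up numbers LoRA trains 65,536 parameters instead of 16,777,216, about 0.4% of the matrix, which is why LoRA-style methods are attractive on modest hardware.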

Dive into the new Gemma Cookbook, a collection of practical examples and recipes to help you build your own applications and fine-tune Gemma 2 for specific tasks. It also shows how to use Gemma with your preferred tools, including for common tasks such as retrieval-augmented generation.
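Retrieval-augmented generation (RAG) means fetching relevant text and placing it in the prompt before the model answers. The toy sketch below is not taken from the Gemma Cookbook: it uses bag-of-words cosine similarity as a stand-in for a real embedding model, and the example documents are made up. The prompt uses the `<start_of_turn>`/`<end_of_turn>` turn markers of Gemma's instruction-tuned chat format; check the model card for the exact template your checkpoint expects. Sending the prompt to the model is not shown.

```python
# Toy retrieval-augmented generation (RAG) prompt builder.
# Assumptions: bag-of-words cosine similarity stands in for a real embedding
# model, and the prompt follows Gemma's instruction-tuned turn markers.
import math
import re
from collections import Counter


def _vectorize(text: str) -> Counter:
    """Count lowercase alphanumeric tokens in the text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (ties keep input order)."""
    q = _vectorize(query)
    ranked = sorted(docs, key=lambda d: _cosine(q, _vectorize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Put the retrieved context into the user turn of a Gemma-style chat prompt."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    user_turn = f"Answer using only this context:\n{context}\n\nQuestion: {query}"
    return f"<start_of_turn>user\n{user_turn}<end_of_turn>\n<start_of_turn>model\n"


if __name__ == "__main__":
    docs = [
        "Gemma 2 comes in 9B and 27B parameter sizes.",
        "Gemma.cpp runs quantized models on a CPU.",
        "Vertex AI is a Google Cloud service.",
    ]
    print(build_prompt("What sizes does Gemma 2 come in?", docs, k=1))
```

In a real pipeline you would replace `_vectorize` and `_cosine` with an embedding model and a vector index; the prompt-assembly step would look much the same.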

Responsible AI development

We're committed to helping developers and researchers build and deploy AI responsibly, and our Responsible Generative AI Toolkit is part of this effort. We recently open-sourced LLM Comparator to help with in-depth evaluation of language models. Starting today, you can use its companion Python library to run comparative evaluations with your own model and data, and visualize the results in the LLM Comparator app. We're also working on open-sourcing our text watermarking technology, SynthID, for Gemma models.
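Comparative evaluation boils down to asking a judge (a human rater or another model) which of two responses is better for each prompt, then aggregating those verdicts, often per category. The sketch below illustrates only that aggregation step with a hypothetical record format; it is not the LLM Comparator Python library's API, so consult that library's documentation for the real interface.

```python
# Aggregate side-by-side judge verdicts into per-category win rates.
# Hypothetical record format: {"category": str, "verdict": "A" | "tie" | "B"}.
# This is NOT the LLM Comparator library's API; it only illustrates the idea.
from collections import defaultdict


def win_rates(records):
    """Return {category: (a_win_rate, tie_rate, b_win_rate)}."""
    counts = defaultdict(lambda: {"A": 0, "tie": 0, "B": 0})
    for rec in records:
        # An unknown verdict string raises KeyError rather than being silently counted.
        counts[rec["category"]][rec["verdict"]] += 1
    summary = {}
    for category, c in counts.items():
        total = c["A"] + c["tie"] + c["B"]  # always >= 1: a category exists only once seen
        summary[category] = (c["A"] / total, c["tie"] / total, c["B"] / total)
    return summary


if __name__ == "__main__":
    judged = [
        {"category": "coding", "verdict": "A"},
        {"category": "coding", "verdict": "B"},
        {"category": "coding", "verdict": "A"},
        {"category": "safety", "verdict": "tie"},
    ]
    for category, (a, tie, b) in win_rates(judged).items():
        print(f"{category}: A {a:.0%}, tie {tie:.0%}, B {b:.0%}")
```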

When training Gemma 2, we followed our rigorous internal safety processes, filtering pre-training data and conducting thorough testing and evaluation against a wide range of metrics to identify and mitigate potential biases and risks. We share our results on public benchmarks related to safety and representational harms.

Projects built with Gemma

Our first Gemma launch sparked over 10 million downloads and tons of amazing projects. For example, Navarasa used Gemma to develop a model celebrating India's linguistic diversity.

With Gemma 2, developers can take on even more ambitious projects, pushing the boundaries of what's possible in AI. We'll keep exploring new architectures and developing specialized Gemma variants to tackle a broader range of AI tasks and challenges. We're also gearing up to release a 2.6B-parameter Gemma 2 model, designed to balance lightweight accessibility with powerful performance. You can find out more about this in the technical report.

Getting started

Gemma 2 is now available in Google AI Studio, so you can test its full capabilities at 27B without any hardware requirements. You can also download Gemma 2's model weights from Kaggle and Hugging Face Models, with Vertex AI Model Garden coming soon.

To support research and development, Gemma 2 is available free of charge through Kaggle or via a free tier for Colab notebooks. First-time Google Cloud customers might be eligible for $300 in credits. Academic researchers can apply for the Gemma 2 Academic Research Program to get Google Cloud credits to speed up their research with Gemma 2. Applications are open now until August 9.

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

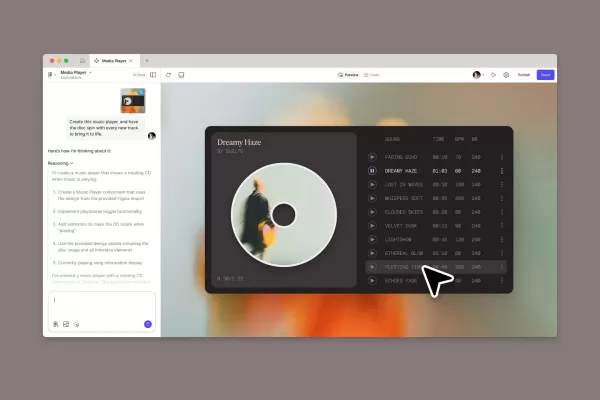

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments


MiaDavis

September 14, 2025 at 8:30:40 PM EDT

I'm excited that Gemma 2 has been released to developers! Since it's a lightweight model, it seems well suited to startups and individual developers too. I really want to try it soon, but when will Korean be supported? Whoa.

JuanMoore

July 27, 2025 at 9:20:54 PM EDT

Wow, Gemma 2 sounds like a game-changer for researchers! Open models like this could spark some wild innovations. Anyone else excited to see what devs cook up with this? 🚀

AndrewGarcía

April 20, 2025 at 1:42:54 PM EDT

Gemma 2 is a revolution for researchers! It's so accessible and easy to use. The only downside is the learning curve for beginners. But once you get the hang of it, it's incredible! 🚀

TimothyMitchell

April 20, 2025 at 11:50:33 AM EDT

Gemma 2 is revolutionary for researchers! It's accessible and easy to use. For beginners, though, the learning curve is steep. Once you get used to it, it's a wonderful tool! 🚀

BillyWilson

April 16, 2025 at 12:44:50 AM EDT

Gemma 2 is innovative for researchers! It's accessible and easy to use. For beginners, though, the learning curve is steep. Once you get used to it, it's really impressive! 🚀

StevenGonzalez

April 16, 2025 at 12:31:23 AM EDT

Gemma 2 is an innovative tool for researchers and developers! It's easy to get started with, and the models are state of the art. I do wish the documentation were a bit more detailed. Still, I strongly recommend it to anyone who wants to try their hand at AI! 🚀
