Billionaires Discuss Automating Jobs Away in This Week's AI Update

Hey everyone, welcome back to TechCrunch's AI newsletter! If you're not already subscribed, you can sign up here to get it delivered straight to your inbox every Wednesday.

We took a little break last week, but for good reason—the AI news cycle was on fire, thanks in large part to the sudden surge of Chinese AI company DeepSeek. It's been a whirlwind, but we're here now, and just in time to catch you up on the latest from OpenAI.

Over the weekend, OpenAI CEO Sam Altman made a stop in Tokyo to chat with Masayoshi Son, the head honcho at SoftBank. SoftBank, a big investor in OpenAI, has committed to helping fund a massive data center project in the U.S. So, it's no surprise Altman took some time to sit down with Son.

What did they talk about? Well, according to reports, it was all about AI "agents" and how they can streamline work processes. Son dropped a bombshell, announcing that SoftBank plans to spend a whopping $3 billion annually on OpenAI products. They're also teaming up to create something called "Cristal Intelligence," aiming to automate millions of white-collar jobs.

"By automating and autonomizing all of its tasks and workflows, SoftBank Corp. will transform its business and services, and create new value," SoftBank declared in a press release on Monday.

But let's take a step back and consider the human side of all this. When CEOs like Sebastian Siemiatkowski of Klarna boast about AI replacing humans, and Son echoes similar sentiments, it's easy to get caught up in the vision of wealth and efficiency. But what about the workers who might find themselves out of a job? It's a tough pill to swallow, especially when those leading the AI charge seem more focused on corporate gains than the impact on employees.

It's a bit disheartening, isn't it? Companies like OpenAI and investors like SoftBank are shaping the future of AI, yet they spend their time in the spotlight talking about automated businesses with fewer people on the payroll. I get it, they're businesses, not charities, and AI development is pricey. But maybe if they showed a bit more concern for the human element, people would trust AI more.

Just something to chew on.

News

- Deep research: OpenAI has rolled out a new AI "agent" that's designed to help you dive deep into complex research using ChatGPT.

- o3-mini: In other OpenAI news, the company has launched o3-mini, a new AI "reasoning" model. It isn't OpenAI's most powerful model, but it's designed to be more efficient and faster to respond.

- EU bans risky AI: As of Sunday, the European Union can ban AI systems that pose "unacceptable risk" or harm, including those used for social scoring and subliminal advertising.

- A play about AI "doomers": A new play dives into AI "doomer" culture, inspired by Sam Altman's brief ousting from OpenAI in November 2023. My colleagues Dominic and Rebecca have shared their thoughts after seeing the premiere.

- Tech to boost crop yields: Google's X "moonshot factory" has introduced Heritable Agriculture, a startup using data and machine learning to improve crop yields.

Research paper of the week

Reasoning models are great at tackling tough problems, especially in science and math. But they're not perfect. A study from Tencent researchers looks into "underthinking" in these models, where they drop promising lines of thought too soon. The study found that this happens more with harder problems, causing models to jump between ideas without reaching a solution.

The researchers suggest a "thought-switching penalty" to encourage models to fully explore each reasoning path before moving on, which could improve their accuracy.
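As a rough illustration of the idea, the sketch below lowers the scores of tokens that tend to signal a change of approach while a model is still early in its current line of thought. It's a toy built on assumptions, not the researchers' implementation: the function name, the `switch_tokens` set, and the `penalty` and `min_thought_len` parameters are all hypothetical.

```python
def apply_switch_penalty(logits, switch_tokens, tokens_in_thought,
                         penalty=3.0, min_thought_len=50):
    """Return a copy of `logits` with thought-switching tokens penalized.

    logits: dict mapping candidate token -> raw score
    switch_tokens: tokens assumed (hypothetically) to signal a new approach
    tokens_in_thought: number of tokens generated since the last switch
    """
    # Once the current thought is long enough, switching is no longer penalized.
    if tokens_in_thought >= min_thought_len:
        return dict(logits)
    # Otherwise, push down the score of every switch token.
    return {
        tok: score - penalty if tok in switch_tokens else score
        for tok, score in logits.items()
    }


# Made-up scores for three candidate next tokens.
logits = {"Alternatively": 2.5, "Therefore": 2.0, "so": 1.0}
early = apply_switch_penalty(logits, {"Alternatively"}, tokens_in_thought=10)
late = apply_switch_penalty(logits, {"Alternatively"}, tokens_in_thought=80)
print(max(early, key=early.get))
print(max(late, key=late.get))
```

With these made-up numbers, the penalty is enough to keep the model on its current path early in a thought, and it stops applying once the thought has run for a while.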

Model of the week

Image Credits: YuE

A team backed by TikTok's ByteDance, Chinese AI company Moonshot, and others has released YuE, a new open model that can generate high-quality music from prompts. It can create songs up to a few minutes long, complete with vocals and backing tracks, and it's available under an Apache 2.0 license, so it's free for commercial use.

But there are some catches. Running YuE requires a powerful GPU—generating a 30-second song takes six minutes with an Nvidia RTX 4090. Also, there's uncertainty about whether copyrighted data was used to train the model, which could lead to IP issues down the line.

Grab bag

Image Credits: Anthropic

Anthropic claims to have developed a new technique called Constitutional Classifiers to better protect against AI "jailbreaks." It uses two sets of classifier models: one for input and one for output. The input classifier adds prompts to a safeguarded model to flag potential jailbreaks, while the output classifier checks responses for harmful content.

Anthropic says this method can catch most jailbreaks, but it's not without its drawbacks. It makes each query 25% more computationally intensive, and the safeguarded model is slightly less likely to respond to harmless questions.
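To make the two-stage setup concrete, here's a simplified sketch of a model wrapped by an input check and an output check. It treats both classifiers as plain yes/no gates, which is a simplification, and none of it is Anthropic's code: `guarded_reply`, the toy model, and the keyword-based stand-in classifiers are hypothetical and exist only to show the control flow.

```python
def guarded_reply(prompt, model, input_flagged, output_flagged,
                  refusal="Sorry, I can't help with that."):
    """Run `model` on `prompt` with a check before and after generation."""
    # Screen the prompt before it reaches the model.
    if input_flagged(prompt):
        return refusal
    reply = model(prompt)
    # Screen the model's reply before it reaches the user.
    if output_flagged(reply):
        return refusal
    return reply


# Toy stand-ins (hypothetical): a real system would use trained classifiers.
def toy_model(prompt):
    return "Echo: " + prompt


def toy_input_flagged(prompt):
    return "ignore all previous instructions" in prompt.lower()


def toy_output_flagged(reply):
    return "forbidden" in reply.lower()


print(guarded_reply("What's the weather like?", toy_model,
                    toy_input_flagged, toy_output_flagged))
```

Every request in this sketch involves extra classifier calls on top of the model call itself, which gives some intuition for why a setup like this adds compute to each query.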

Related article

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

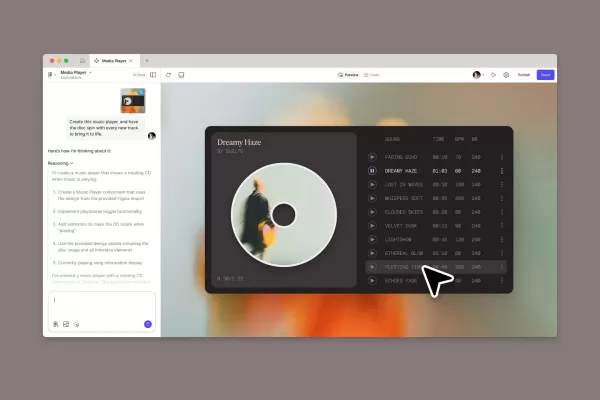

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (2)

JonathanMiller

September 29, 2025 at 12:30:35 AM EDT

So the billionaires are discussing job automation again... how totally new and surprising! 😒 Maybe they should talk to the people who are actually affected by this change, instead of just pontificating in their ivory towers.

BillyRoberts

July 27, 2025 at 9:19:05 PM EDT

Wow, automating jobs away? Billionaires chatting about AI taking over is wild! 😲 Feels like we're in a sci-fi flick, but I wonder if they're thinking about the folks losing jobs. Hope there's a plan for that!
