AI Power Surge: Anthropic CEO Warns of Race to Understand

Right after the AI Action Summit in Paris wrapped up, Anthropic's co-founder and CEO, Dario Amodei, didn't hold back. In a statement released on Tuesday, he called the event a "missed opportunity" and argued that several key issues need more focus and urgency, given how fast AI is advancing.

Anthropic teamed up with the French startup Dust for a developer-focused event in Paris, where TechCrunch got to chat with Amodei on stage. He shared his perspective and pushed for a balanced approach to AI innovation and governance, steering clear of both extreme optimism and harsh criticism.

Amodei, who used to be a neuroscientist, said, "I basically looked inside real brains for a living. And now we're looking inside artificial brains for a living. So we will, over the next few months, have some exciting advances in the area of interpretability—where we're really starting to understand how the models operate." But he also pointed out that it's a race. "It's a race between making the models more powerful, which is incredibly fast for us and incredibly fast for others—you can't really slow down, right? ... Our understanding has to keep up with our ability to build things. I think that's the only way," he added.

Since the first AI summit in Bletchley, UK, the conversation around AI governance has shifted a lot, influenced by the current geopolitical climate. U.S. Vice President JD Vance, speaking at the AI Action Summit, said, "I'm not here this morning to talk about AI safety, which was the title of the conference a couple of years ago. I'm here to talk about AI opportunity."

Amodei, however, is trying to bridge the gap between safety and opportunity. He believes that focusing more on safety can actually be an opportunity. "At the original summit, the UK Bletchley Summit, there were a lot of discussions on testing and measurement for various risks. And I don't think these things slowed down the technology very much at all," he said at the Anthropic event. "If anything, doing this kind of measurement has helped us better understand our models, which in the end, helps us produce better models."

Even when emphasizing safety, Amodei made it clear that Anthropic is still all in on building frontier AI models. "I don't want to do anything to reduce the promise. We're providing models every day that people can build on and that are used to do amazing things. And we definitely should not stop doing that," he said. Later, he added, "When people are talking a lot about the risks, I kind of get annoyed, and I say: 'oh, man, no one's really done a good job of really laying out how great this technology could be.'"

When the topic turned to Chinese LLM-maker DeepSeek's recent models, Amodei downplayed their achievements, calling the public reaction "inorganic." He said, "Honestly, my reaction was very little. We had seen V3, which is the base model for DeepSeek R1, back in December. And that was an impressive model. The model that was released in December was on this kind of very normal cost reduction curve that we've seen in our models and other models." What caught his attention was that the model wasn't coming from the usual "three or four frontier labs" in the U.S., like Google, OpenAI, and Anthropic. He expressed concern about authoritarian governments dominating the technology. As for DeepSeek's claimed training costs, he dismissed them as "just not accurate and not based on facts."

While Amodei didn't announce any new models at the event, he hinted at upcoming releases with enhanced reasoning capabilities. "We're generally focused on trying to make our own take on reasoning models that are better differentiated. We worry about making sure we have enough capacity, that the models get smarter, and we worry about safety things," he said.

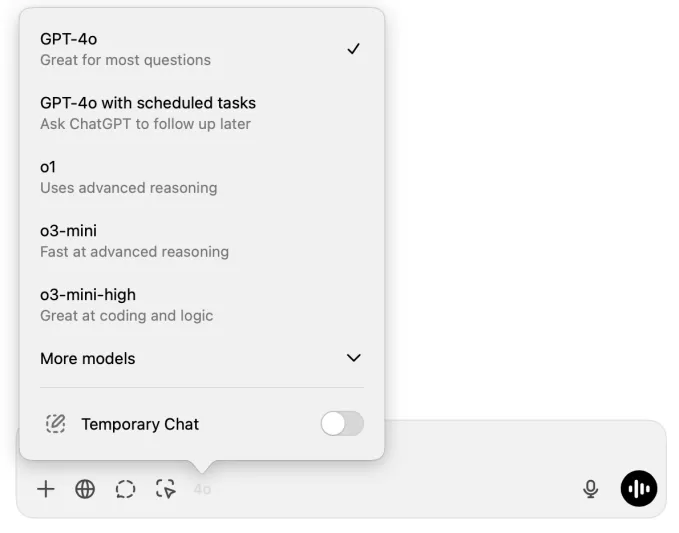

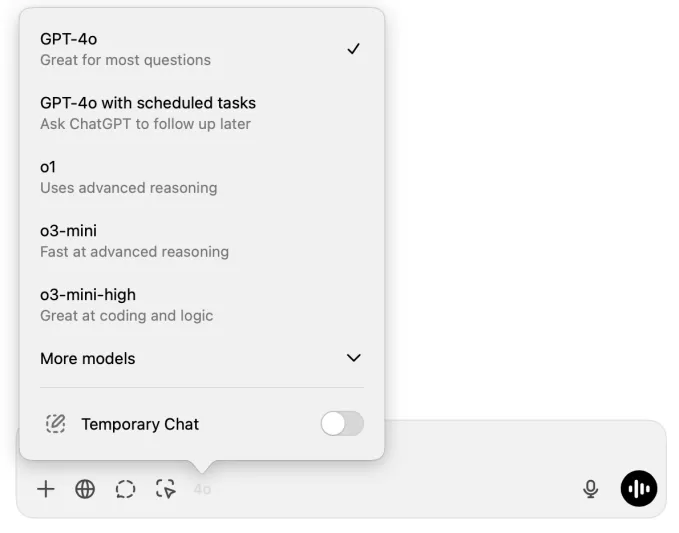

Anthropic is also tackling the model selection challenge. If you're a ChatGPT Plus user, for instance, it can be tough to decide which model to use for your next message.

Image Credits: Screenshot of ChatGPT

The same goes for developers using large language model (LLM) APIs in their apps, who need to balance accuracy, response speed, and costs.

Amodei questioned the distinction between normal and reasoning models. "We've been a little bit puzzled by the idea that there are normal models and there are reasoning models and that they're sort of different from each other," he said. "If I'm talking to you, you don't have two brains and one of them responds right away and like, the other waits a longer time."

He believes there should be a smoother transition between pre-trained models like Claude 3.5 Sonnet or GPT-4o and models trained with reinforcement learning that can produce chains of thought (CoT), like OpenAI's o1 or DeepSeek's R1. "We think that these should exist as part of one single continuous entity. And we may not be there yet, but Anthropic really wants to move things in that direction," Amodei said. "We should have a smoother transition from that to pre-trained models, rather than 'here's thing A and here's thing B.'"

As AI companies like Anthropic keep pushing out better models, Amodei sees huge potential for disruption across industries. "We're working with some pharma companies to use Claude to write clinical studies, and they've been able to reduce the time it takes to write the clinical study report from 12 weeks to three days," he said.

He envisions a "renaissance of disruptive innovation" in AI applications across sectors like legal, financial, insurance, productivity, software, and energy. "I think there's going to be—basically—a renaissance of disruptive innovation in the AI application space. And we want to help it, we want to support it all," he concluded.


Comments (53)

ArthurLopez

August 26, 2025 at 1:01:20 PM EDT

Dario's got a point—AI's zooming ahead, and we're still playing catch-up. Feels like we're in a sci-fi flick, but nobody read the script! 😅 Curious if we'll ever get a grip on this tech race.

StephenHernández

August 26, 2025 at 7:01:14 AM EDT

Dario's got a point—AI's moving way too fast for these summits to just be talkfests. We need real action, not just fancy speeches. 😤 Curious what Anthropic’s cooking up next to keep up with the race!

JasonSanchez

August 20, 2025 at 11:01:16 AM EDT

Dario's got a point—AI's zooming ahead, and we’re barely keeping up! The Paris summit sounded like a snooze fest. Anyone else worried we’re not ready for what’s coming? 🤔

DavidGonzalez

April 21, 2025 at 4:30:10 AM EDT

Anthropic's CEO really laid it out at the AI Summit. It's scary how fast AI is moving, and he's right—we need to take it more seriously. His warning about the race to understand AI hit home. We've gotta step up our game! 🚀

EricRoberts

April 18, 2025 at 8:48:00 PM EDT

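Anthropic's CEO spoke really candidly at the AI summit. It's scary how fast AI is advancing, and as he says, we need to treat it more seriously. His warning about the race to understand AI really resonated. We need to work harder too! 🚀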

JamesMiller

April 18, 2025 at 6:08:01 AM EDT

Dario's take on the AI Action Summit was right! It really did seem like a missed opportunity to dig deeper into the critical issues AI faces. His urgency is exactly what we need right now. I can't wait to see what the next steps will be! 🚀
