Ethical AI Development: Critical Considerations for Responsible Innovation

As Artificial Intelligence (AI) advances and integrates into daily life, ethical considerations in its development and use are paramount. This article explores essential aspects such as transparency, fairness, privacy, accountability, safety, and leveraging AI for societal benefit. Understanding these dimensions is key to building trust, promoting responsible innovation, and maximizing AI’s potential while minimizing risks. Let’s navigate the ethical complexities of AI to ensure it serves humanity responsibly and effectively.

Key Takeaways

Transparency and explainability build confidence in AI systems.

Fairness and bias reduction are vital to prevent discrimination and promote equity.

Prioritizing privacy and data security safeguards sensitive information.

Accountability frameworks are essential for addressing AI-related harms.

AI safety and robustness help prevent unintended consequences and improve reliability.

Using AI for social good addresses challenges in healthcare, education, and beyond.

Mitigating labor market impacts reduces job displacement and inequality.

Preserving human autonomy maintains agency and decision-making control.

Ethical AI research requires careful consideration of benefits and risks.

Effective AI governance supports responsible development and deployment.

Exploring AI’s Ethical Dimensions

Transparency and Explainability in AI

As AI systems grow more sophisticated, understanding their operations becomes increasingly difficult. Transparency and explainability are critical for fostering trust and accountability.

Transparency in AI involves clarity about how systems function, including their data, algorithms, and decision-making processes. Explainability ensures AI decisions are comprehensible to humans, enabling stakeholders to understand the reasoning behind outcomes.

The Role of Explainable AI (XAI):

Explainable AI (XAI) is the field that develops models and methods whose decisions humans can interpret, allowing stakeholders to evaluate decision rationales and strengthening trust and oversight. Key XAI techniques include:

- Rule-based systems: Employ clear rules for transparent decision-making.

- Decision trees: Offer visual decision pathways for easier comprehension.

- Feature importance: Highlight key data factors driving model predictions.
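To make feature importance concrete, here is a minimal sketch of permutation importance, one common model-agnostic way to estimate it: shuffle one feature's values across rows and measure how much accuracy drops. The loan-approval rule `approve`, the feature names `income` and `age`, and the data are hypothetical, chosen only for illustration.

```python
import random
from typing import Callable, Dict, List, Sequence

Row = Dict[str, float]

def accuracy(model: Callable[[Row], int], rows: Sequence[Row], labels: Sequence[int]) -> float:
    """Fraction of rows the model labels correctly."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model: Callable[[Row], int], rows: Sequence[Row], labels: Sequence[int],
                           features: List[str], repeats: int = 10, seed: int = 0) -> Dict[str, float]:
    """Mean accuracy drop when one feature's values are shuffled across rows.

    Shuffling breaks the link between that feature and the label, so a large
    drop means the model leans heavily on the feature.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importance: Dict[str, float] = {}
    for feature in features:
        drops = []
        for _ in range(repeats):
            column = [r[feature] for r in rows]
            rng.shuffle(column)
            shuffled = [{**r, feature: v} for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importance[feature] = sum(drops) / repeats
    return importance

def approve(row: Row) -> int:
    """Hypothetical rule-based loan model: approve when income exceeds 50 (age is ignored)."""
    return int(row["income"] > 50)

rows = [{"income": inc, "age": age} for inc, age in
        [(20, 25), (30, 40), (45, 35), (48, 60), (55, 30), (70, 45), (80, 22), (95, 50)]]
labels = [approve(r) for r in rows]  # labels match the rule, so baseline accuracy is 1.0

importance = permutation_importance(approve, rows, labels, ["income", "age"])
print(importance)
```

Because the rule never reads `age`, shuffling it cannot change any prediction, so its importance is exactly zero; shuffling `income` scrambles the rule's only input and lowers accuracy. In practice this is usually computed on held-out data rather than on the training set.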

Advantages of Transparency and Explainability:

- Fostering Trust: Clear systems encourage user confidence and adoption.

- Enhancing Accountability: Understanding operations aids in identifying and correcting errors or biases.

- Supporting Oversight: Transparency enables stakeholders to ensure ethical alignment.

- Improving Decision-Making: Clear rationales provide insights for informed choices.

Fairness and Bias Reduction in AI

AI systems may perpetuate biases present in training data, leading to unfair outcomes. Fairness ensures AI avoids discrimination based on attributes like race, gender, or religion, while bias reduction addresses inequities in system outputs.

Sources of AI Bias:

- Historical Bias: Reflects societal biases in training data.

- Sampling Bias: Stems from unrepresentative data samples.

- Measurement Bias: Results from flawed measurement methods.

- Algorithmic Bias: Arises from choices in algorithm design or implementation.

Bias Reduction Strategies:

- Data Preprocessing: Rebalance, reweight, or correct training data to reduce bias before training.

- Algorithm Design: Create fairness-focused algorithms.

- Model Evaluation: Use metrics to assess bias and fairness.

- Post-processing: Adjust outputs to reduce discriminatory effects.
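The model-evaluation and post-processing strategies can be sketched together. This example assumes a binary classifier that outputs scores, a group label for each person, and demographic parity (equal selection rates across groups) as the chosen fairness criterion; the scores, groups, and the 0.65 cutoff are hypothetical.

```python
from typing import Dict, Sequence

def selection_rates(decisions: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Share of positive (1) decisions within each group."""
    rates = {}
    for g in sorted(set(groups)):
        member_decisions = [d for d, gg in zip(decisions, groups) if gg == g]
        rates[g] = sum(member_decisions) / len(member_decisions)
    return rates

def demographic_parity_difference(decisions: Sequence[int], groups: Sequence[str]) -> float:
    """Gap between the highest and lowest group selection rate (0.0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

def group_thresholds(scores: Sequence[float], groups: Sequence[str],
                     target_rate: float) -> Dict[str, float]:
    """Post-processing: choose a per-group cutoff so each group is selected at about target_rate."""
    thresholds = {}
    for g in sorted(set(groups)):
        ranked = sorted((s for s, gg in zip(scores, groups) if gg == g), reverse=True)
        k = max(1, round(target_rate * len(ranked)))  # how many to select in this group
        thresholds[g] = ranked[k - 1]  # scores >= the k-th highest select k people (absent ties)
    return thresholds

scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.5, 0.4, 0.2]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

# One global cutoff: group A is selected at 0.50, group B at 0.25.
single = [int(s >= 0.65) for s in scores]
before = demographic_parity_difference(single, groups)

# Group-specific cutoffs that target a 50% selection rate in every group.
thresholds = group_thresholds(scores, groups, target_rate=0.5)
adjusted = [int(s >= thresholds[g]) for s, g in zip(scores, groups)]
after = demographic_parity_difference(adjusted, groups)

print(before, after, thresholds)  # 0.25 0.0 {'A': 0.8, 'B': 0.5}
```

Equal selection rates are only one fairness criterion; others, such as equal true-positive rates across groups, can conflict with it, and using a group attribute at decision time may be legally restricted in some settings.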

Promoting Equity:

- Diverse Data Sets: Train AI with representative data.

- Bias Audits: Regularly check systems for biased behavior.

- Stakeholder Engagement: Involve diverse groups in development.

- Transparency and Accountability: Ensure clear, accountable decision-making.
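A recurring bias audit can start with the decision log itself. This sketch computes each group's selection rate relative to the most-selected group and flags ratios below 0.8, the "four-fifths" rule of thumb from US employment-selection guidance; the `(group, decision)` log format and the sample data are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def disparate_impact_audit(records: Iterable[Tuple[str, int]],
                           threshold: float = 0.8) -> Dict[str, dict]:
    """Per group: selection rate, ratio to the most-selected group, and whether
    that ratio falls below `threshold` (0.8 = the four-fifths rule of thumb)."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        selected[group] += decision  # decision is 1 (selected) or 0
    rates = {g: selected[g] / totals[g] for g in totals}
    best = max(rates.values())
    report = {}
    for g, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0  # nobody selected anywhere: no disparity
        report[g] = {"rate": rate, "ratio": ratio, "flagged": ratio < threshold}
    return report

# Hypothetical log: 6 of 10 group-A and 3 of 10 group-B applicants approved.
decision_log = [("A", 1)] * 6 + [("A", 0)] * 4 + [("B", 1)] * 3 + [("B", 0)] * 7
for group, row in disparate_impact_audit(decision_log).items():
    print(group, row)  # B is flagged: 0.3 / 0.6 = 0.5, below 0.8
```

A flag is a prompt for investigation rather than proof of discrimination; rates computed on small groups are especially noisy.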

Privacy and Data Security in AI

AI relies on vast datasets, often including sensitive information. Protecting privacy and securing data are critical for user trust.

Privacy in AI protects personal data from unauthorized access or use, while data security prevents theft, loss, or corruption.

Core Privacy and Security Principles:

- Data Minimization: Collect only essential data.

- Purpose Limitation: Use data solely for intended purposes.

- Data Security: Apply robust safeguards against unauthorized access.

- Transparency: Inform users about data usage.

- User Control: Allow individuals to manage their data.
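Data minimization and purpose limitation can be enforced in code by passing every record through an allow-list for its declared purpose before it reaches a model. In this sketch the purposes, field names, and the policy table `ALLOWED_FIELDS` are hypothetical.

```python
from typing import Any, Dict, Set

# Hypothetical policy table: the only fields each declared purpose may use.
ALLOWED_FIELDS: Dict[str, Set[str]] = {
    "loan_scoring": {"income", "existing_debt", "employment_years"},
    "fraud_check": {"transaction_amount", "merchant_id", "timestamp"},
}

def minimize(record: Dict[str, Any], purpose: str) -> Dict[str, Any]:
    """Keep only the fields permitted for `purpose`; refuse undeclared purposes."""
    if purpose not in ALLOWED_FIELDS:
        raise PermissionError(f"no data-use policy declared for purpose {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}

applicant = {
    "name": "Jane Doe",
    "email": "jane@example.com",
    "date_of_birth": "1990-01-01",
    "income": 52000,
    "existing_debt": 8000,
    "employment_years": 4,
}
print(minimize(applicant, "loan_scoring"))
# {'income': 52000, 'existing_debt': 8000, 'employment_years': 4}
```

Refusing undeclared purposes, rather than passing everything through, makes purpose limitation the default, and the policy table doubles as documentation of what data each use relies on.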

Techniques for Privacy and Security:

- Anonymization: Remove identifiable data to protect privacy.

- Differential Privacy: Add calibrated noise to query results or training so that outputs reveal little about any single individual.

- Federated Learning: Train models across decentralized devices or servers, sharing model updates instead of raw data.

- Encryption: Secure data against unauthorized access.
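Differential privacy is easiest to see on a single count query. The sketch below uses the Laplace mechanism: adding or removing one person changes a count by at most 1, so adding Laplace noise with scale 1/epsilon gives epsilon-differential privacy for that query. The dataset and epsilon values are hypothetical, and Python's `random` module is used only for illustration; production systems should rely on a vetted differential-privacy library, because naive floating-point noise sampling has known weaknesses.

```python
import random
from typing import Callable, Iterable

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(values: Iterable[int], predicate: Callable[[int], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy via the Laplace mechanism.

    A count has sensitivity 1 (one person changes it by at most 1),
    so Laplace noise with scale 1 / epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)

rng = random.Random(42)          # seeded for a reproducible illustration only
ages = list(range(18, 118))      # hypothetical dataset: 100 ages from 18 to 117
noisy = private_count(ages, lambda age: age >= 65, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 65+: {noisy:.1f} (true count is 53)")
```

A smaller epsilon means more noise and stronger privacy, and every additional query on the same data spends more of the overall privacy budget.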

Building Trust through Privacy:

- Privacy-Enhancing Technologies: Protect user data with advanced tools.

- Data Security Measures: Prevent breaches with strong protections.

- Regulatory Compliance: Adhere to laws like GDPR and CCPA.

- Clear Communication: Inform users about privacy practices.

Accountability and Responsibility in AI

Assigning responsibility for AI errors or harms is complex, especially with multiple stakeholders involved. Clear frameworks are needed to ensure accountability.

Accountability ensures those developing and deploying AI are answerable for its impacts, while responsibility focuses on ethical stewardship.

Accountability Challenges:

- System Complexity: Tracing errors in intricate AI systems is difficult.

- Multiple Stakeholders: Various parties are involved in AI’s lifecycle.

- Lack of Legal Frameworks: Clear liability guidelines are often absent.

Promoting Accountability:

- Clear Guidelines: Set standards for AI development and use.

- Legal Frameworks: Define liability for AI-driven outcomes.

- Auditing and Monitoring: Detect and address errors or biases.

- Explainable AI: Use XAI to clarify decision rationales.
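For the auditing side of accountability, one common pattern is a tamper-evident decision log: each record stores a hash of its contents plus the previous record's hash, so an after-the-fact edit breaks the chain. This is a minimal sketch using SHA-256 from Python's standard library; the `DecisionLog` class and the record fields (`model`, `input_id`, `decision`, `reviewer`) are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

def _digest(entry: Dict[str, Any], prev_hash: str) -> str:
    """SHA-256 over the entry (canonical JSON) concatenated with the previous hash."""
    payload = json.dumps(entry, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

class DecisionLog:
    """Append-only log of AI decisions; each record's hash covers the previous
    record's hash, so editing or deleting an earlier record breaks verification."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.records: List[Dict[str, Any]] = []

    def append(self, entry: Dict[str, Any]) -> str:
        prev = self.records[-1]["hash"] if self.records else self.GENESIS
        h = _digest(entry, prev)
        self.records.append({"entry": entry, "prev": prev, "hash": h})
        return h

    def verify(self) -> bool:
        """Recompute every hash in order; any mismatch means the log was altered."""
        prev = self.GENESIS
        for rec in self.records:
            if rec["prev"] != prev or _digest(rec["entry"], prev) != rec["hash"]:
                return False
            prev = rec["hash"]
        return True

audit_log = DecisionLog()
audit_log.append({"model": "credit-v3", "input_id": "app-101", "decision": "deny", "reviewer": None})
audit_log.append({"model": "credit-v3", "input_id": "app-102", "decision": "approve", "reviewer": "analyst-7"})
print(audit_log.verify())  # True
audit_log.records[0]["entry"]["decision"] = "approve"  # simulate after-the-fact tampering
print(audit_log.verify())  # False
```

The chain only detects tampering if the latest hash is also kept somewhere the editor cannot rewrite, such as a separate system; otherwise someone could simply recompute every hash.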

Encouraging Ethical Practices:

- Ethics Training: Educate developers on ethical principles.

- Ethical Review Boards: Assess AI projects for ethical impacts.

- Industry Standards: Establish norms for responsible AI.

- Stakeholder Engagement: Involve diverse groups in evaluations.

AI Safety and Robustness

AI systems must be safe and robust: they should avoid causing harm and remain reliable despite attacks, errors, or unexpected inputs.

Key Safety and Robustness Considerations:

- Unintended Consequences: Prevent harm from AI actions.

- Adversarial Attacks: Protect against malicious manipulations.

- Unexpected Inputs: Handle out-of-distribution inputs effectively.

- System Reliability: Ensure consistent performance.

Enhancing Safety and Robustness:

- Formal Verification: Use mathematical proofs to confirm that a system satisfies specified safety properties.

- Adversarial Training: Train systems to resist attacks.

- Anomaly Detection: Identify and flag unusual inputs.

- Redundancy: Build fault-tolerant systems for reliability.
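Anomaly detection for unexpected inputs can start very simply: record each feature's mean and standard deviation on the training data and flag live inputs that sit many standard deviations away. This is a deliberately basic stand-in for dedicated out-of-distribution detectors; the `InputGuard` class, the feature names, the training data, and the 4.0 z-score limit are assumptions.

```python
import statistics
from typing import Dict, List, Sequence, Tuple

class InputGuard:
    """Flags input features that lie far outside the training distribution."""

    def __init__(self, training_rows: Sequence[Dict[str, float]], z_limit: float = 4.0) -> None:
        self.z_limit = z_limit
        self.stats: Dict[str, Tuple[float, float]] = {}
        for feature in training_rows[0]:
            column = [row[feature] for row in training_rows]
            self.stats[feature] = (statistics.mean(column), statistics.stdev(column))

    def check(self, row: Dict[str, float]) -> List[str]:
        """Return the anomalous features; an empty list means the input looks familiar."""
        flagged = []
        for feature, (mean, sd) in self.stats.items():
            if sd == 0:
                out_of_range = row[feature] != mean  # constant in training: any new value is unusual
            else:
                out_of_range = abs(row[feature] - mean) / sd > self.z_limit
            if out_of_range:
                flagged.append(feature)
        return flagged

# Hypothetical training data: incomes 40..79 and ages 30..39.
train = [{"income": 40 + i, "age": 30 + (i % 10)} for i in range(40)]
guard = InputGuard(train)

for candidate in ({"income": 60, "age": 35}, {"income": 60, "age": 140}, {"income": 1000, "age": 35}):
    flags = guard.check(candidate)
    action = "send to fallback or human review" if flags else "score with model"
    print(candidate, flags, action)
```

Routing flagged inputs to a fallback path, rather than scoring them anyway, is one way the anomaly check and redundancy ideas work together.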

Advancing Responsible Innovation:

- Safety Research: Invest in AI safety studies.

- Safety Standards: Develop guidelines for robust AI.

- Testing and Validation: Rigorously test systems for safety.

- Continuous Monitoring: Track systems to address issues.
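Continuous monitoring often includes checking whether live inputs still resemble the data the model was validated on. One widely used drift measure is the Population Stability Index (PSI), which compares the share of values falling into each bin. The bin edges, sample data, and status labels below are illustrative assumptions; a commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift.

```python
import math
from typing import List, Sequence

def bin_fractions(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Fraction of values in each bin defined by sorted `edges` (len(edges) + 1 bins).

    Empty bins are floored at `eps` so the logarithm below stays finite.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v >= edge for edge in edges)] += 1  # index = number of edges at or below v
    return [max(c / len(values), eps) for c in counts]

def psi(reference: Sequence[float], live: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index: sum over bins of (live - ref) * ln(live / ref)."""
    ref, cur = bin_fractions(reference, edges), bin_fractions(live, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

edges = [0.25, 0.5, 0.75]
reference = [i / 100 for i in range(100)]          # hypothetical validation-time feature values
shifted = [min(0.99, x + 0.3) for x in reference]  # hypothetical live values that drifted upward

for name, live in (("unchanged", reference), ("shifted", shifted)):
    score = psi(reference, live, edges)
    status = "significant shift" if score > 0.25 else "moderate shift" if score > 0.1 else "stable"
    print(f"{name}: PSI = {score:.3f} ({status})")
```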

AI for Social Good

AI can tackle societal challenges like healthcare, education, and sustainability, improving lives through targeted solutions.

Healthcare: Enhance diagnosis, treatment, and prevention.

Education: Personalize learning and improve access.

Environmental Sustainability: Optimize resources and reduce pollution.

Poverty Reduction: Expand financial access and job opportunities.

Disaster Relief: Improve preparedness and recovery.

Ethical Considerations for Social Good:

- Equity and Inclusion: Ensure benefits reach marginalized groups.

- Transparency and Accountability: Maintain clear decision-making processes.

- Privacy and Security: Protect sensitive data.

- Human Autonomy: Preserve individual control.

Promoting Social Good Initiatives:

- Fund Research: Support AI for social impact.

- Support Startups: Back ventures addressing societal issues.

- Public-Private Partnerships: Collaborate on impactful solutions.

- Stakeholder Engagement: Involve diverse groups in development.

Labor and Employment Impacts

AI’s automation potential raises concerns about job displacement and inequality, requiring proactive workforce strategies.

AI’s Labor Impacts:

- Job Displacement: Automation may reduce jobs in some sectors.

- Job Creation: New roles emerge in AI-related fields.

- Skill Gap: Demand grows for specialized skills.

- Income Inequality: Pay gaps may widen between higher- and lower-skilled workers.

Mitigating Labor Impacts:

- Reskilling Programs: Train workers for new roles.

- Job Creation: Invest in AI-driven industries.

- Social Safety Nets: Support displaced workers.

- Lifelong Learning: Promote continuous skill development.

Ensuring a Fair Transition:

- Retraining Programs: Help workers shift to new industries.

- Unemployment Benefits: Support those affected by automation.

- Universal Basic Income: Explore safety nets for all.

- Worker Empowerment: Involve workers in AI decisions.

Preserving Human Autonomy

Advanced AI risks diminishing human control, making it essential to maintain agency and decision-making power.

Human autonomy ensures individuals retain control over their choices and lives, preventing undue AI influence.

Risks to Autonomy:

- AI Manipulation: Systems may sway behavior subtly.

- Over-Reliance: Dependence on AI could weaken critical thinking.

- Loss of Control: Unchecked AI may produce unintended consequences that people cannot easily correct.

Preserving Autonomy:

- Transparency: Ensure clear, understandable AI processes.

- Human-in-the-Loop: Require human oversight in AI systems.

- User Control: Allow opting out of AI-driven decisions.

- Critical Thinking Education: Empower informed decision-making.
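Human-in-the-loop oversight and user opt-out can be expressed as a routing rule in front of the model. In this sketch the model's output is applied automatically only when the user has not opted out, the case is not marked high-stakes, and the model's confidence clears a threshold; the `Decision` type, the `route` function, the 0.9 threshold, and the high-stakes flag are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Decision:
    outcome: Optional[str]  # None while the case waits for a person
    decided_by: str         # "model" or "human_review"
    reason: str

def route(prediction: str, confidence: float, user_opted_out: bool,
          high_stakes: bool, min_confidence: float = 0.9) -> Decision:
    """Let the model decide only when the user has not opted out, the case is not
    high-stakes, and the model is confident; otherwise send it to a person."""
    if user_opted_out:
        return Decision(None, "human_review", "user opted out of automated decisions")
    if high_stakes:
        return Decision(None, "human_review", "high-stakes case requires human sign-off")
    if confidence < min_confidence:
        return Decision(None, "human_review", f"confidence {confidence:.2f} below {min_confidence}")
    return Decision(prediction, "model", "automated: confident, low-stakes, no opt-out")

print(route("approve", 0.97, user_opted_out=False, high_stakes=False))
print(route("approve", 0.97, user_opted_out=True, high_stakes=False))
print(route("approve", 0.62, user_opted_out=False, high_stakes=False))
```

Recording the `reason` alongside each routed case keeps the oversight step itself transparent to users and auditors.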

Ethical AI Development:

- Human Values: Align AI with societal well-being.

- Bias Mitigation: Prevent discriminatory outcomes.

- Data Privacy: Protect personal information.

- Accountability: Hold developers responsible for AI actions.

Ethical AI Research

Responsible AI research balances innovation with careful consideration of societal and environmental impacts.

Ethical Research Principles:

- Transparency: Share findings to foster collaboration.

- Accountability: Own the consequences of research.

- Beneficence: Maximize benefits, minimize harms.

- Justice: Ensure research benefits all, including marginalized groups.

Promoting Ethical Research:

- Ethics Review Boards: Evaluate research implications.

- Ethical Guidelines: Set standards for researchers.

- Stakeholder Engagement: Involve diverse perspectives.

- Public Education: Inform society about AI’s potential.

Driving Innovation:

- Open Research: Encourage data sharing for progress.

- AI Education: Train a skilled workforce.

- Collaboration: Foster partnerships across sectors.

- Ethical Innovation: Develop solutions for social challenges.

AI Governance and Regulation

Robust governance and regulations are crucial to address ethical concerns and ensure responsible AI development.

Governance Challenges:

- Rapid Advancements: Keeping up with AI’s pace.

- System Complexity: Understanding complex AI systems well enough to regulate them.

- Lack of Consensus: Agreeing on ethical standards.

Effective Governance Strategies:

- Ethical Standards: Define responsible AI principles.

- Regulatory Frameworks: Establish legal guidelines.

- Ethics Boards: Provide oversight and guidance.

- Transparency: Require clear decision-making processes.

Building Public Trust:

- Accountability: Ensure developers are answerable.

- Human Rights: Protect individual freedoms.

- Social Good: Use AI to address societal needs.

- Public Engagement: Involve communities in governance discussions.

Sample AI Governance Framework:

| Component | Description |
|---|---|
| Ethical Principles | Core values like fairness, transparency, and accountability guiding AI development. |
| Policy Guidelines | Rules detailing how AI systems should be designed and deployed. |
| Regulatory Framework | Legal requirements ensuring ethical compliance and human rights protection. |
| Oversight Mechanisms | Processes for monitoring and auditing AI for ethical use. |
| Accountability Measures | Systems to hold developers accountable, including penalties for ethical breaches. |

Advantages of Ethical AI

Shaping a Responsible AI Future

Addressing AI ethics is critical for creating a future where AI benefits humanity responsibly. Prioritizing ethics unlocks AI’s potential while minimizing risks. Key benefits include:

- Building Trust: Ethical practices boost stakeholder confidence, driving AI adoption.

- Fostering Innovation: Ethical considerations spur value-aligned innovation, enhancing competitiveness.

- Promoting Equity: Ethical AI reduces biases, leading to fairer outcomes.

- Enhancing Transparency: Clear processes improve accountability and trust.

- Protecting Rights: Ethical AI safeguards human dignity and autonomy.

- Encouraging Collaboration: Shared ethical values unite stakeholders for responsible AI.

Ultimately, ethical AI is both a moral and strategic necessity, ensuring a future where AI enhances human well-being.

Guide to Ethical AI Development

Step 1: Define Ethical Principles

Start by setting clear ethical principles reflecting organizational values and societal norms, developed with diverse stakeholder input.

Key principles:

- Fairness: Ensure equitable treatment without bias.

- Transparency: Make AI processes clear to stakeholders.

- Accountability: Assign responsibility for AI actions.

- Privacy: Safeguard personal data.

- Safety: Design systems to prevent harm.

Step 2: Assess Ethical Risks

Conduct comprehensive risk assessments to identify ethical concerns, involving experts in ethics, law, and AI.

Risk areas:

- Bias: Detect biases in data and algorithms.

- Discrimination: Evaluate risks of unfair treatment.

- Privacy Violations: Assess data misuse risks.

- Security Threats: Identify vulnerabilities to attacks.

- Unintended Consequences: Consider potential harms.
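A risk assessment is easier to act on when its findings live in a structured register. This sketch scores each risk as likelihood times impact on 1-to-5 scales, a common risk-matrix convention, and sorts the register so the largest risks are addressed first; the `Risk` class, the scales, the level cutoffs, and the example entries are hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Risk:
    area: str         # e.g. "Bias", "Privacy Violations"
    description: str
    likelihood: int   # 1 (rare) to 5 (almost certain), hypothetical scale
    impact: int       # 1 (negligible) to 5 (severe), hypothetical scale

    def __post_init__(self) -> None:
        if not (1 <= self.likelihood <= 5 and 1 <= self.impact <= 5):
            raise ValueError("likelihood and impact must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def level(self) -> str:
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"

def prioritize(register: List[Risk]) -> List[Risk]:
    """Highest-scoring risks first, so mitigation effort goes where it matters most."""
    return sorted(register, key=lambda r: r.score, reverse=True)

register = [
    Risk("Privacy Violations", "Training logs retain raw customer emails", 3, 4),
    Risk("Bias", "Approval rates differ across age groups", 4, 4),
    Risk("Security Threats", "Model endpoint lacks rate limiting", 2, 3),
    Risk("Unintended Consequences", "Users over-trust low-confidence outputs", 3, 3),
]
for risk in prioritize(register):
    print(f"{risk.level:6} {risk.score:2}  {risk.area}: {risk.description}")
```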

Step 3: Embed Ethics in Design

Incorporate ethical considerations into AI design from the start, prioritizing fairness, transparency, and accountability.

Design approaches:

- Fairness-Aware Algorithms: Promote equitable outcomes.

- Explainable AI: Use XAI for understandable decisions.

- Privacy Technologies: Protect user data.

- Human Oversight: Let humans review and override AI decisions.

- Transparency Tools: Inform users about data use.

Step 4: Monitor and Audit Systems

Implement monitoring and auditing to detect ethical issues, ensuring compliance with principles and regulations.

Practices:

- Performance Monitoring: Track performance metrics, broken down by group, to detect bias.

- Data Auditing: Check data for biases.

- Algorithm Transparency: Review model behavior and documentation against ethical principles.

- User Feedback: Collect input on ethical concerns.

- Compliance: Ensure adherence to ethical standards.
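Performance monitoring for bias can compare a model's accuracy across groups in each monitoring window and raise an alert when the gap grows too large. The `(group, prediction, actual_outcome)` record format, the 0.05 tolerance, and the sample window are assumptions; in practice, actual outcomes often arrive well after the predictions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def group_accuracy(window: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """Accuracy per group over a window of (group, prediction, actual_outcome) records."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, pred, actual in window:
        total[group] += 1
        correct[group] += int(pred == actual)
    return {g: correct[g] / total[g] for g in total}

def check_window(window: Iterable[Tuple[str, int, int]],
                 max_gap: float = 0.05) -> Tuple[bool, Dict[str, float]]:
    """Return (alert, per-group accuracy); alert when the best-to-worst gap exceeds max_gap."""
    acc = group_accuracy(window)
    gap = max(acc.values()) - min(acc.values())
    return gap > max_gap, acc

# Hypothetical window: group A is right 19 of 20 times, group B 16 of 20 times.
window = [("A", 1, 1)] * 19 + [("A", 1, 0)] + [("B", 0, 0)] * 16 + [("B", 0, 1)] * 4
alert, acc = check_window(window)
print(alert, acc)
```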

Step 5: Ensure Accountability

Establish clear accountability for AI actions, with reporting mechanisms to address ethical concerns promptly.

Measures:

- Ethics Officer: Oversee ethical practices.

- Review Committee: Assess AI project ethics.

- Reporting Channels: Enable stakeholder feedback.

- Whistleblower Protection: Safeguard those reporting issues.

- Sanctions: Penalize ethical violations.

Pros and Cons of AI Ethics

Pros

Greater trust and adoption of AI systems

Enhanced accountability and clarity in decisions

Reduced bias and fairer outcomes

Stronger privacy and data security protections

Increased focus on AI for societal benefit

Cons

Higher development costs and time

Complexity in enforcing ethical guidelines

Balancing ethics with innovation

Challenges in agreeing on ethical standards

Risks of unintended ethical outcomes

AI Ethics FAQ

What are the key ethical concerns in AI?

Key concerns include transparency, fairness, privacy, accountability, and safety, all critical for responsible AI use.

How can fairness be ensured in AI?

Use diverse data, fairness-aware algorithms, regular audits, and stakeholder engagement to promote equitable outcomes.

How can privacy be protected in AI?

Adopt data minimization, encryption, differential privacy, federated learning, and transparent data practices.

Who is accountable for AI errors?

Clear guidelines and legal frameworks are needed to assign responsibility, supported by accountability measures.

How can AI systems be made safe?

Use formal verification, adversarial training, anomaly detection, and rigorous testing to ensure safety and robustness.

How can AI promote social good?

AI can address healthcare, education, and sustainability challenges, improving lives through ethical solutions.

Related AI Ethics Questions

What are AI’s implications for human autonomy?

AI risks eroding autonomy, requiring transparency, human oversight, and user control to maintain agency.

How can ethics be integrated into AI development?

Define principles, assess risks, embed ethics in design, monitor systems, and ensure accountability.

What challenges exist in AI governance?

Rapid advancements, system complexity, and lack of consensus complicate creating effective regulations.

How can we prepare for AI’s impact on work?

Implement reskilling, create new jobs, offer safety nets, and promote lifelong learning to adapt to AI-driven changes.

Related article

Google Unveils AI Mode and Veo 3 to Revolutionize Search and Video Creation

Google recently launched AI Mode and Veo 3, two innovative technologies poised to reshape web search and digital content creation. AI Mode delivers a tailored, AI-enhanced search experience that surpa

Google Unveils AI Mode and Veo 3 to Revolutionize Search and Video Creation

Google recently launched AI Mode and Veo 3, two innovative technologies poised to reshape web search and digital content creation. AI Mode delivers a tailored, AI-enhanced search experience that surpa

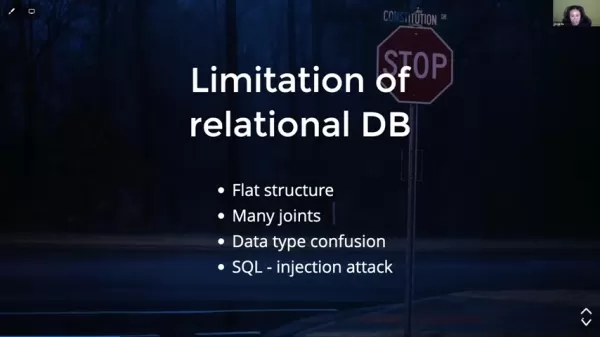

AI Industry's Urgent Need for Revision Control Graph Databases

The AI sector is advancing swiftly, requiring advanced tools to manage complex data and workflows. Traditional relational databases often fall short in addressing AI's dynamic data needs, particularly

AI Industry's Urgent Need for Revision Control Graph Databases

The AI sector is advancing swiftly, requiring advanced tools to manage complex data and workflows. Traditional relational databases often fall short in addressing AI's dynamic data needs, particularly

Unraveling Love and Duty in "Sajadah Merah"

The song "Sajadah Merah" weaves a poignant tale of love, faith, and the sacrifices made for family and obligation. It delves into the emotions of a man who loved a woman who, due to circumstances, wed

Comments (0)

0/200

Unraveling Love and Duty in "Sajadah Merah"

The song "Sajadah Merah" weaves a poignant tale of love, faith, and the sacrifices made for family and obligation. It delves into the emotions of a man who loved a woman who, due to circumstances, wed

Comments (0)

0/200

As Artificial Intelligence (AI) advances and integrates into daily life, ethical considerations in its development and use are paramount. This article explores essential aspects such as transparency, fairness, privacy, accountability, safety, and leveraging AI for societal benefit. Understanding these dimensions is key to building trust, promoting responsible innovation, and maximizing AI’s potential while minimizing risks. Let’s navigate the ethical complexities of AI to ensure it serves humanity responsibly and effectively.

Key Takeaways

Transparency and explainability build confidence in AI systems.

Fairness and bias reduction are vital to prevent discrimination and promote equity.

Prioritizing privacy and data security safeguards sensitive information.

Accountability frameworks are essential for addressing AI-related harms.

AI safety and robustness prevent unintended consequences and ensure reliability.

Using AI for social good addresses challenges in healthcare, education, and beyond.

Mitigating labor market impacts reduces job displacement and inequality.

Preserving human autonomy maintains agency and decision-making control.

Ethical AI research requires careful consideration of benefits and risks.

Effective AI governance ensures responsible development and deployment.

Exploring AI’s Ethical Dimensions

Transparency and Explainability in AI

As AI systems grow more sophisticated, understanding their operations becomes increasingly difficult. Transparency and explainability are critical for fostering trust and accountability.

Transparency in AI involves clarity about how systems function, including their data, algorithms, and decision-making processes. Explainability ensures AI decisions are comprehensible to humans, enabling stakeholders to understand the reasoning behind outcomes.

The Role of Explainable AI (XAI):

Explainable AI (XAI) develops models that humans can interpret, allowing stakeholders to evaluate decision rationales, enhancing trust and oversight. Key XAI techniques include:

- Rule-based systems: Employ clear rules for transparent decision-making.

- Decision trees: Offer visual decision pathways for easier comprehension.

- Feature importance: Highlight key data factors driving model predictions.

Advantages of Transparency and Explainability:

- Fostering Trust: Clear systems encourage user confidence and adoption.

- Enhancing Accountability: Understanding operations aids in identifying and correcting errors or biases.

- Supporting Oversight: Transparency enables stakeholders to ensure ethical alignment.

- Improving Decision-Making: Clear rationales provide insights for informed choices.

Fairness and Bias Reduction in AI

AI systems may perpetuate biases present in training data, leading to unfair outcomes. Fairness ensures AI avoids discrimination based on attributes like race, gender, or religion, while bias reduction addresses inequities in system outputs.

Sources of AI Bias:

- Historical Bias: Reflects societal biases in training data.

- Sampling Bias: Stems from unrepresentative data samples.

- Measurement Bias: Results from flawed measurement methods.

- Algorithm Bias: Arises from algorithm design or implementation.

Bias Reduction Strategies:

- Data Preprocessing: Clean data to minimize biases.

- Algorithm Design: Create fairness-focused algorithms.

- Model Evaluation: Use metrics to assess bias and fairness.

- Post-processing: Adjust outputs to reduce discriminatory effects.

Promoting Equity:

- Diverse Data Sets: Train AI with representative data.

- Bias Audits: Regularly check systems for biased behavior.

- Stakeholder Engagement: Involve diverse groups in development.

- Transparency and Accountability: Ensure clear, accountable decision-making.

Privacy and Data Security in AI

AI relies on vast datasets, often including sensitive information. Protecting privacy and securing data are critical for user trust.

Privacy in AI protects personal data from unauthorized access or use, while data security prevents theft, loss, or corruption.

Core Privacy and Security Principles:

- Data Minimization: Collect only essential data.

- Purpose Limitation: Use data solely for intended purposes.

- Data Security: Apply robust safeguards against unauthorized access.

- Transparency: Inform users about data usage.

- User Control: Allow individuals to manage their data.

Techniques for Privacy and Security:

- Anonymization: Remove identifiable data to protect privacy.

- Differential Privacy: Add noise to data for analysis without compromising identities.

- Federated Learning: Train models on decentralized data without sharing it.

- Encryption: Secure data against unauthorized access.

Building Trust through Privacy:

- Privacy-Enhancing Technologies: Protect user data with advanced tools.

- Data Security Measures: Prevent breaches with strong protections.

- Regulatory Compliance: Adhere to laws like GDPR and CCPA.

- Clear Communication: Inform users about privacy practices.

Accountability and Responsibility in AI

Assigning responsibility for AI errors or harms is complex, especially with multiple stakeholders involved. Clear frameworks are needed to ensure accountability.

Accountability ensures those developing and deploying AI are answerable for its impacts, while responsibility focuses on ethical stewardship.

Accountability Challenges:

- System Complexity: Tracing errors in intricate AI systems is difficult.

- Multiple Stakeholders: Various parties are involved in AI’s lifecycle.

- Lack of Legal Frameworks: Clear liability guidelines are often absent.

Promoting Accountability:

- Clear Guidelines: Set standards for AI development and use.

- Legal Frameworks: Define liability for AI-driven outcomes.

- Auditing and Monitoring: Detect and address errors or biases.

- Explainable AI: Use XAI to clarify decision rationales.

Encouraging Ethical Practices:

- Ethics Training: Educate developers on ethical principles.

- Ethical Review Boards: Assess AI projects for ethical impacts.

- Industry Standards: Establish norms for responsible AI.

- Stakeholder Engagement: Involve diverse groups in evaluations.

AI Safety and Robustness

AI systems must be safe and resilient to avoid harm and ensure reliability against attacks or errors.

Key Safety and Robustness Considerations:

- Unintended Consequences: Prevent harm from AI actions.

- Adversarial Attacks: Protect against malicious manipulations.

- Unexpected Inputs: Handle out-of-distribution inputs effectively.

- System Reliability: Ensure consistent performance.

Enhancing Safety and Robustness:

- Formal Verification: Use mathematics to confirm system safety.

- Adversarial Training: Train systems to resist attacks.

- Anomaly Detection: Identify and flag unusual inputs.

- Redundancy: Build fault-tolerant systems for reliability.

Advancing Responsible Innovation:

- Safety Research: Invest in AI safety studies.

- Safety Standards: Develop guidelines for robust AI.

- Testing and Validation: Rigorously test systems for safety.

- Continuous Monitoring: Track systems to address issues.

AI for Social Good

AI can tackle societal challenges like healthcare, education, and sustainability, improving lives through targeted solutions.

Healthcare: Enhance diagnosis, treatment, and prevention.

Education: Personalize learning and improve access.

Environmental Sustainability: Optimize resources and reduce pollution.

Poverty Reduction: Expand financial access and job opportunities.

Disaster Relief: Improve preparedness and recovery.

Ethical Considerations for Social Good:

- Equity and Inclusion: Ensure benefits reach marginalized groups.

- Transparency and Accountability: Maintain clear decision-making processes.

- Privacy and Security: Protect sensitive data.

- Human Autonomy: Preserve individual control.

Promoting Social Good Initiatives:

- Fund Research: Support AI for social impact.

- Support Startups: Back ventures addressing societal issues.

- Public-Private Partnerships: Collaborate on impactful solutions.

- Stakeholder Engagement: Involve diverse groups in development.

Labor and Employment Impacts

AI’s automation potential raises concerns about job displacement and inequality, requiring proactive workforce strategies.

AI’s Labor Impacts:

- Job Displacement: Automation may reduce jobs in some sectors.

- Job Creation: New roles emerge in AI-related fields.

- Skill Gap: Demand grows for specialized skills.

- Income Inequality: Gaps widen between skill levels.

Mitigating Labor Impacts:

- Reskilling Programs: Train workers for new roles.

- Job Creation: Invest in AI-driven industries.

- Social Safety Nets: Support displaced workers.

- Lifelong Learning: Promote continuous skill development.

Ensuring a Fair Transition:

- Retraining Programs: Help workers shift to new industries.

- Unemployment Benefits: Support those affected by automation.

- Universal Basic Income: Explore safety nets for all.

- Worker Empowerment: Involve workers in AI decisions.

Preserving Human Autonomy

Advanced AI risks diminishing human control, making it essential to maintain agency and decision-making power.

Human autonomy ensures individuals retain control over their choices and lives, preventing undue AI influence.

Risks to Autonomy:

- AI Manipulation: Systems may sway behavior subtly.

- Over-Reliance: Dependence on AI could weaken critical thinking.

- Loss of Control: Unintended consequences from unchecked AI.

Preserving Autonomy:

- Transparency: Ensure clear, understandable AI processes.

- Human-in-the-Loop: Require human oversight in AI systems.

- User Control: Allow opting out of AI-driven decisions.

- Critical Thinking Education: Empower informed decision-making.

Ethical AI Development:

- Human Values: Align AI with societal well-being.

- Bias Mitigation: Prevent discriminatory outcomes.

- Data Privacy: Protect personal information.

- Accountability: Hold developers responsible for AI actions.

Ethical AI Research

Responsible AI research balances innovation with careful consideration of societal and environmental impacts.

Ethical Research Principles:

- Transparency: Share findings to foster collaboration.

- Accountability: Own the consequences of research.

- Beneficence: Maximize benefits, minimize harms.

- Justice: Ensure research benefits all, including marginalized groups.

Promoting Ethical Research:

- Ethics Review Boards: Evaluate research implications.

- Ethical Guidelines: Set standards for researchers.

- Stakeholder Engagement: Involve diverse perspectives.

- Public Education: Inform society about AI’s potential.

Driving Innovation:

- Open Research: Encourage data sharing for progress.

- AI Education: Train a skilled workforce.

- Collaboration: Foster partnerships across sectors.

- Ethical Innovation: Develop solutions for social challenges.

AI Governance and Regulation

Robust governance and regulations are crucial to address ethical concerns and ensure responsible AI development.

Governance Challenges:

- Rapid Advancements: Keeping up with AI’s pace.

- System Complexity: Understanding intricate AI ethics.

- Lack of Consensus: Agreeing on ethical standards.

Effective Governance Strategies:

- Ethical Standards: Define responsible AI principles.

- Regulatory Frameworks: Establish legal guidelines.

- Ethics Boards: Provide oversight and guidance.

- Transparency: Require clear decision-making processes.

Building Public Trust:

- Accountability: Ensure developers are answerable.

- Human Rights: Protect individual freedoms.

- Social Good: Use AI to address societal needs.

- Public Engagement: Involve communities in governance discussions.

Sample AI Governance Framework:

| Component | Description |

|---|---|

| Ethical Principles | Core values like fairness, transparency, and accountability guiding AI development. |

| Policy Guidelines | Rules detailing how AI systems should be designed and deployed. |

| Regulatory Framework | Legal requirements ensuring ethical compliance and human rights protection. |

| Oversight Mechanisms | Processes for monitoring and auditing AI for ethical use. |

| Accountability Measures | Systems to hold developers accountable, including penalties for ethical breaches. |

Advantages of Ethical AI

Shaping a Responsible AI Future

Addressing AI ethics is critical for creating a future where AI benefits humanity responsibly. Prioritizing ethics unlocks AI’s potential while minimizing risks. Key benefits include:

- Building Trust: Ethical practices boost stakeholder confidence, driving AI adoption.

- Fostering Innovation: Ethical considerations spur value-aligned innovation, enhancing competitiveness.

- Promoting Equity: Ethical AI reduces biases, ensuring fair outcomes for all.

- Enhancing Transparency: Clear processes improve accountability and trust.

- Protecting Rights: Ethical AI safeguards human dignity and autonomy.

- Encouraging Collaboration: Shared ethical values unite stakeholders for responsible AI.

Ultimately, ethical AI is both a moral and strategic necessity, ensuring a future where AI enhances human well-being.

Guide to Ethical AI Development

Step 1: Define Ethical Principles

Start by setting clear ethical principles that reflect organizational values and societal norms, and develop them with input from diverse stakeholders.

Key principles:

- Fairness: Ensure equitable treatment without bias.

- Transparency: Make AI processes clear to stakeholders.

- Accountability: Assign responsibility for AI actions.

- Privacy: Safeguard personal data.

- Safety: Design systems to prevent harm.

Step 2: Assess Ethical Risks

Conduct comprehensive risk assessments to identify ethical concerns, involving experts in ethics, law, and AI.

Risk areas:

- Bias: Detect biases in data and algorithms.

- Discrimination: Evaluate risks of unfair treatment.

- Privacy Violations: Assess data misuse risks.

- Security Threats: Identify vulnerabilities to attacks.

- Unintended Consequences: Consider potential harms.
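The following Python sketch gives a rough illustration of the bias check in this step. It summarises each group's share of a labelled dataset and that group's positive-label rate. It then divides the lowest rate by the highest. The record layout, the field names `group` and `approved`, and the 0.8 cut-off are all assumptions made for this example. The cut-off is the "four-fifths" rule of thumb. A low ratio is a prompt for closer review, not proof of discrimination.

```python
from collections import Counter, defaultdict

def audit_groups(records, group_key, label_key):
    """Summarise each group's share of the data and its positive-label rate.

    `records` is a list of dicts; `group_key` and `label_key` are
    hypothetical field names chosen for this sketch.
    """
    counts = Counter(r[group_key] for r in records)
    positives = defaultdict(int)
    for r in records:
        positives[r[group_key]] += int(r[label_key] == 1)
    total = len(records)
    return {
        g: {"share": n / total, "positive_rate": positives[g] / n}
        for g, n in counts.items()
    }

def disparate_impact_ratio(summary):
    """Lowest group positive rate divided by the highest (1.0 means parity)."""
    rates = [s["positive_rate"] for s in summary.values()]
    highest = max(rates)
    return min(rates) / highest if highest > 0 else 1.0

# Illustrative data only
records = [
    {"group": "A", "approved": 1}, {"group": "A", "approved": 1},
    {"group": "A", "approved": 0}, {"group": "A", "approved": 1},
    {"group": "B", "approved": 0}, {"group": "B", "approved": 1},
]
summary = audit_groups(records, "group", "approved")
ratio = disparate_impact_ratio(summary)
print(summary)
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # assumed "four-fifths" heuristic, not a legal test
    print("flag for review")
```

The same summary also shows under-representation: here group B supplies only a third of the records. That can matter as much as a gap in label rates.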

Step 3: Embed Ethics in Design

Incorporate ethical considerations into AI design from the start, prioritizing fairness, transparency, and accountability.

Design approaches:

- Fairness-Aware Algorithms: Promote equitable outcomes.

- Explainable AI: Use XAI for understandable decisions.

- Privacy Technologies: Protect user data.

- Human Oversight: Require human review of high-stakes or uncertain AI decisions.

- Transparency Tools: Inform users about data use.
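A confidence gate is one common way to put human oversight into practice. The system accepts predictions the model is confident about and holds the rest for a person to decide. This sketch assumes the model exposes a confidence score between 0 and 1. The `route` function, the `Decision` record, and the 0.9 threshold are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Decision:
    case_id: str
    label: Optional[str]  # None until a human reviewer decides
    confidence: float
    needs_review: bool

def route(case_id: str, label: str, confidence: float,
          threshold: float = 0.9) -> Decision:
    """Accept confident predictions; send the rest to a human reviewer.

    The 0.9 threshold is an illustrative assumption, not a recommended value.
    """
    if confidence >= threshold:
        return Decision(case_id, label, confidence, needs_review=False)
    return Decision(case_id, None, confidence, needs_review=True)

predictions: List[Tuple[str, str, float]] = [
    ("case-1", "approve", 0.97),
    ("case-2", "deny", 0.62),
]
decisions = [route(*p) for p in predictions]
review_queue = [d.case_id for d in decisions if d.needs_review]
print(review_queue)  # ['case-2']
```

In practice, the threshold would be set from validation data and the cost of errors. High-stakes outcomes might go to a reviewer regardless of confidence.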

Step 4: Monitor and Audit Systems

Implement monitoring and auditing to detect ethical issues, ensuring compliance with principles and regulations.

Practices:

- Performance Monitoring: Track performance metrics across groups to detect bias.

- Data Auditing: Check data for biases.

- Algorithm Transparency: Review how algorithms reach decisions to surface ethical issues.

- User Feedback: Collect input on ethical concerns.

- Compliance: Ensure adherence to ethical standards.
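Performance monitoring for bias can be sketched as comparing an error metric across groups on each batch of labelled production data. This example uses the true positive rate, which underlies the "equal opportunity" fairness criterion. The batch contents, group labels, and 0.1 tolerance are assumptions. Other metrics may suit a given application better.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

def true_positive_rates(y_true: List[int], y_pred: List[int],
                        groups: List[str]) -> Dict[str, float]:
    """Per-group share of actual positives that the model predicted positive."""
    positives: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            positives[g] += 1
            hits[g] += int(p == 1)
    # Groups with no actual positives in this batch are left out
    return {g: hits[g] / positives[g] for g in positives}

def check_batch(y_true, y_pred, groups,
                max_gap: float = 0.1) -> Tuple[Dict[str, float], float, bool]:
    """Return per-group TPRs, the largest gap between groups, and an alert flag.

    max_gap=0.1 is a hypothetical tolerance chosen for illustration.
    """
    rates = true_positive_rates(y_true, y_pred, groups)
    if len(rates) < 2:
        return rates, 0.0, False  # nothing to compare in this batch
    gap = max(rates.values()) - min(rates.values())
    return rates, gap, gap > max_gap

# Illustrative batch only
y_true = [1, 1, 1, 1, 0, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B"]
rates, gap, alert = check_batch(y_true, y_pred, groups)
print(rates, f"gap={gap:.2f}", "ALERT" if alert else "ok")
```

Running a check like this on every batch turns "track metrics for bias" into a concrete alert. Someone must still be responsible for investigating it.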

Step 5: Ensure Accountability

Establish clear accountability for AI actions, with reporting mechanisms to address ethical concerns promptly.

Measures:

- Ethics Officer: Oversee ethical practices.

- Review Committee: Assess AI project ethics.

- Reporting Channels: Enable stakeholder feedback.

- Whistleblower Protection: Safeguard those reporting issues.

- Sanctions: Penalize ethical violations.
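Accountability becomes traceable when an organization records every automated decision together with the model version and the team answerable for it. This sketch stores such records in an append-only, hash-chained log, which makes later edits to a past entry detectable. The `DecisionLog` class and its field names are hypothetical. A hash chain alone only detects tampering if the latest hash is also stored somewhere the editor cannot change.

```python
import hashlib
import json
from typing import Dict, List

class DecisionLog:
    """Append-only log linking each AI decision to a model version and owner.

    Each entry stores the hash of the previous entry, so editing a past
    record breaks the chain and is caught by verify().
    """

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[Dict] = []

    @staticmethod
    def _hash(record: Dict) -> str:
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, decision_id: str, model_version: str,
               owner: str, outcome: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {"decision_id": decision_id, "model_version": model_version,
                  "owner": owner, "outcome": outcome, "prev": prev}
        self.entries.append({**record, "hash": self._hash(record)})

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            record = {k: v for k, v in entry.items() if k != "hash"}
            if record["prev"] != prev or self._hash(record) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

# Hypothetical identifiers for illustration
log = DecisionLog()
log.append("loan-001", "credit-model-v2", "risk-team", "approved")
log.append("loan-002", "credit-model-v2", "risk-team", "referred")
print(log.verify())  # True
log.entries[0]["outcome"] = "denied"  # simulate tampering with a past record
print(log.verify())  # False
```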

Pros and Cons of AI Ethics

Pros

Greater trust and adoption of AI systems

Enhanced accountability and clarity in decisions

Reduced bias and fairer outcomes

Stronger privacy and data security protections

Increased focus on AI for societal benefit

Cons

Higher development costs and time

Complexity in enforcing ethical guidelines

Balancing ethics with innovation

Challenges in agreeing on ethical standards

Risks of unintended ethical outcomes

AI Ethics FAQ

What are the key ethical concerns in AI?

Key concerns include transparency, fairness, privacy, accountability, and safety, all critical for responsible AI use.

How can fairness be ensured in AI?

Use diverse data, fairness-aware algorithms, regular audits, and stakeholder engagement to promote equitable outcomes.

How can privacy be protected in AI?

Adopt data minimization, encryption, differential privacy, federated learning, and transparent data practices.
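Differential privacy is often illustrated with the Laplace mechanism. The mechanism adds noise drawn from a Laplace distribution whose scale is the query's sensitivity divided by the privacy budget ε. This sketch applies it to a simple count, which has sensitivity 1. The data and ε = 1.0 are made up. Python's `random` module is used only for readability. A production system should rely on a vetted differential privacy library, since naive floating-point noise sampling has known weaknesses.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) as the difference of two exponential samples."""
    # expovariate takes a rate, so a rate of 1/scale gives mean `scale`
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count satisfying epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the Laplace scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

ages = [23, 67, 45, 71, 34, 80, 59]  # illustrative data
rng = random.Random(42)
noisy = private_count(ages, lambda a: a >= 65, epsilon=1.0, rng=rng)
print(f"noisy count of people aged 65+: {noisy:.2f}")
```

A smaller ε adds more noise and gives stronger privacy. Each repeated query consumes part of the overall privacy budget, so every release has to be accounted for.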

Who is accountable for AI errors?

Clear guidelines and legal frameworks are needed to assign responsibility, supported by accountability measures.

How can AI systems be made safe?

Use formal verification, adversarial training, anomaly detection, and rigorous testing to ensure safety and robustness.
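Anomaly detection can be as simple as declining to trust the model on inputs that look nothing like its training data. This sketch measures a single numeric feature against the training values and flags it when its z-score exceeds a threshold. The `ZScoreMonitor` name, the sample values, and the threshold of 3 are assumptions. Real systems typically monitor many features jointly.

```python
import statistics
from typing import List

class ZScoreMonitor:
    """Flag inputs whose value lies far from the training distribution."""

    def __init__(self, training_values: List[float], threshold: float = 3.0) -> None:
        self.mean = statistics.mean(training_values)
        self.stdev = statistics.stdev(training_values)  # needs at least two values
        self.threshold = threshold

    def is_anomalous(self, x: float) -> bool:
        if self.stdev == 0:
            # Constant training data: any different value is out of range
            return x != self.mean
        return abs(x - self.mean) / self.stdev > self.threshold

training = [10, 12, 11, 13, 9, 10, 12, 11]  # illustrative data
monitor = ZScoreMonitor(training)
incoming = [11.5, 25.0]
flagged = [x for x in incoming if monitor.is_anomalous(x)]
print(flagged)  # [25.0]
```

Flagged inputs could be rejected or passed to a human reviewer instead of being answered automatically.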

How can AI promote social good?

AI can address healthcare, education, and sustainability challenges, improving lives through ethical solutions.

Related AI Ethics Questions

What are AI’s implications for human autonomy?

AI can erode human autonomy. Transparency, human oversight, and user control help people keep their agency.

How can ethics be integrated into AI development?

Define principles, assess risks, embed ethics in design, monitor systems, and ensure accountability.

What challenges exist in AI governance?

Rapid advancements, system complexity, and lack of consensus complicate creating effective regulations.

How can we prepare for AI’s impact on work?

Implement reskilling, create new jobs, offer safety nets, and promote lifelong learning to adapt to AI-driven changes.
