AI Crawlers Drive Wikimedia Commons Bandwidth Demand Up 50%

The Wikimedia Foundation, the parent organization behind Wikipedia and numerous other crowdsourced knowledge platforms, said on Wednesday that bandwidth used for multimedia downloads from Wikimedia Commons has grown by 50% since January 2024. The surge, as detailed in a blog post published on Tuesday, is driven not by growing human readership but by automated scrapers collecting data to train AI models.

“Our infrastructure is designed to handle sudden surges in traffic from humans during major events, but the volume of traffic from scraper bots is unmatched and poses increasing risks and costs,” the post explains.

Wikimedia Commons serves as a freely accessible hub for images, videos, and audio files, all available under open licenses or in the public domain.

Wikimedia also reported that 65% of its most resource-intensive traffic, measured by the type of content consumed, comes from bots, even though bots account for only 35% of overall pageviews. According to Wikimedia, the discrepancy arises because frequently accessed content is cached closer to users, while less popular content, which bots often request, has to be served from the more costly "core data center."

“While human readers tend to focus on specific, often similar, topics, crawler bots tend to ‘bulk read’ a larger number of pages and visit less popular ones as well,” Wikimedia noted. “This results in these requests being forwarded to the core datacenter, which significantly increases our resource consumption costs.”
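The caching effect Wikimedia describes can be illustrated with a toy simulation. This is a minimal sketch, not a model of Wikimedia's actual infrastructure: the LRU `EdgeCache` class, the catalogue size, the cache capacity, and the reader and crawler behaviours are all assumptions chosen for illustration. Only the 35% bot share of requests is taken from the article.

```python
import random
from collections import OrderedDict


class EdgeCache:
    """Tiny LRU cache standing in for the caching layer close to users."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._pages = OrderedDict()

    def request(self, page):
        """Return True on a cache hit, False if the core data center must serve it."""
        if page in self._pages:
            self._pages.move_to_end(page)  # mark as most recently used
            return True
        self._pages[page] = None
        if len(self._pages) > self.capacity:
            self._pages.popitem(last=False)  # evict the least recently used page
        return False


def simulate(num_pages=10_000, capacity=500, humans=6_500, bots=3_500, seed=0):
    """Replay a shuffled mix of human and bot requests through one edge cache."""
    rng = random.Random(seed)  # fixed seed keeps the run repeatable
    cache = EdgeCache(capacity)
    clients = ["human"] * humans + ["bot"] * bots
    rng.shuffle(clients)

    misses = {"human": 0, "bot": 0}
    next_bot_page = 0
    for client in clients:
        if client == "human":
            # Assumed reader behaviour: 90% of reads go to the 100 most popular pages.
            if rng.random() < 0.9:
                page = rng.randrange(100)
            else:
                page = rng.randrange(num_pages)
        else:
            # Assumed crawler behaviour: walk the catalogue page by page ("bulk read").
            page = next_bot_page
            next_bot_page = (next_bot_page + 1) % num_pages
        if not cache.request(page):
            misses[client] += 1

    total_misses = misses["human"] + misses["bot"]
    return {
        "bot_request_share": bots / (humans + bots),
        "bot_miss_share": misses["bot"] / total_misses,
    }


result = simulate()
print(f"bots: {result['bot_request_share']:.0%} of requests, "
      f"{result['bot_miss_share']:.0%} of core data center fetches")
```

Under these assumptions, bots cause a far larger share of cache misses than their share of requests, because almost every page a crawler asks for is one nobody has requested recently. The exact figures depend entirely on the made-up parameters and are not meant to reproduce Wikimedia's 65% number.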

As a result, the Wikimedia Foundation's site reliability team is spending substantial time and resources blocking these crawlers to prevent disruption for everyday users, and that is before accounting for the escalating cloud costs the Foundation faces.

This is part of a broader trend that threatens the open internet. Just last month, software engineer and open-source advocate Drew DeVault complained that AI crawlers ignore the “robots.txt” files that sites use to tell automated traffic which pages to stay away from. Similarly, Gergely Orosz, author of The Pragmatic Engineer newsletter, recently voiced his frustration that AI scrapers from companies like Meta have driven up bandwidth demands for his projects.
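To make the “robots.txt” mechanism concrete, here is a minimal sketch using Python's standard `urllib.robotparser` module. The file contents, the `ExampleAIBot` and `ExampleBrowser` user agents, and the `example.org` URL are hypothetical and do not come from any real site. The sketch shows how a well-behaved crawler would check the rules before fetching a page.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one crawler entirely, restrict everyone
# else only from /private/.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
media_url = "https://example.org/wiki/File:Example.jpg"  # hypothetical URL

# A compliant crawler asks before every fetch and skips disallowed URLs.
print("ExampleAIBot may fetch:", parser.can_fetch("ExampleAIBot/1.0", media_url))
print("ExampleBrowser may fetch:", parser.can_fetch("ExampleBrowser/1.0", media_url))
```

The check runs entirely on the crawler's side. Nothing in the protocol stops a client from skipping it, which is why ignored robots.txt files leave operators with server-side blocking as the remaining option.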

While open-source infrastructure is particularly vulnerable, developers are responding with ingenuity and determination. TechCrunch reported last week that some tech companies are also stepping up. Cloudflare, for instance, introduced AI Labyrinth, which uses AI-generated content to slow crawlers down.

Yet, it remains a constant game of cat and mouse, one that might push many publishers to retreat behind logins and paywalls, ultimately harming the open nature of the web we all rely on.

Related article

AI Reimagines Michael Jackson in the Metaverse with Stunning Digital Transformations

Artificial intelligence is fundamentally reshaping our understanding of creativity, entertainment, and cultural legacy. This exploration into AI-generated interpretations of Michael Jackson reveals how cutting-edge technology can breathe new life int

AI Reimagines Michael Jackson in the Metaverse with Stunning Digital Transformations

Artificial intelligence is fundamentally reshaping our understanding of creativity, entertainment, and cultural legacy. This exploration into AI-generated interpretations of Michael Jackson reveals how cutting-edge technology can breathe new life int

Does Training Mitigate AI-Induced Cognitive Offloading Effects?

A recent investigative piece on Unite.ai titled 'ChatGPT Might Be Draining Your Brain: Cognitive Debt in the AI Era' shed light on concerning research from MIT. Journalist Alex McFarland detailed compelling evidence of how excessive AI dependency can

Does Training Mitigate AI-Induced Cognitive Offloading Effects?

A recent investigative piece on Unite.ai titled 'ChatGPT Might Be Draining Your Brain: Cognitive Debt in the AI Era' shed light on concerning research from MIT. Journalist Alex McFarland detailed compelling evidence of how excessive AI dependency can

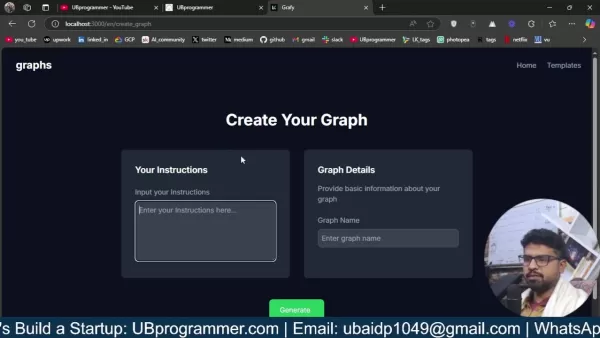

Easily Generate AI-Powered Graphs and Visualizations for Better Data Insights

Modern data analysis demands intuitive visualization of complex information. AI-powered graph generation solutions have emerged as indispensable assets, revolutionizing how professionals transform raw data into compelling visual stories. These intell

Comments (14)

KevinBrown

August 23, 2025 at 11:01:15 AM EDT

Incredible, a 50% increase in bandwidth for Wikimedia Commons! It shows how much AI is vacuuming up everything in its path, doesn't it? 😅 I just hope it won't overload the servers or slow down access for regular users.

CharlesWhite

August 13, 2025 at 9:00:59 AM EDT

Whoa, a 50% spike in Wikimedia Commons bandwidth? AI crawlers are eating up data like it’s an all-you-can-eat buffet! 😄 Makes me wonder how much of this is legit research vs. bots just hoarding images for some shady AI training. Anyone else curious about what’s driving this?

SamuelClark

July 31, 2025 at 7:35:39 AM EDT

Wow, a 50% spike in bandwidth for Wikimedia Commons? That’s wild! AI crawlers are probably gobbling up all those images for training. Kinda cool but also makes me wonder if this is pushing the limits of what open platforms can handle. 😅

KennethJohnson

July 30, 2025 at 9:42:05 PM EDT

Wow, a 50% spike in bandwidth for Wikimedia Commons? That’s wild! AI crawlers are probably gobbling up all those images for training. Makes me wonder how much data these AI models are chugging through daily. 😳 Cool to see open knowledge fueling innovation, though!

WillieAnderson

April 18, 2025 at 2:23:40 AM EDT

The increase in bandwidth demand on Wikimedia Commons caused by AI crawlers is crazy! It's great that AI is being used so widely, but it's also a little worrying. I hope they find a way to manage this without significantly affecting the user experience. 🤔

RaymondGreen

April 18, 2025 at 2:01:01 AM EDT

Wikimedia Commons bandwidth usage went up 50%? 😲 Unbelievable! AI crawlers really want that data. It's good that Wikimedia shares information, but I'd hate for this to slow things down. I hope they can handle it without breaking the user experience! 🤞
