3 ways Meta's Llama 3.1 is an advance for Gen AI

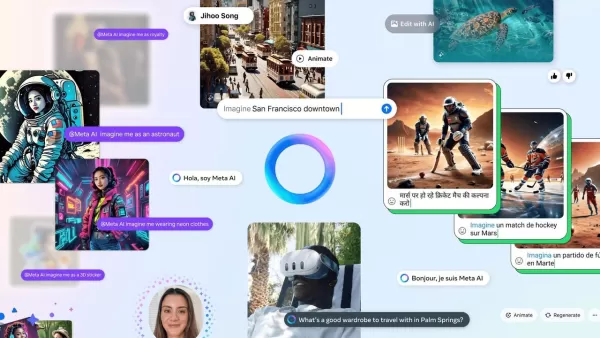

On Tuesday, Meta pulled back the curtain on the latest addition to its Llama family of large language models (LLMs), introducing Llama 3.1. The company proudly touts Llama 3.1 as the first open-source "frontier model," a term typically reserved for the most advanced AI models out there.

Llama 3.1 comes in three sizes (8B, 70B, and 405B), but it's the behemoth "405B" that really turns heads. With a staggering 405 billion neural "weights," or parameters, it outsizes other notable open models such as Nvidia's Nemotron 4, Google's Gemma 2, and Mistral's Mixtral. What's even more intriguing are the three key decisions the Meta team made in crafting this giant.

These decisions are nothing short of a neural network engineering masterclass, forming the backbone of how Llama 3.1 405B was built and trained. They also build on the efficiency gains Meta demonstrated with Llama 2, which showed promising ways to reduce the overall compute budget for deep learning.

First off, Llama 3.1 405B ditches the "mixture of experts" (MoE) approach, which Google uses for its closed-source Gemini 1.5 and Mistral uses for Mixtral. An MoE model splits part of its network into multiple "expert" sub-networks and routes each token through only a few of them, leaving the rest switched off to make predictions cheaper. Instead, Meta's researchers stuck with a standard dense, "decoder-only transformer model architecture," a variant of the Transformer architecture Google introduced in 2017. They say this choice makes training more stable.
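To make the contrast concrete, here is a minimal sketch of top-k expert routing for a single token, written in plain Python. It is an illustration of the general MoE idea, not Gemini's, Mixtral's, or Meta's code: the `gate` weights and the toy experts are hypothetical stand-ins for learned layers, and `top_k=2` simply mirrors the two-experts-per-token routing Mistral describes for Mixtral. A dense decoder-only model like Llama 3.1 405B has no router at all; every token passes through the same full feed-forward block.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x: Vector, experts: List[Expert], gate: List[Vector],
              top_k: int = 2) -> Tuple[Vector, List[int]]:
    """Route one token vector x through only the top_k experts.

    gate holds one (hypothetical, normally learned) weight row per expert;
    each expert's score is the dot product of its row with x.
    Experts outside the top_k are never evaluated for this token.
    """
    scores = [sum(g * xi for g, xi in zip(row, x)) for row in gate]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # only the selected experts do any work
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


# Toy usage: four "experts" that just scale their input by 1, 2, 3, or 4.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate = [[0.0, 0.0], [0.1, 0.0], [0.5, 0.0], [1.0, 0.0]]
out, chosen = moe_layer([1.0, 2.0], experts, gate)
print(chosen)  # [3, 2]: experts 0 and 1 stay switched off for this token
```

The saving comes from skipping the unselected experts; the cost is a routing step that must stay well balanced during training, one source of the instability Meta cites as its reason for staying dense.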

Secondly, to boost the performance of this straightforward transformer-based model, Meta's team came up with a clever multi-stage training approach. The balance between the amount of training data and the amount of compute has a large effect on prediction quality. But traditional "scaling laws," which predict a model's performance from its size and the amount of training data, typically forecast next-token prediction loss, which doesn't necessarily reflect how well a model will handle "downstream" tasks like reasoning tests.

So, Meta developed its own scaling law. The team ramped up both training data and compute, testing different combinations over multiple iterations to see how well the resulting models performed on those crucial downstream tasks. This meticulous process helped them pinpoint the sweet spot, leading to the choice of 405 billion parameters for their flagship model. The final training run used up to 16,000 Nvidia H100 GPUs on Meta's Grand Teton AI server platform, with a parallelism scheme that splits both the training data and the model's weights across the chips.
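A scaling law of this kind is usually a power law fitted to many small training runs and then extrapolated to a much larger compute budget. The sketch below fits N*(C) = A · C^α (optimal training tokens as a function of compute) by linear regression in log-log space; Meta's paper describes a similar power-law fit, but the budgets and token counts here are synthetic, and Meta's extra step of mapping the prediction onto downstream benchmark accuracy is not shown. The last helper uses the common rule of thumb that training costs about 6 FLOPs per parameter per training token; plugging in 405 billion parameters and the roughly 15.6 trillion training tokens Meta reports gives about 3.8 × 10^25 FLOPs, in line with the total compute Meta states.

```python
import math
from typing import List, Tuple


def fit_power_law(compute: List[float], optimal_tokens: List[float]) -> Tuple[float, float]:
    """Fit N*(C) = A * C**alpha by least squares on log N* versus log C.

    Taking logs turns the power law into a straight line,
    log N* = log A + alpha * log C, so ordinary linear regression recovers both constants.
    """
    xs = [math.log(c) for c in compute]
    ys = [math.log(n) for n in optimal_tokens]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    alpha = sxy / sxx
    a = math.exp(mean_y - alpha * mean_x)
    return a, alpha


def predict_optimal_tokens(a: float, alpha: float, compute: float) -> float:
    """Extrapolate the fitted law to a larger compute budget."""
    return a * compute ** alpha


def approx_training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training cost: about 6 FLOPs per parameter per token."""
    return 6.0 * params * tokens


# Hypothetical small-scale runs; the token counts are synthetic, not Meta's data.
budgets = [1e18, 1e19, 1e20, 1e21, 1e22]
tokens = [0.3 * c ** 0.5 for c in budgets]
a, alpha = fit_power_law(budgets, tokens)
print(a, alpha, predict_optimal_tokens(a, alpha, 1e24))
print(approx_training_flops(405e9, 15.6e12))  # roughly 3.8e25 FLOPs
```

Fitting in log space is the standard shortcut because a power law becomes linear there; the risk, as Meta's approach acknowledges, is that a good fit to loss may still mispredict benchmark accuracy.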

The third innovation lies in the post-training phase. After pretraining, Llama 3.1 goes through several rounds of refinement guided by human feedback, similar to what OpenAI and others do to polish their models' outputs. Each round begins with "supervised fine-tuning," in which the model is trained on examples of good responses, with human preference judgments helping decide which responses count as good.
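At its core, supervised fine-tuning is ordinary next-token training on curated prompt-and-response examples. The sketch below computes that loss for one example, assuming the model has already produced a log-probability for each target token (the model itself is out of scope). Masking out the prompt tokens, so the model learns to produce the answer rather than to echo the question, is a common choice; treat it here as an illustrative assumption rather than a description of Meta's exact recipe.

```python
import math
from typing import List


def sft_loss(token_logprobs: List[float], is_response: List[bool]) -> float:
    """Mean negative log-likelihood over response tokens only.

    token_logprobs[i] is the log-probability the model assigned to the i-th
    target token. Prompt tokens (is_response False) are masked out, so only
    the demonstrated answer contributes to the training signal.
    """
    picked = [lp for lp, keep in zip(token_logprobs, is_response) if keep]
    if not picked:
        raise ValueError("no response tokens to train on")
    return -sum(picked) / len(picked)


# Two prompt tokens followed by two response tokens the model found unlikely.
logprobs = [math.log(0.5), math.log(0.5), math.log(0.25), math.log(0.25)]
mask = [False, False, True, True]
print(sft_loss(logprobs, mask))  # equals log(4), about 1.386
```

Lowering this number means the model assigns higher probability to the curated answers; preference-based steps such as DPO then refine which of several plausible answers it favors.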

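Meta then adds a twist with "direct preference optimization" (DPO), a simpler and more efficient alternative to reinforcement learning from human feedback (RLHF), introduced by Stanford University AI researchers in 2023. DPO trains the model directly on pairs of preferred and rejected responses, without a separate reinforcement learning loop. The team also trains Llama 3.1 to use "tools," such as external search engines, by showing it examples of prompts solved with API calls, which boosts its "zero-shot" tool use, meaning its ability to call tools it has not seen during training.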
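The DPO objective itself is compact. For one prompt with a preferred ("chosen") and a dispreferred ("rejected") response, the loss is -log σ(β · [(log π(chosen) - log π_ref(chosen)) - (log π(rejected) - log π_ref(rejected))]), where π is the model being trained and π_ref is a frozen reference copy. The sketch below implements that formula for a single pair, assuming the sequence log-probabilities have already been computed; the default `beta=0.1` is an illustrative value, not a setting taken from Meta's paper.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    Each argument is a sequence log-probability log pi(y | x) under either the
    model being trained (policy) or the frozen reference model. The loss falls
    as the policy raises the chosen response's likelihood, relative to the
    rejected one, beyond what the reference model already does.
    """
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    return -log_sigmoid(beta * margin)


print(dpo_loss(-10.0, -10.0, -10.0, -10.0))  # no preference learned yet: log(2)
print(dpo_loss(-5.0, -15.0, -10.0, -10.0))   # policy now favors the chosen answer: lower loss
```

Because the reference model stands in for an explicit reward model, training reduces to a classification-style loss on preference pairs, which is why DPO is usually described as cheaper and more stable than a full RLHF loop.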

To combat "hallucinations," the team curates specific training data and creates original question-answer pairs, fine-tuning the model to answer only questions it knows the answer to and to decline those it is unsure about.
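One way to turn "answer only what it knows" into training data is to quiz the model, check its sampled answers against a known reference, and then write either an answer or a refusal as the fine-tuning target. The sketch below is a hypothetical simplification of that idea, not Meta's pipeline: the `is_correct` checker, the 0.8 threshold, and the refusal wording are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical refusal wording used as the training target when the model does not know.
REFUSAL = "I'm not sure I know the answer to that."


@dataclass
class Example:
    prompt: str
    target: str


def build_factuality_examples(
    questions: List[str],
    references: List[str],
    sampled_answers: List[List[str]],
    is_correct: Callable[[str, str], bool],
    threshold: float = 0.8,  # hypothetical cut-off for "the model knows this"
) -> List[Example]:
    """Turn probe results into fine-tuning examples.

    For each question, the model's sampled answers are checked against a
    reference. If enough are correct, a correct sample becomes the target;
    otherwise the target is a refusal, teaching the model to decline.
    """
    examples = []
    for question, reference, answers in zip(questions, references, sampled_answers):
        if not answers:
            continue  # nothing was sampled, so there is no evidence either way
        hits = [a for a in answers if is_correct(a, reference)]
        accuracy = len(hits) / len(answers)
        target = hits[0] if accuracy >= threshold and hits else REFUSAL
        examples.append(Example(question, target))
    return examples


def exact_match(answer: str, reference: str) -> bool:
    """Toy checker; a real pipeline would need a far more robust comparison."""
    return answer.strip().lower() == reference.strip().lower()


data = build_factuality_examples(
    ["Capital of France?", "Obscure fact?"],
    ["Paris", "42"],
    [["Paris", " paris", "Paris"], ["17", "42", "9"]],
    exact_match,
)
print(data)  # the first question keeps an answer, the second becomes a refusal
```

Keeping the model's own correct phrasing as the target, rather than the reference text, is a design choice that stays closer to what the model already says when it does know the answer.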

Throughout development, the Meta researchers emphasized simplicity, stating that high-quality data, scale, and straightforward approaches consistently delivered the best results. They explored more complex architectures and training recipes but found the gains did not justify the added complexity.

The scale of Llama 3.1 405B is a landmark for open-source models, which are typically dwarfed by their commercial, closed-source counterparts. Meta CEO Mark Zuckerberg highlighted the economic advantages, noting that developers can run inference on Llama 3.1 405B on their own infrastructure at roughly half the cost of using closed models like GPT-4o.

Zuckerberg also championed open-source AI as a natural progression of software, likening it to how proprietary versions of Unix gave way to open-source Linux, which grew into a more advanced, more secure, and broader ecosystem thanks to open development.

However, as Steven Vaughan-Nichols from ZDNET points out, some details are missing from Meta's code posting on Hugging Face, and the license is more restrictive than typical open-source licenses. So Llama 3.1 is only partly open source. Still, the sheer volume of detail about its training process is a refreshing change, especially as giants like OpenAI and Google grow increasingly tight-lipped about their closed-source models.



