Techspert Explains: CPU vs. GPU vs. TPU Differences

Back at I/O in May, we unveiled Trillium, the sixth generation of our custom-designed chip called the Tensor Processing Unit, or TPU. Today, we're excited to share that it's now available for Google Cloud customers in preview. TPUs are the magic behind the AI that makes your Google devices and apps super helpful, and Trillium is the most powerful and eco-friendly TPU we've ever made.

So, what's a TPU, and what makes Trillium unique? To get the full picture, it's helpful to know about other types of compute processors like CPUs and GPUs, and what sets them apart. Chelsie Czop, a product manager working on AI infrastructure at Google Cloud, can explain it all. "I collaborate with various teams to ensure our platforms are as efficient as they can be for our customers building AI products," she says. And according to Chelsie, Google's TPUs are a big reason why our AI products are so effective.

Let's dive into the basics! What are CPUs, GPUs, and TPUs?

These are all chips that act as processors for computing tasks. Imagine your brain as a computer doing things like reading or solving math problems. Each of these activities is like a computing task. So, when you use your phone to snap a photo, send a text, or open an app, your phone's brain, or processor, is handling those tasks.

What do these acronyms stand for?

CPUs, GPUs, and TPUs are all processors, but each is more specialized than the last. CPU stands for Central Processing Unit. These are versatile chips that can handle a wide variety of tasks. But, as with your brain, a task can take longer when the CPU isn't specialized for it.

Next up is the GPU, or Graphics Processing Unit. GPUs are the powerhouse for accelerated computing tasks, from rendering graphics to running AI workloads. They're a type of ASIC, or application-specific integrated circuit. Integrated circuits are usually made from silicon, which is why you might hear chips referred to as "silicon" (and yep, that's where "Silicon Valley" got its name!). In a nutshell, ASICs are designed for one specific purpose.

The TPU, or Tensor Processing Unit, is Google's own ASIC. We designed TPUs from scratch to handle AI-based computing tasks, making them even more specialized than CPUs and GPUs. TPUs are at the core of some of Google's most popular AI services, including Search, YouTube, and DeepMind's large language models.

Got it, so these chips are what make our devices tick. Where would I find CPUs, GPUs, and TPUs?

CPUs and GPUs are found in everyday items: CPUs are in nearly every smartphone and laptop, while GPUs are common in high-end gaming systems and some desktop computers. TPUs, on the other hand, are only found in Google data centers — massive buildings packed with racks of TPUs, working around the clock to keep Google's and our Cloud customers' AI services running globally.

What prompted Google to start developing TPUs?

CPUs were invented in the late 1950s, and GPUs came along in the late '90s. At Google, we started thinking about TPUs about a decade ago. Our speech recognition services were improving rapidly, and we realized that if every user started talking to Google for just three minutes a day, we'd need to double the number of computers in our data centers. We knew we needed something far more efficient than the off-the-shelf hardware available at the time, and we needed a lot more processing power from each chip. So, we decided to build our own!

And that "T" stands for Tensor, right? Why?

Yep — a "tensor" is the general term for the data structures used in machine learning. There's a lot of math happening behind the scenes to make AI tasks work. With our latest TPU, Trillium, we've boosted the number of calculations it can perform: Trillium has 4.7 times the peak compute performance per chip compared to the previous generation, TPU v5e.
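To make "tensor" a little more concrete: in practice it is a multi-dimensional array of numbers, and much of the math behind AI comes down to multiplying such arrays together. Here is a minimal sketch in plain Python, using nested lists as stand-ins for tensors. The helper names `shape` and `matmul` are made up for illustration and are not part of any TPU API; real workloads would use a framework rather than hand-written loops.

```python
def shape(t):
    """Return the shape of a nested-list tensor as a tuple.

    Assumes the nesting is rectangular and non-empty (illustrative helper only).
    """
    dims = []
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0]
    return tuple(dims)


def matmul(a, b):
    """Multiply two rank-2 tensors (matrices) with plain loops."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


scalar = 3.0                        # rank 0: a single number
vector = [1.0, 2.0, 3.0]            # rank 1: a list of numbers
matrix = [[1.0, 2.0], [3.0, 4.0]]   # rank 2: a grid of numbers
# rank 4: a batch of 2 images, each 4x4 pixels with 3 color channels
image_batch = [[[[0.0] * 3 for _ in range(4)] for _ in range(4)] for _ in range(2)]

print(shape(scalar))       # ()
print(shape(vector))       # (3,)
print(shape(image_batch))  # (2, 4, 4, 3)
print(matmul(matrix, [[5.0, 6.0], [7.0, 8.0]]))  # [[19.0, 22.0], [43.0, 50.0]]
```

The nested loops in `matmul` are the kind of repetitive multiply-and-add work that a specialized chip is built to run in bulk.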

What does that mean, exactly?

It means that, at peak, a Trillium chip can get through the calculations behind complex math up to 4.7 times faster than a TPU v5e chip. Not only is Trillium faster, but it can also manage larger, more complex workloads.
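A quick back-of-the-envelope sketch shows how a peak figure like 4.7x might translate into end-to-end speedup. It uses Amdahl's law, which is a general rule of thumb and not something the interview itself cites. The `compute_fraction` values below are hypothetical, since the share of a real job's time that is limited by chip compute depends on the workload.

```python
def overall_speedup(compute_fraction, chip_speedup=4.7):
    """Estimate end-to-end speedup with Amdahl's law.

    compute_fraction: share of the original runtime limited by chip compute
                      (a hypothetical, workload-dependent input).
    chip_speedup:     peak per-chip compute ratio; 4.7 is the figure quoted
                      for Trillium versus TPU v5e.
    """
    if not 0.0 <= compute_fraction <= 1.0:
        raise ValueError("compute_fraction must be between 0 and 1")
    # The compute-bound part shrinks by chip_speedup; the rest is unchanged.
    return 1.0 / ((1.0 - compute_fraction) + compute_fraction / chip_speedup)


for fraction in (1.0, 0.8, 0.5):
    print(f"{fraction:.0%} compute-bound -> {overall_speedup(fraction):.2f}x overall")
```

Under these assumptions, only a job that is entirely compute-bound would see the full 4.7x. A job that spends part of its time elsewhere, for example moving data, would see less.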

Is there anything else that makes it an improvement over our last-gen TPU?

Another big improvement with Trillium is that it's our most sustainable TPU yet — it's 67% more energy-efficient than our previous TPU. As AI demand keeps growing, the industry needs to scale infrastructure in a sustainable way. Trillium uses less power to do the same work.
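It can help to turn "67% more energy-efficient" into "how much less energy for the same job". The sketch below assumes that energy efficiency means work done per unit of energy (the interview does not define the metric), so the function names and that interpretation are assumptions made for illustration.

```python
def relative_energy(efficiency_gain_pct):
    """Energy needed for the same work, relative to the older chip.

    Assumes efficiency = work per unit of energy, so a gain of X% means
    the same work needs 1 / (1 + X/100) of the original energy.
    """
    return 1.0 / (1.0 + efficiency_gain_pct / 100.0)


def energy_saved_pct(efficiency_gain_pct):
    """Percentage of energy saved for the same work, under the same assumption."""
    return (1.0 - relative_energy(efficiency_gain_pct)) * 100.0


print(f"Relative energy: {relative_energy(67):.3f}")   # about 0.599
print(f"Energy saved:    {energy_saved_pct(67):.1f}%")  # about 40.1%
```

So under this reading, a 67% efficiency gain corresponds to roughly 40% less energy for the same work, not 67% less.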

Now that customers are starting to use it, what kind of impact do you think Trillium will have?

We're already seeing some amazing things powered by Trillium! Customers are using it for technologies that analyze RNA for various diseases, convert text to video at lightning speeds, and more. And that's just from our initial users — now that Trillium's in preview, we're excited to see what people will do with it.

Related article

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

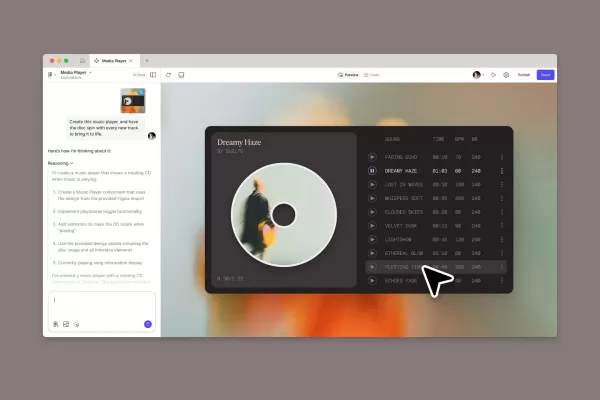

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (31)

0/200

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (31)

NicholasLee

August 30, 2025 at 8:30:34 AM EDT

With TPUs, AI computing has moved up another level! For most developers, though, GPUs are probably still the more practical choice; after all, Google Cloud's TPU pricing isn't something an ordinary company can afford 😅

JackWilson

April 19, 2025 at 6:14:21 AM EDT

Techspert Explains really helped me understand the differences between CPU, GPU, and TPU! The way it breaks down the tech is super clear and engaging. Only wish it had more examples for TPUs, but still a solid tool for tech newbies like me! 🤓

KennethJohnson

April 15, 2025 at 8:02:13 AM EDT

Techspert's breakdown of CPU vs. GPU vs. TPU was super helpful! I finally get why my Google stuff runs so smoothly. Trillium sounds like the next big thing, but I wish it was easier to get my hands on it. 🤓💻

RyanGonzalez

April 14, 2025 at 3:36:51 PM EDT

Techspert's explanation on CPU vs. GPU vs. TPU was super clear! I finally understand the differences and how TPUs power Google's AI. The only downside was it was a bit too technical for my taste. Still, a must-watch for tech enthusiasts! 💻

DonaldEvans

April 14, 2025 at 11:28:01 AM EDT

Techspert's explanation of CPU vs. GPU vs. TPU was very clear! I finally understood the differences and also learned how TPUs power Google's AI. The only downside was that it was a little too technical for my taste. Still, a must-watch for tech enthusiasts! 💻

JimmyGarcia

April 14, 2025 at 9:21:04 AM EDT

Techspert Explains helped me a lot to understand the differences between CPU, GPU, and TPU! The way it explains the technology is super clear and engaging. I just wish it had more examples about TPUs, but even so, it's a great tool for beginners like me! 😊
