Google I/O 2024: Unveiling Innovations for the Next Generation

Google is fully embracing what we're calling the Gemini era.

Before diving into the details, let me take a moment to reflect on where we are. We've been pouring resources into AI for over a decade, pushing the boundaries in research, product development, and infrastructure. Today, we're going to cover all of that and more.

We're still at the beginning of this AI platform shift, and the potential is enormous—for creators, developers, startups, and everyone else. That's what the Gemini era is all about: driving these opportunities forward. So, let's jump right in.

The Gemini era

Last year at I/O, we unveiled our vision for Gemini: a cutting-edge model designed to be natively multimodal right from the start, capable of processing text, images, video, code, and more. It's a significant leap towards transforming any input into any output—an "I/O" for the next generation.

Since then, we've rolled out the first Gemini models, which set new standards in multimodal performance. Two months after that, we introduced Gemini 1.5 Pro, a major breakthrough in handling long context. It can run 1 million tokens in production, more than any other large-scale foundation model to date.

We're committed to making sure everyone can benefit from Gemini's capabilities. We've moved quickly to share these advancements with you. Today, over 1.5 million developers are using Gemini models across our tools, from debugging code to gaining new insights and building the next wave of AI applications.

We're also integrating Gemini's powerful features into our products in meaningful ways. You'll see examples today across Search, Photos, Workspace, Android, and beyond.

Product progress

Today, every one of our 2-billion-user products uses Gemini.

We've also launched new experiences, including a mobile app where you can interact directly with Gemini, available on both Android and iOS. And with Gemini Advanced, you get access to our most capable models. Over 1 million people signed up to try it in just three months, and the momentum keeps building.

Expanding AI Overviews in Search

One of the most thrilling developments with Gemini has been in Google Search.

Over the past year, we've handled billions of queries through our Search Generative Experience. Users are exploring Search in new ways, asking longer and more complex questions, even using photos to search, and getting the best results the web has to offer.

We've been testing this experience beyond Labs, and we're thrilled to see not just an increase in Search usage, but also higher user satisfaction.

I'm excited to announce that we'll start rolling out this fully revamped experience, AI Overviews, to everyone in the U.S. this week, with more countries to follow soon.

Gemini is also pushing the boundaries of what search can do across our other products.

Introducing Ask Photos

Take Google Photos, for example, which we launched nearly nine years ago. It's become a go-to for organizing life's most precious memories, with over 6 billion photos and videos uploaded daily.

People love using Photos to search through their lives. With Gemini, we're making this even easier.

Imagine you're at a parking station and can't remember your license plate. Before, you'd have to search Photos with keywords and scroll through years of photos to find it. Now, you can just ask Photos. It recognizes the cars you frequently use, figures out which one is yours, and gives you the license plate number.

Ask Photos can also help you dive deeper into your memories. Say you're reminiscing about your daughter Lucia's early milestones. You can ask Photos, "When did Lucia learn to swim?"

You can then follow up with something more complex, like, "Show me how Lucia's swimming has progressed."

Here, Gemini goes beyond a simple search, understanding different contexts—from pool laps to ocean snorkeling to the text and dates on her swimming certificates. Photos then compiles it all into a summary, letting you relive those amazing memories. We're rolling out Ask Photos this summer, with more features on the way.

Unlocking more knowledge with multimodality and long context

Gemini's multimodality is designed to unlock knowledge across various formats. It's one model that understands and connects different types of input.

This approach expands the types of questions we can ask and the answers we receive. Long context takes this further, allowing us to process vast amounts of information: hundreds of pages of text, hours of audio, an hour of video, entire code repositories, or even 96 Cheesecake Factory menus.

For that many menus, you'd need a one-million-token context window, which is now possible with Gemini 1.5 Pro. Developers are already using it in some pretty cool ways.
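To make the scale concrete, the keynote's figures imply a budget of roughly 10,000 tokens per menu (1,000,000 / 96 ≈ 10,400). The sketch below is a back-of-the-envelope check of whether a set of documents fits in a given context window. It assumes a rule of thumb of about four characters per token for English text; real tokenizers vary by language and content, so for exact numbers you would use the model's own token counter (the Gemini API provides a token-counting method). `estimate_tokens` and `fits_in_context` are hypothetical helpers, not part of any Gemini SDK.

```python
# Hypothetical helpers for a rough context-window budget check.
# Not part of any Gemini SDK; the chars-per-token ratio is an assumption.

CHARS_PER_TOKEN = 4  # assumption: rough average for English prose

ONE_MILLION = 1_000_000  # Gemini 1.5 Pro context window (tokens)
TWO_MILLION = 2_000_000  # private-preview context window (tokens)


def estimate_tokens(text: str) -> int:
    """Approximate token count as ceil(len(text) / CHARS_PER_TOKEN)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], window_tokens: int) -> bool:
    """True if the estimated token total of all documents fits in the window."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total <= window_tokens


if __name__ == "__main__":
    # Placeholder "menu": 40,000 characters, about 10,000 tokens by the heuristic.
    menu = "x" * 40_000
    print(fits_in_context([menu] * 96, ONE_MILLION))   # 960,000 tokens -> True
    print(fits_in_context([menu] * 120, ONE_MILLION))  # 1,200,000 tokens -> False
    print(fits_in_context([menu] * 120, TWO_MILLION))  # 1,200,000 tokens -> True
```

Under this heuristic, 96 placeholder documents fit in a 1-million-token window, while 120 of them only fit once the window doubles to 2 million tokens.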

We've been rolling out Gemini 1.5 Pro with long context in preview over the last few months, making quality improvements in translation, coding, and reasoning. These updates are now reflected in the model.

I'm thrilled to announce that we're making this improved version of Gemini 1.5 Pro available to all developers globally. Additionally, Gemini 1.5 Pro with a 1-million-token context window is now available directly to consumers in Gemini Advanced, in 35 languages.

Expanding to 2 million tokens in private preview

One million tokens have opened up new possibilities, but we're not stopping there.

Today, we're expanding the context window to 2 million tokens, available for developers in private preview.

It's incredible to see how far we've come in just a few months. This is another step toward our ultimate goal of infinite context.

Bringing Gemini 1.5 Pro to Workspace

We've discussed two key technical advances: multimodality and long context. Each is powerful on its own, but together, they unlock even deeper capabilities and intelligence.

This is evident in Google Workspace.

People often search their emails in Gmail. With Gemini, we're making this much more powerful. For instance, as a parent, you want to stay updated on your child's school activities. Gemini can help you keep track.

You can ask Gemini to summarize recent emails from the school. Behind the scenes, it identifies the relevant emails and even analyzes attachments like PDFs, then gives you a summary of key points and action items. If you were traveling and missed an hour-long PTA meeting that was recorded in Google Meet, Gemini can pull out the highlights. And if there's a call for volunteers and you're free, Gemini can draft a reply for you.

There are countless ways this can simplify your life. Gemini 1.5 Pro is available today in Workspace Labs. Aparna will share more details.

Audio outputs in NotebookLM

We've seen examples with text outputs, but with a multimodal model, we can do so much more.

We're making progress here, with more to come. Audio Overviews in NotebookLM are one example: they use Gemini 1.5 Pro to turn your source materials into a personalized, interactive audio conversation.

This is the potential of multimodality. Soon, you'll be able to mix and match inputs and outputs. This is what we mean by an "I/O" for a new generation. But what if we could go even further?

Going further with AI agents

One of the exciting opportunities we see is in AI agents. These are intelligent systems that can reason, plan, and remember. They can think several steps ahead and work across software and systems to accomplish tasks on your behalf, always under your supervision.

We're still in the early stages, but let me give you a glimpse of the kinds of use cases we're working on.

Take shopping, for example. It's fun to buy shoes, but not so fun to return them if they don't fit.

Imagine if Gemini could handle all the steps for you:

- Searching your inbox for the receipt...

- Locating the order number from your email...

- Filling out a return form...

- Even scheduling a UPS pickup.

That's much easier, right?
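The return flow can be sketched as a plan of steps that runs only with the user's approval at each step, which is one simple way to keep a person in control. This is a hypothetical illustration, not how Gemini agents are implemented: the `Step` and `run_plan` names, the placeholder receipt and order IDs, and the stubbed actions are all assumptions standing in for real inbox, form, and courier integrations.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical sketch of a supervised multi-step agent plan.
# Step names and data are placeholders, not a Gemini API.

State = dict[str, str]


@dataclass
class Step:
    description: str
    action: Callable[[State], State]  # returns an updated copy of the state


def run_plan(steps: list[Step], approve: Callable[[str], bool],
             state: State) -> tuple[State, bool]:
    """Run steps in order; stop as soon as the user declines one."""
    for step in steps:
        if not approve(step.description):
            return state, False  # user declined: stop and keep progress so far
        state = step.action(state)
    return state, True


# Stubbed actions standing in for real integrations.
return_shoes = [
    Step("Search inbox for the receipt",
         lambda s: {**s, "receipt": "email-123"}),      # placeholder ID
    Step("Locate the order number in the receipt",
         lambda s: {**s, "order": "A-1001"}),           # placeholder order
    Step("Fill out the return form",
         lambda s: {**s, "return_form": f"submitted for {s['order']}"}),
    Step("Schedule a UPS pickup",
         lambda s: {**s, "pickup": "scheduled"}),
]

if __name__ == "__main__":
    final, completed = run_plan(return_shoes, approve=lambda d: True, state={})
    print(completed, final)
```

Declining any step stops the plan and returns what was done so far, so a user could, for example, approve the return form but hold off on the pickup.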

Let's consider a more complex scenario. Say you've just moved to Chicago. Gemini and Chrome can work together to help you get settled—organizing, reasoning, and synthesizing information on your behalf.

You'll want to explore the city and find local services, from dry cleaners to dog walkers. You'll also need to update your new address across numerous websites.

Gemini can manage these tasks and will ask for more information when needed, ensuring you're always in control.

This is crucial—as we develop these experiences, we're focused on privacy, security, and making them accessible to everyone.

These are simple examples, but they illustrate the types of problems we aim to solve by building intelligent systems that think ahead, reason, and plan on your behalf.

What it means for our mission

The power of Gemini—with its multimodality, long context, and agents—brings us closer to our ultimate goal: making AI helpful for everyone.

This is how we'll make the most progress toward our mission: organizing the world's information across every input, making it accessible via any output, and combining the world's information with the information in YOUR world in a truly useful way.

Breaking new ground

To fully realize AI's potential, we need to push boundaries. The Google DeepMind team has been working hard on this.

We've seen a lot of excitement around 1.5 Pro and its long context window, but developers also wanted something faster and more cost-effective. So today, we're introducing Gemini 1.5 Flash, a lighter-weight model designed for scale. It's optimized for tasks where low latency and cost matter most. 1.5 Flash will be available in AI Studio and Vertex AI on Tuesday.

Looking further ahead, we've always wanted to build a universal agent useful in everyday life. Project Astra demonstrates multimodal understanding and real-time conversational capabilities.

We've also made strides in video and image generation with Veo and Imagen 3, and introduced Gemma 2.0, our next generation of open models for responsible AI innovation. You can read more from Demis Hassabis.

Infrastructure for the AI era: Introducing Trillium

Training state-of-the-art models requires a lot of computing power. The demand for ML compute has grown by a factor of 1 million in the last six years, and it increases tenfold every year.
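The two growth figures agree with each other. Assuming steady compounding (an idealization, since real demand grows unevenly), a tenfold increase per year sustained for six years multiplies demand by a million:

```latex
\underbrace{10 \times 10 \times \cdots \times 10}_{6\ \text{years}} = 10^{6} = 1{,}000{,}000,
\qquad\text{equivalently}\qquad
\left(10^{6}\right)^{1/6} = 10\ \text{per year}.
```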

Google was built for this. For 25 years, we've invested in world-class technical infrastructure, from the cutting-edge hardware that powers Search to our custom tensor processing units that drive our AI advances.

Gemini was trained and served entirely on our fourth and fifth generation TPUs. Other leading AI companies, including Anthropic, have also trained their models on our TPUs.

Today, we're excited to announce our 6th generation of TPUs, called Trillium. Trillium is our most performant and efficient TPU to date, delivering a 4.7x improvement in compute performance per chip over the previous generation, TPU v5e.

We'll make Trillium available to our Cloud customers in late 2024.

Alongside our TPUs, we're proud to offer CPUs and GPUs to support any workload. This includes the new Axion processors we announced last month, our first custom Arm-based CPU that delivers industry-leading performance and energy efficiency.

We're also one of the first Cloud providers to offer NVIDIA's cutting-edge Blackwell GPUs, available in early 2025. Our longstanding partnership with NVIDIA lets us bring Blackwell's breakthrough capabilities to our customers.

Chips are a foundational part of our integrated end-to-end system, from performance-optimized hardware and open software to flexible consumption models. This all comes together in our AI Hypercomputer, a groundbreaking supercomputer architecture.

Businesses and developers are using it to tackle more complex challenges, with more than twice the efficiency compared to just buying the raw hardware and chips. Our AI Hypercomputer advancements are made possible in part because of our approach to liquid cooling in our data centers.

We've been doing this for nearly a decade, long before it became state-of-the-art for the industry. Today, our total deployed fleet capacity for liquid cooling systems is nearly 1 gigawatt and growing—that's close to 70 times the capacity of any other fleet.

Underlying this is the sheer scale of our network, which connects our infrastructure globally. Our network spans more than 2 million miles of terrestrial and subsea fiber: over 10 times the reach of the next leading cloud provider.

We will continue making the investments necessary to advance AI innovation and deliver state-of-the-art capabilities.

The most exciting chapter of Search yet

One of our greatest areas of investment and innovation is in our founding product, Search. 25 years ago, we created Search to help people navigate the flood of information moving online.

With each platform shift, we've delivered breakthroughs to better answer your questions. On mobile, we unlocked new types of questions and answers—using better context, location awareness, and real-time information. With advances in natural language understanding and computer vision, we enabled new ways to search, like using your voice or humming to find your new favorite song, or using an image of that flower you saw on your walk. And now you can even Circle to Search those cool new shoes you might want to buy. Go for it, you can always return them!

Of course, Search in the Gemini era will take this to a whole new level, combining our infrastructure strengths, the latest AI capabilities, our high standards for information quality, and our decades of experience connecting you to the richness of the web. The result is a product that does the work for you.

Google Search is generative AI at the scale of human curiosity. And it's our most exciting chapter of Search yet. Read more about the Gemini era of Search from Liz Reid.

More intelligent Gemini experiences

Gemini is more than just a chatbot; it's designed to be your personal, helpful assistant that can tackle complex tasks and take actions on your behalf.

Interacting with Gemini should feel conversational and intuitive. So, we're announcing a new Gemini experience called Live, which allows you to have an in-depth conversation with Gemini using your voice. We'll also be bringing 2M tokens to Gemini Advanced later this year, making it possible to upload and analyze super dense files like video and long code. Sissie Hsiao shares more details.

Gemini on Android

With billions of Android users worldwide, we're excited to integrate Gemini more deeply into the user experience. As your new AI assistant, Gemini is here to help you anytime, anywhere. We've incorporated Gemini models into Android, including our latest on-device model: Gemini Nano with Multimodality, which processes text, images, audio, and speech to unlock new experiences while keeping information private on your device. Sameer Samat shares the Android news here.

Our responsible approach to AI

We continue to approach the AI opportunity boldly, with a sense of excitement. We're also making sure we do it responsibly. We're developing a cutting-edge technique called AI-assisted red teaming, which draws on Google DeepMind's gaming breakthroughs like AlphaGo to improve our models. Plus, we've expanded SynthID, our watermarking tool that makes AI-generated content easier to identify, to two new modalities: text and video. James Manyika shares more.

Creating the future together

All of this shows the important progress we're making as we take a bold and responsible approach to making AI helpful for everyone.

We've been AI-first in our approach for a long time. Our decades of research leadership have pioneered many of the modern breakthroughs that power AI progress, for us and for the industry. On top of that, we have:

- World-leading infrastructure built for the AI era

- Cutting-edge innovation in Search, now powered by Gemini

- Products that help at extraordinary scale—including 15 products with half a billion users

- And platforms that enable everyone—partners, customers, creators, and all of you—to invent the future.

This progress is only possible because of our incredible developer community. You are making it real, through the experiences and applications you build every day. So, to everyone here in Shoreline and the millions more watching around the world, here's to the possibilities ahead and creating them together.

Related article

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

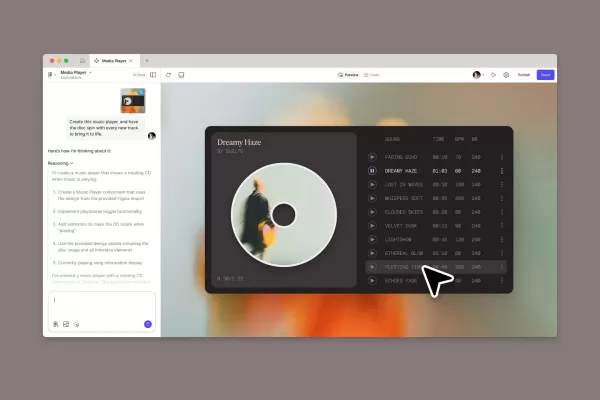

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (28)

0/200

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (28)

0/200

Google is fully embracing what we're calling the Gemini era.

Before diving into the details, let me take a moment to reflect on where we are. We've been pouring resources into AI for over a decade, pushing the boundaries in research, product development, and infrastructure. Today, we're going to cover all of that and more.

We're still at the beginning of this AI platform shift, and the potential is enormous—for creators, developers, startups, and everyone else. That's what the Gemini era is all about: driving these opportunities forward. So, let's jump right in.

The Gemini era

Last year at I/O, we unveiled our vision for Gemini: a cutting-edge model designed to be natively multimodal right from the start, capable of processing text, images, video, code, and more. It's a significant leap towards transforming any input into any output—an "I/O" for the next generation.

Since then, we've rolled out the first Gemini models, which have set new standards in multimodal performance. Just two months later, we introduced Gemini 1.5 Pro, which brought a major breakthrough in handling long contexts. It can manage 1 million tokens in production, outpacing any other large-scale foundation model to date.

We're committed to making sure everyone can benefit from Gemini's capabilities. We've moved quickly to share these advancements with you. Today, over 1.5 million developers are using Gemini models across our tools, from debugging code to gaining new insights and building the next wave of AI applications.

We're also integrating Gemini's powerful features into our products in meaningful ways. You'll see examples today across Search, Photos, Workspace, Android, and beyond.

Product progress

Currently, all of our products with 2 billion users are powered by Gemini.

We've also launched new experiences, including a mobile app where you can interact directly with Gemini, available on both Android and iOS. And with Gemini Advanced, you get access to our most advanced models. Over 1 million people have signed up to try it in just three months, and the momentum keeps building.

Expanding AI Overviews in Search

One of the most thrilling developments with Gemini has been in Google Search.

Over the past year, we've handled billions of queries through our Search Generative Experience. Users are exploring Search in new ways, asking longer and more complex questions, even using photos to search, and getting the best results the web has to offer.

We've been testing this experience beyond Labs, and we're thrilled to see not just an increase in Search usage, but also higher user satisfaction.

I'm excited to announce that we'll start rolling out this fully revamped experience, AI Overviews, to everyone in the U.S. this week, with more countries to follow soon.

Thanks to Gemini, we're pushing the boundaries of what's possible in Search, including within our own products.

Introducing Ask Photos

Take Google Photos, for example, which we launched nearly nine years ago. It's become a go-to for organizing life's most precious memories, with over 6 billion photos and videos uploaded daily.

People love using Photos to search through their lives. With Gemini, we're making this even easier.

Imagine you're at a parking station and can't remember your license plate. Before, you'd have to search Photos with keywords and scroll through years of photos to find it. Now, you can just ask Photos. It recognizes the cars you frequently use, figures out which one is yours, and gives you the license plate number.

Ask Photos can also help you dive deeper into your memories. Say you're reminiscing about your daughter Lucia's early milestones. You can ask Photos, "When did Lucia learn to swim?"

You can then follow up with something more complex, like, "Show me how Lucia's swimming has progressed."

Here, Gemini goes beyond a simple search, understanding different contexts—from pool laps to ocean snorkeling to the text and dates on her swimming certificates. Photos then compiles it all into a summary, letting you relive those amazing memories. We're rolling out Ask Photos this summer, with more features on the way.

Unlocking more knowledge with multimodality and long context

Gemini's multimodality is designed to unlock knowledge across various formats. It's one model that understands and connects different types of input.

This approach expands the types of questions we can ask and the answers we receive. Long context takes this further, allowing us to process vast amounts of information: hundreds of pages of text, hours of audio, an hour of video, entire code repositories, or even 96 Cheesecake Factory menus.

For those many menus, you'd need a one million token context window, which is now possible with Gemini 1.5 Pro. Developers are using it in some pretty cool ways.

We've been rolling out Gemini 1.5 Pro with long context in preview over the last few months, making quality improvements in translation, coding, and reasoning. These updates are now reflected in the model.

I'm thrilled to announce that we're making this improved version of Gemini 1.5 Pro available to all developers globally. Additionally, Gemini 1.5 Pro with 1 million context is now directly accessible for consumers in Gemini Advanced, supporting 35 languages.

Expanding to 2 million tokens in private preview

One million tokens have opened up new possibilities, but we're not stopping there.

Today, we're expanding the context window to 2 million tokens, available for developers in private preview.

It's incredible to see how far we've come in just a few months. This is another step toward our ultimate goal of infinite context.

Bringing Gemini 1.5 Pro to Workspace

We've discussed two key technical advances: multimodality and long context. Each is powerful on its own, but together, they unlock even deeper capabilities and intelligence.

This is evident in Google Workspace.

People often search their emails in Gmail. With Gemini, we're making this much more powerful. For instance, as a parent, you want to stay updated on your child's school activities. Gemini can help you keep track.

You can ask Gemini to summarize recent emails from the school. Behind the scenes, it identifies relevant emails and even analyzes attachments like PDFs. You get a summary of key points and action items. If you missed the PTA meeting because you were traveling, and the recording is an hour long, Gemini can highlight the important parts if it's from Google Meet. If there's a call for volunteers and you're free, Gemini can draft a reply for you.

There are countless ways this can simplify your life. Gemini 1.5 Pro is available today in Workspace Labs. Aparna will share more details.

Audio outputs in NotebookLM

We've seen examples with text outputs, but with a multimodal model, we can do so much more.

We're making progress here, with more to come. Audio Overviews in NotebookLM demonstrate this. It uses Gemini 1.5 Pro to generate a personalized and interactive audio conversation from your source materials.

This is the potential of multimodality. Soon, you'll be able to mix and match inputs and outputs. This is what we mean by an "I/O" for a new generation. But what if we could go even further?

Going further with AI agents

One of the exciting opportunities we see is with AI Agents. These are intelligent systems that can reason, plan, and remember. They can think several steps ahead and work across software and systems to accomplish tasks on your behalf, always under your supervision.

We're still in the early stages, but let me give you a glimpse of the kinds of use cases we're working on.

Take shopping, for example. It's fun to buy shoes, but not so fun to return them if they don't fit.

Imagine if Gemini could handle all the steps for you:

- Searching your inbox for the receipt...

- Locating the order number from your email...

- Filling out a return form...

- Even scheduling a UPS pickup.

That's much easier, right?

Let's consider a more complex scenario. Say you've just moved to Chicago. Gemini and Chrome can work together to help you get settled—organizing, reasoning, and synthesizing information on your behalf.

You'll want to explore the city and find local services, from dry cleaners to dog walkers. You'll also need to update your new address across numerous websites.

Gemini can manage these tasks and will ask for more information when needed, ensuring you're always in control.

This is crucial—as we develop these experiences, we're focused on privacy, security, and making them accessible to everyone.

These are simple examples, but they illustrate the types of problems we aim to solve by building intelligent systems that think ahead, reason, and plan on your behalf.

What it means for our mission

The power of Gemini—with its multimodality, long context, and agents—brings us closer to our ultimate goal: making AI helpful for everyone.

This is how we'll make the most progress toward our mission: organizing the world's information across every input, making it accessible via any output, and combining the world's information with the information in YOUR world in a truly useful way.

Breaking new ground

To fully realize AI's potential, we need to push boundaries. The Google DeepMind team has been working hard on this.

We've seen a lot of excitement around 1.5 Pro and its long context window. But developers also wanted something faster and more cost-effective. So, tomorrow, we're introducing Gemini 1.5 Flash, a lighter-weight model designed for scale. It's optimized for tasks where low latency and cost are crucial. 1.5 Flash will be available in AI Studio and Vertex AI on Tuesday.

Looking further ahead, we've always wanted to build a universal agent useful in everyday life. Project Astra demonstrates multimodal understanding and real-time conversational capabilities.

We've also made strides in video and image generation with Veo and Imagen 3, and introduced Gemma 2.0, our next generation of open models for responsible AI innovation. You can read more from Demis Hassabis.

Infrastructure for the AI era: Introducing Trillium

Training state-of-the-art models requires a lot of computing power. The demand for ML compute has grown by a factor of 1 million in the last six years, and it increases tenfold every year.

Google was built for this. For 25 years, we've invested in world-class technical infrastructure, from the cutting-edge hardware that powers Search to our custom tensor processing units that drive our AI advances.

Gemini was trained and served entirely on our fourth and fifth generation TPUs. Other leading AI companies, including Anthropic, have also trained their models on our TPUs.

Today, we're excited to announce our 6th generation of TPUs, called Trillium. Trillium is our most performant and efficient TPU to date, delivering a 4.7x improvement in compute performance per chip over the previous generation, TPU v5e.

We'll make Trillium available to our Cloud customers in late 2024.

Alongside our TPUs, we're proud to offer CPUs and GPUs to support any workload. This includes the new Axion processors we announced last month, our first custom Arm-based CPU that delivers industry-leading performance and energy efficiency.

We're also one of the first Cloud providers to offer Nvidia's cutting-edge Blackwell GPUs, available in early 2025. Our longstanding partnership with NVIDIA allows us to bring Blackwell's breakthrough capabilities to our customers.

Chips are a foundational part of our integrated end-to-end system, from performance-optimized hardware and open software to flexible consumption models. This all comes together in our AI Hypercomputer, a groundbreaking supercomputer architecture.

Businesses and developers are using it to tackle more complex challenges, with more than twice the efficiency of simply buying the raw hardware and chips. Our AI Hypercomputer advancements are made possible in part by our approach to liquid cooling in our data centers.

We've been doing this for nearly a decade, long before it became state-of-the-art for the industry. Today, our total deployed fleet capacity for liquid cooling systems is nearly 1 gigawatt and growing—that's close to 70 times the capacity of any other fleet.

Underlying this is the sheer scale of our network, which connects our infrastructure globally. Our network spans more than 2 million miles of terrestrial and subsea fiber: over 10 times the reach of the next leading cloud provider.

We will continue making the investments necessary to advance AI innovation and deliver state-of-the-art capabilities.

The most exciting chapter of Search yet

One of our greatest areas of investment and innovation is in our founding product, Search. 25 years ago, we created Search to help people navigate the flood of information moving online.

With each platform shift, we've delivered breakthroughs to better answer your questions. On mobile, we unlocked new types of questions and answers, using better context, location awareness, and real-time information. With advances in natural language understanding and computer vision, we enabled new ways to search, like using your voice or humming to find your new favorite song, or using an image of that flower you saw on your walk. And now you can even Circle to Search those cool new shoes you might want to buy. Go for it; you can always return them!

Of course, Search in the Gemini Era will take this to a whole new level, combining our infrastructure strengths, the latest AI capabilities, our high standards for information quality, and our decades of experience connecting you to the richness of the web. The result is a product that does the work for you.

Google Search is generative AI at the scale of human curiosity. And it's our most exciting chapter of Search yet. Read more about the Gemini era of Search from Liz Reid.

More intelligent Gemini experiences

Gemini is more than just a chatbot; it's designed to be your personal, helpful assistant that can tackle complex tasks and take actions on your behalf.

Interacting with Gemini should feel conversational and intuitive. So, we're announcing a new Gemini experience called Live, which allows you to have an in-depth conversation with Gemini using your voice. We'll also be bringing 2M tokens to Gemini Advanced later this year, making it possible to upload and analyze super dense files like videos and long codebases. Sissie Hsiao shares more details.

Gemini on Android

With billions of Android users worldwide, we're excited to integrate Gemini more deeply into the user experience. As your new AI assistant, Gemini is here to help you anytime, anywhere. We've incorporated Gemini models into Android, including our latest on-device model: Gemini Nano with Multimodality, which processes text, images, audio, and speech to unlock new experiences while keeping information private on your device. Sameer Samat shares the Android news here.

Our responsible approach to AI

We continue to approach the AI opportunity boldly, with a sense of excitement. We're also making sure we do it responsibly. We're developing a cutting-edge technique called AI-assisted red teaming, which draws on Google DeepMind's gaming breakthroughs like AlphaGo to improve our models. Plus, we've expanded SynthID, our watermarking tool that makes AI-generated content easier to identify, to two new modalities: text and video. James Manyika shares more.

Creating the future together

All of this shows the important progress we're making as we take a bold and responsible approach to making AI helpful for everyone.

We've been AI-first in our approach for a long time. Our decades of research leadership have pioneered many of the modern breakthroughs that power AI progress, for us and for the industry. On top of that, we have:

- World-leading infrastructure built for the AI era

- Cutting-edge innovation in Search, now powered by Gemini

- Products that help at extraordinary scale—including 15 products with half a billion users

- And platforms that enable everyone—partners, customers, creators, and all of you—to invent the future.

This progress is only possible because of our incredible developer community. You are making it real, through the experiences and applications you build every day. So, to everyone here in Shoreline and the millions more watching around the world, here's to the possibilities ahead and creating them together.

August 25, 2025 at 3:01:23 PM EDT

Google's AI push at I/O 2024 sounds like a sci-fi movie! Gemini era? I'm intrigued but also wondering if my phone will soon outsmart me. 😅 Exciting stuff!

August 21, 2025 at 3:01:19 AM EDT

Super cool to see Google's AI push at I/O 2024! The Gemini era sounds like a sci-fi movie, but I'm curious how it’ll actually change my daily apps. 😎

August 8, 2025 at 9:00:59 AM EDT

Wow, Google's AI push at I/O 2024 sounds massive! The Gemini era feels like sci-fi coming to life. Curious how it’ll stack up against competitors. 😎

April 24, 2025 at 6:33:43 PM EDT

Google I/O 2024 was incredible! The Gemini era seems to be the future we've been waiting for. They've been working on AI for so long, and it's finally paying off. I can't wait to see what comes next! 🚀

April 24, 2025 at 2:18:04 AM EDT

Google I/O 2024 was truly mind-blowing! The Gemini era is exactly the future we've been waiting for. Seeing how much they've invested in AI, I can't wait to see what comes next! 🚀

April 23, 2025 at 12:59:25 PM EDT

Google I/O 2024 was incredible! The Gemini era looks like the future we've been waiting for. It's great to see how much they've invested in AI over the years. I can't wait to see what's coming next! 🚀
