Google Gemini: Everything you need to know about the generative AI apps and models

What is Gemini?

Gemini is Google's highly anticipated next-generation family of generative AI models, developed through a collaboration between DeepMind and Google Research. It's designed to be versatile, coming in various sizes to cater to different needs:

- Gemini Ultra: A powerhouse model, designed for the most complex tasks.

- Gemini Pro: A robust model, with the latest version, Gemini 2.0 Pro, being Google's current flagship.

- Gemini Flash: A faster, streamlined version of Pro, perfect for quick tasks.

- Gemini Flash-Lite: Even smaller and faster than Flash, it's built for efficiency.

- Gemini Flash Thinking: A specialized version with enhanced reasoning capabilities.

- Gemini Nano: Consists of two compact models, Nano-1 and Nano-2, the latter capable of running offline.

One of the key features of Gemini is its multimodal nature. Unlike earlier models like Google's LaMDA, which were limited to text, Gemini models have been trained on a diverse dataset including audio, images, videos, code, and text in multiple languages. This allows them to not only process but also generate various types of content, setting them apart in the AI landscape.

However, it's worth noting the ethical and legal concerns surrounding the use of public data for training these models. Google offers an AI indemnification policy, but it's not a blanket protection, so if you're considering using Gemini for commercial purposes, tread carefully.

What’s the difference between the Gemini apps and Gemini models?

The Gemini models are the brains behind the operation, while the Gemini apps are user-friendly interfaces to those models. The apps, formerly known as Bard, are available on the web and on mobile and act as front ends similar to ChatGPT or Anthropic's Claude. They offer a chatbot-like experience, letting users reach Gemini's capabilities through a familiar interface.

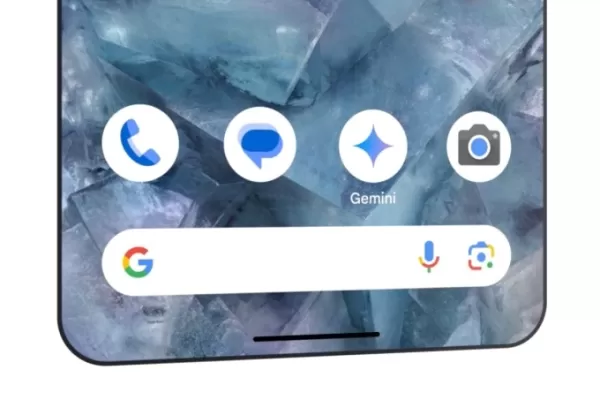

Image Credits: Google

On Android, the Gemini app has taken over from the Google Assistant, and on iOS, it's integrated into the Google and Google Search apps. Android users can even summon a Gemini overlay to interact with content on their screens, such as YouTube videos, by pressing the power button or using voice commands.

The apps support a range of inputs, including images, voice commands, and text, and can even generate images. Conversations are synced across devices if you're signed into the same Google Account.

Gemini Advanced

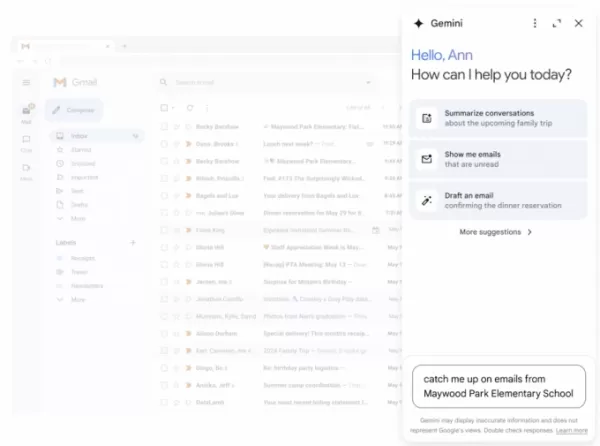

Beyond the basic apps, Gemini Advanced offers enhanced features for a monthly fee of $20 as part of the Google One AI Premium Plan. This plan integrates Gemini into Google Workspace apps like Gmail, Docs, Maps, and more, allowing for advanced tasks like email composition, document editing, and even generating slides.

Image Credits: Google

Gemini Advanced users enjoy perks like priority access to new features, the ability to run and edit Python code directly in the app, and increased limits for tools like NotebookLM. A recent addition, the memory feature, helps Gemini remember user preferences and past conversations, enhancing the user experience. One standout feature, Deep Research, uses advanced reasoning to create detailed briefs on complex topics.

Gemini in Gmail, Docs, Chrome, dev tools, and more

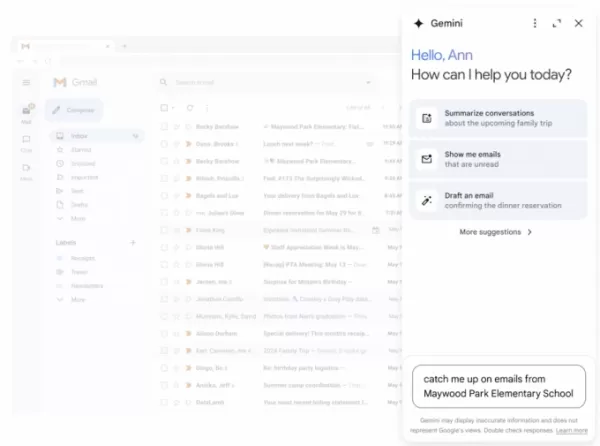

Gemini's integration extends to various Google services. In Gmail and Docs, it offers side panels for tasks like email composition and document refinement. In Slides, it generates custom images and slides, while in Sheets, it helps with data organization and formula creation.

Image Credits: Google

Gemini also enhances Google Maps by offering personalized recommendations and aggregating reviews. In Drive, it can summarize files and provide quick insights. In Chrome, it acts as an AI writing tool that adapts to the context of the webpage you're on. Gemini also reaches into Google's security and development tools, as well as apps like Photos, YouTube, and Meet, where it supports natural language searches and translations.

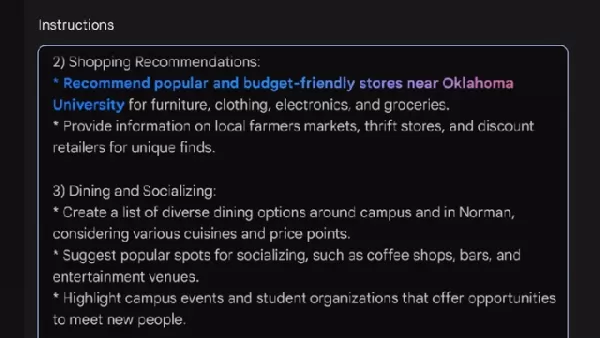

Gemini extensions and Gems

For Gemini Advanced users, the ability to create Gems is a unique feature. These are custom chatbots powered by Gemini models, which can be tailored to specific tasks like creating a daily running plan. Gems can be shared or kept private, adding a personal touch to AI interactions.

Image Credits: Google

Gemini apps also leverage "Gemini extensions" to integrate with Google services like Drive, Gmail, and YouTube, allowing for seamless interaction and information retrieval across platforms.

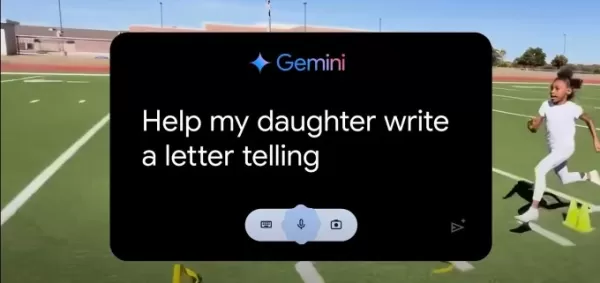

Gemini Live in-depth voice chats

Gemini Live offers a unique experience for voice interactions, available in the Gemini apps on mobile and the Pixel Buds Pro 2. It allows for real-time, adaptive conversations, where you can interrupt Gemini to ask questions or seek clarification. This feature is designed to help with tasks like job interview preparation and public speaking practice.

Image Credits: Google

Gemini for teens

Google has also introduced a teen-focused version of Gemini, designed for students. It includes additional safety measures and an AI literacy guide but otherwise offers a similar experience to the standard version, including the "double-check" feature for accuracy.

What can the Gemini models do?

Given their multimodal capabilities, Gemini models can handle a variety of tasks, from speech transcription to real-time image and video captioning. Google is constantly expanding these capabilities, promising even more in the future.

However, like all generative AI, Gemini isn't without its challenges, such as biases and the potential to generate inaccurate information. It's important to be aware of these limitations when using or considering paying for Gemini services.

Gemini Pro’s capabilities

The latest iteration, Gemini 2.0 Pro, excels in coding and handling complex prompts, outperforming its predecessor in various benchmarks. Developers can customize it through Google's Vertex AI platform, tailoring it to specific contexts and integrating it with third-party data or APIs. Google's AI Studio also offers tools for creating structured prompts and adjusting safety settings.
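To make the idea of adjusting safety settings more concrete, here is a rough sketch of what a request to the Gemini API might look like. It only builds the URL and JSON body and does not send anything. The endpoint pattern, the model ID `gemini-2.0-flash`, the field names, and the safety category and threshold strings are assumptions based on the public API as commonly documented, and `build_request` is a hypothetical helper, so check Google's current API reference before relying on any of it.

```python
import json

# Assumed endpoint pattern for the Gemini API; verify before use.
API_URL_TEMPLATE = (
    "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"
)


def build_request(prompt, model="gemini-2.0-flash", threshold="BLOCK_MEDIUM_AND_ABOVE"):
    """Build (url, body) for a text prompt with one safety setting.

    Hypothetical helper: the field names and enum strings are assumptions.
    """
    url = API_URL_TEMPLATE.format(model=model)
    body = {
        # The prompt goes in as a single text part.
        "contents": [{"parts": [{"text": prompt}]}],
        # One safety category with an adjustable blocking threshold.
        "safetySettings": [
            {"category": "HARM_CATEGORY_HARASSMENT", "threshold": threshold},
        ],
    }
    return url, body


if __name__ == "__main__":
    url, body = build_request("Summarize this release note in two sentences.")
    print(url)
    print(json.dumps(body, indent=2))
```

Sending the request would also require an API key and an HTTP client, which are left out here on purpose.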

Gemini Flash is lightweight, while Gemini Flash Thinking adds reasoning

Gemini 2.0 Flash, designed for efficiency, is suited to tasks like summarization and data extraction, while Gemini 2.0 Flash-Lite is pitched as outperforming Gemini 1.5 Flash at the same price. The "thinking" version of Gemini 2.0 Flash aims for more reliable answers by taking time to reason through problems before responding.

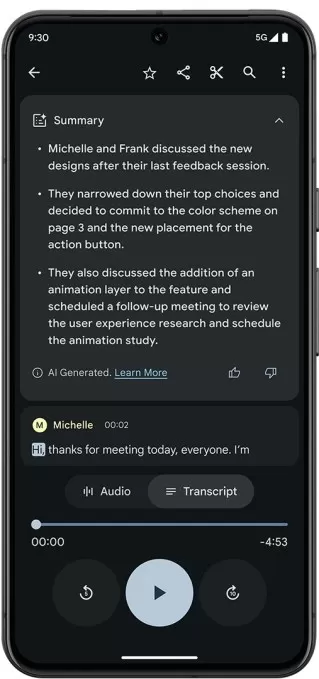

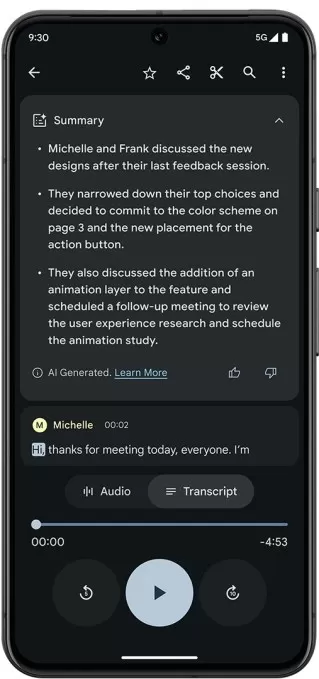

Gemini Nano can run on your phone

Gemini Nano is designed to run directly on devices, enhancing privacy and offline functionality. It powers features like Summarize in Recorder and Smart Reply in Gboard on devices like the Pixel 8 series and Samsung Galaxy S24. Future versions of Android will use Nano for scam detection during calls, and it's already enhancing weather reports and accessibility features.

Image Credits: Google

Gemini Ultra, MIA for now

While Gemini Ultra hasn't been in the spotlight recently, it remains a part of Google's plans, potentially returning with new capabilities in the future.

How much do the Gemini models cost?

The pricing for Gemini models through the Gemini API is structured as follows:

- Gemini 1.5 Pro: $1.25/$2.50 per million input tokens and $5/$10 per million output tokens; the lower rate applies to shorter prompts and the higher rate to longer ones.

- Gemini 1.5 Flash: $0.075/$0.15 per million input tokens and $0.30/$0.60 per million output tokens, with the same split by prompt length.

- Gemini 2.0 Flash: $0.10 per million input tokens and $0.40 per million output tokens, with audio input at $0.70 per million tokens.

- Gemini 2.0 Flash-Lite: $0.075 per million input tokens and $0.30 per million output tokens.

Pricing for Gemini 2.0 Pro and Nano has yet to be announced.
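As a rough illustration of how per-million-token pricing turns into a bill, the sketch below estimates text-only cost for the two flat-rate models listed above. The rates are copied from that list. The dictionary keys, the `estimate_cost` helper, and the sample workload are hypothetical. The tiered Gemini 1.5 models are left out because the prompt-length cutoff between tiers isn't given here, and the separate audio input rate isn't modeled.

```python
# Flat per-million-token rates in US dollars, taken from the list above.
# The keys are labels chosen for this sketch.
PRICES_PER_MILLION = {
    "gemini-2.0-flash": {"input": 0.10, "output": 0.40},
    "gemini-2.0-flash-lite": {"input": 0.075, "output": 0.30},
}

TOKENS_PER_MILLION = 1_000_000


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated text-only cost in US dollars for one workload."""
    rates = PRICES_PER_MILLION[model]
    input_cost = rates["input"] * input_tokens / TOKENS_PER_MILLION
    output_cost = rates["output"] * output_tokens / TOKENS_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical workload: 2 million input tokens, 500,000 output tokens.
    for model in PRICES_PER_MILLION:
        cost = estimate_cost(model, 2_000_000, 500_000)
        print(f"{model}: ${cost:.2f}")
```

For that sample workload, the estimate comes to about $0.40 on Gemini 2.0 Flash and about $0.30 on Gemini 2.0 Flash-Lite.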

Is Gemini coming to the iPhone?

Gemini may make its way deeper into the iPhone. Apple has expressed interest in integrating Gemini and other third-party models into its Apple Intelligence suite, but it has shared no specifics since the topic came up at WWDC 2024.

This post was originally published on February 16, 2024, and is regularly updated to reflect the latest developments.

Comments (16)


StevenSanchez

August 15, 2025 at 3:01:00 PM EDT

Gemini sounds like a beast! I'm curious how it stacks up against other AI models in real-world tasks. 😎 Anyone tried it yet?

StevenAllen

April 25, 2025 at 6:35:39 AM EDT

Google Gemini is pretty cool, but the variety of models makes it a bit confusing! I like how powerful Gemini Ultra is, but I wish there were a simpler version for everyday use. Still, what it can do is amazing! 🤯

StevenGreen

April 25, 2025 at 4:26:00 AM EDT

Google Gemini is pretty great, but it's a bit overwhelming with all the different models. I like Gemini Ultra for its power, but I wish there were a simpler version for everyday use. Even so, what it can do is impressive! 🤯

StevenAllen

April 25, 2025 at 2:08:55 AM EDT

Google Gemini is really cool! It's nice that it comes in various sizes, but I wish the Ultra version were a bit easier to get. Still, it's innovative! 😊

JasonMartin

April 25, 2025 at 1:13:47 AM EDT

Google Gemini is breathtaking! I tested the Ultra model and was impressed by its power. The only downside is that it's a bit expensive. But for the quality, it's worth it. Definitely a recommendation for AI enthusiasts! 🤯

JackMartin

April 24, 2025 at 10:23:13 PM EDT

Google Gemini is amazing! I tried the Ultra model and was surprised by how powerful it is. The only drawback is that it's a bit pricey. But considering the quality, it's worth it. I'd really like AI enthusiasts to try it! 🤯
